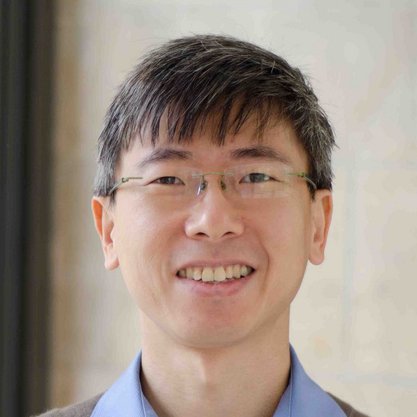

Kevin Swersky

@kswersk

Research Scientist at DeepMind.

🚀 All this and more in our paper! arXiv: arxiv.org/abs/2509.20328 Project page: video-zero-shot.github.io By @thwiedemer, Yuxuan Li, @PaulVicol, @shaneguML, @nmatares, @kswersk, @_beenkim, @priyankjaini, and Robert Geirhos.

Do generative video models learn physical principles from watching videos? Very excited to introduce the Physics-IQ benchmark, a challenging dataset of real-world videos designed to test physical understanding of video models. Webpage: physics-iq.github.io

Well, we actually did it. We digitized scent. A fresh summer plum was the first fruit and scent to be fully digitized and reprinted with no human intervention. It smells great. Holy moly, I’m still processing the magnitude of what we’ve done. And yet, it feels like as we cross…

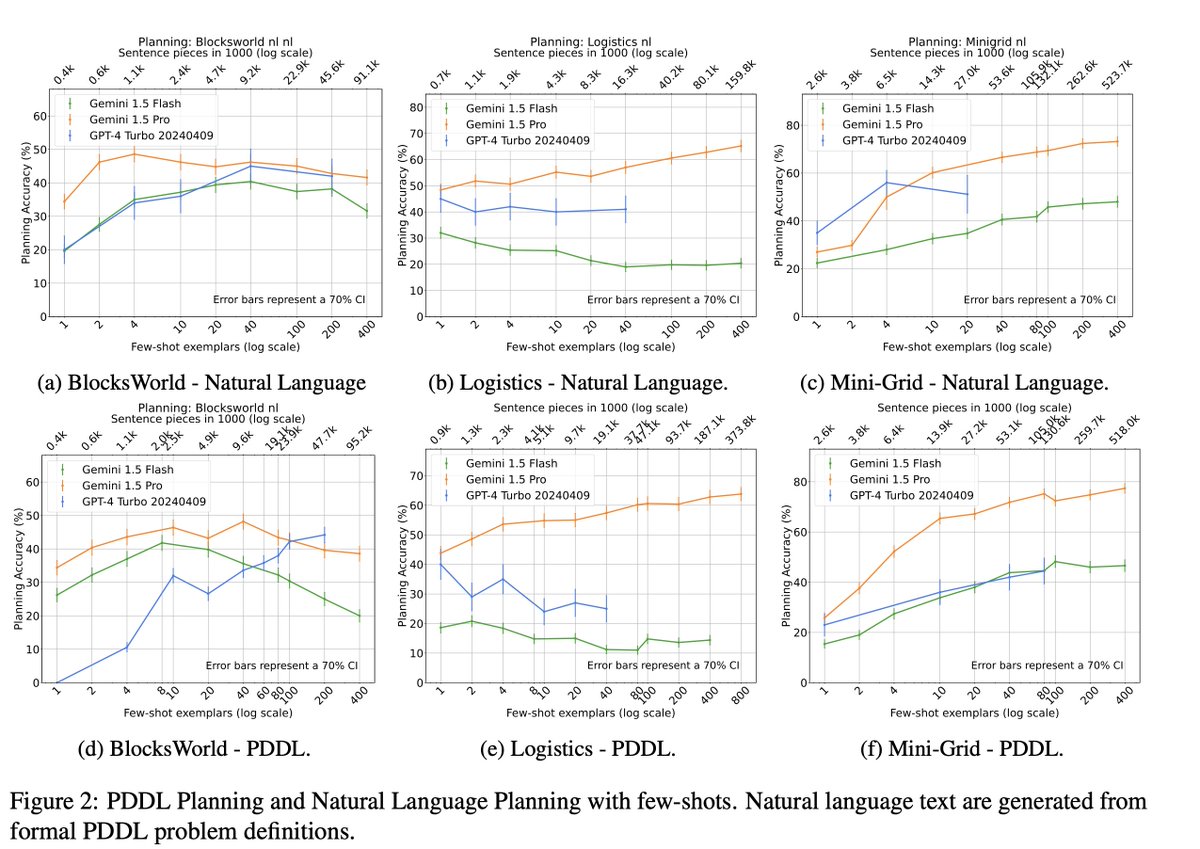

🆕🔥We show that LLMs *can* plan if instructed well! 🔥Instructing the model using ICL leads to a significant boost in planning performance, + can be further improved by using long context. arxiv.org/abs/2406.13094 w/ @Azade_na @bohnetbd A.Parisi @Kgoshvadi @kswersk @hanjundai +

Greedy Growing Enables High-Resolution Pixel-Based Diffusion Models. We address the long-standing problem of how to learn effective pixel-based image diffusion models at scale, introducing a remarkably simple greedy growing method for stable training of large-scale,

We have a student researcher opportunity in our team @GoogleDeepMind in Toronto 🍁 If you’re excited about research on diffusion models and generative video models, please fill out the form: forms.gle/auNq61N35AvTZS… and apply here: deepmind.google/about/careers/…

Check out @clark_kev’s and my paper on fine-tuning diffusion models on differentiable rewards! We present DRaFT, which computes gradients through diffusion sampling. DRaFT is efficient & works across many reward functions. With @kswersk, @fleet_dj arXiv: arxiv.org/abs/2309.17400

@PaulVicol and I are excited to introduce DRaFT, a method that fine-tunes diffusion models on rewards (such as scores from human preference models) by backpropagating through the diffusion sampling! with @kswersk, @fleet_dj arXiv: arxiv.org/abs/2309.17400 (1/5)

I’m really excited about this project! Backpropagation and variations are extremely effective at fine-tuning diffusion models on downstream rewards.

This is a really natural framework to improve Bayesian optimization when you have access to related optimization tasks arxiv.org/abs/2109.08215 Joint work with @ziwphd, @GeorgeEDahl, Chansoo Lee, @zacharynado, @jmgilmer, @latentjasper, @ZoubinGhahrama1

Hyper Bayesian optimization (HyperBO) is a highly customizable interface that pre-trains a Gaussian process model and automatically defines model parameters, making Bayesian optimization easier to use while outperforming traditional methods. Learn more → goo.gle/3GnMYHG

📢Introducing Pix2Seq-D, a generalist framework casting panoptic segmentation as a discrete data generation task conditioned on pixels. Works for both images and videos, with minimal task engineering. arxiv.org/abs/2210.06366 work w/ Lala Li, @srbhsxn @geoffreyhinton @fleet_dj

This was a really interesting project for me to learn about and apply neural fields, with some great collaborators!

📢📢📢 CUF – Continuous Upsampling Filters. Neural-fields beat classical CNNs in (regressive) super-res: cuf-paper.github.io/cuf.pdf AFAIK, a first for @neural_fields in 2D deep learning? Mostly their wins are in sparse, higher-dim signals ~NeRF

The overall recipe is general, and the same method could be applied to many other design problems in principle! More in the paper: arxiv.org/abs/2110.11346 Awesome collaboration led by @aviral_kumar2 & @ayazdanb! w/ Milad Hashemi & @kswersk

I made a bot improvise for 1000 hours and then asked it to come up with a few short-form improv games of its own. Here are the first three...

Big shout-out to @wgrathwohl @kcjacksonwang @jh_jacobsen @DavidDuvenaud @kswersk @mo_norouzi for their amazing paper "Your classifier is secretly an energy-based model and you should treat it like one"!

This was a very fun project: an elegant algorithm that works well on the difficult task of sampling from discrete EBMs. Congratulations @wgrathwohl and team!

ICML 2021 Outstanding Paper Award Honorable Mentions: 2/4. Will Grathwohl, Kevin Swersky, Milad Hashemi, David Duvenaud, and Chris Maddison 📜Oops I Took A Gradient: Scalable Sampling for Discrete Distributions (Tuesday 9am US Eastern)

Hi all! Very pleased to share that my latest paper: "Oops I Took A Gradient: Scalable Sampling for Discrete Distributions" (arxiv.org/abs/2102.04509) has been accepted to ICML for a long presentation. Energy-Based Models have seen amazing progress in the last few years...

I have a new paper on how to represent part-whole hierarchies in neural networks. arxiv.org/abs/2102.12627

Leveraging #MachineLearning for accelerator design enables faster exploration of the architecture search space leading to more efficient hardware across a range of applications. Collaboration w/: @cangermueller, Berkin Akin, Yanqi Zhou, @miladhash, @kswersk.

Check out new work on ML-driven design and exploration of custom accelerators, showing how #MachineLearning facilitates architecture exploration by rapidly identifying high-performing configurations across a range of applications. Learn more ↓ goo.gle/3rngiEh
