Cool new working paper on why and how cable news threw gasoline on the culture war fire. Link in reply.

gander is an R package that brings AI directly into RStudio or Positron. Instead of switching between your IDE and a chat window, gander lets you ask questions or request code changes right inside your script. It automatically shares relevant context such as variable names, data…

AI always calling your ideas “fantastic” can feel inauthentic, but what are sycophancy’s deeper harms? We find that in the common use case of seeking AI advice on interpersonal situations—specifically conflicts—sycophancy makes people feel more right & less willing to apologize.

Individuals are very different in their social behaviour. In this Perspective, Kuper and colleagues examine interdisciplinary evidence for why this is and what it means for our understanding of individual and collective human behaviour. nature.com/articles/s4156…

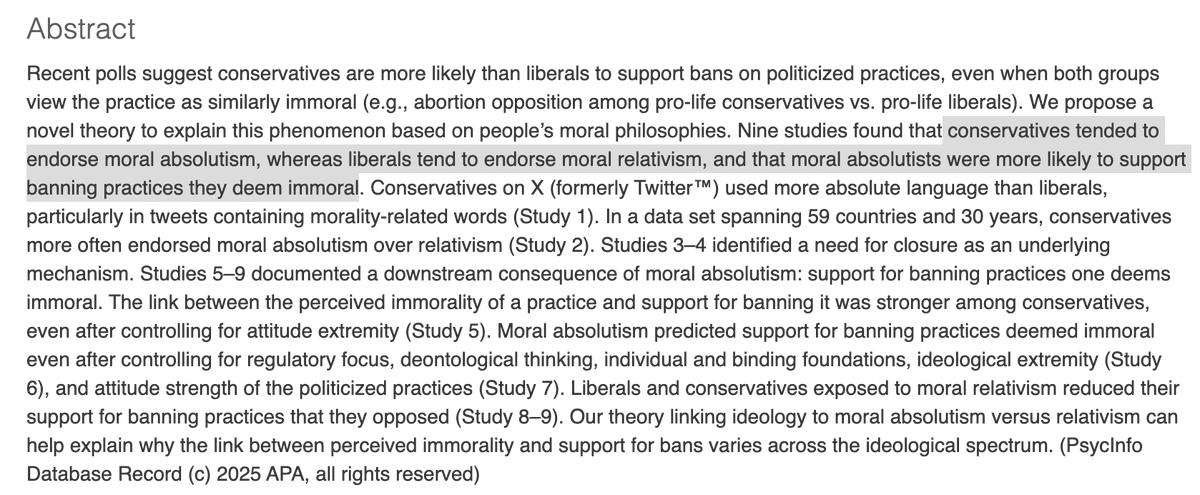

New research finds that conservatives tend to endorse moral absolutism, whereas liberals tend to endorse moral relativism. Moral absolutists are more likely to support banning practices they deem immoral. psycnet.apa.org/record/2026-54…

🚨 New working paper! 🚨 Happy to share my new data on affective polarization by party across states, CDs, counties, and towns from 2009-23. Key point: polarization is not just an individual trait... contexts and electorates can be "polarized" too! 1/ papers.ssrn.com/sol3/papers.cf…

Very interesting!

🚨 New paper alert 🚨 Using LLMs as data annotators, you can produce any scientific result you want. We call this **LLM Hacking**. Paper: arxiv.org/pdf/2509.08825

🇺🇸 Can watching dialogue across party lines reduce polarisation? ➡️ L-O Ankori-Karlinsky, @robert_a_blair, J. Gottlieb & @smooreberg show that a documentary of an intergroup workshop reduces polarisation and boosts faith in democracy cambridge.org/core/journals/… #FirstView

1st paper from my lab out @CommunicationsPsychology @CommsPsychol nature.com/articles/s4427… We show an alternative way to understand how people mentally represent other people's characteristics, namely high-dimensional networks, beyond the popular latent factor models.

🚨3rd preprint from my lab out! with my awesome grad @LuJunsong19474🌟 How do people mentally represent numerous inferences about others?🤯 Prior work proposed low-dimensional rep with latent dimensions We show high-dimensional network brings new insights🧵👇…

Currently in FirstView: In “Attention and Political Choice: A Foundation for Eye Tracking in Political Science,” Libby Jenke and Nicolette Sullivan explain what eye tracking allows researchers to measure and how these measures are relevant to political science questions.

This is a useful reading list on recent advances in econometrics.

what are large language models actually doing? i read the 2025 textbook "Foundations of Large Language Models" by tong xiao and jingbo zhu and for the first time, i truly understood how they work. here’s everything you need to know about llms in 3 minutes↓

🚨 New paper in @ScienceAdvances Can changing how we argue about politics online improve the quality of replies we get? @THeideJorgensen, @a_rasmussen, and I use an LLM to manipulate counter-arguments to see how people respond to different approaches to arguments. Thread 🧵1/n

This research advances a mechanistic reward learning account of social learning strategies. Through experiments & simulations, it shows how people learn to learn from others, dynamically shaping the processes involved in cultural evolution. @DSchultner nature.com/articles/s4156…

🚨New paper in @TrendsCognSci 🚨 Why do some ideas spread widely, while others fail to catch on? @Jayvanbavel and I review the “psychology of virality,” or the psychological and structural factors that shape information spread online and offline. Thread 🧵(1/n)

Can large language models (LLMs) fairly annotate data on contentious topics? Our new paper dives into this question—looking at whether LLM-generated labels reflect diverse viewpoints or skew toward majority perspectives. The results are surprisingly nuanced. 🧵

An AI model (Llama 3.1 70B) fine-tuned on data from 60,000 people in psychology experiments shows real promise for using LLMs to study human behavior. It predicts actual human behavior in held-out data, and it generalizes to out-of-distribution tasks and experiments.

LLMs can effectively depolarize social media content while maintaining textual coherence, find Santos et al. in a between-subjects experiment. doi.org/10.1145/371786…

This is a stellar paper; highly recommend it if you want an incisive bird's-eye view of AI + political science. annualreviews.org/content/journa…
