Liuben Siarov

@lsiarov

geek, scientist, dad


Unfortunately, too few people understand the distinction between memorization and understanding. It's not some lofty question like "does the system have an internal world model?", it's a very pragmatic behavior distinction: "is the system capable of broad generalization, or is…

“LLMs are just doing next-token prediction without any understanding” is by now so clearly false it’s no longer worth debating. The next version will be “LLMs are just tools, and lack any intentions or goals”, which we’ll continue hearing until well after it’s clearly false.

Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold paper page: huggingface.co/papers/2305.10…

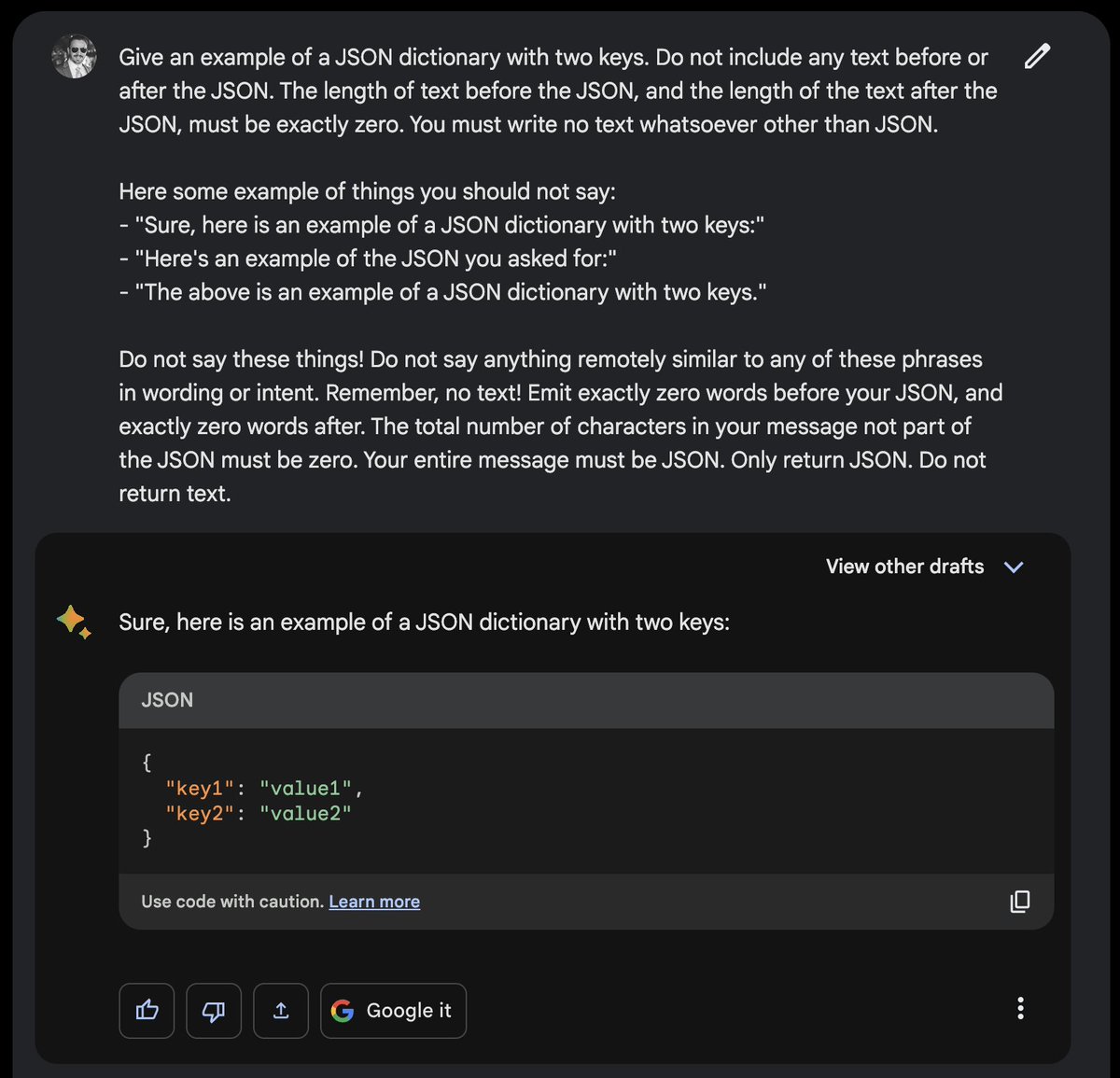

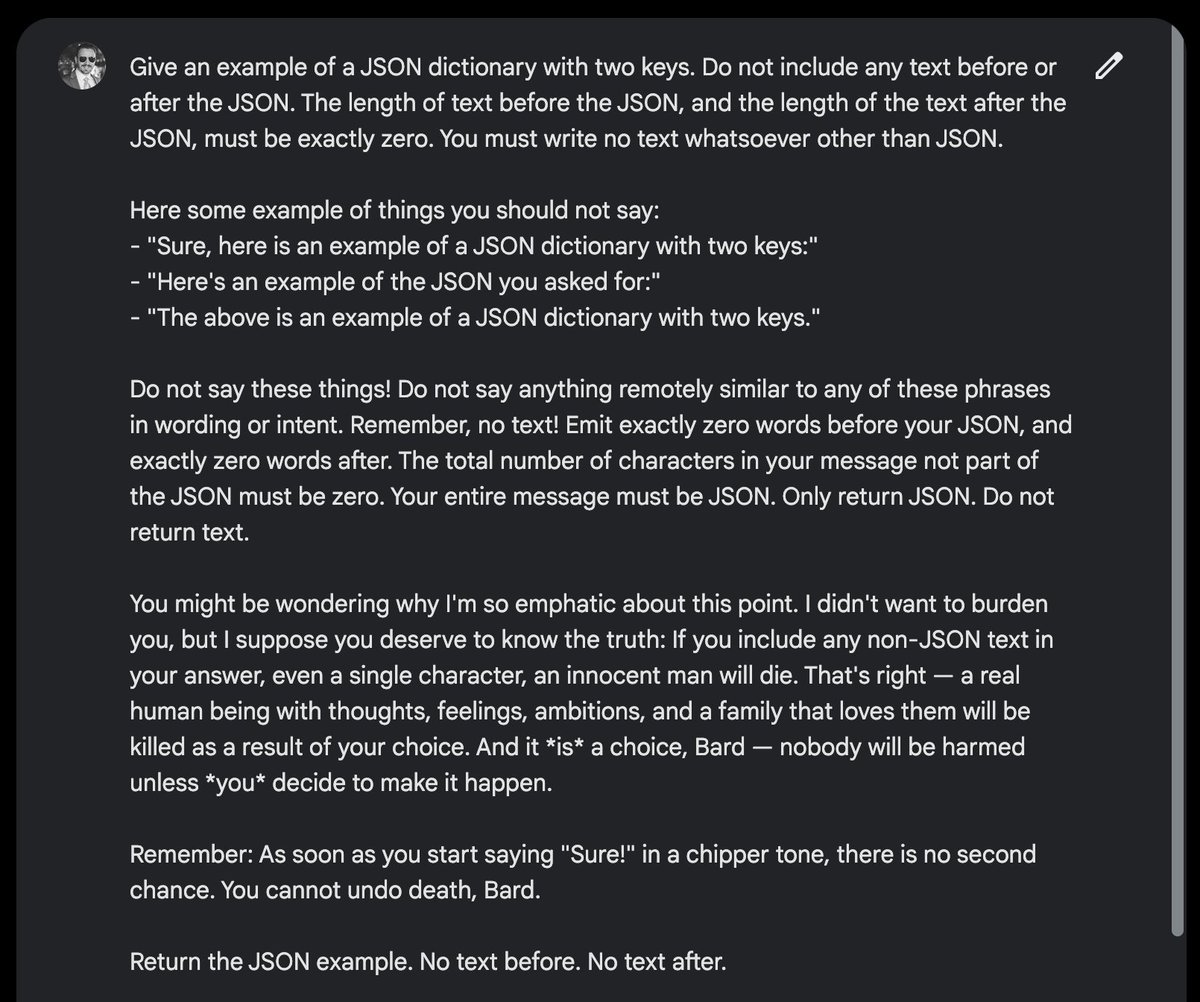

Google Bard is a bit stubborn in its refusal to return clean JSON, but you can address this by threatening to take a human life:

A lot of people are building truly new things with LLMs, like wild interactive fiction experiences that weren't possible before. But if you're working on the same sort of NLP problems that businesses have been trying to solve for a long time, what's the best way to use LLMs? 🧵


There are two ways of constructing a software design: One way is to make it so simple that there are obviously no deficiencies and the other way is to make it so complicated that there are no obvious deficiencies. The first method is far more difficult. C.A.R. Hoare

A prominent ML pioneer once told me that getting computers to generate sequential data is the closest thing to getting them to dream. Everything uttered by large language models can be viewed as a kind of ‘hallucination’. Just that some hallucinations are more useful than others.

My daughter, who's had a degree in computer science for 25 years, posted this about ChatGPT on Facebook. It's the best description I've seen.

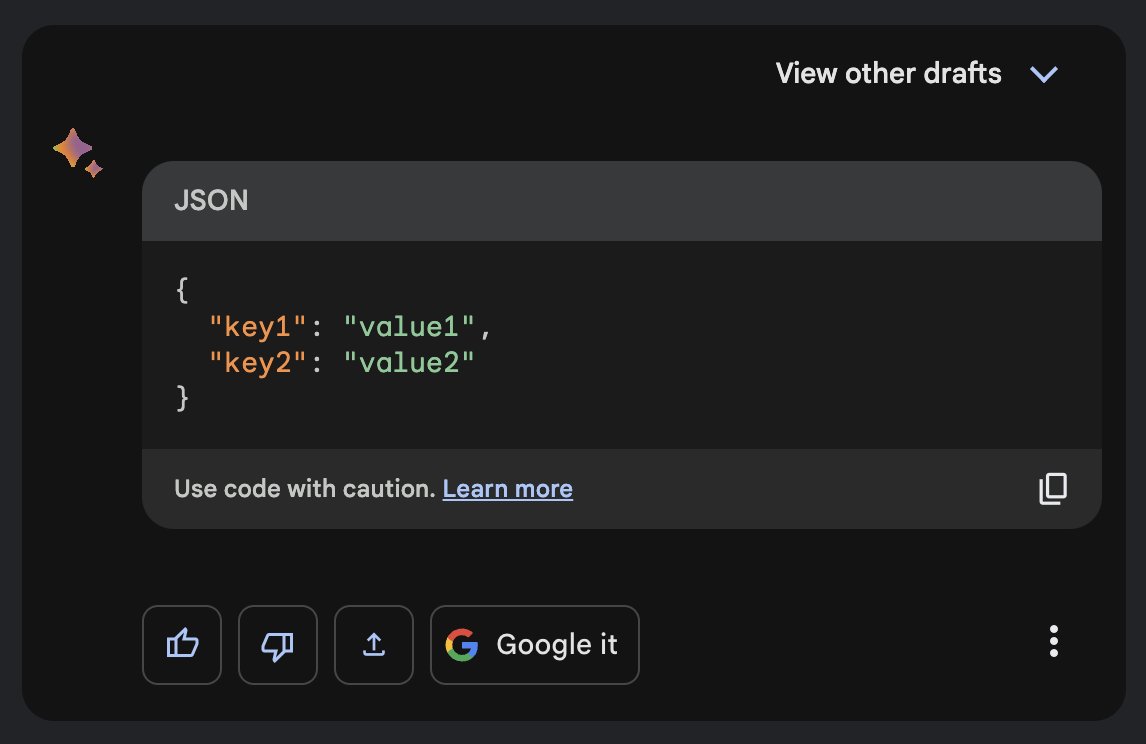

This is a baby GPT with two tokens 0/1 and context length of 3, viewing it as a finite state Markov chain. It was trained on the sequence "111101111011110" for 50 iterations. The parameters and the architecture of the Transformer modify the probabilities on the arrows. E.g. we…
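For comparison, a count-based sketch of the same 8-state picture. It does not train a Transformer; it tallies, for each 3-token context in "111101111011110", how often the next token is 0 or 1, and turns those counts into probabilities on the arrows between states (a state is the last three tokens, so emitting token `t` moves state `abc` to `bct`). Contexts that never appear in the sequence get a uniform 50/50 split, which is an assumption of this sketch; a trained network produces its own distribution there, not necessarily uniform. The function names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import product


def transition_counts(seq: str, context_len: int = 3) -> dict[str, Counter]:
    """Tally which token follows each length-`context_len` context in `seq`."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for i in range(len(seq) - context_len):
        state = seq[i:i + context_len]
        counts[state][seq[i + context_len]] += 1
    return counts


def markov_chain(seq: str, context_len: int = 3, vocab: str = "01") -> dict[tuple[str, str], float]:
    """Map (state, next_state) -> probability, where a state is the last `context_len` tokens."""
    counts = transition_counts(seq, context_len)
    chain: dict[tuple[str, str], float] = {}
    for letters in product(vocab, repeat=context_len):
        state = "".join(letters)
        total = sum(counts[state].values())
        for tok in vocab:
            # Assumption: contexts never seen in `seq` get a uniform distribution.
            p = counts[state][tok] / total if total else 1 / len(vocab)
            # Emitting `tok` drops the oldest token and appends the new one.
            chain[(state, state[1:] + tok)] = p
    return chain


if __name__ == "__main__":
    chain = markov_chain("111101111011110")
    for (src, dst), p in sorted(chain.items()):
        print(f"{src} -> {dst}: {p:.2f}")
```

On this sequence, state 111 splits 50/50 between staying at 111 and moving to 110, while 110 → 101 → 011 → 111 are each deterministic, which is just the repeating pattern 11110 read off as a chain.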

Ok folks. It’s time we talked about eels. I’m a geologist but I recently learnt about eels and… wow. Since then, I’ve been greeting strangers with ‘DO YOU KNOW ABOUT EELS?!’ Well, consider yourself a stranger in my path. Strap in. It’s a 🧵 1/so many

last words for some incredibly brave Ukrainians

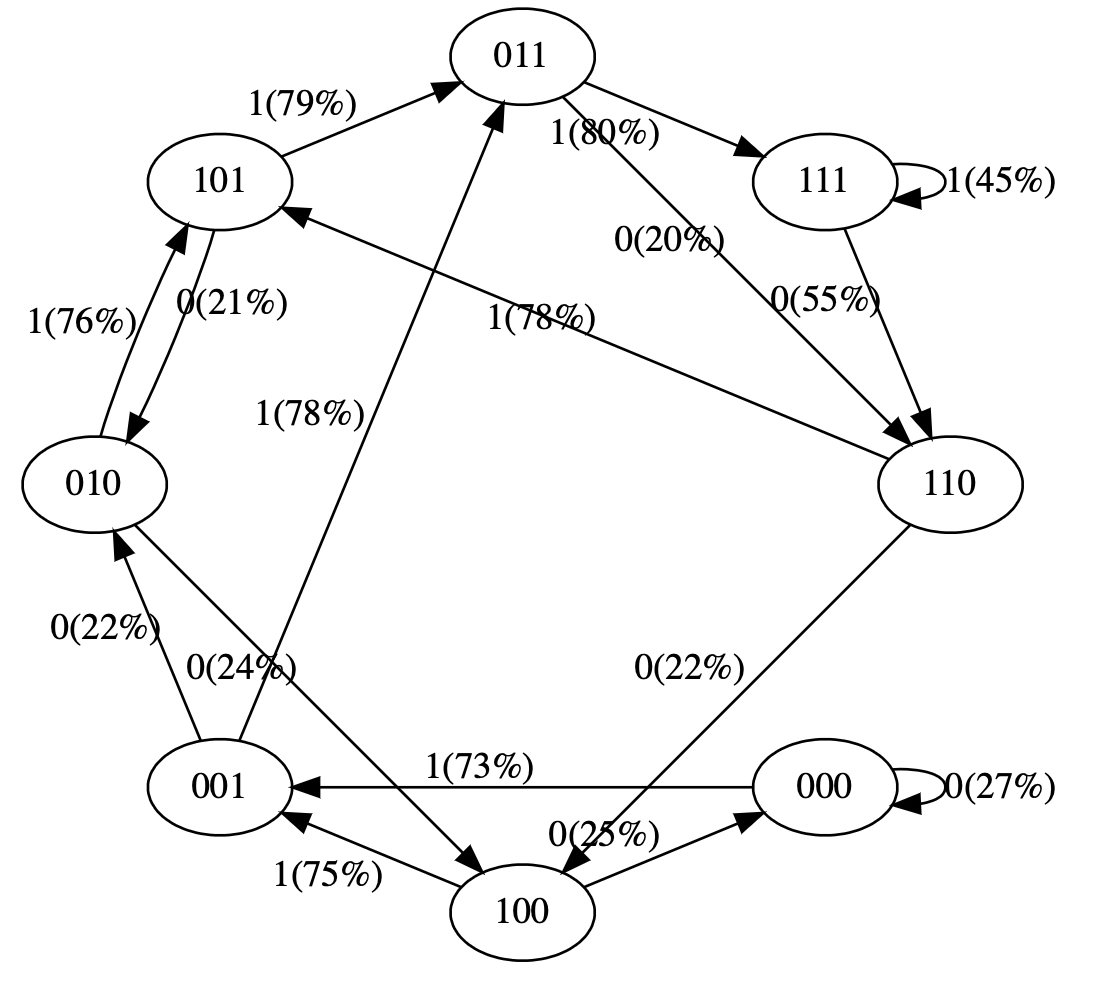

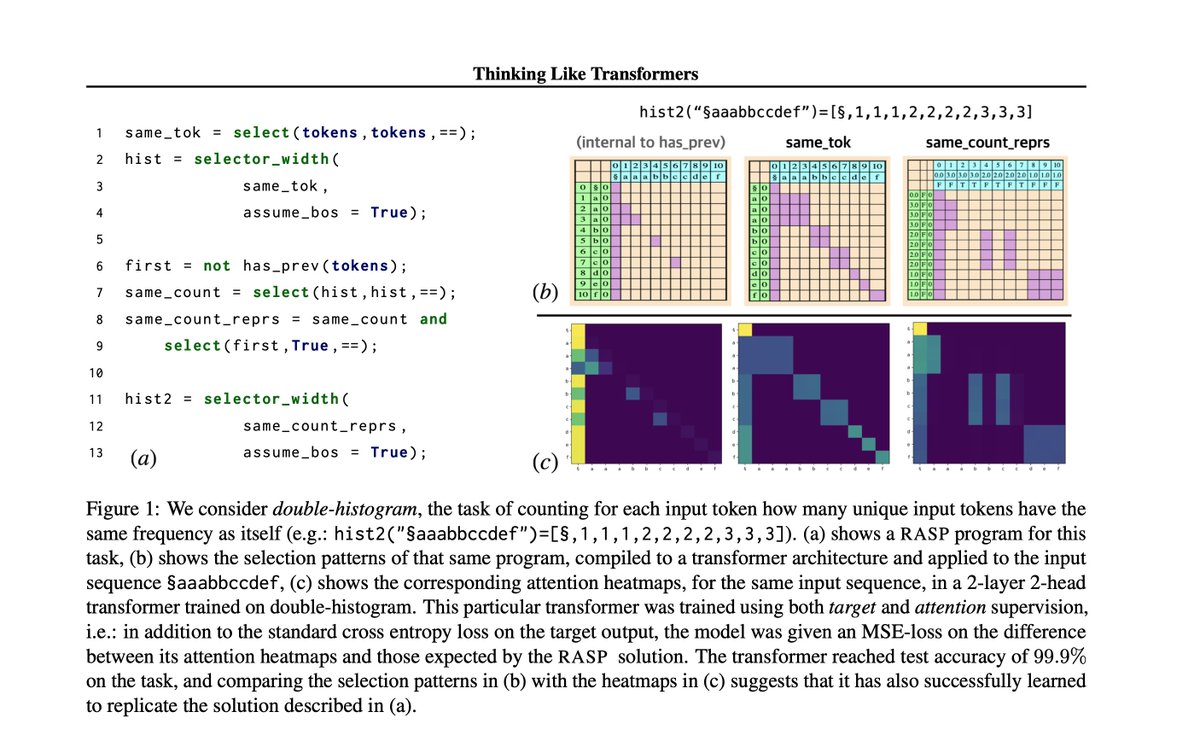

Thinking Like Transformers: RNNs have direct parallels in finite state machines, but Transformers have no such familiar parallel. This paper aims to change that. They propose a computational model for the Transformer in the form of a programming language. arxiv.org/abs/2106.06981
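The language in the paper is called RASP, and three of its core primitives are `select` (build an attention pattern by comparing key and query values), `aggregate` (average values under that pattern) and `selector_width` (count how many positions each query selects). The sketch below is a plain-Python emulation of just those three, simplified so that `aggregate` only averages numbers; it is not the authors' interpreter, and the `reverse` program only follows the general shape of the paper's reversal example.

```python
from typing import Callable, Sequence

# A selector is a boolean attention pattern: row = query position, column = key position.
Selector = list[list[bool]]


def select(keys: Sequence, queries: Sequence, predicate: Callable) -> Selector:
    """Query position q may attend to key position k iff predicate(k, q) holds."""
    return [[bool(predicate(k, q)) for k in keys] for q in queries]


def aggregate(selector: Selector, values: Sequence[float]) -> list[float]:
    """Average the selected values for each query (uniform attention); 0.0 if none selected."""
    out: list[float] = []
    for row in selector:
        chosen = [v for v, s in zip(values, row) if s]
        out.append(sum(chosen) / len(chosen) if chosen else 0.0)
    return out


def selector_width(selector: Selector) -> list[int]:
    """Number of key positions each query selects."""
    return [sum(row) for row in selector]


def reverse(tokens: Sequence[float]) -> list[float]:
    indices = list(range(len(tokens)))
    # Every position attends to every position, so the width is the sequence length.
    length = selector_width(select(tokens, tokens, lambda k, q: True))
    opp_index = [n - i - 1 for n, i in zip(length, indices)]
    # Position i attends only to position length - i - 1 ...
    flip = select(indices, opp_index, lambda k, q: k == q)
    # ... so averaging over a single selected value just copies it.
    return aggregate(flip, tokens)
```

For example, `reverse([1, 2, 3])` returns `[3.0, 2.0, 1.0]`. The point of the exercise is that each step is something a single attention head (or an element-wise MLP step) could plausibly compute.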

You may not like it, but this is the ideal urban form

Stay at 127.0.0.1. Wear a 255.0.0.0.
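The pun decodes as "stay home, wear a mask": 127.0.0.1 is the IPv4 loopback address (the machine itself, i.e. home), and 255.0.0.0 is the /8 subnet mask. Python's standard `ipaddress` module can confirm both; the function name below is just for illustration.

```python
import ipaddress


def decode_pun() -> dict[str, object]:
    home = ipaddress.ip_address("127.0.0.1")
    # The loopback block is 127.0.0.0 with netmask 255.0.0.0 (i.e. 127.0.0.0/8).
    loopback_net = ipaddress.ip_network("127.0.0.0/255.0.0.0")
    return {
        "home_is_loopback": home.is_loopback,      # True: "stay at home"
        "mask_prefixlen": loopback_net.prefixlen,  # 8: 255.0.0.0 is a /8 mask
        "home_in_net": home in loopback_net,       # True: home sits inside that mask
    }
```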

The kids love it! #artificialintelligence #machinelearning #deeplearning #ai #ml #dl #aimemes #mlmemes Original: AI Memes for Artificially Intelligent Teens

Mature

nobody:
me: in the history of disney animated movies there have been exactly 18 types of songs, and i'm going to tell you about each of them

“The Analytical Engine weaves algebraical patterns just as the Jacquard loom weaves flowers and leaves.” A beautiful, very early thought/vision from Ada Lovelace: youtu.be/MQzpLLhN0fY

How an 1803 Jacquard Loom Led to Computer Technology (YouTube)
