Matthieu LC

@matthieulc

robots and llms / prev built typeless to make doctors happy, acq by @Doctolib


Releasing Shoggoth Mini!🐙 Soft tentacle robot meets GPT-4o & RL. I built it to explore the boundaries of weird: expressiveness, aliveness, and AI embodiment. Blogpost: matthieulc.com/posts/shoggoth…

I'm excited to share that my new postdoctoral position is going so well that I submitted a new paper at the end of my first week! A thread below

Sensory Compression as a Unifying Principle for Action Chunking and Time Coding in the Brain biorxiv.org/content/10.110… #biorxiv_neursci

Please give this new model a try, it is so so good!

My favorite demo of the new gpt-realtime model from @matthieulc -- Shoggoth Mini using Realtime API with image input

wrapped up the blogpost and code for the shoggoth mini project! will release everything tomorrow 👀

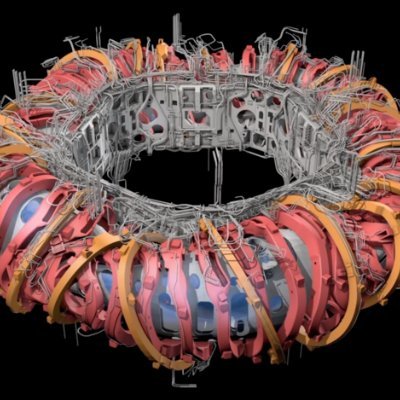

i've been working on a blogpost about the project these past few days, hopefully released by the end of the week! here's a cute animation of the system design

just found out the white in human eyes is adaptive as the contrast makes gaze direction obvious, which helps in communicating intent much better than other animals sciencedirect.com/science/articl…

in dexterity tests, shoggoth mini reveals strong passive grasping generalization

played around with action smoothing due to control instabilities in the PPO policy. see how the raw predicted action diverges as it goes OOD. happy to find that exponential moving average is extremely effective here!
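A minimal sketch of exponential-moving-average action smoothing, for readers who want to see the idea concretely. This is not the project's code: the function name `ema_smooth` and the smoothing factor `alpha` are assumptions for illustration, and the actions are treated as plain lists of floats rather than real policy outputs.

```python
def ema_smooth(actions, alpha=0.2):
    """Smooth a sequence of action vectors with an exponential moving average.

    `alpha` is a hypothetical smoothing factor in (0, 1]: smaller values
    smooth more heavily, and alpha=1.0 returns the raw actions unchanged.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")

    smoothed = []
    prev = None
    for action in actions:
        if prev is None:
            # The first action has no history, so pass it through as-is.
            prev = list(action)
        else:
            # Blend the new raw action with the previous smoothed action.
            prev = [alpha * a + (1.0 - alpha) * p for a, p in zip(action, prev)]
        smoothed.append(prev)
    return smoothed


if __name__ == "__main__":
    raw = [[0.0], [1.0], [1.0]]
    print(ema_smooth(raw, alpha=0.5))
```

A lower `alpha` damps sudden jumps in the raw predicted action at the cost of slower response, so the value would need tuning per robot.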

Learning where to look, learning what to see. Work on Continuous Thought Machines continues to be exciting! As a teaser, take a look at our approach to "Foveated Active Vision". Check it out 👇 (details in the thread) pub.sakana.ai/ctm

approaching the end of v1 here :) worked on some new primitives, and integrating them all together. making the gpt4o realtime api consistent in its use of the primitives was harder than i thought. i will now start writing the blogpost and cleaning the vibecode a bit for…

worked on having gpt4o drive open-loop motor primitives like waving or grabbing from stereo vision. special tokens like <distance: 10cm> get streamed straight into the transcript stream, which gpt4o reacts to. next up is integrating the closed-loop hand tracking. main challenge…
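A rough sketch of how a distance reading might be turned into a special token like the one above and appended to a running transcript. Only the `<distance: 10cm>` format comes from the post. The helper names `distance_token` and `inject_token`, the metre-based input, and the list-of-strings transcript are all assumptions, and no real Realtime API call is shown.

```python
def distance_token(distance_m):
    """Format a distance estimate (assumed to be in metres) as a special token."""
    centimetres = round(distance_m * 100)
    return f"<distance: {centimetres}cm>"


def inject_token(transcript, token):
    """Return a new transcript with the token appended after the existing chunks.

    `transcript` is a hypothetical list of text chunks; the original list
    is left unmodified.
    """
    return transcript + [token]


if __name__ == "__main__":
    transcript = ["hello there"]
    transcript = inject_token(transcript, distance_token(0.10))
    print(" ".join(transcript))
```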

The Worldwide @LeRobotHF hackathon is in 2 weeks, and we have been cooking something for you… Introducing SmolVLA, a Vision-Language-Action model with light-weight architecture, pretrained on community datasets, with an asynchronous inference stack, to control robots🧵
