MIT NLP Group

@mitnlp

MIT Natural Language Processing group.


Hello from the MIT Natural Language Processing group! Follow us to get updates on what our lab members have been up to in #NLP

I’m thrilled to announce Conformal Risk Control: a way to bound quantities other than coverage with conformal prediction. arxiv.org/abs/2208.02814 Check out the worked examples in CV and NLP! The best part is: it’s exactly the same algorithm as split conformal prediction🤯🧵1/5
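A minimal sketch of the claim above, assuming the standard conformal risk control rule: given calibration losses L_i(λ) that are non-increasing in λ and bounded by B, choose the smallest λ with (Σ L_i(λ) + B)/(n + 1) ≤ α. With the miscoverage loss L_i(λ) = 1{s_i > λ} and B = 1, this reduces to the usual split conformal quantile. The function name `conformal_risk_control`, the grid search over candidate thresholds, and the synthetic scores are illustrative assumptions, not the authors' released code.

```python
import numpy as np


def conformal_risk_control(loss_fn, lambdas, alpha, B=1.0):
    """Pick the smallest grid threshold whose inflated calibration risk is <= alpha.

    Hypothetical helper. loss_fn(lam) returns the n calibration losses L_i(lam);
    they are assumed to be non-increasing in lam and bounded in [0, B].
    """
    for lam in np.sort(np.asarray(lambdas)):
        losses = np.asarray(loss_fn(lam), dtype=float)
        n = losses.size
        # n/(n+1) * mean(losses) + B/(n+1), written as a single fraction
        if (losses.sum() + B) / (n + 1) <= alpha:
            return lam
    raise ValueError("no threshold on the grid meets the risk bound")


# Special case: split conformal prediction.
# Hypothetical nonconformity scores s_i = s(X_i, Y_i) on a calibration set.
rng = np.random.default_rng(0)
scores = rng.uniform(size=99)
alpha = 0.1

# Miscoverage loss: L_i(lam) = 1{s_i > lam}, i.e. Y_i falls outside
# the prediction set {y : s(X_i, y) <= lam}; bounded by B = 1.
lam_hat = conformal_risk_control(lambda lam: scores > lam, scores, alpha)

# Compare with the split conformal quantile: the ceil((n+1)(1-alpha))-th smallest score.
k = int(np.ceil((scores.size + 1) * (1 - alpha)))
print(lam_hat, np.sort(scores)[k - 1])
```

Because the losses are assumed monotone in λ, feasibility is also monotone, so scanning thresholds in increasing order and stopping at the first feasible one returns the smallest feasible λ on the grid. Swapping in a different bounded, monotone loss changes the quantity being controlled without changing the procedure.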

Need to debias your new task? Learn how from your old one. Check out our #ICML2022 paper "Learning Stable Classifiers by Transferring Unstable Features" with @CodeTerminator and @BarzilayRegina Paper -> arxiv.org/abs/2106.07847 Code -> github.com/YujiaBao/tofu

New Preprint with @adamjfisch, T.Jaakkola and @BarzilayRegina. We present Consistent Accelerated Inference via 𝐂onfident 𝐀daptive 𝐓ransformers (CATs) CATs can speed up inference 😺 while guaranteeing consistency 😼. The code is available🙀 🔗people.csail.mit.edu/tals/static/Co… #NLProc

Large pre-trained Transformers are great, but expensive to run. However, making them more efficient (e.g., with early exits) can cause undesirable drops in performance. In our new work, we speed up inference while guaranteeing consistency with the original model up to a specifiable tolerance.


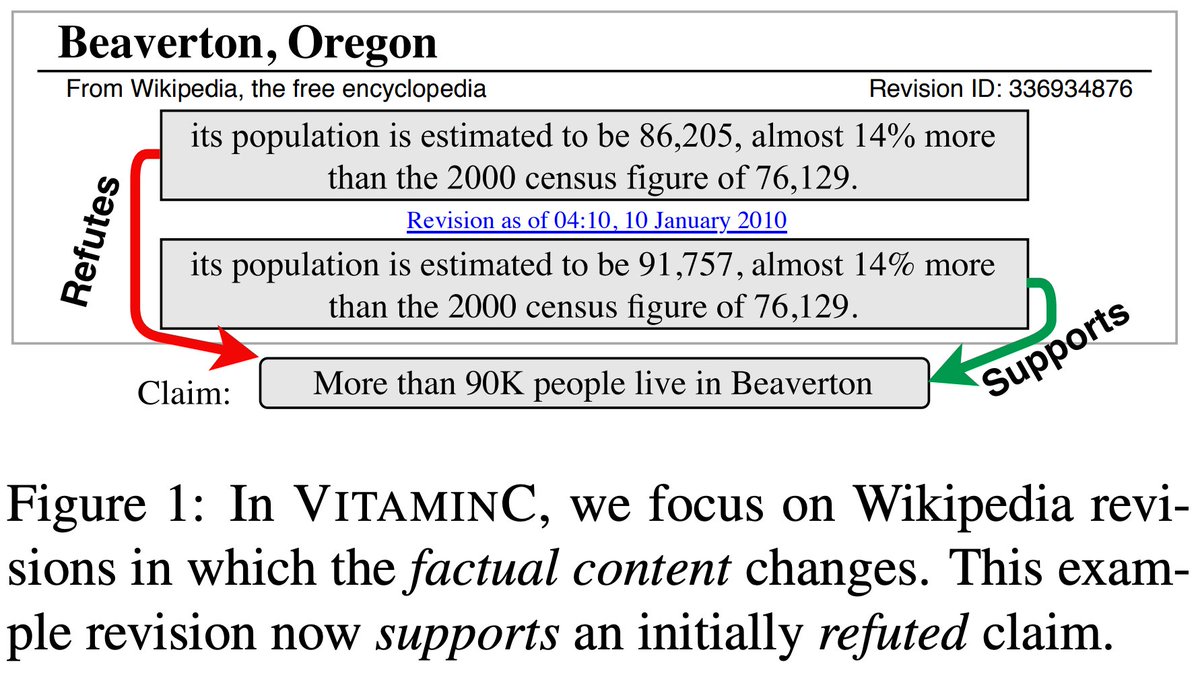

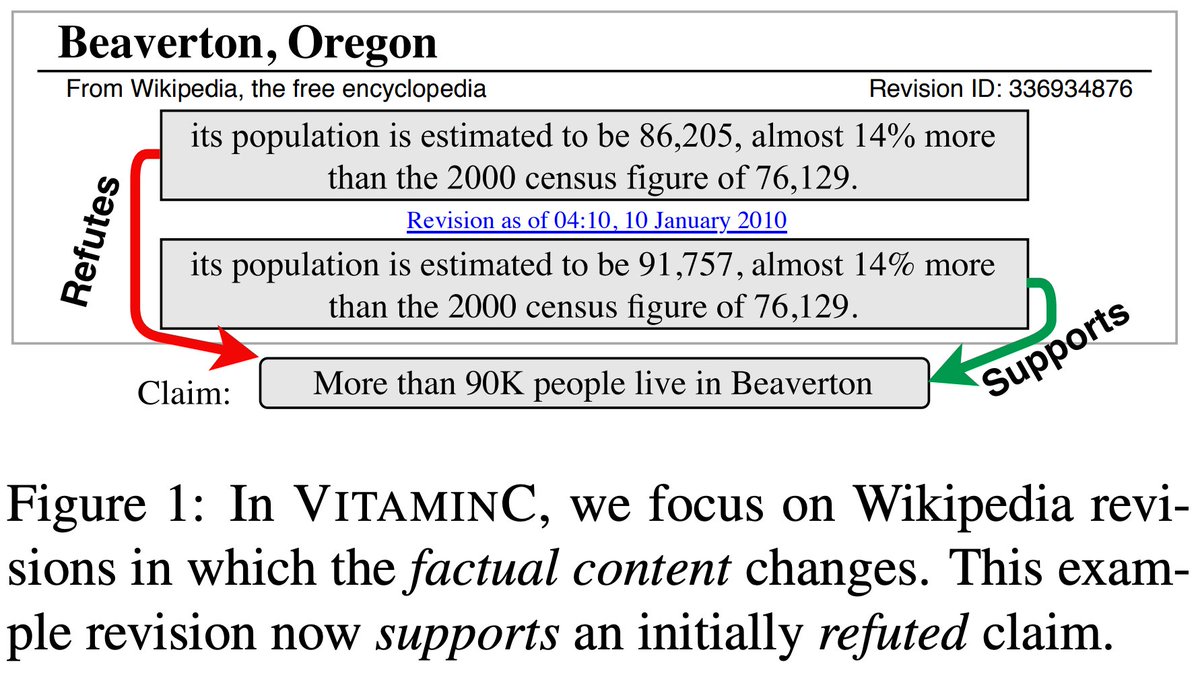

New #NAACL2021 paper out on robust fact verification. Sources like Wikipedia are continuously edited with the latest information. In order to keep up, our models need to be sensitive to these changes in evidence when verifying claims. Work with @TalSchuster and @BarzilayRegina!

Is your Fact Verification model robust enough? Consider adding #VitaminC 🍊 Check out our new #NAACL2021 paper: "Get Your Vitamin C! Robust Fact Verification with Contrastive Evidence" with @adamjfisch and @BarzilayRegina 🔗 arxiv.org/abs/2103.08541 #NLProc #FakeNews📰 🧵1/N


In our @IEEESSP paper, led by @RoeiSchuster, we control the embeddings of words by introducing minimal changes to the pretraining data (e.g. #Wiki edits). This #word_embeddings attack affects many downstream #NLProc tasks! @cornell_tech @MIT_CSAIL link: arxiv.org/abs/2001.04935

In a new paper, we literally "make war mean peace". Our attack on AI perturbs corpora to change word “meanings” that get encoded in vector embeddings like #W2V. With @TalSchuster, Yoav Meri, and Vitaly Shmatikov. To appear at IEEE S&P @IEEESSP #SP20 #NLProc arxiv.org/abs/2001.04935

Congratulations!

Accepted to #ICLR2020. Congratulations to Regina, @CodeTerminator, @menghua_wu and myself! Thanks to the reviewers and the AC for their valuable suggestions and comments. Our code and data are already available on GitHub. The camera-ready version is coming soon. openreview.net/forum?id=H1emf…

#NeurIPS2019 Our work with MIT improves the interpretability of NLP models with an adversarial class-wise rationalization technique, which can find explanations towards any given class. Poster: Tue @ East Exhibition Hall B + C #1. @MITIBMLab @neurobongo @MIT_CSAIL @Bishop_Gorov

If you're at @emnlp2019, don't miss our talks: Towards Debiasing Fact Verification Models * Wednesday 15:42 (2B) * @TalSchuster @darshj_shah Working Hard or Hardly Working: Challenges of Integrating Typology into Neural Dependency Parsers * Thursday 15:30 (201A) * @adamjfisch

Check out our new paper, "Automatic Fact-guided Sentence Modification": a method to automatically modify the factual information in a sentence. arxiv.org/pdf/1909.13838… Joint work with @str_t5 and Prof. Regina Barzilay.

Are we protected from GPT-2 and #GROVER-style models generating fake content? What happens if they are also used legitimately as writing assistants? Check out our new report: arxiv.org/abs/1908.09805 with @RoeiSchuster, @Darsh71307636, Regina Barzilay. #NLProc #emnlp2019 #FakeNews #GPT2

Few-shot Text Classification with Distributional Signatures. What happens if you take meta-learning for vision and apply it to NLP? Prototypical Networks with lexical features perform worse than nearest neighbors on new classes. How can we do better? ;) arxiv.org/abs/1908.06039

Our #emnlp2019 paper is now on arXiv: arxiv.org/abs/1908.05267 * Extending the #FEVER (fact-checking) eval dataset to eliminate bias. * Regularizing the training to alleviate the bias. Coauthors: Darsh Shah, @yeodontsay, Daniel Filizzola, @esantus, Regina Barzilay @emnlp2019 #nlproc

Development datasets released! 6 in-domain and 6 out-of-domain, including BioASQ, DROP, DuoRC, RACE, RelationExtraction, and TextbookQA! We also released BERT baseline results. All the information is at github.com/mrqa/MRQA-Shar…. Check it out and let us know if you have any questions! #mrqa2019

GitHub - mrqa/MRQA-Shared-Task-2019: Resources for the MRQA 2019 Shared Task

The new QA shared task at the @MRQA2019 Workshop has been released.

Announcing a new shared task at the #mrqa2019 workshop @emnlp2019. It tests whether QA systems can generalize to new test distributions. Details and training data are available at mrqa.github.io/shared, with more updates to come! #NLProc

Our paper "GraphIE: A Graph-Based Framework for Information Extraction" has been accepted to #NAACL2019. We study how to model the graph structure of the data in various IE tasks. Joint work with @esantus @jiangfeng1124 @ZhijingJin and Regina Barzilay. (arxiv.org/abs/1810.13083)

Congratulations, Tal! @str_t5

Happy to share that our paper "Cross-Lingual Alignment of Contextual Word Embeddings, with Applications to Zero-shot Dependency Parsing" was accepted to #NAACL2019. The preprint is now available at arxiv.org/abs/1902.09492


