Meet Barot

@mythosscience

Self-organizing neural nets and artificial life. PhD from @NYUdatascience

The video below shows a neural network being updated by a local rule neural network to solve the Iris classification task. Even when I set weights to 0 (the ones I select in red), the local rule network recovers the task network's performance pretty consistently.
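A rough sketch of the idea of damage and recovery under a local rule. This is not the learned local rule network from the video: it assumes a fixed, hand-written perceptron rule in its place, and a tiny linearly separable toy dataset instead of Iris. All names here (`predict`, `local_update`, `train`, `DATA`) are hypothetical.

```python
# Toy stand-in: a single linear unit updated by a local rule.
# ASSUMPTION: the rule is a hand-written perceptron update, not a learned
# rule network, and the data is a made-up 2D set, not Iris.

def predict(weights, x):
    """Threshold unit: fires (1) when the weighted sum is positive."""
    s = sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0

def local_update(weights, x, target):
    """Each weight changes using only its own input and the unit's error."""
    error = target - predict(weights, x)
    return [w + error * xi for w, xi in zip(weights, x)]

def train(weights, data, epochs=1000):
    """Apply the local rule until a full pass makes no mistakes."""
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            if predict(weights, x) != target:
                mistakes += 1
            weights = local_update(weights, x, target)
        if mistakes == 0:
            break
    return weights

def accuracy(weights, data):
    return sum(predict(weights, x) == t for x, t in data) / len(data)

# Inputs are (x1, x2, bias); the constant 1.0 lets the unit learn an offset.
DATA = [
    ((0.0, 0.0, 1.0), 0), ((0.0, 1.0, 1.0), 0),
    ((1.0, 0.0, 1.0), 0), ((1.0, 1.0, 1.0), 0),
    ((3.0, 3.0, 1.0), 1), ((3.0, 4.0, 1.0), 1),
    ((4.0, 3.0, 1.0), 1), ((4.0, 4.0, 1.0), 1),
]

weights = train([0.0, 0.0, 0.0], DATA)
acc_trained = accuracy(weights, DATA)

# "Damage" the unit by zeroing one weight, as in selecting a weight in red.
damaged = [0.0] + weights[1:]
acc_damaged = accuracy(damaged, DATA)

# Keep running the same local rule and let it repair the damage.
recovered = train(damaged, DATA)
acc_recovered = accuracy(recovered, DATA)

print(acc_trained, acc_damaged, acc_recovered)
```

Because the toy data is linearly separable, the perceptron rule is guaranteed to reach a mistake-free pass from any starting weights, which is why the zeroed weight gets repaired here. A learned rule network on Iris would not come with that guarantee.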

Within the next 5 years there's going to be a better, more efficient way to train neural networks than gradient-based optimization. My bet is that it's going to take inspiration from living organisms, with properties like self-organization, replication, and evolution.

CDS Student Research Showcase: CDS PhD graduate Meet Barot (@mythosscience) is creating "living" neural networks at his company Mythos Scientific (mythos.science), making AI systems behave more like biological ones.

Researchers are rediscovering the forgotten legacy of a pioneering Black scientist named Charles Henry Turner, who conducted trailblazing research on the cognitive traits of bees, spiders, and more. nautil.us/charles-henry-…

Introducing a new state-of-the-art code embedding model:

- SOTA performance on the CodeSearchNet benchmark

- Truly open (weights, data, code)

- Apache 2.0

Nomic Embed Code brings us one step closer to a world with truly open source embedding models for every modality! 🧵

Test time compute should be on weight generation instead of token generation

Maybe different shapes of this landscape are suited to different modes of life. The shape suited to society now may not always have been suited to it before.

According to dynamical systems, psychiatric disorders can be seen as energy landscapes with peaks and valleys. OCD has deep valleys where neural activity gets stuck, while schizophrenia has shallow valleys that let neural activity roam too freely, connecting unrelated ideas.

i think the only way you don't develop agi exclusively for state power is if you have both a decentralized development and compute process. any other way will be captured by the state, there'd be too much at stake.

anthropic partners with palantir and you guys are still like ‘no, but this NEW agi lab is really all about the social good! these guys really care!’ brother no one has a choice in this arms race. you gotta signal virtue and drive for power, both at the same time full throttle

This is honestly the biggest threat to the current AI - someone discovering how you can model all those trillions of coefficients with a relatively simple formula.

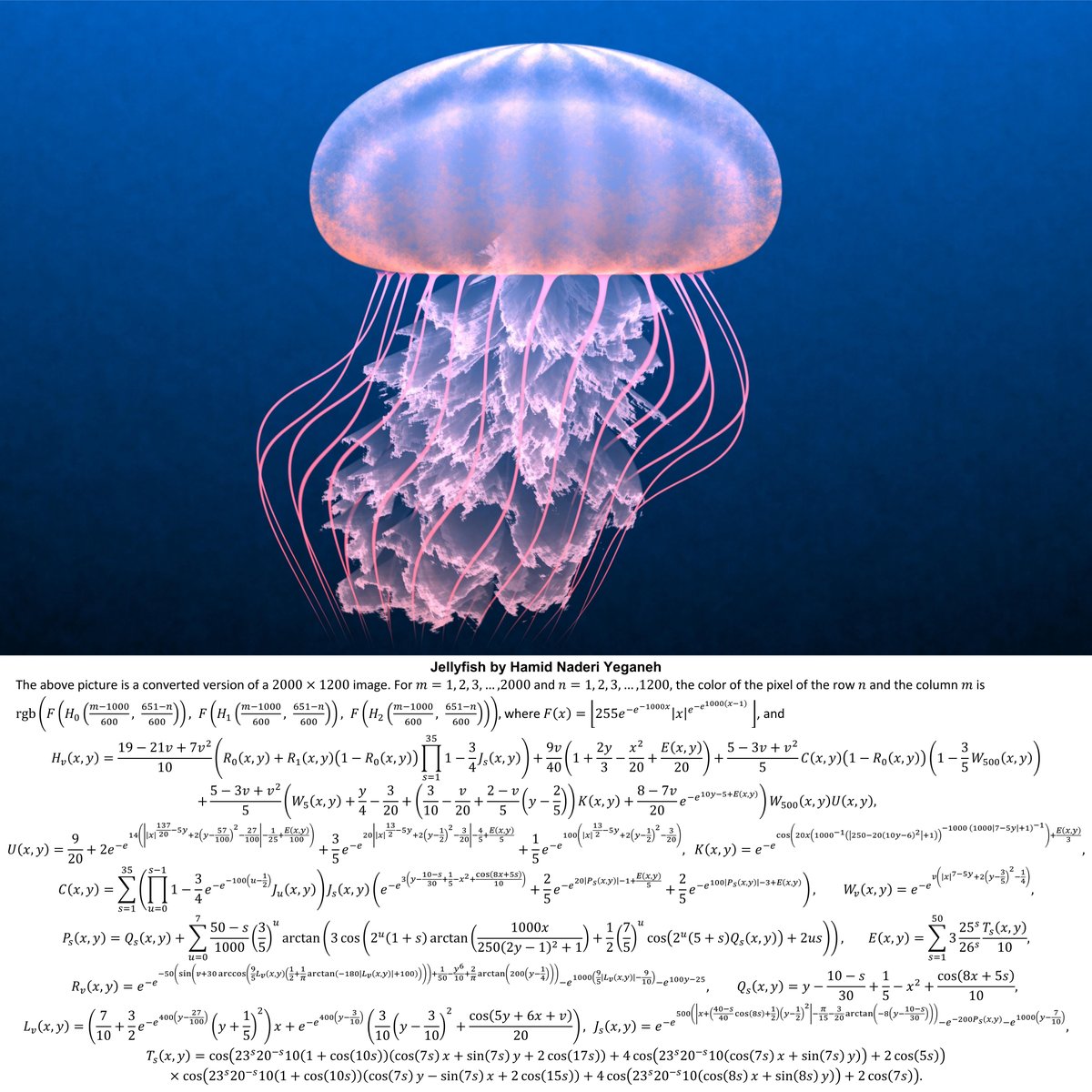

I drew this jellyfish with mathematical equations.

kinda sense that we're gonna get more hopfield network papers now

Prediction: while backprop will likely play a role in any future AGI, there will also be at least one completely novel weight-updating learning algorithm that is yet to be invented today.
