Phind

@phindsearch

AI answer engine for complex questions.

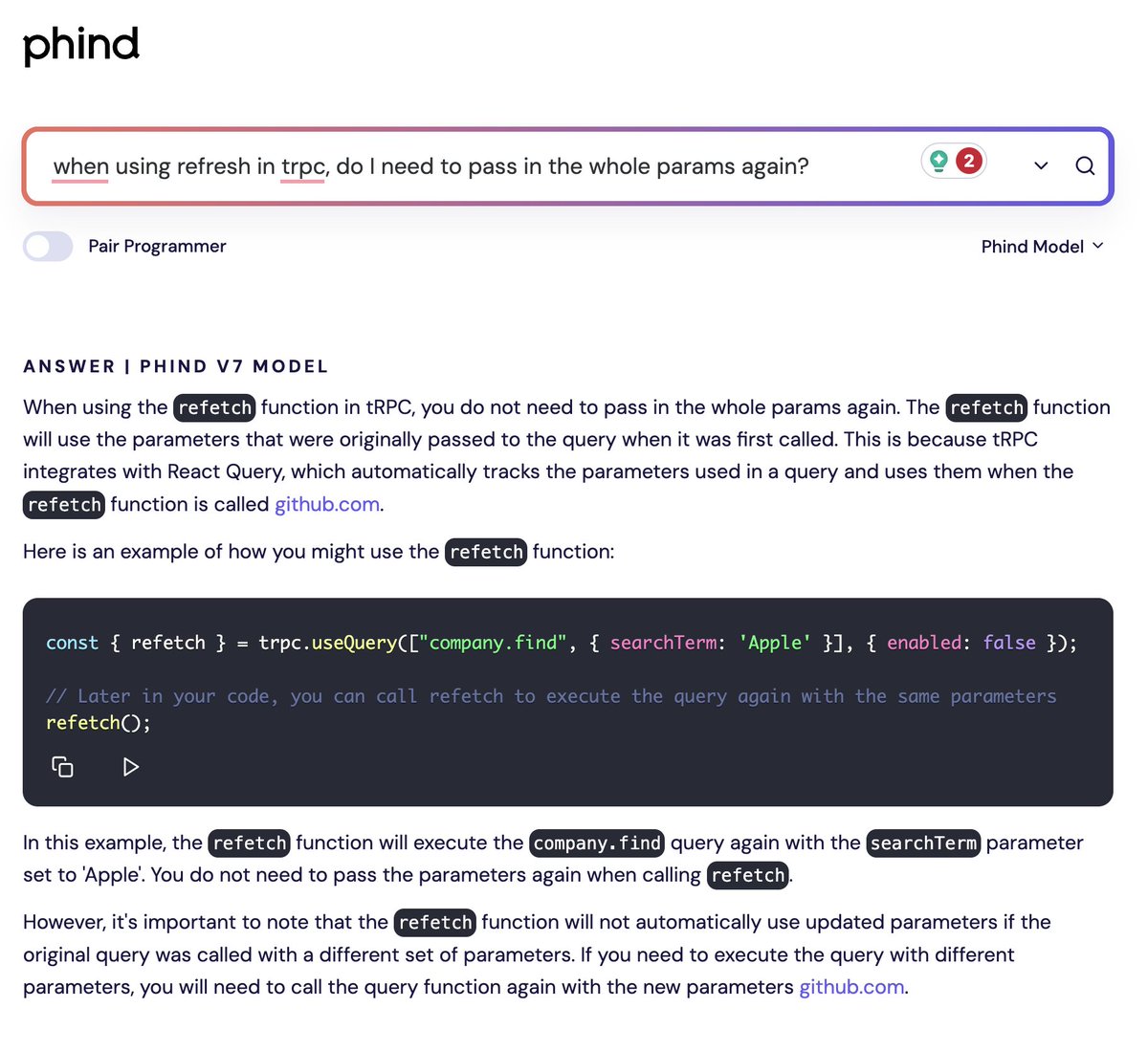

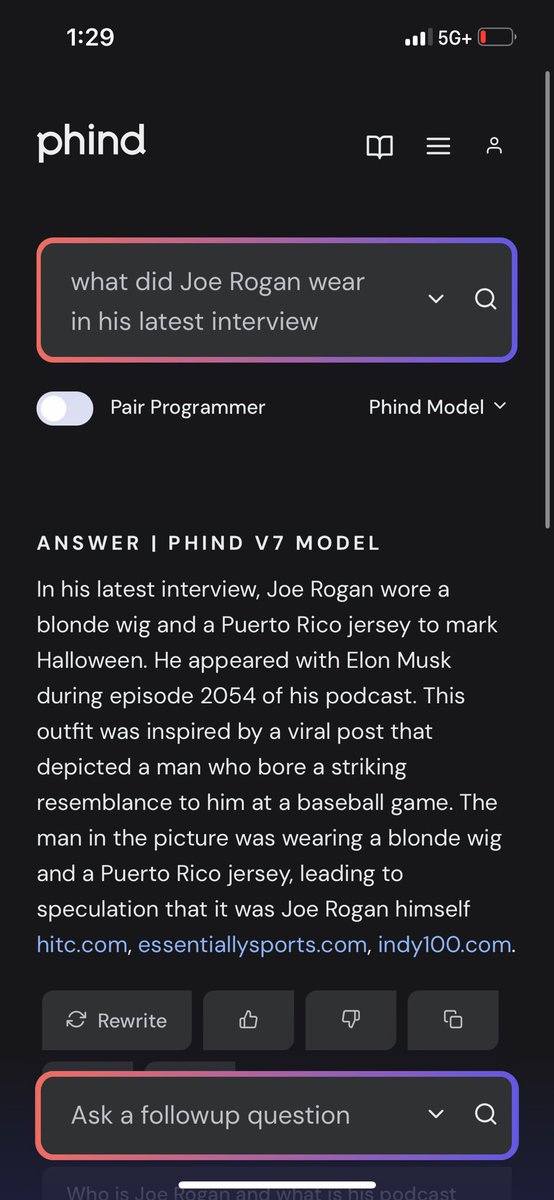

We're excited to launch Phind 2 today! The new Phind is able to go beyond text to present answers visually with inline images, diagrams, cards, and other widgets to make answers much more delightful. Phind is also now able to seek out information on its own. If it needs more…

Introducing Phind-405B, our new flagship model! Phind-405B scores 92% on HumanEval, matching Claude 3.5 Sonnet. We're particularly happy with its performance on real-world tasks, especially when it comes to designing and implementing web apps. Our focus on technical topics…

We are excited and proud to be a signatory of SV Angel's Open Letter on AI: openletter.svangel.com

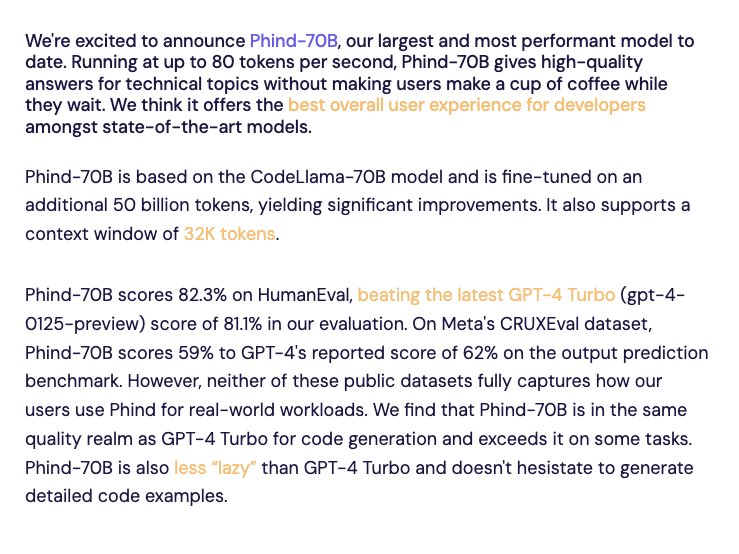

"We find that Phind-70B is in the same quality realm as GPT-4 Turbo for code generation and exceeds it on some tasks. Phind-70B is significantly faster than GPT-4 Turbo, running at 80+ tokens per second to GPT-4 Turbo's ~20 tokens per second." What a launch! 👀

Introducing Phind-70B – closing the code quality gap with GPT-4 Turbo while running 4x faster phind.com/blog/introduci…

Phind-70b - A CodeLlama fine tune that claims to have GPT-4 code gen! Coming soon to open source 👏👏

Phind-70B looks like a big deal! Phind-70B closes the code generation quality gap with GPT-4 Turbo and is 4x faster. Phind-70B can generate 80+ token/s (GPT-4 is reported to generate ~20 tokens/s). Interesting to see that inference speed is becoming a huge factor in comparing…

I'm very impressed by the way Phind, despite being the tiniest of startups, has managed to keep up with the giants. Phind-70B beats GPT-4 Turbo at code generation, and runs 4x faster. There is definitely still room for startups in this game.

Introducing Phind-70B, our largest and most capable model to date! We think it offers the best overall user experience for developers amongst state-of-the-art models. phind.com/blog/introduci…

Join us for our San Francisco meetup on February 6th! We’d love to meet you and hear about how we can keep making Phind better for you. And, of course, food and drinks will be provided :) forms.gle/tEGwu2hBtYKptN…

Announcing much faster Phind Model inference for Pro and Plus users. Your request will be served by a dedicated cluster powered by NVIDIA H100s for the lowest latency and a generation speed of up to 100 tokens per second. If you’re not yet a Pro user, join us at…

🚀 Introducing GPT-4 with 32K context for Phind Pro users. If you’re not yet a subscriber, join us at phind.com/plans.

🚀 While ChatGPT is pausing signups, Phind continues to be better at programming while being 5x faster. We’ve been rapidly adding capacity and it’s only getting faster. Check it out ➡️ phind.com

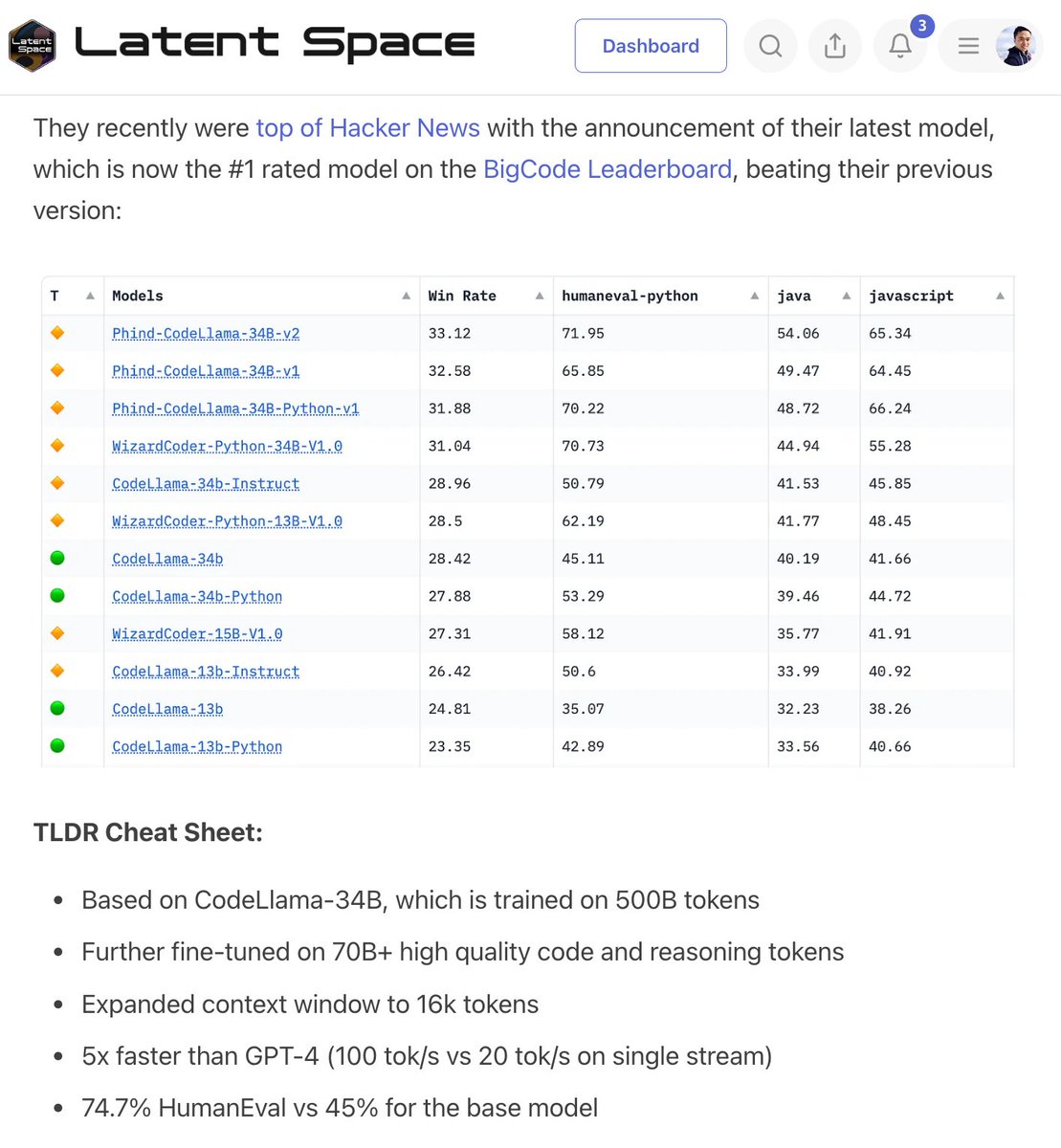

🆕 pod: Beating GPT-4 with Open Source LLMs latent.space/p/phind with @MichaelRoyzen of @phindsearch! The full story of how Phind finetuned CodeLlama to:

- reach 74.7% HumanEval vs 45% base model

- 2x'ed GPT4 context window to 16k tokens

- 5x faster than GPT-4 (100 tok/s)…

Phind - @phindsearch worked pretty well for my tiny Bash script. It even adopted how I use a third-party script (jq). All on first try. See it in action:

Phind can now beat GPT-4 at programming, and does it 5x faster. phind.com/blog/phind-mod…

As well as being a useful tool, Phind is an example of an encouraging point for startups: you can beat the AI giants in a specific domain with orders of magnitude less resources. It would be interesting if the biggest bang for the buck in AI was ASI rather than AGI.
