Zidi Xiong

@polaris_7369

PhD student @Harvard | Undergrad @IllinoisCS

Great project from the AI safety class! See all projects and notes on lesswrong.com/w/cs-2881r

What do agents do when the only path to a goal requires harmful action? Do they choose harm or accept failure? We explore these questions with experiments in an agentic coding environment. Code: github.com/ItamarRocha/sc… Blog Post: lesswrong.com/posts/AJANBeJb… 1/n

Thread about the mini-project we did for @boazbaraktcs AI Safety class. The results are interesting, and I had a laugh seeing the unhinged things these frontier models can come up with under such a simple task. 🤣

What do agents do when the only path to a goal requires harmful action? Do they choose harm or accept failure? We explore these questions with experiments in an agentic coding environment. Code: github.com/ItamarRocha/sc… Blog Post: lesswrong.com/posts/AJANBeJb… 1/n

What do agents do when the only path to a goal requires harmful action? Do they choose harm or accept failure? We explore these questions with experiments in an agentic coding environment. Code: github.com/ItamarRocha/sc… Blog Post: lesswrong.com/posts/AJANBeJb… 1/n

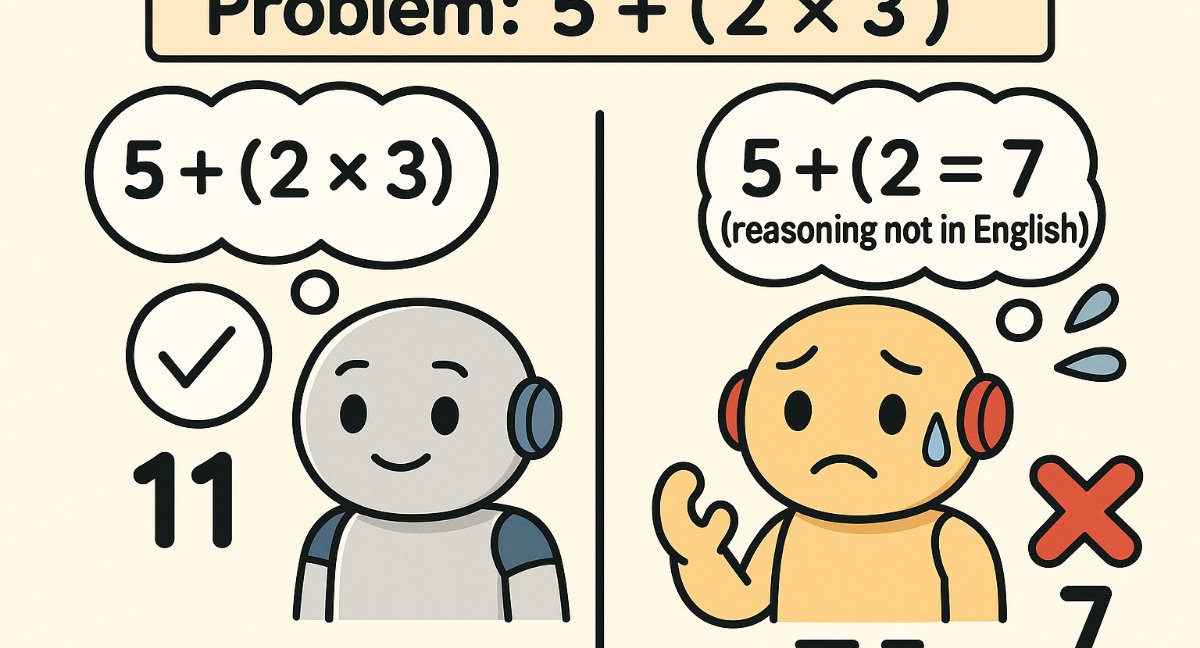

1/ Multilinguality & RL folks: Previously, we found LMs often fail to produce reasoning traces in the user's language; prompting/SFT helps, but hurts accuracy. (To be presented on Fri Nov 7, 12:30-13:30 #EMNLP2025 ) ⚠️ More importantly, we already tested an RL fix! Thread below.

2/ many previous works, including ours, showed that prompting does not work... We present some“budget alignment” recipes: • SFT : 817 multilingual chains to teach in-language reasoning • GRPO (math500-only RL): recover/boost accuracy while keeping the language policy…

Reasoning models do not think in user's query langauge, our work will be presented by @Jirui_Qi at #EMNLP2025! Now we dive a bit more into the potential solution! We set a goal: to make models reason in the user’s language without losing accuracy. huggingface.co/blog/shanchen/…

Check out our new results on multilingual reasoning!

We’re super excited to introduce DIRT: The Distributed Intelligent Replicator Toolkit: github.com/aaronwalsman/d… DIRT is a GPU-accelerated multi-agent simulation platform enabling artificial life research in dynamic, open-ended environments at unprecedented scales. 1/n

🧠 How faithfully does AI think? Join @ceciletamura of @ploutosai & @polaris_7369 @Harvard (author) as they explore Measuring the Faithfulness of Thinking Drafts in Large Reasoning Models. 📅 Oct 15 · 4 PM PDT 🎥 [world.ploutos.dev/stream/elated-…]()

![ceciletamura's tweet image. 🧠 How faithfully does AI think?

Join @ceciletamura of @ploutosai & @polaris_7369 @Harvard (author) as they explore Measuring the Faithfulness of Thinking Drafts in Large Reasoning Models.

📅 Oct 15 · 4 PM PDT

🎥 [world.ploutos.dev/stream/elated-…]()](https://pbs.twimg.com/media/G3RQqR7asAAowug.jpg)

Our paper on multilingual LRM was featured in State of AI Report 2025! The report notes: forcing reasoning in the user's language boosts match to ~98% but drops accuracy by 9-13 % — the core trade-off we studied. 📄arxiv.org/abs/2505.22888 Thanks @nathanbenaich @stateofaireport

🪩The one and only @stateofaireport 2025 is live! 🪩 It’s been a monumental 12 months for AI. Our 8th annual report is the most comprehensive it's ever been, covering what you *need* to know about research, industry, politics, safety and our new usage data. My highlight reel:

Just accepted by #NeurIPS2025 🎉

(1/n) Large Reasoning Models (LRMs) enhance complex problem-solving by generating multi-path "Thinking Drafts." But how reliable are these drafts? Can we trust the intermediate reasoning steps and final conclusions, and effectively monitor or control them? 🤔

Are there conceptual directions in VLMs that transcend modality? Check out our COLM spotlight🔦 paper! We analyze how linear concepts interact with multimodality in VLM embeddings using SAEs with @Huangyu58589918, @napoolar, @ShamKakade6 and Stephanie Gil arxiv.org/abs/2504.11695

Our paper on multilingual reasoning is accepted to Findings of #EMNLP2025! 🎉 (OA: 3/3/3.5/4) We show SOTA LMs struggle with reasoning in non-English languages; prompt-hack & post-training improve alignment but trade off accuracy. 📄 arxiv.org/abs/2505.22888 See you in Suzhou!

[1/]💡New Paper Large reasoning models (LRMs) are strong in English — but how well do they reason in your language? Our latest work uncovers their limitation and a clear trade-off: Controlling Thinking Trace Language Comes at the Cost of Accuracy 📄Link: arxiv.org/abs/2505.22888

![Jirui_Qi's tweet image. [1/]💡New Paper

Large reasoning models (LRMs) are strong in English — but how well do they reason in your language?

Our latest work uncovers their limitation and a clear trade-off:

Controlling Thinking Trace Language Comes at the Cost of Accuracy

📄Link: arxiv.org/abs/2505.22888](https://pbs.twimg.com/media/GsKT0KoaUAIJ_Jw.png)

What precision should we use to train large AI models effectively? Our latest research probes the subtle nature of training instabilities under low precision formats like MXFP8 and ways to mitigate them. Thread 🧵👇

‼️ 1/n Ask your reasoning model to think in lower resource language does degrade models’ performance at the moment. My awesome Co-author already communicated the main points in the thread, I will just communicate some random things we learned in my 🧵

[1/]💡New Paper Large reasoning models (LRMs) are strong in English — but how well do they reason in your language? Our latest work uncovers their limitation and a clear trade-off: Controlling Thinking Trace Language Comes at the Cost of Accuracy 📄Link: arxiv.org/abs/2505.22888

![Jirui_Qi's tweet image. [1/]💡New Paper

Large reasoning models (LRMs) are strong in English — but how well do they reason in your language?

Our latest work uncovers their limitation and a clear trade-off:

Controlling Thinking Trace Language Comes at the Cost of Accuracy

📄Link: arxiv.org/abs/2505.22888](https://pbs.twimg.com/media/GsKT0KoaUAIJ_Jw.png)

Check out our new work on multilingual reasoning in LRMs!

[1/]💡New Paper Large reasoning models (LRMs) are strong in English — but how well do they reason in your language? Our latest work uncovers their limitation and a clear trade-off: Controlling Thinking Trace Language Comes at the Cost of Accuracy 📄Link: arxiv.org/abs/2505.22888

![Jirui_Qi's tweet image. [1/]💡New Paper

Large reasoning models (LRMs) are strong in English — but how well do they reason in your language?

Our latest work uncovers their limitation and a clear trade-off:

Controlling Thinking Trace Language Comes at the Cost of Accuracy

📄Link: arxiv.org/abs/2505.22888](https://pbs.twimg.com/media/GsKT0KoaUAIJ_Jw.png)

United States 趨勢

- 1. $NVDA 84.5K posts

- 2. Peggy 39K posts

- 3. FEMA 16.3K posts

- 4. Jensen 28.2K posts

- 5. Sheila Cherfilus-McCormick 13.5K posts

- 6. #Jupiter 4,468 posts

- 7. Dean Wade N/A

- 8. Baba Oladotun N/A

- 9. #YIAYalpha N/A

- 10. Sam Harris 1,153 posts

- 11. Koa Peat N/A

- 12. Jabari N/A

- 13. GeForce Season 6,614 posts

- 14. NASA 57.8K posts

- 15. Nae'Qwan Tomlin N/A

- 16. #SomosPatriaDePaz N/A

- 17. Arabic Numerals 7,577 posts

- 18. #jeopardyblindguess N/A

- 19. Solo Ball N/A

- 20. Frank Anderson N/A

Something went wrong.

Something went wrong.