Ziyu Wan

@raywzy1

Researcher @Microsoft. Previously @GoogleDeepMind | @Stanford | @RealityLabs | @CityUHongKong | @MSFTResearch | Tencent AI Lab.

great post!

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other…

Want to know how to evaluate the spatial intelligence capability of your VLM? We will present VLM4D this afternoon! #ICCV2025

Can VLMs really think in 4D (3D space + time)? 🤔 When a model can’t tell “left” from “right,” something’s missing. That’s why we built VLM4D — a benchmark for spatiotemporal reasoning, debuting at #ICCV2025 📅 Oct 21 | 🕒 3–5 PM | 📍Exhibit Hall I #798 vlm4d.github.io

Bee A High-Quality Corpus and Full-Stack Suite to Unlock Advanced Fully Open MLLMs

I’ll be at ICCV! 🙋‍♂️ Message me if you’re interested in joining us at Snap Research (Personalization Team). We’re hiring research interns year-round in 🖼️ image editing, 🎨 personalized generation, 🤖 agentics, and 🧠 VLMs for generation. We also have full-time Research Scientist roles open!

👍👍👍

🚀Excited to share our recent research:🚀 “Learning to Reason as Action Abstractions with Scalable Mid-Training RL” We theoretically study 𝙝𝙤𝙬 𝙢𝙞𝙙-𝙩𝙧𝙖𝙞𝙣𝙞𝙣𝙜 𝙨𝙝𝙖𝙥𝙚𝙨 𝙥𝙤𝙨𝙩-𝙩𝙧𝙖𝙞𝙣𝙞𝙣𝙜 𝙍𝙇. The findings lead to a scalable algorithm for learning action…

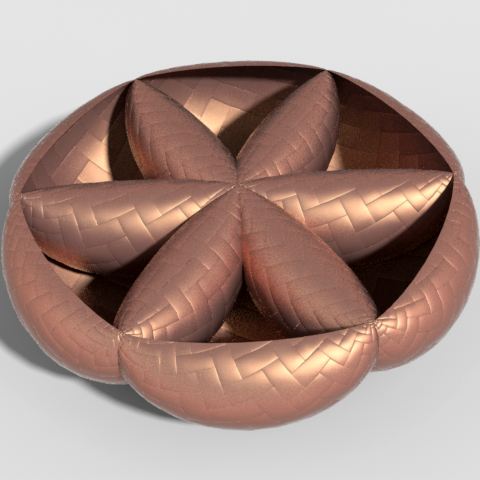

RenderFormer, from Microsoft Research, is the first model to show that a neural network can learn a complete graphics rendering pipeline. It’s designed to support full-featured 3D rendering using only machine learning—no traditional graphics computation required. Learn more:…

“Everyone knows” what an autoencoder is… but there's an important complementary picture missing from most introductory material. In short: we emphasize how autoencoders are implemented—but not always what they represent (and some of the implications of that representation).🧵

Instead of blending colors along rays and supervising the resulting images, we project the training images into the scene to supervise the radiance field. Each point along a ray is treated as a surface candidate, independently optimized to match that ray's reference color.

simple but effective way to inject 3D info into your world simulator😉

✅Videos are 2D projections of our dynamic 3D world. 🧠But can video diffusion models implicitly learn 3D information through training on raw video data, without explicit 3D supervision? ❌Our answer is NO !!!

📢 The submission portal for #3DV2026 is LIVE on OpenReview 👉 openreview.net/group?id=3DV/2… Ready to ride the wave? 🌊 Deadline Aug 18 (≈ 5 weeks)—bring your best and make a splash!

awesome work by @jiacheng_chen_ and @sanghyunwoo1219 on 3D-grounded visual compositing (and nice demos!)

Introducing BlenderFusion: Reassemble your visual elements—objects, camera, and background—to compose a new visual narrative. Play the interactive demo: blenderfusion.github.io

🤯!!!

“Introduction to Algorithms” is truly a phenomenal textbook! (Fun fact: back in high school, my laptop and this 1,300-page giant were inseparable desk buddies 😂😂😂)

MIT’s “Introduction to Algorithms,” published #otd in 1990, is the world’s most cited CS text, with 67K citations & over a million copies sold. bit.ly/3y1yMPR @mitpress

I grew up editing action movies with two VHS players and having to warn police we were filming with painted toy guns. My first VFX was done frame by frame in Photoshop from MiniDV. Kids growing up with advanced versions of this tech are going to do absolutely incredible things.

We discovered that imposing a spatio-temporal weight space via LoRAs on DiT-based video models unlocks powerful customization! It captures dynamic concepts with precision and even enables composition of multiple videos together! 🎥✨

Inverse rendering (direct G-buffer estimation) and forward rendering (no light transport simulation) using a video diffusion model! Congratulations on the great work 🥳🥳🥳

🚀 Introducing DiffusionRenderer, a neural rendering engine powered by video diffusion models. 🎥 Estimates high-quality geometry and materials from videos, synthesizes photorealistic light transport, enables relighting and material editing with realistic shadows and reflections
