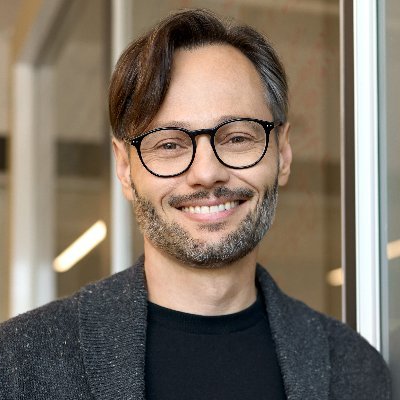

Rich Barton-Cooper

@richb_c

AI safety researcher @ MATS


Want to be mentored by one of the TIME100? At an org with a cool distinctive red logo? I have the program for you...

Honored and humbled to be in @TIME's list of the TIME100 AI of 2025! time.com/collections/ti… #TIME100AI

MATS has been an incredible experience! I've just completed 8.0, about to start the extension. If you want to work on important and urgent AI safety research under excellent mentorship, within a highly supportive ecosystem, I can't recommend it highly enough - apply by Oct 2nd!

MATS 9.0 applications are open! Launch your career in AI alignment, governance, and security with our 12-week research program. MATS provides field-leading research mentorship, funding, Berkeley & London offices, housing, and talks/workshops with AI experts.

Excited to be working on this. Any feedback is much appreciated!

My current MATS stream is looking into black box monitoring for scheming. We've written a post with early results. If you have suggestions for what we could test, please lmk. If you have good ideas for hard scheming datasets, even better.

