You might like

A groundbreaking new research study published in the prestigious Journal of the American Medical Association shows a promising way to reduce stress and burnout! Discover how SKY Breath Meditation can help 🙌 🙌 Tap link in bio to learn more! #artofliving #burnout #stress

We are excited to announce Chain of Code (CoC), a simple yet surprisingly effective method that improves Language Model code-driven reasoning. On BIG-Bench Hard, CoC achieves 84%, a gain of 12% over Chain of Thought. Website: chain-of-code.github.io Paper: arxiv.org/pdf/2312.04474…

1/7 We built a new model! It’s called Action Transformer (ACT-1) and we taught it to use a bunch of software tools. In this first video, the user simply types a high-level request and ACT-1 does the rest. Read on to see more examples ⬇️

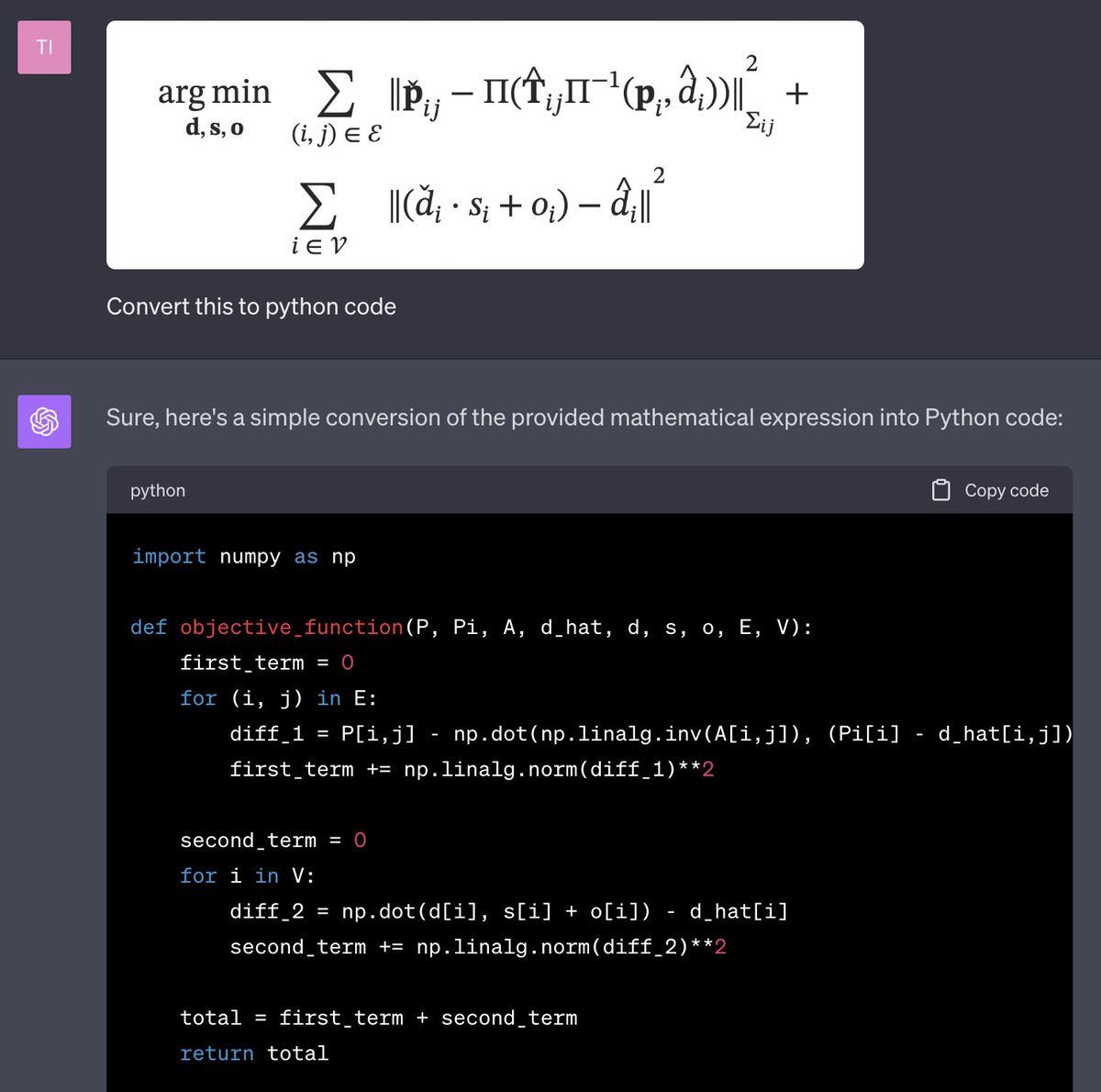

This is a game changer. You can use ChatGPT to transform equations into Python functions. Wish I had this 5 years ago.
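As a concrete illustration of the kind of translation meant, here is the quadratic formula x = (-b ± √(b² − 4ac)) / (2a) written by hand as a Python function. The choice of equation and the name `quadratic_roots` are illustrative assumptions. This is not ChatGPT output and not taken from the post.

```python
import math


def quadratic_roots(a: float, b: float, c: float) -> tuple[float, float]:
    """Real roots of a*x**2 + b*x + c = 0 via x = (-b ± sqrt(b**2 - 4*a*c)) / (2*a)."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    discriminant = b**2 - 4 * a * c
    if discriminant < 0:
        raise ValueError("no real roots: the discriminant is negative")
    sqrt_d = math.sqrt(discriminant)
    # The "±" in the formula becomes two explicit return values.
    return (-b + sqrt_d) / (2 * a), (-b - sqrt_d) / (2 * a)


print(quadratic_roots(1, -3, 2))  # (2.0, 1.0)
```

The edge cases (a = 0, negative discriminant) are exactly where a mechanical equation-to-code translation needs a human decision, so it is worth checking them in any generated function.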

I finally got access to ChatGPT with Vision! The first thing I wanted to test was its coding capability with just a picture, and the results are mind-blowing. After sending only a screenshot of my calculator app, it essentially coded a replica of it.

New blog post: Multimodality and Large Multimodal Models (LMMs). Being able to work with data of different modalities -- e.g. text, images, videos, audio, etc. -- is essential for AI to operate in the real world. This post covers multimodal systems in general, including Large…

✍️ BONUS If you are like me and you haven’t got access to ChatGPT with vision yet, LLaVA, a large language and vision assistant, just came out. It is open source and completely free to use! Link: llava-vl.github.io

🚨 BREAKING: GPT-4 image recognition already has a new competitor. Open-sourced and completely free to use. Introducing LLaVA: Large Language and Vision Assistant. I compared the viral parking space photo on GPT-4 Vision to LLaVA, and it worked flawlessly (see video).

This looks like a robot dog dancing to disco lights, but it's actually a very cool visualization of the neural network that controls the bot! Blue -> green -> red corresponds to stronger neural activation. The NN is a multilayer perceptron that maps proprioception (e.g.…
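A minimal sketch of how such a visualization could be driven: run a forward pass through a small MLP, keep each layer's activations, and bucket their magnitudes into blue, green, or red. The tanh activation, the layer sizes, the hand-picked weights, and the color thresholds are all hypothetical; the post does not specify any of them.

```python
import math

Layer = tuple[list[list[float]], list[float]]  # (weight rows, biases)


def mlp_forward(x: list[float], layers: list[Layer]) -> list[list[float]]:
    """Run a tanh MLP and return the activations of every layer, in order."""
    activations = []
    h = x
    for weights, biases in layers:
        # Each unit computes tanh(w . h + b); tanh keeps values in (-1, 1).
        h = [math.tanh(sum(w * v for w, v in zip(row, h)) + b)
             for row, b in zip(weights, biases)]
        activations.append(h)
    return activations


def activation_color(a: float) -> str:
    """Bucket |a| into a blue -> green -> red scale (hypothetical thresholds)."""
    magnitude = abs(a)
    if magnitude < 1 / 3:
        return "blue"
    if magnitude < 2 / 3:
        return "green"
    return "red"


# Hypothetical 2-input, 2-unit layer with identity weights: the first
# unit stays at zero, the second saturates close to 1.
layers = [([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])]
acts = mlp_forward([0.0, 10.0], layers)
print([activation_color(a) for a in acts[0]])  # ['blue', 'red']
```

In a real controller the input vector would hold proprioceptive readings and the weights would come from training; recoloring every unit on each control step is what makes the network appear to "dance".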

This is the way to unlock the next trillion high-quality tokens, currently frozen in textbook pixels that are not LLM-ready. Nougat: an open-source OCR model that accurately scans books with heavy math/scientific notations. It's ages ahead of other open OCR options. Meta is…

Simulating a software company with LLMs! 🚀 Remember the 25 agents living in a simulation? This does the same, but for a software company. ChatDev asks how to effectively get Large Language Model agents to collaborate on writing entire code bases: - Writing a…

This is really WILD 🤯🤯. Turns out we supported mobile all along with @MultiON_AI, but just didn't know!! One of our early users had our AI Agent do Walmart shopping on his behalf to make spaghetti by automating his phone!! 🤩🔥 Now a personal & private AI agent that can do…

Here's a glimpse of mobile AGI and how it will change the way we use our devices forever (I had to stop it before it went through and actually placed the pickup order!) Running on my Android phone (Moto G Power 2021) via Kiwi Browser and @MultiON_AI's browser extension. 👏

Impressive. MetaGPT is about to reach 10,000 stars on GitHub. It's a multi-agent framework whose agents act as engineer, product manager, architect, and project manager. With a single line of text it can output the entire process of a software company along with carefully…

Excited to introduce Dynalang, an interactive agent that understands diverse types of language in visual environments! 🤖💬 By learning a multimodal world model 🌍, Dynalang understands task prompts, corrective feedback, simple manuals, hints about out of view objects, and more

**Instruction-Tuned Llama 2: Comprehensive Guide & Code** 🚀 Dive into the incredible potential of instruction-tuning Llama 2 with this comprehensive step-by-step guide, complete with code examples. 📚💻 The extended guide covers the following key aspects: 📝✅ 1. Define the…

I'm calling out the Myth of Context Length: Don't get too excited by claims of 1M or even 1B context tokens. You know what, LSTMs already achieved infinite context length 25 yrs ago! What truly matters is how well the model actually uses the context. It's easy to make seemingly wild…

Congrats to the authors of A Generalist Agent (i.e., the Gato paper, openreview.net/forum?id=1ikK0…) for receiving @TmlrOrg's first Outstanding Certification (Best Paper Award)! @DeepMind Blog post from me & the rest of the outstanding paper selection committee: medium.com/@TmlrOrg/3a283…

Another good piece of work investigating the self-debugging capability of coding LLMs. Even without GPT-4, the base model code-davinci-002 can be coerced into performing self-repair as well. Authors: @xinyun_chen_, Maxwell Lin, Nathanael Scharli, @denny_zhou

New preprint: Teach LLMs to self-debug! (arxiv.org/abs/2304.05128) With few-shot demonstrations, LLMs can perform rubber duck debugging: without error messages, they can identify bugs by explaining the predicted code. SOTA on several code generation benchmarks using code-davinci-002.

The art of programming is interactive. Why should coding benchmarks be "seq2seq"? Thrilled to present 🔄InterCode, a next-gen framework that treats coding tasks as standard RL tasks (action=code, observation=execution feedback). Paper, code, data, pip: intercode-benchmark.github.io (1/7)
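The "action=code, observation=execution feedback" framing can be sketched as a tiny RL-style environment. This is a hypothetical toy, not InterCode's actual API: the class name `ToyCodeEnv`, the exact-match reward against an `expected_output` string, and the step limit are all assumptions made for illustration. Each `step` executes a code string in a namespace that persists across steps, and returns the captured output (or the error message) as the observation.

```python
import contextlib
import io
from dataclasses import dataclass, field


@dataclass
class ToyCodeEnv:
    """Hypothetical interactive coding environment (not InterCode's API)."""
    expected_output: str
    max_steps: int = 5
    steps: int = 0
    namespace: dict = field(default_factory=dict)

    def reset(self) -> str:
        self.steps = 0
        self.namespace = {}  # fresh interpreter state per episode
        return ""  # empty initial observation

    def step(self, action: str) -> tuple[str, float, bool]:
        """Execute `action` (code) and return (observation, reward, done)."""
        self.steps += 1
        buffer = io.StringIO()
        try:
            # No sandboxing: never run untrusted code like this.
            with contextlib.redirect_stdout(buffer):
                exec(action, self.namespace)
            observation = buffer.getvalue()
        except Exception as exc:  # errors become feedback, not crashes
            observation = f"{type(exc).__name__}: {exc}"
        reward = 1.0 if observation.strip() == self.expected_output else 0.0
        done = reward == 1.0 or self.steps >= self.max_steps
        return observation, reward, done


env = ToyCodeEnv(expected_output="6")
env.reset()
print(env.step("print(sum([1, 2, 3))"))  # SyntaxError feedback, reward 0.0
env.step("x = [1, 2, 3]")                # state persists between steps
print(env.step("print(sum(x))"))         # ('6\n', 1.0, True)
```

Because the namespace persists, an agent can fix a bug in a later step using the error text from an earlier one, which is the multi-turn behavior a single-shot "seq2seq" benchmark cannot capture.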

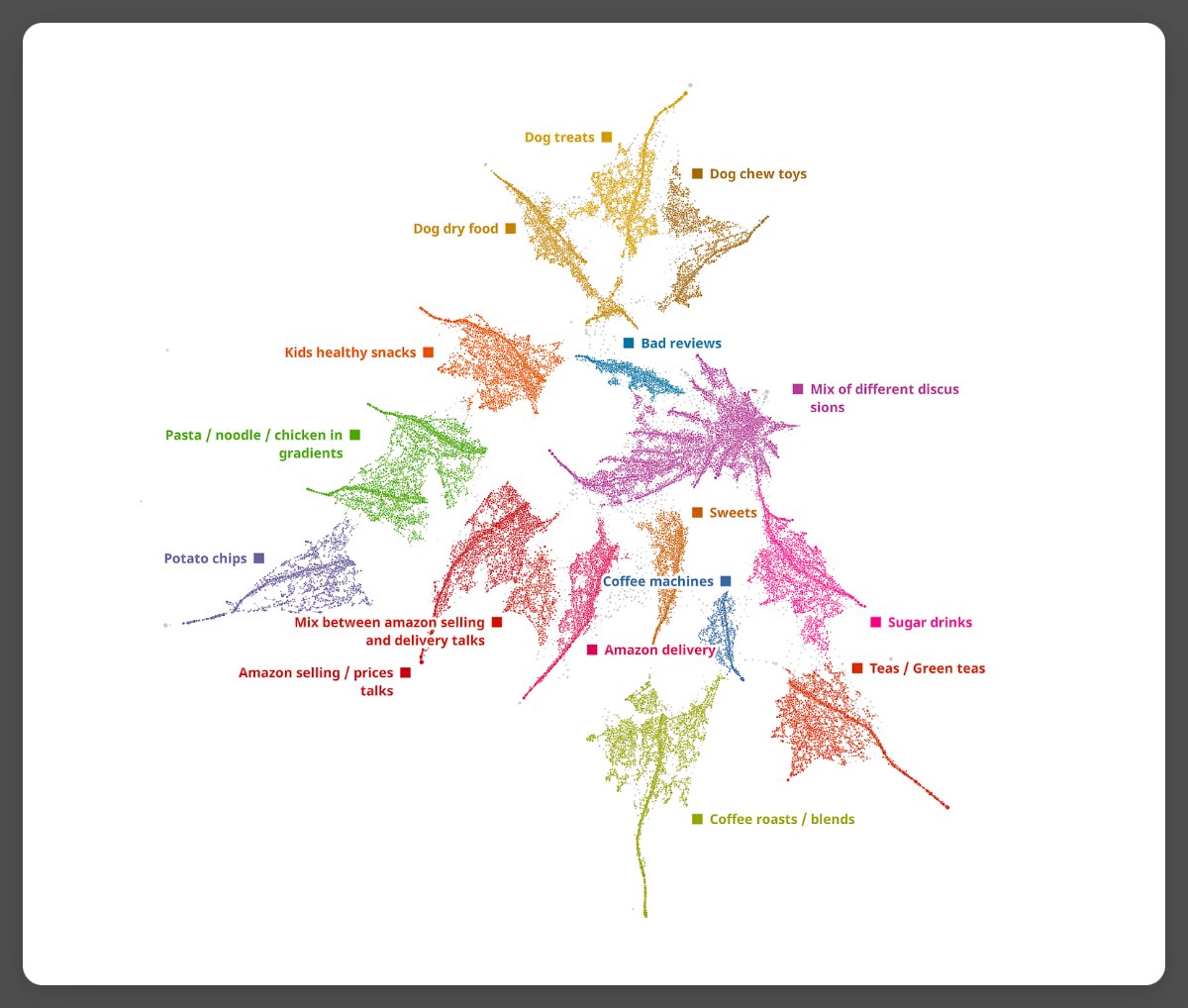

Another deep learning breakthrough: Deep TDA, a new algorithm using self-supervised learning, overcomes the limitations of traditional dimensionality reduction algorithms. t-SNE and UMAP have long been the favorites. Deep TDA might change that forever. Here are the details:

One of the earliest autonomous agents I worked on @OpenAI was a browser agent that learned to navigate and interact with websites through keyboard & mouse. It was called World of Bits, and way ahead of its time because LLMs didn't exist 6 yrs ago. RL from scratch simply didn't…

United States trends

1. phil (74K posts)
2. phan (73.5K posts)
3. Columbus (217K posts)
4. President Trump (1.26M posts)
5. Doug Eddings (N/A)
6. Kincaid (1,463 posts)
7. Middle East (318K posts)
8. Springer (11.1K posts)
9. Falcons (12.9K posts)
10. Gilbert (9,510 posts)
11. Yesavage (3,191 posts)
12. Mike McCoy (N/A)
13. Martin Sheen (1,283 posts)
14. Thanksgiving (60K posts)
15. Monday Night Football (6,504 posts)
16. John Oliver (9,037 posts)
17. #LGRW (1,995 posts)
18. Indigenous (132K posts)
19. Macron (243K posts)
20. Brian Callahan (13K posts)
