Jim Fan

@DrJimFan

NVIDIA Director of Robotics & Distinguished Scientist. Co-Lead of GEAR lab. Solving Physical AGI, one motor at a time. Stanford Ph.D. OpenAI's 1st intern.


I've been a bit quiet on X recently. The past year has been a transformational experience. Grok-4 and Kimi K2 are awesome, but the world of robotics is a wondrous wild west. It feels like NLP in 2018 when GPT-1 was published, along with BERT and a thousand other flowers that…

Going to NeurIPS in San Diego! Available for coffee starting tomorrow afternoon. We are recruiting heavily for talent across robotics, VLM, world models, and software infra! DM me or email (on my very outdated home page).

Listening to Jensen talk about his favorite maths - specs of Vera Rubin chips, and the full stack from lithography to robot fleets assembling physical fabs in Arizona & Houston. Quoting Jensen, “these factories are basically robots themselves”. I visited NVIDIA facilities…

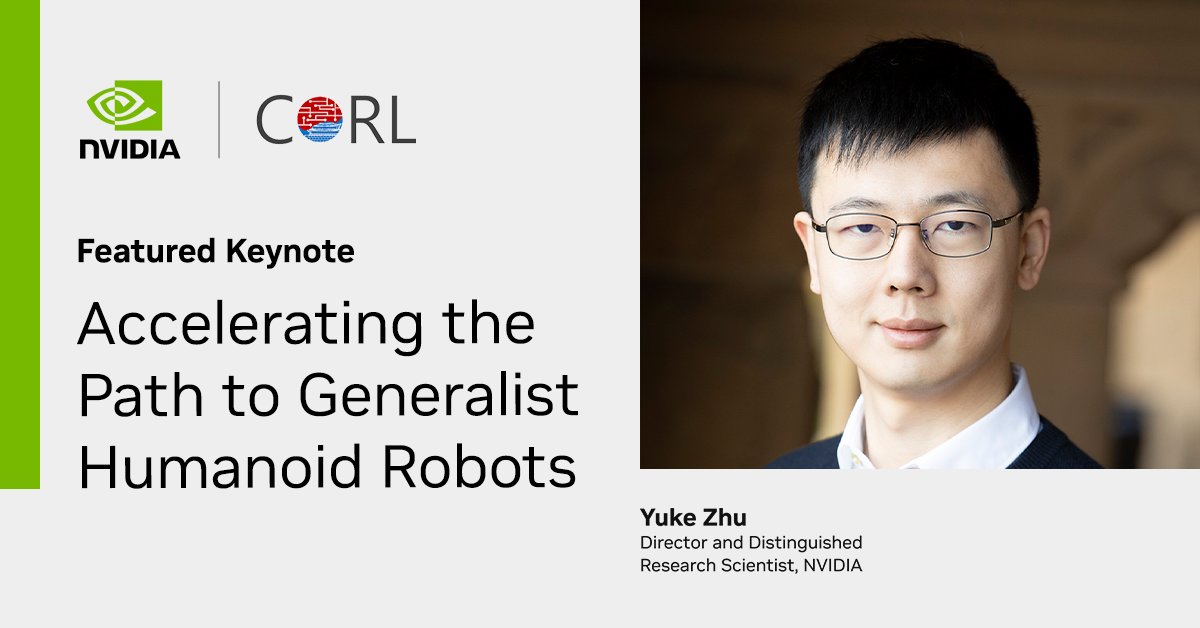

Go check out @yukez’s talk at CoRL! Project GR00T is cooking 🍳

The rise of humanoid platforms presents new opportunities and unique challenges. 🤖 Join @yukez at #CoRL2025 as he shares the latest research on robot foundation models and presents new updates with the #NVIDIAIsaac GR00T platform. Learn more 👉nvda.ws/4gdfBYY

There was something deeply satisfying about ImageNet. It had a well curated training set. A clearly defined testing protocol. A competition that rallied the best researchers. And a leaderboard that spawned ResNets and ViTs, and ultimately changed the field for good. Then NLP…

(1/N) How close are we to enabling robots to solve the long-horizon, complex tasks that matter in everyday life? 🚨 We are thrilled to invite you to join the 1st BEHAVIOR Challenge @NeurIPS 2025, submission deadline: 11/15. 🏆 Prizes: 🥇 $1,000 🥈 $500 🥉 $300

Would love to see the FSD Scaling Law, as it’s the only physical data flywheel at planetary scale. What’s the “emergent ability threshold” for model/data size?

This may be a testament to the “Reasoning Core Hypothesis” - reasoning itself only needs a minimal level of linguistic competency, instead of giant knowledge bases in 100Bs of MoE parameters. It also plays well with Andrej’s LLM OS - a processor that’s as lightweight and fast as…

🚀 Introducing Qwen3-4B-Instruct-2507 & Qwen3-4B-Thinking-2507 — smarter, sharper, and 256K-ready! 🔹 Instruct: Boosted general skills, multilingual coverage, and long-context instruction following. 🔹 Thinking: Advanced reasoning in logic, math, science & code — built for…

World modeling for robotics is incredibly hard because (1) control of humanoid robots & 5-finger hands is wayyy harder than ⬆️⬅️⬇️➡️ in games (Genie 3); and (2) object interaction is much more diverse than FSD, which needs to *avoid* coming into contact. Our GR00T Dreams work was…

What if robots could dream inside a video generative model? Introducing DreamGen, a new engine that scales up robot learning not with fleets of human operators, but with digital dreams in pixels. DreamGen produces massive volumes of neural trajectories - photorealistic robot…

Evaluation is the hardest problem for physical AI systems: do you crash test cars every time you debug a new FSD build? Traditional game engine (sim 1.0) is an alternative, but it's not possible to hard-code all edge cases. A neural net-based sim 2.0 is purely programmed by data,…

This is game engine 2.0. Some day, all the complexity of UE5 will be absorbed by a data-driven blob of attention weights. Those weights take as input game controller commands and directly animate a spacetime chunk of pixels. Agrim and I were close friends and coauthors back at…

Introducing Genie 3, our state-of-the-art world model that generates interactive worlds from text, enabling real-time interaction at 24 fps with minutes-long consistency at 720p. 🧵👇

No em dash should be baked into pretraining, post-training, alignment, system prompt, and every nook and cranny in an LLM’s lifecycle. It needs to be hardwired into the kernel, identity, and very being of the model.

Shengjia is one of the brightest, humblest, and most passionate scientists I know. We went to PhD together for 5 yrs, sitting across the hall at Stanford Gates building. Good old times. I didn’t expect this, but not at all surprised either. Very bullish on MSL!

We're excited to have @shengjia_zhao at the helm as Chief Scientist of Meta Superintelligence Labs. Big things are coming! 🚀 See Mark's post: threads.com/@zuck/post/DMi…

I'm observing a mini Moravec's paradox within robotics: gymnastics that are difficult for humans are much easier for robots than "unsexy" tasks like cooking, cleaning, and assembling. It leads to a cognitive dissonance for people outside the field, "so, robots can parkour &…

My bar for AGI is far simpler: an AI cooking a nice dinner at anyone’s house for any cuisine. The Physical Turing Test is very likely harder than the Nobel Prize. Moravec’s paradox will continue to haunt us, looming larger and darker, for the decade to come.

My bar for AGI is an AI winning a Nobel Prize for a new theory it originated.
