Sathvik

@sathvikn4

computational psycholinguistics @ umd, nsf grfp fellow | he/him | fmr: cognitive & computer science @ uc berkeley | also on bluesky (sathvik@)


I’ll be presenting two posters at #EMNLP2024! 1⃣ LMs’ Sensitivity to Argument Roles - Session 2 and @BlackboxNLP (with @ekrosalee) 2⃣ LMs’ Generalizations Across Filler-Gap Dependencies - @conll_conf Reach out if you want to chat compling/cogsci! (papers in 🧵)

If I spill the tea—“Did you know Sue, Max’s gf, was a tennis champ?”—but then if you reply “They’re dating?” I’d be puzzled since that’s not the main point! Humans can track what’s ‘at issue’ in conversation. How sensitive are LMs to this distinction? New paper w/ @kanishkamisra

Excited to present our work, "Disability Across Cultures: A Human-Centered Audit of Ableism in Western and Indic LLMs" at ACM/AAAI #AIES this week in Madrid, Spain! 🇪🇸 Paper Link: arxiv.org/abs/2507.16130 Looking forward to meeting new folks, please reach out! ☕ (1/3)

I am hiring a PhD student to start my lab at @ucl! Get in touch if you have any questions, the deadline to apply through ELLIS is 31 October. More details🧵

All of us (@kmahowald, @kanishkamisra and me) are looking for PhD students this cycle! If computational linguistics/NLP is your passion, join us at UT Austin! For my areas see jessyli.com

The compling group at UT Austin (sites.utexas.edu/compling/) is looking for PhD students! Come join me, @kmahowald, and @jessyjli as we tackle interesting research questions at the intersection of ling, cogsci, and ai! Some topics I am particularly interested in:

It is PhD application season again 🍂 For those looking to do a PhD in AI, these are some useful resources 🤖: 1. Examples of statements of purpose (SOPs) for computer science PhD programs: cs-sop.org [1/4]

Computational cognitive science has always felt like an enigma—math, philosophy, psychology, all at once. TAing Intro to CogSci made me want to share material that gets non-experts hooked on its beauty & interdisciplinarity. Here are some videos I’ve found especially useful. 🧵

How do AI models reason in high-stakes decisions like college admissions? We find that Large Language Models can display equity-oriented patterns, but also volatile reasoning that underscores the need for transparency, fairness, and accountability. (1/n) Link: arxiv.org/abs/2509.16400

After a very longggg hiatus, I am back to announce that a paper with @rachelrudinger, @haldaume3, and John Prindle has been accepted to the #EMNLP2025 Main conference. Stay tuned for details 🫶😍㊗️㊗️🚀...

Seems to me the "Findings of *ACL" decision (made 5 years ago) was really wise and visionary compared to the "had to reject because of venue limit" thing.

@davidpoeppel receiving the well-deserved @SNLmtg Distinguished Career Award. David has had a lasting and deep impact on the field and on his many successful trainees. David is also known for his enjoinders to engage positively with the world. #SNL2025

New preprint investigating whether lossy-context surprisal can account for the locality and expectation effects found in Russian, Hindi, and Persian reading data: osf.io/preprints/psya…

How I wish I could be at SNL in DC! If you are there, please do check in on my University of Maryland folks (tons of fun stuff happening over there), and have some soft-shell crabs 🦀️on my behalf! xD linguistics.umd.edu/news/maryland-…

If anyone is around for SNL down the block from me, I have a sandbox poster Sunday! I present some very preliminary data examining cued sentence recall with MEG. If you care about sentence recall, MEG sentence production, or combining psycholing+memory tasks, stop by poster E19!

A paper with Vic Ferreira and Norvin Richards is now out in JML! (1) Speakers syntactically encode zero complementizers as cognitively active mental objects. (2) There is no evidence that LLMs capture cross-constructional generalizations about zero complementizers. nam10.safelinks.protection.outlook.com/?url=https%3A%…

Friends at Cogsci, I’ll be in SF tomorrow and would love to catch up if you’re there! I should be around from lunch onwards, my DMs/texts/emails are open.

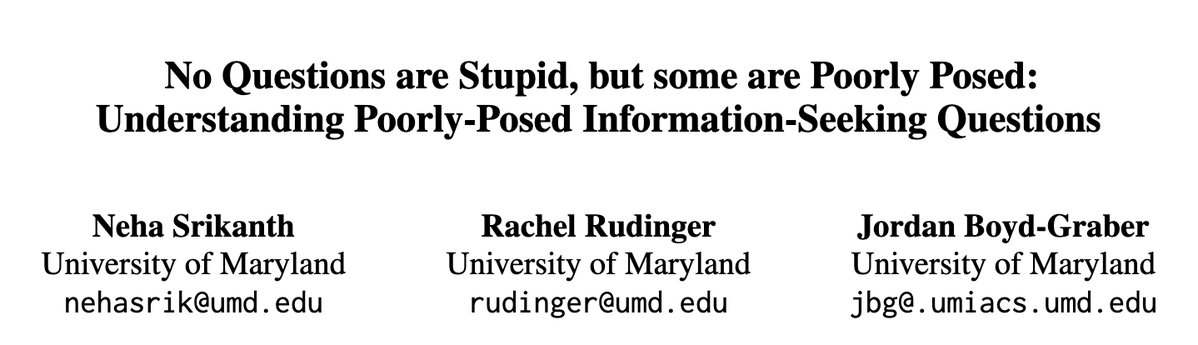

When questions are poorly posed, how do humans vs. models handle them? Our #ACL2025 paper explores this + introduces a framework for detecting and analyzing poorly-posed information-seeking questions! Joint work with @boydgraber & @rachelrudinger! 🔗 aclanthology.org/2025.acl-long.…

Slept in after last night’s social? You can still catch my poster from 11-12:30 today!

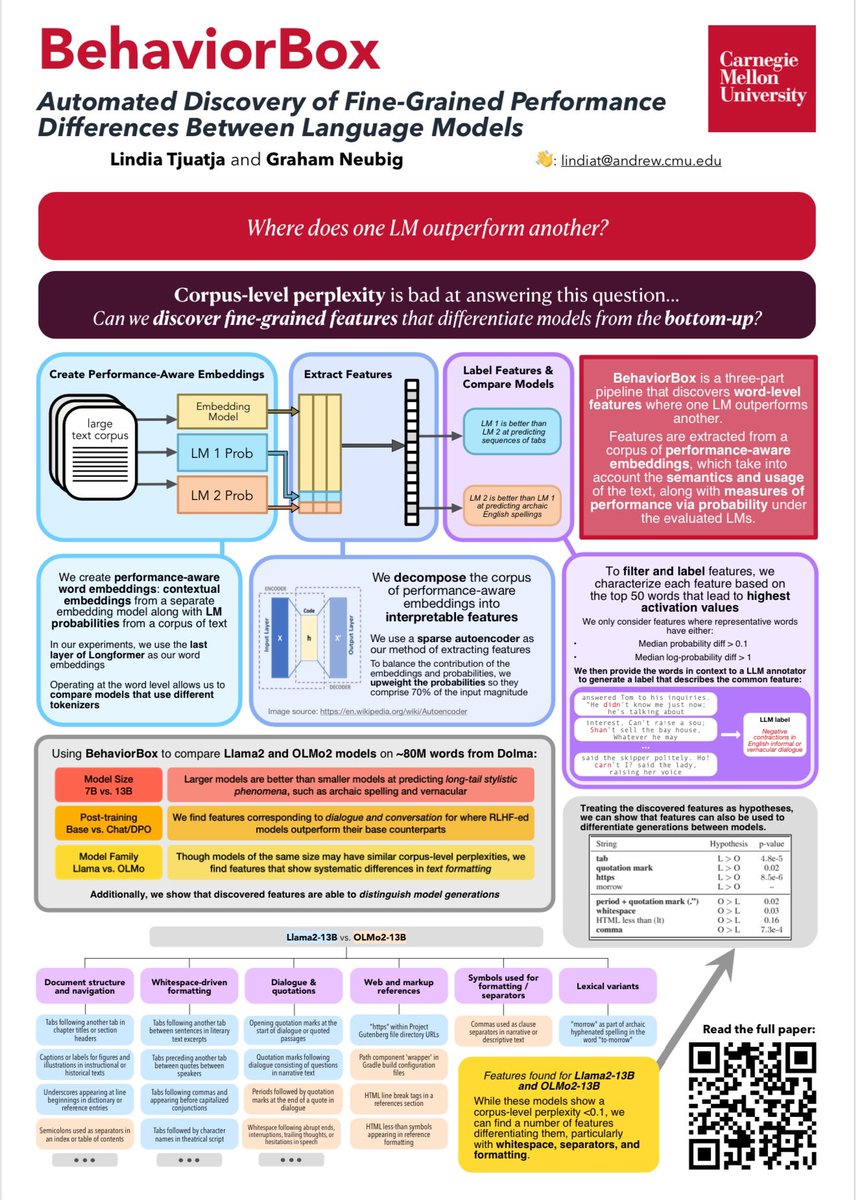

When it comes to text prediction, where does one LM outperform another? If you've ever worked on LM evals, you know this question is a lot more complex than it seems. In our new #acl2025 paper, we developed a method to find fine-grained differences between LMs: 🧵1/9

I am delighted to share our new #PNAS paper, with Gaurav Kamath, @mjieyang @sivareddyg and @masonderegger, looking at whether age matters for the adoption of new meanings. That is, as words change meaning, does the rate of adoption vary across generations? pnas.org/doi/10.1073/pn…

Linguistic theory tells us that common ground is essential to conversational success. But to what extent is it essential? Can LLMs detect when humans lose common ground in conversation? Our ACL 2025 (Oral) paper explores these questions on real-world data. #ACL2025NLP #ACL2025

I will unfortunately have to skip SCiL this year, but I am thrilled to share that Jwalanthi will be presenting this work by her, @Robro612, me, and @kmahowald on a tool that allows you to project contextualized embeddings from LMs to interpretable semantic spaces!

To be presented at ACL 2025: Large Language Models Are Biased Because They Are Large Language Models. Article: doi.org/10.1162/coli_a… Short (8min) video: youtube.com/watch?v=WLSuhe… #ACL2025NLP #NLProc #LLMs



