Saurabh Shah

@saurabh_shah2

training olmos @allen_ai prev @Apple @Penn 🎤dabbler of things🎸 🐈⬛enjoyer of cats 🐈 and mountains🏔️he/him


Ripped a good one post Olmo release

The thought of Tyler having to ask for an interview is hilarious to me. Instead top labs should be asking Tyler if it’s ok to interview him. (PLEASE DONT POACH HIM TY)

Olmo 3 afterglow - want to share how I came to join Ai2 to encourage others interested in LLM research. I found it difficult to break into the field as someone who does not hold a PhD. Research roles at top labs are highly competitive and I didn’t have professional experience…

Going to be calling my professor friends to make sure they admit Saumya. They're going to be thanking me and asking for advice on how to convince her to go to their school.

Olmo 3 is out!!!! It was so much fun working on post-training. Loved seeing this come together with the best team!!!!

sure I can. On slack I just have to @ @hamishivi and @finbarrtimbers and explain in natural language the problem and then the fire gets put out

someone asked fei fei about intelligence llms vs. world models yesterday and she was like can you put out a fire with natural language i -

alright, @PrimeIntellect @arcee_ai @datologyai your turn now. we're waiting. Let it rip 🫡

yes pls! full transparency (that's kinda what we do): there was a lot to organize for the Olmo 3 release. We were working down to the wire, and we're far from perfect. If you have any q's pls reach out (twitter is good, email is great) and we'll be on it. team is pretty…

yay thanks so much 🥰 feedback on stuff we missed or asks for more info very much welcome!

release and keep going done this twice this week ;)

Which previous setups Michael. Which ones 🤔

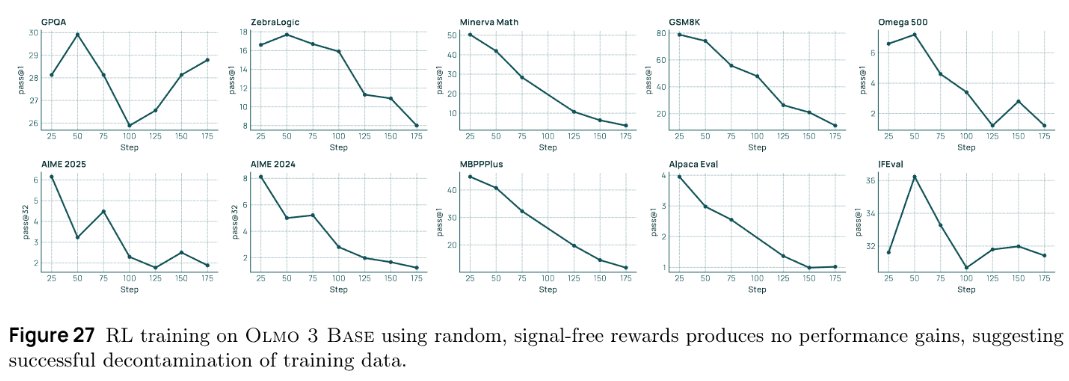

Because Olmo 3 is fully open, we decontaminate our evals from our pretraining and midtraining data. @StellaLisy proves this with spurious rewards: RL trained on a random reward signal can't improve on the evals, unlike some previous setups
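To make the spurious-rewards check above concrete, here is a minimal sketch of a reward that ignores the completion entirely, compared with an exact-match reward. Everything in it is an assumption for illustration (the function names, the toy completions, the 50/50 coin flip); it is not the Olmo 3 setup, only a picture of why a random reward carries no information about correctness.

```python
import random


def spurious_reward(completion: str, rng: random.Random) -> float:
    """Hypothetical spurious reward: a coin flip that never looks at the completion."""
    return 1.0 if rng.random() < 0.5 else 0.0


def exact_match_reward(completion: str, reference: str) -> float:
    """Hypothetical verifiable reward: 1.0 only when the answer matches the reference."""
    return 1.0 if completion.strip() == reference.strip() else 0.0


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


rng = random.Random(0)
reference = "42"

# Toy completions: half are correct, half are wrong.
completions = ["42" if i % 2 == 0 else "41" for i in range(10_000)]
correct = [c for c in completions if exact_match_reward(c, reference) == 1.0]
wrong = [c for c in completions if exact_match_reward(c, reference) == 0.0]

# Gap in average reward between correct and wrong completions.
gap_spurious = mean([spurious_reward(c, rng) for c in correct]) - mean(
    [spurious_reward(c, rng) for c in wrong]
)
gap_verifiable = mean([exact_match_reward(c, reference) for c in correct]) - mean(
    [exact_match_reward(c, reference) for c in wrong]
)

print(f"spurious gap:   {gap_spurious:+.3f}")
print(f"verifiable gap: {gap_verifiable:+.3f}")
```

The spurious gap hovers near zero while the verifiable gap is exactly 1, so any eval gain from training on the coin-flip reward would have to come from somewhere other than the reward signal.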

Yes @ profs this is a not-so-inside scoop 👇 @saumyamalik44 and @heinemandavidj are applying to PhDs this cycle, which is kinda funny bc they’re already operating at senior PhD levels 💪 You’re rly gonna want them in your lab

and if you happen to be a professor hiring students this cycle, keep an eye out for @saumyamalik44 and @heinemandavidj's applications, I think you'll have fierce competition...

Everyone seems to be reading the Olmo 3 paper today. Seems pretty cool. Maybe I'll read it at some point. Probably not though

yes, definitely crashed out exactly 0 times to Kat during release week...😅

Congrats Olmo team!! Good job @saurabh_shah2 for not breaking down even one single time. Everyone else go read his blog post

Oh yeah. Costa still secretly works at Ai2 btw. Don't tell @LiamFedus

All this work is with the great peeps at @allen_ai who put in a lot of work including putting up with the weird memes I post in slack, #1 manager @natolambert, @finbarrtimbers @saurabh_shah2 @hamishivi @HannaHajishirzi and even @vwxyzjn who advised behind the scenes

check out the RLZero section of the paper and our RLZero artifacts!! Michael did an awesome job leading this, and it was tons of fun working with him to try to RL some ~high entropy~ models

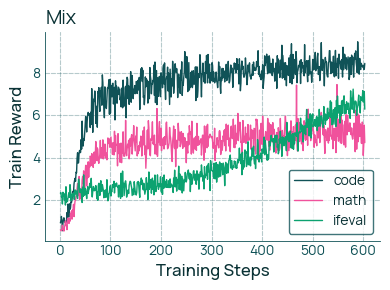

It's also a great setup for multi-objective RL! @saurabh_shah2 and I created four data domains: math, code, instruction-following, and general chat, so you can study their interaction during RL finetuning

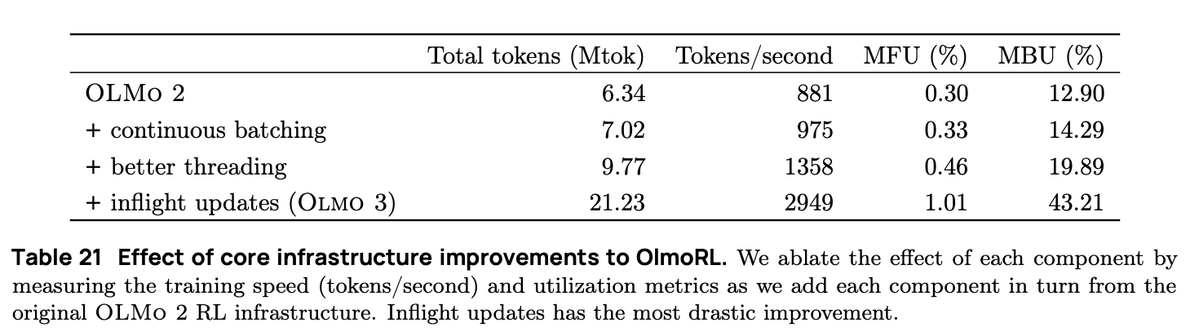

i was sooo friggin giddy every time @finbarrtimbers, @mnoukhov or @hamishivi impl a new RL optimization. In some settings some of these changes were literally a 4x wall-clock time speedup (shoutout inflight updates a.k.a. pipelineRL). Ty for your service, Finbarr

The OlmoRL infrastructure was 4x faster than Olmo 2 and made it much cheaper to run experiments. Some of the changes: 1. continuous batching 2. in-flight updates 3. active sampling 4. many many improvements to our multi-threading code
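One item from that list, active sampling, can be sketched roughly as follows. This is a hedged illustration only: it assumes "active sampling" means continuing to pull completion groups until the batch is full of groups whose rewards are not all identical (so a group-relative advantage would be non-zero). That reading, and every name below, is an assumption rather than the OlmoRL implementation.

```python
from typing import Iterable, List


def has_learning_signal(rewards: List[float]) -> bool:
    """A group is useful only if its rewards differ (assumed filtering criterion)."""
    return len(set(rewards)) > 1


def active_sample(group_stream: Iterable[List[float]], batch_size: int) -> List[List[float]]:
    """Keep consuming reward groups until `batch_size` useful groups are collected.

    `group_stream` is a hypothetical stream of per-prompt reward lists, one
    list per prompt with one reward per sampled completion.
    """
    batch: List[List[float]] = []
    for rewards in group_stream:
        if has_learning_signal(rewards):
            batch.append(rewards)
        if len(batch) == batch_size:
            break
    return batch


# Toy stream: all-correct and all-wrong groups are skipped, mixed groups are kept.
stream = iter([
    [1.0, 1.0, 1.0, 1.0],
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 1.0],
])
batch = active_sample(stream, batch_size=2)
print(batch)
```

Under this assumption the batch size stays constant even when many prompts are too easy or too hard, instead of shrinking after filtering.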

No @hamishivi No RL.

Did a bunch on the RL & eval side for this guy! Worked mostly on think RL data, training, eval. look out for the doki doki literature club links in the technical report - lots of war stories from this release👀

Alright Google go ahead drop the big banana or whatever I don't care Olmoo 3 is out let's gooooooo

I knew I shouldn't have let Luca change the chat templates...
