nai

@src_term

src/term research — Machine Learning x Computational Fluids — Gradients are all you need

Gonna build a fast, GPU-native aeroacoustics solver. Probably in JAX, might be PyTorch-based, but JAX's jit is much better imo, let's see…

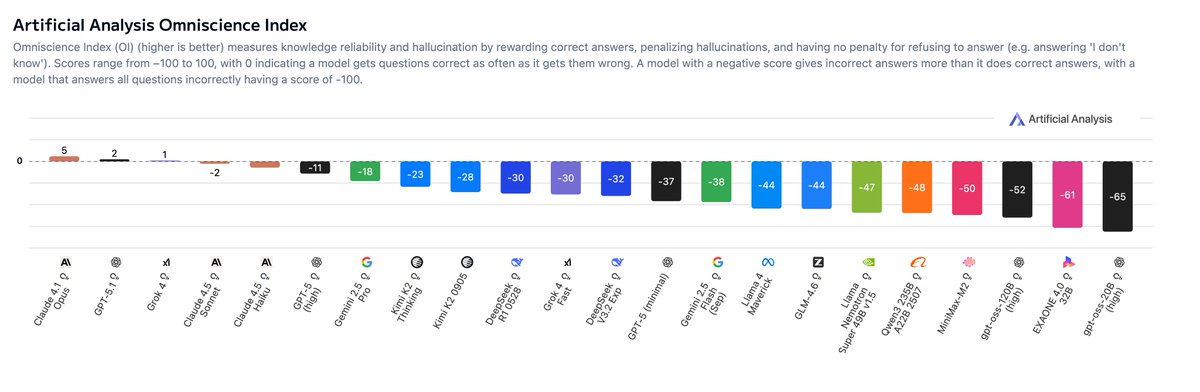

Announcing AA-Omniscience, our new benchmark for knowledge and hallucination across >40 topics, where all but three models are more likely to hallucinate than give a correct answer. Embedded knowledge in language models is important for many real world use cases. Without…

Excited to announce our partnership with Hill Climb - working on producing future models and work on difficult math domains! Stay tuned :)

hillclimb (@hillclimbai) is the human superintelligence community, dedicated to building golden datasets for AGI. Starting with math, their team of IMO medalists, Lean experts, and PhDs is designing RL environments for @NousResearch.

It's SOTA, not only open weights SOTA :)

MoonshotAI has released Kimi K2 Thinking, a new reasoning variant of Kimi K2 that achieves #1 in the Tau2 Bench Telecom agentic benchmark and is potentially the new leading open weights model. Kimi K2 Thinking is one of the largest open weights models ever, at 1T total parameters…

ig the goal is to be rich enough that curiosity drives you instead of necessity.

pewdiepie is now fine-tuning his own LLM model. what the fuck.

The bottleneck for deep skill isn't usually intelligence, but boredom tolerance. Learning has an activation energy: below a certain skill threshold, practice is tedious, but above it, it becomes a self-sustaining flow state. The entire battle is persisting until that transition.

My first thought here: hardware acceleration for realtime, large-scale turbulence modeling and diffusion-based surrogates?

Thermodynamic Computing: From Zero to One. Read the blog here: extropic.ai/writing/thermo…

Interesting benchmark, also interesting to see that Gemini 2.5 Pro is still relatively SOTA and strong in physics

We built ProfBench to raise the bar for LLMs - literally. At @NVIDIA, we worked with domain experts to create a benchmark that goes far beyond trivia and short answers. ProfBench tests LLMs on complex, multi-step tasks that demand the kind of reasoning, synthesis, and clarity…

Agreed

You rarely solve hard problems in a flash of insight. It's more typically a slow, careful process of exploring a branching tree of possibilities. You must pause, backtrack, and weigh every alternative. You can't fully do this in your head, because your working memory is too…

Learning how to learn is the most important skill to acquire.
