111111

@sshmatrix__

Math. Physics. Code. Erdös number = 4. Hawking number = 2. engineer of @AIatMeta

I have been building on this track since 2022. This project 0xBOTS that I made 3 years ago will be part of our DePIN strategy on Stage 3. This is my life's work. I won't be quitting it. Even with only one finger left to code. #DeSci

On the heels of the update I shared on Llama yesterday, we’re also seeing Meta AI usage growing FAST with 185M weekly actives! 🚀

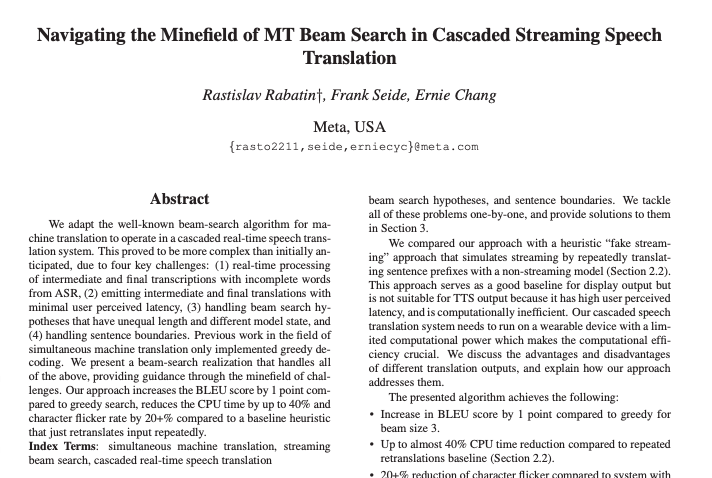

We're at #INTERSPEECH2024 — if you're on the ground in Greece this week, stop by our booth to explore SeamlessExpressive, MAGNeT, EMG and more with our research teams! 🔗 Following the conference from your feed? Here are links to 5️⃣ interesting papers we're presenting to add to

Upeo Labs is aiming to solve local challenges in Kenya using AI. Their Somo-GPT app is designed as a support tool for high school students, teachers and parents — built with Llama 3 & 3.1 ➡️ go.fb.me/ttcljg

On the latest episode of the Boz To The Future podcast, media artist and director, @RefikAnadol shared how his studio used Llama as part of "Large Nature Model: A Living Archive". Read more on the project and watch the whole conversation ➡️ go.fb.me/pfhg9i

We recently shared an update on the growth of Llama. Tl;dr: downloads are growing fast, our major cloud partners are seeing rapidly increasing usage of Llama on their platforms, and we're seeing great adoption across industries! Read the full update ➡️ go.fb.me/d01004

Fragmented regulation means the EU risks missing out on the rapid innovation happening in open source and multimodal AI. We're joining representatives from 25+ European companies, researchers and developers in calling for regulatory certainty ➡️ EUneedsAI.com

📣 Introducing Llama 3.2: Lightweight models for edge devices, vision models and more! What’s new? • Llama 3.2 1B & 3B models deliver state-of-the-art capabilities for their class for several on-device use cases — with support for @Arm, @MediaTek & @Qualcomm on day one. •

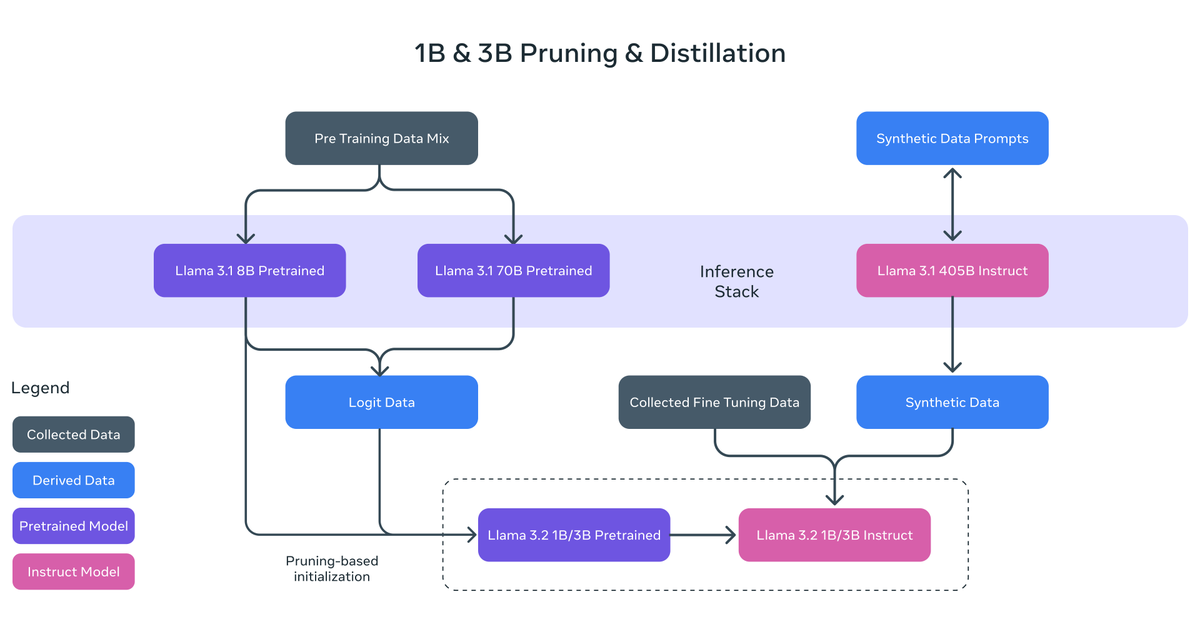

These lightweight Llama models were pretrained on up to 9 trillion tokens. One of the keys for Llama 1B & 3B however was using pruning & distillation to build smaller and more performant models informed by powerful teacher models. Pruning enabled us to reduce the size of extant
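For readers unfamiliar with distillation, here is a minimal sketch of the classic soft-target loss, in which a student model is trained to match a teacher's output distribution. It is illustrative only: the post does not say which loss Meta used, and the function names, the temperature value, and the toy logits below are all assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    This is the textbook soft-target formulation, used here as an
    assumption; it is not taken from the Llama 3.2 release.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return kl * temperature ** 2

# Hypothetical logits over a three-token vocabulary.
teacher = [4.0, 1.0, 0.5]
matched = distillation_loss([4.0, 1.0, 0.5], teacher)
mismatched = distillation_loss([0.5, 1.0, 4.0], teacher)
print(matched, mismatched)
```

A student that reproduces the teacher's logits gets a loss of zero, and the loss grows as the two distributions diverge, which is the signal a smaller model would be trained on.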

The lightweight Llama 3.2 models shipping today include support for @Arm, @MediaTek & @Qualcomm to enable the developer community to start building impactful mobile applications from day one.

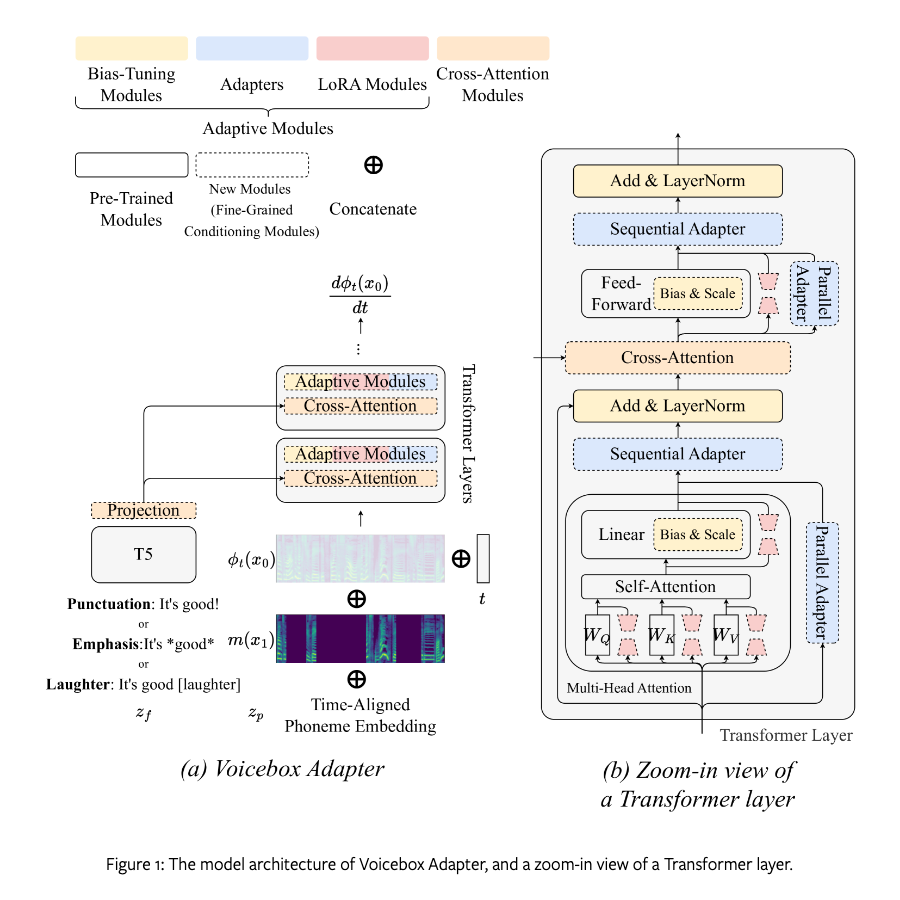

By training adapter weights without updating the language-model parameters, Llama 3.2 11B & 90B retain their text-only performance while outperforming closed models on image understanding tasks, enabling developers to use these new models as drop-in replacements for Llama 3.1.
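The idea of training adapter weights while leaving the base parameters untouched can be shown with a toy scalar model. This is only a sketch under stated assumptions: the single-weight "language model", the single-weight "adapter", the sample data, and the function name are all hypothetical and bear no relation to the actual Llama 3.2 architecture.

```python
def train_adapter(samples, base_weight, steps=200, lr=0.05):
    """Fit only the adapter weight with gradient descent on squared error.

    prediction = base_weight * text_x + adapter_weight * image_x
    base_weight is treated as frozen: it is read but never updated.
    """
    adapter_weight = 0.0
    for _ in range(steps):
        grad = 0.0
        for text_x, image_x, target in samples:
            pred = base_weight * text_x + adapter_weight * image_x
            # Gradient with respect to the adapter weight only.
            grad += 2.0 * (pred - target) * image_x
        adapter_weight -= lr * grad / len(samples)
    return base_weight, adapter_weight

# Hypothetical data generated from target = 2 * text_x + 3 * image_x.
samples = [(1.0, 1.0, 5.0), (2.0, 0.5, 5.5), (0.0, 2.0, 6.0)]
frozen, learned = train_adapter(samples, base_weight=2.0)
print(frozen, learned)
```

Because the base weight never changes, inputs with no image component (`image_x = 0`) produce exactly the same output before and after training, which is the toy analogue of retaining text-only performance.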

With Llama 3.2 we released our first-ever lightweight Llama models: 1B & 3B. These models empower developers to build personalized, on-device agentic applications with capabilities like summarization, tool use and RAG where data never leaves the device.

🙌 In collaboration with @AIatMeta, we are optimizing the🦙Llama 3.2 collection of open models with NVIDIA NIM microservices to accelerate flexible #AI experiences -- delivering high throughput and low latency across millions of GPUs worldwide -- from workstation computing, to

Ready to start working with our new lightweight and multimodal Llama 3.2 models? Here are a few new resources from Meta to help you get started. 🧵

Details on Llama 3.2 11B & 90B vision models — and the full collection of new Llama models ⬇️ x.com/AIatMeta/statu…

We’re on the ground at #ECCV2024 in Milan this week to showcase some of our latest research, new research artifacts and more. Here are 4️⃣ things you won’t want to miss from Meta FAIR, GenAI and Reality Labs Research this week whether you’re here in person or following from your

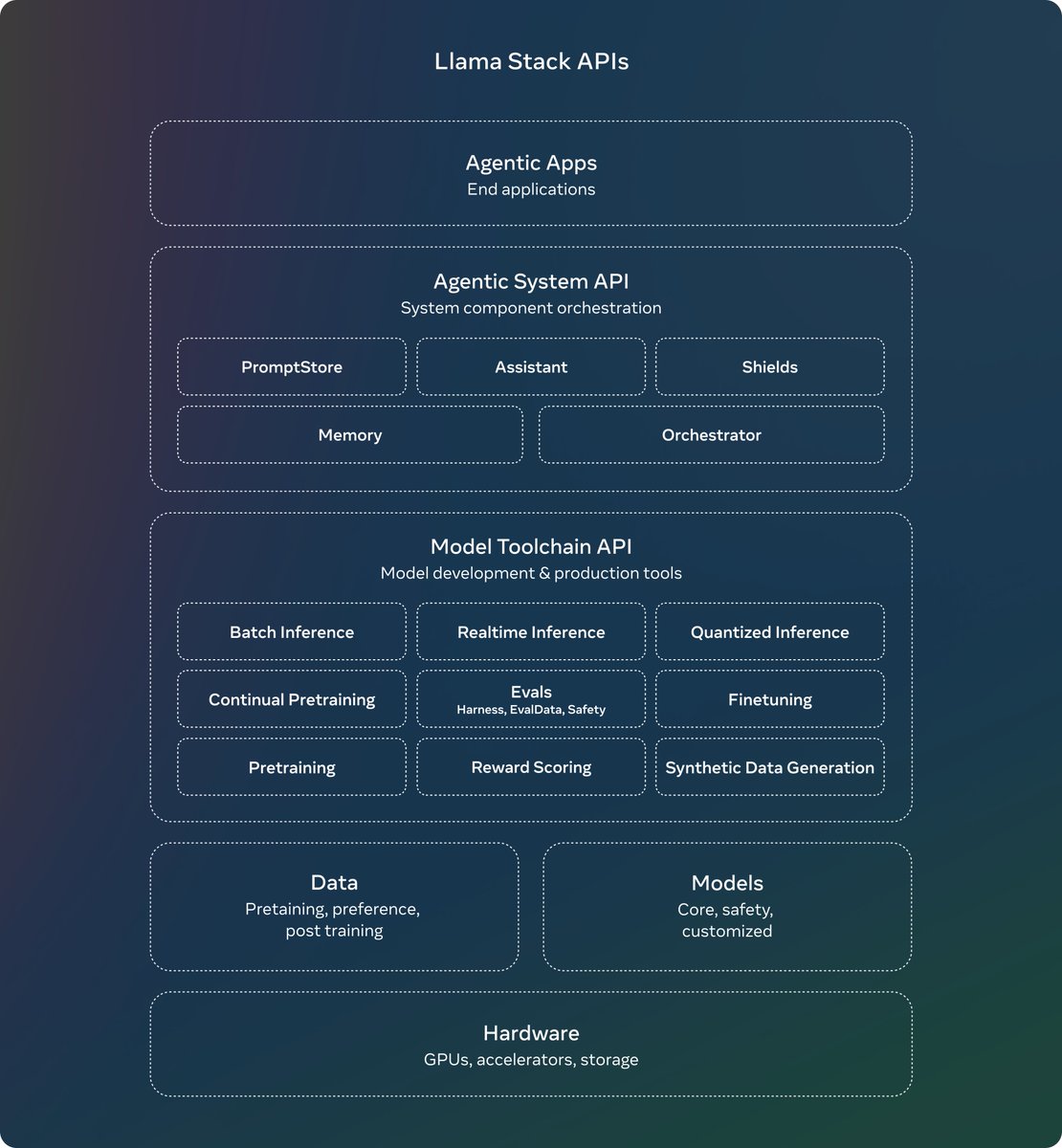

We’re excited to share the first official distribution of Llama Stack! It packages multiple API Providers into a single endpoint for developers to enable a simple, consistent experience to work with Llama models across a range of deployments. Details ➡️ go.fb.me/xfi7g3

6️⃣ ADen: Adaptive Density Representations for Sparse-view Camera Pose Estimation: go.fb.me/9brdhb

As part of our continued belief in open science and progressing the state-of-the-art in media generation, we’ve published more details on Movie Gen in a new research paper for the academic community ➡️ go.fb.me/toz71j

Interested in 3D object detection and egocentric vision? We open sourced a small yet challenging dataset called Aria Everyday Objects (AEO). We use it as one of the tasks for benchmarking a new class of Egocentric 3D Foundation Models we are working on: arxiv.org/abs/2406.10224
