Andy Keller

@t_andy_keller

Postdoctoral Fellow at The Kempner Institute at Harvard University -- Somewhere between Brains & Bits. PhD at UvA, Intern @ Apple MLR, Prev @ Intel AI & Nervana

Why do video models handle motion so poorly? It might be lack of motion equivariance. Very excited to introduce: Flow Equivariant RNNs (FERNNs), the first sequence models to respect symmetries over time. Paper: arxiv.org/abs/2507.14793 Blog: kempnerinstitute.harvard.edu/research/deepe… 1/🧵

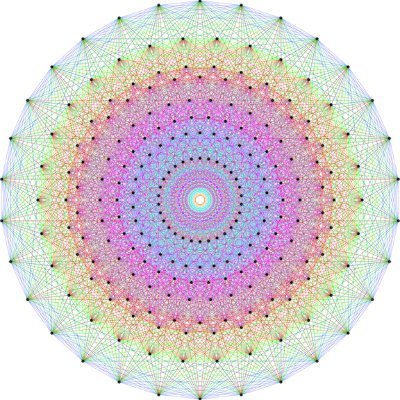

There’s lots of symmetry in neural networks! 🔍 We survey where they appear, how they shape loss landscapes and learning dynamics, and applications in optimization, weight space learning, and much more. ➡️ Symmetry in Neural Network Parameter Spaces arxiv.org/abs/2506.13018

Hidden Spirals Reveal Neurocomputational Mechanisms of Traveling Waves in Human Memory biorxiv.org/content/10.110… #biorxiv_neursci

Join us today (Friday, October 24) at 1:00pm ET for our next IAIFI Colloquium featuring @t_andy_keller (Harvard University Kempner Institute): Flow Equivariance: Enforcing Time-Parameterized Symmetries in Sequence Models. Watch on YouTube: youtube.com/@iaifiinstitut…

As promised after our great discussion, @chaitanyakjoshi! Your inspiring post led to our formal rejoinder: the Platonic Transformer. What if the "Equivariance vs. Scale" debate is a false premise? Our paper shows you can have both. 📄 Preprint: arxiv.org/abs/2510.03511 1/9

After a long hiatus, I've started blogging again! My first post was a difficult one to write, because I don't want to keep repeating what's already in papers. I tried to give some nuanced and (hopefully) fresh takes on equivariance and geometry in molecular modelling.

🕳️🐇Into the Rabbit Hull – Part II Continuing our interpretation of DINOv2, the second part of our study concerns the geometry of concepts and the synthesis of our findings toward a new representational phenomenology: the Minkowski Representation Hypothesis

🕳️🐇Into the Rabbit Hull – Part I (Part II tomorrow) An interpretability deep dive into DINOv2, one of vision’s most important foundation models. And today is Part I, buckle up, we're exploring some of its most charming features.

Appearing soon: State-space trajectories and traveling waves following distraction, J of Cog. Neuro., in press. A direct link between spiking patterns moving through subspace and traveling waves propagating across the cortex. Preprint: doi.org/10.1101/2024.0… #neuroscience

New preprint alert 🧠 Ever wondered how the cortex and hippocampus communicate during health and what changes during disease? With @DrBreaky and an amazing team, we built the first geometry-aware model of cortico-hippocampal interactions to answer this. [biorxiv.org/content/10.110…]

New paper on covariant #neuromorphic networks! We're connecting decades of work in computer vision with decades of work in spiking networks to present spatio-temporal spiking processing. nature.com/articles/s4146…

Incredibly rigorous and precise study of one of the most fundamental visual processing capabilities of deep feedforward neural networks, contour integration -- just great science. Kudos Fenil! 👏

🧵 Can a purely feedforward network — with no recurrence or lateral connections — capture human-like perceptual organization? 🤯 Yes! Especially for contour integration, and we pinpoint the key inductive biases. New paper in @PLOSCompBiol with @talia_konkle & @grez72! 1/24

Great thread! Very excited to see the 'more' that comes next!

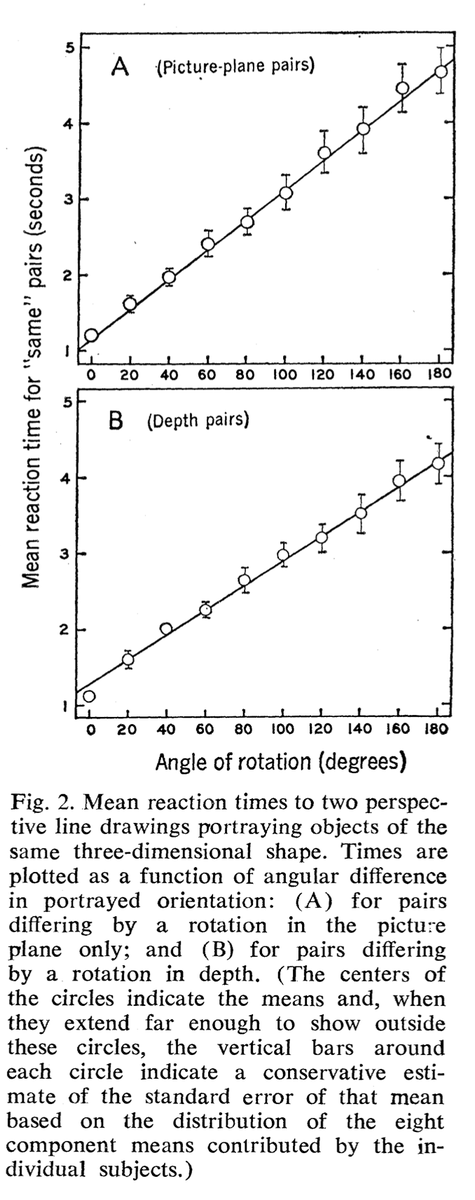

In a classic study of "mental rotation", Shepard and Metzler (1971) found that the time to compare two 3D cube-made objects was proportional to their angular difference. But *what is going on in the brain* during this process? 🔗 Metzler & Shepard (1971): semanticscholar.org/paper/Mental-R…

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text. In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech. Poster P6, Aug 19 at 13:30, Foyer 2.2!

Looking forward to seeing @mozesjacobs give a short talk on this at CCN (@CogCompNeuro) in ~30mins! Watch the recorded livestream below or come by poster C171 to say hi! Stream: hva-uva.cloud.panopto.eu/Panopto/Pages/…

In the physical world, almost all information is transmitted through traveling waves -- why should it be any different in your neural network? Super excited to share recent work with the brilliant @mozesjacobs: "Traveling Waves Integrate Spatial Information Through Time" 1/14

#KempnerInstitute research fellow @t_andy_keller and coauthors Yue Song, @wellingmax, and Nicu Sebe have a new book out that introduces a framework for developing equivariant #AI & #neuroscience models. Read more: kempnerinstitute.harvard.edu/news/kempner-r… #NeuroAI

Equivariance meets RNNs. An exciting research direction!

New in the #DeeperLearningBlog: #KempnerInstitute research fellow @t_andy_keller introduces the first flow equivariant neural networks, which reflect motion symmetries, greatly enhancing generalization and sequence modeling. bit.ly/451fQ48 #AI #NeuroAI
