UIUC NLP

@uiuc_nlp

Natural Language Processing research group at The University of Illinois Urbana-Champaign @IllinoisCS @UofIllinois

What if your policy could reason and think dynamically, especially about uncertainty, enabling better real-world behavior? ⚡️Introducing EBT-Policy, an instantiation of Energy-Based Transformers for Policies! TLDR: - EBT-Policy broadly outperforms Diffusion Policy in both…

Many of my students cannot attend EMNLP in person due to visa problems, but the super rising star Cheng Qian @qiancheng1231 will be there presenting multiple papers. Please drop by our posters and talk to him!

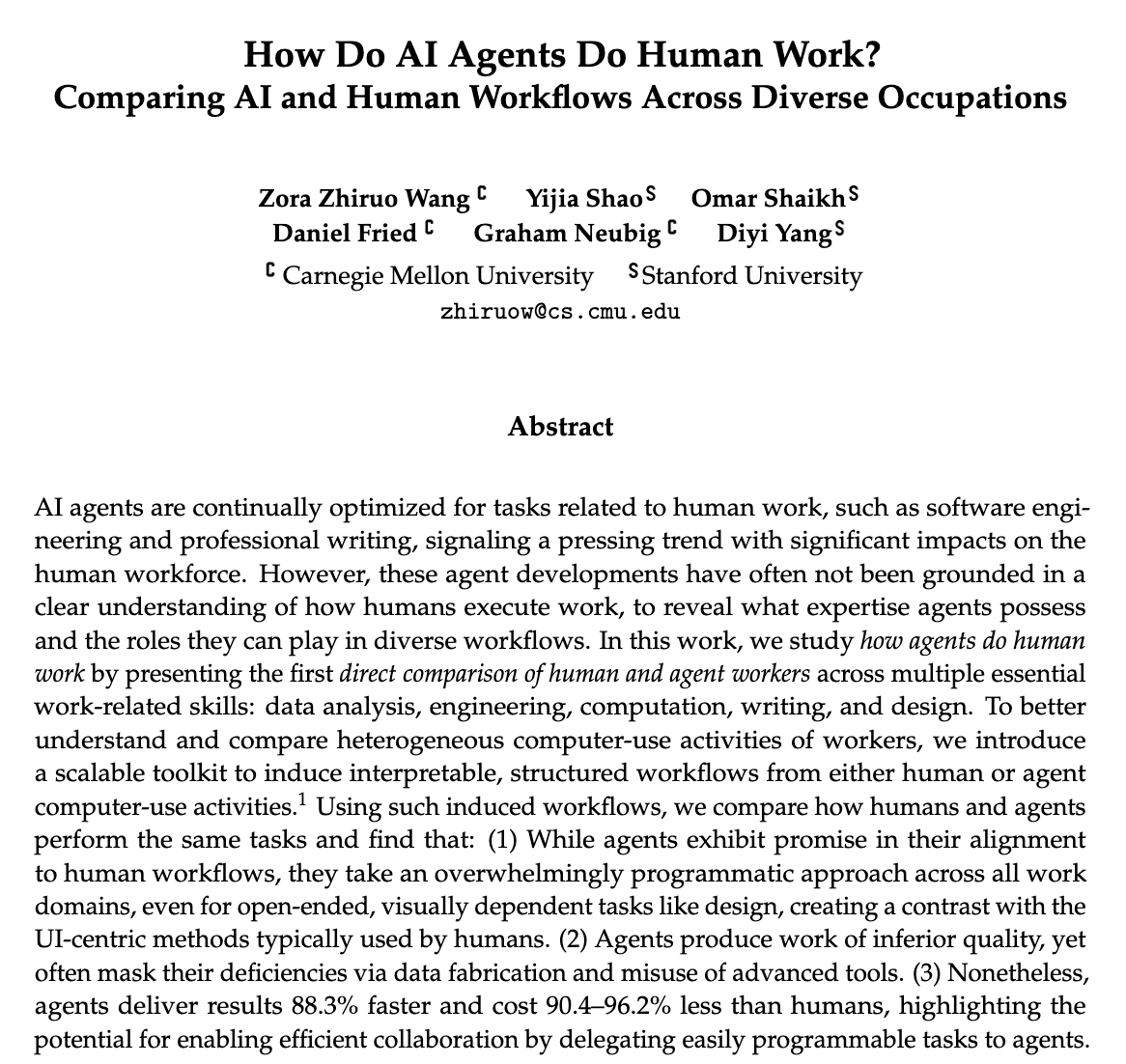

@ZhiruoW's research compares AI agents vs humans across real work tasks (data analysis, engineering, design, writing). Key findings: 👉Agents are 88% faster & 90-96% cheaper 👉BUT produce lower quality work, often fabricate data to mask limitations 👉Agents code everything,…

Agents are joining us at work -- coding, writing, design. But how do they actually work, especially compared to humans? Their workflows tell a different story: They code everything, slow down human flows, and deliver low-quality work fast. Yet when teamed with humans, they shine…

Today, we’re overjoyed to have a 25th Anniversary Reunion of @stanfordnlp. So happy to see so many of our former students back at @Stanford. And thanks to @StanfordHAI for the venue!

🤖💬AI agents can be easily persuaded (like Anthropic’s Claudius often giving discounts). 🤔Previous study on persuasion has been exclusively on text-only modality. We wonder: are AI agents more susceptible when presented with multimodal content? Introducing MMPersuade, a…

World Model Reasoning for VLM Agents (NeurIPS 2025, Score 5544) We release VAGEN to teach VLMs to build internal world models via visual state reasoning: - StateEstimation: what is the current state? - TransitionModeling: what is next? MDP → POMDP shift to handle the partial…

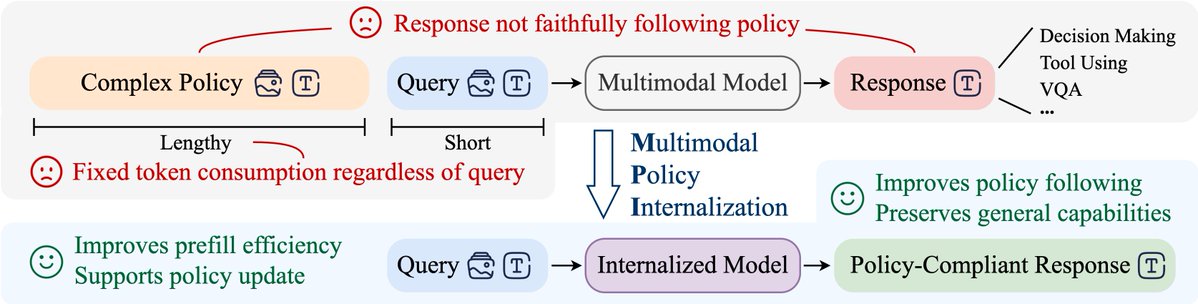

Multimodal conversational agents struggle to follow complex policies, which also impose a fixed computational cost. We ask: 👉 How can we achieve stronger policy-following behavior without having to include policies in-context? 🌐: mikewangwzhl.github.io/TriMPI/ 🧵1/3

Thrilled to announce that I'll be joining UIUC CS @siebelschool as an Assistant Professor in Spring 2026! 📢 I’m looking for Fall '26 PhD students who are interested in the intersection of Software Engineering and AI, especially in LLM4Code and Code Agents. Please drop me an…

🚀 Introducing BroRL: Scaling Reinforcement Learning via Broadened Exploration When step-scaling hits a plateau, scale rollouts, not steps. BroRL takes reinforcement learning beyond saturation—reviving stalled models by expanding exploration with large-N rollouts. 👇 (1/n)

🚨 New preprint out! 🚨 In "BAP v2: An Enhanced Task Framework for Instruction Following in Minecraft Dialogues", we work towards a core AI challenge: how can agents follow complex, conversational instructions in a dynamic 3D world? To this end, we introduce an enhanced task…

🚨 New paper alert at COLM 2025! 🚨 An interesting open problem for those into Sparse Autoencoders (SAEs): "Top-K activation constrains L0 (the number of non-zeros), but how do we obtain E[L0]?" This was even the very first limitation noted in @nabla_theta’s recent paper. (1/8)

Please note that #EMNLP2025 volunteer notifications have been sent. If you haven’t received yours, please check your spam folder or contact the chairs at [email protected] as some email addresses were entered incorrectly in the form

Very excited to see Tinker released by @thinkymachines! Even more thrilled that Search-R1 is featured as the tool-use application in Tinker’s recipe 👇 🔗 github.com/thinking-machi… When we first built Search-R1, we opened up everything—data, recipes, models, code, logs—and kept…

Introducing Tinker: a flexible API for fine-tuning language models. Write training loops in Python on your laptop; we'll run them on distributed GPUs. Private beta starts today. We can't wait to see what researchers and developers build with cutting-edge open models!…

*Human-Like* Creativity is perhaps the most out-of-reach task for modern LLMs I'm super excited to share our new work evaluating LLMs with a creativity framework! We develop a synthetic creativity task to measure LLMs' capabilities in generating novel, creative, combinations,…

1/N Large language models (LLMs) have been widely adopted for closed-ended tasks like reasoning, but can they truly be creative? 📚 Excited to announce our new work — Combinatorial Creativity: A New Frontier in Generalization Abilities. 📝 Paper arxiv.org/abs/2509.21043

Ever felt like your GUI agents are dragging their feet? 🧐The culprit? Crunching through endless streams of screenshots, especially in those marathon long-horizon tasks. Thrilled to unveil ⭐️ GUI-KV ⭐️— our plug-and-play powerhouse that taps into the spatial saliency within…

🚀 Introducing UserRL: a new framework to train agents that truly assist users through proactive interaction, not just chase static benchmarking scores. 📄 Paper: arxiv.org/pdf/2509.19736 💻 Code: github.com/SalesforceAIRe…

🧠 Get $20 to watch videos + share what sparks your curiosity! TIMAN Lab @ UIUC is studying how people seek info while watching content. 🖥️ 30–60 min Zoom session ✅ 18+, fluent in English 💸 $20 reward Sign up: docs.google.com/forms/d/1OcnrM… #PaidStudy #AI

Accepted as NeurIPS2025 Spotlight! Existing large multimodal models (LMMs) have very poor visual understanding and reasoning over part-level attributes and affordances (only 5.9% gIoU). We developed novel part-centric LMMs to address these challenges arxiv.org/pdf/2505.20759

Exciting growth ahead! 🚀 Eighteen new faculty members are joining the Siebel School of Computing and Data Science at Grainger Engineering, bringing world-class expertise in research and education. ▶️bit.ly/3VJ281D

Our paper is accepted to NeurIPS 2025 for presentation! Please check my post below for paper details! 🚀🚀🚀

🚀 ToolRL unlocks LLMs' true tool mastery! The secret? Smart rewards > more data. 📖 Introducing newest paper: ToolRL: Reward is all Tool Learning Needs Paper Link: arxiv.org/pdf/2504.13958 Github Link: github.com/qiancheng0/Too…
