UNC Computer Science

@unccs

Department of Computer Science - University of North Carolina at Chapel Hill. Choose to #GIVE today - learn more here: http://linktr.ee/unccompsci

🤔 We rely on gaze to guide our actions, but can current MLLMs truly understand it and infer our intentions? Introducing StreamGaze 👀, the first benchmark that evaluates gaze-guided temporal reasoning (past, present, and future) and proactive understanding in streaming video…

⛱️ Heading to San Diego for #NeurIPS (Dec 2-7th)! I am on the industry job market & will be presenting: LASeR: Learning to Adaptively Select Reward Models with Multi-Armed Bandits (🗓️Dec 4, 4:30PM) Excited to chat about research (reasoning, LLM agents, post-training) & job…

🎉Excited to share that LASeR has been accepted to #NeurIPS2025!☀️ RLHF with a single reward model can be prone to reward-hacking while ensembling multiple RMs is costly and prone to conflicting rewards. ✨LASeR addresses this by using multi-armed bandits to select the most…

🚨 Thrilled to share Prune-Then-Plan!
- VLM-based EQA agents often move back-and-forth due to miscalibration.
- Our Prune-Then-Plan method filters noisy frontier choices and delegates planning to coverage-based search.
- This yields stable, calibrated exploration and…

🚨Introducing our new work, Prune-Then-Plan — a method that enables AI agents to better explore 3D scenes for embodied question answering (EQA). 🧵 1/2 🟥 Existing EQA systems leverage VLMs to drive exploration choice at each step by selecting the ‘best’ next frontier, but…

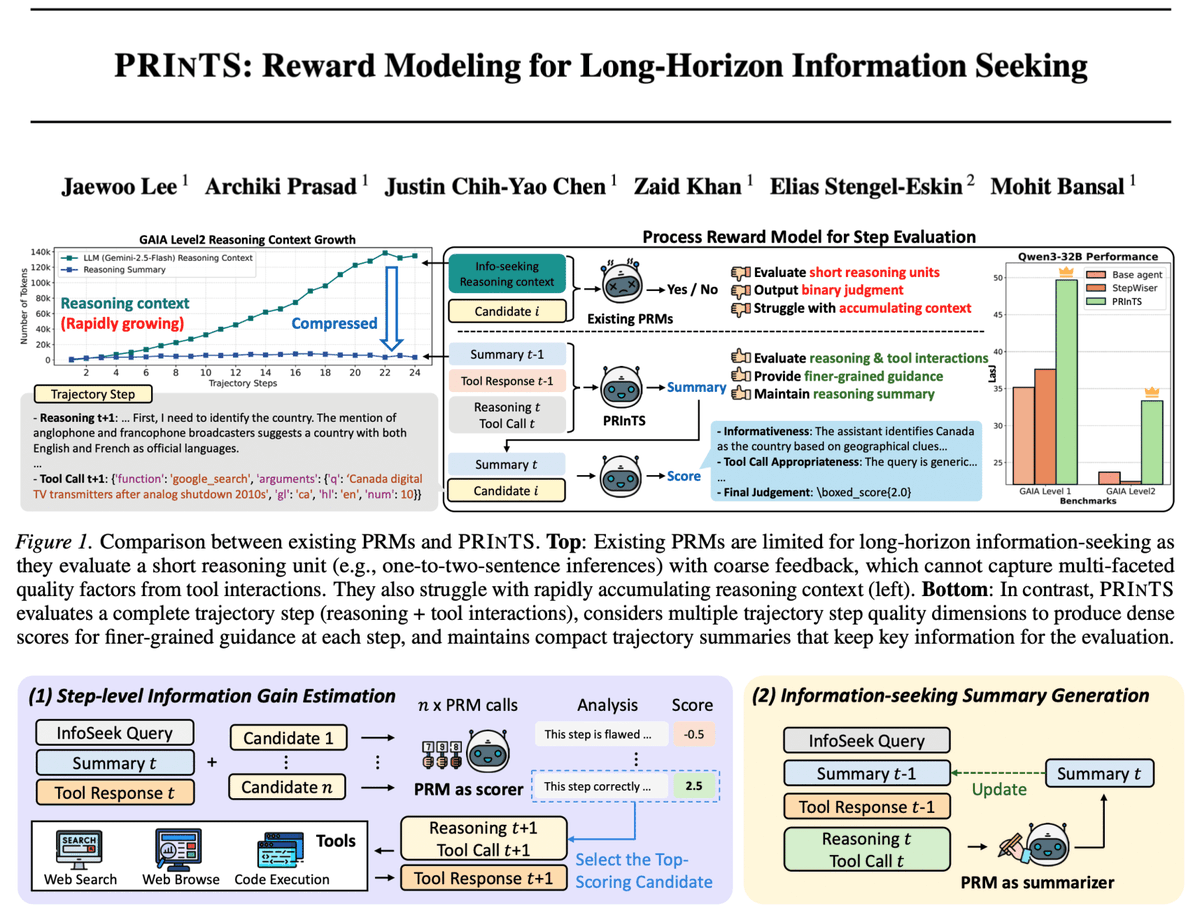

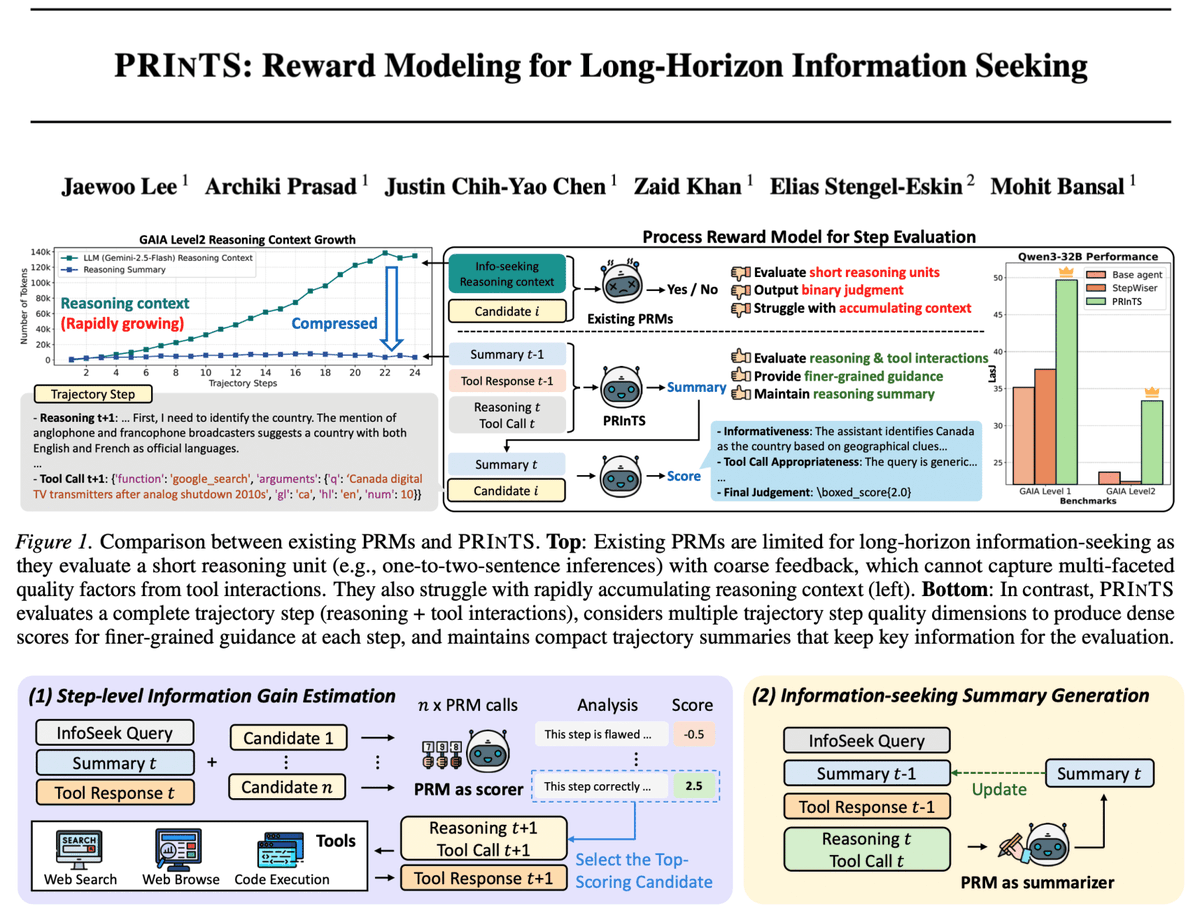

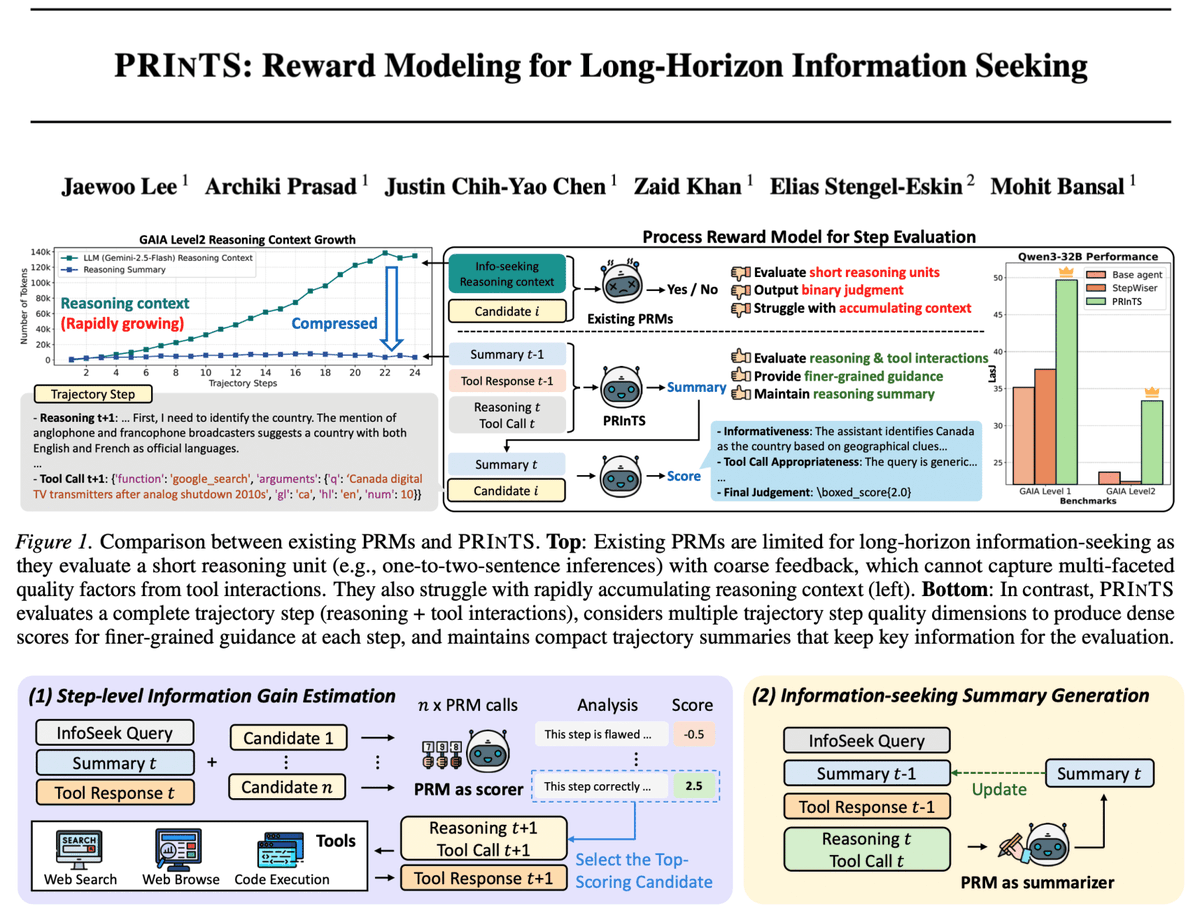

🚨 Check out our generative process reward model, PRInTS, that improves agents' complex, long-horizon information-seeking capabilities via: 1⃣ novel MCTS-based fine-grained information-gain scoring across multiple dimensions. 2⃣ accurate step-level guidance based on compression…

🚨 Excited to announce ✨PRInTS✨, a generative Process Reward Model (PRM) that improves agents’ long-horizon info-seeking via info-gain scoring + summarization. PRInTS guides open + specialized agents with major boosts 👉+9.3% avg. w/ Qwen3-32B across GAIA, FRAMES &…

We’re thrilled to share that AgingMultiverse has been accepted to @SIGGRAPHAsia ! 🎉 Our method delivers state-of-the-art diffusion-based face editing — all without additional training. Huge thanks to @unccs for the feature and for highlighting our work! 🙌 Report:…

🔥New paper alert on face age editing in diffusion models! “The Aging Multiverse: Generating Condition-Aware Facial Aging Tree via Training-Free Diffusion”. 🔥 🤔 Ever wondered what you'd look like if you: 🏋️ hit the gym every week for 20 years? OR 🏖️ moved to Hawaii 10 years…

Long-horizon information-seeking tasks remain challenging for LLM agents, and existing PRMs (step-wise process reward models) fall short because: 1⃣ the reasoning process involves interleaved tool calls and responses 2⃣ the context grows rapidly due to the extended task horizon…

🚨 Check out SketchVerify --> an efficient/scalable training-free, sketch-verification based planning framework that improves motion planning quality with more dynamically coherent trajectories (i.e., physically plausible and instruction-consistent motions) prior to full video…

🚨 Excited to share SketchVerify — a framework that scales trajectory planning for video generation. ➡️ Sketch-level motion previews let us search dozens of trajectory candidates instantly — without paying the cost of the time-consuming diffusion process. ➡️ A multimodal…

Owen has been one of my closest collaborators for years and is a fantastic researcher. Now he’s just starting his PhD with Prof. @mohitban47, and this is already his first paper as a PhD student. Super proud of him, go check it out🎉 Owen is also looking for 2026 summer…

Thanks @_akhaliq 🙌 for sharing our work on sketch-based verification for scalable/efficient trajectory planning for physics-aware video generation! Here is our detailed thread with more demos/results! x.com/owenhuang117/s…

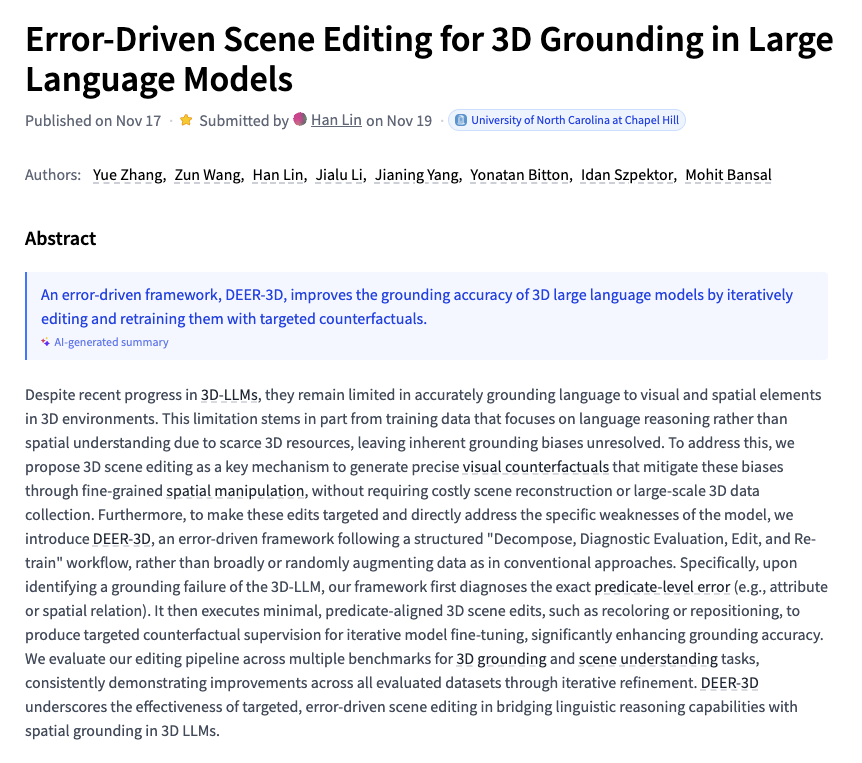

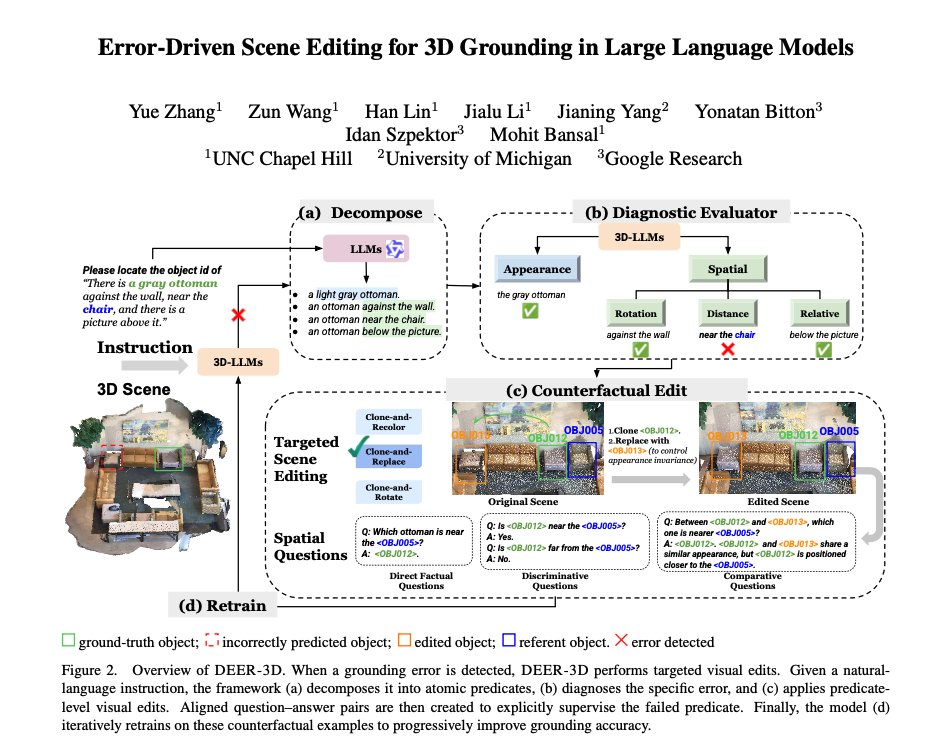

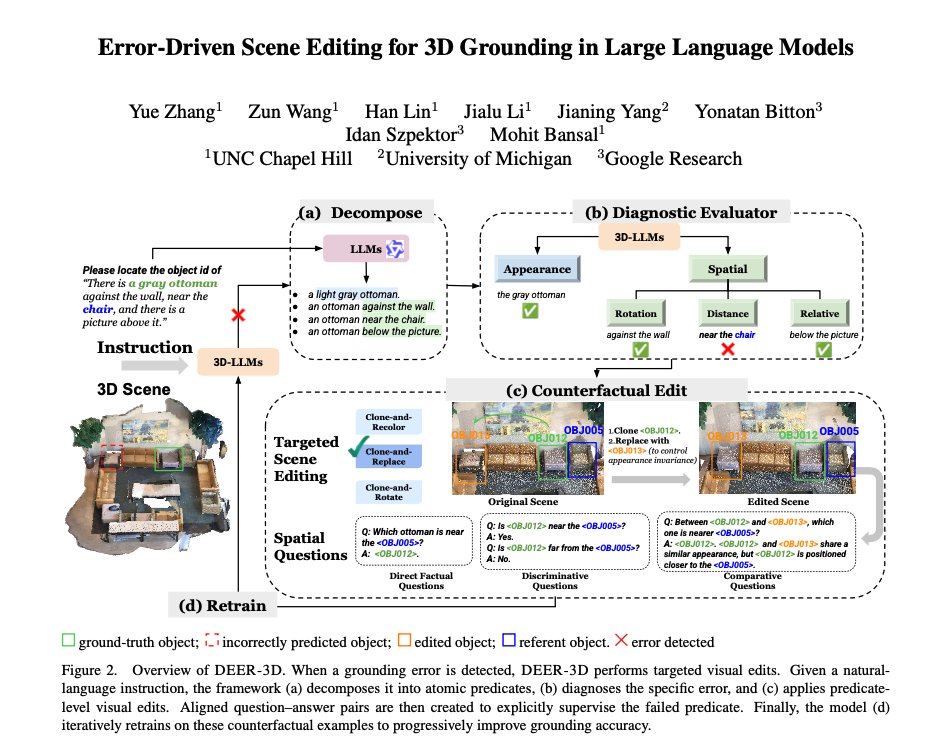

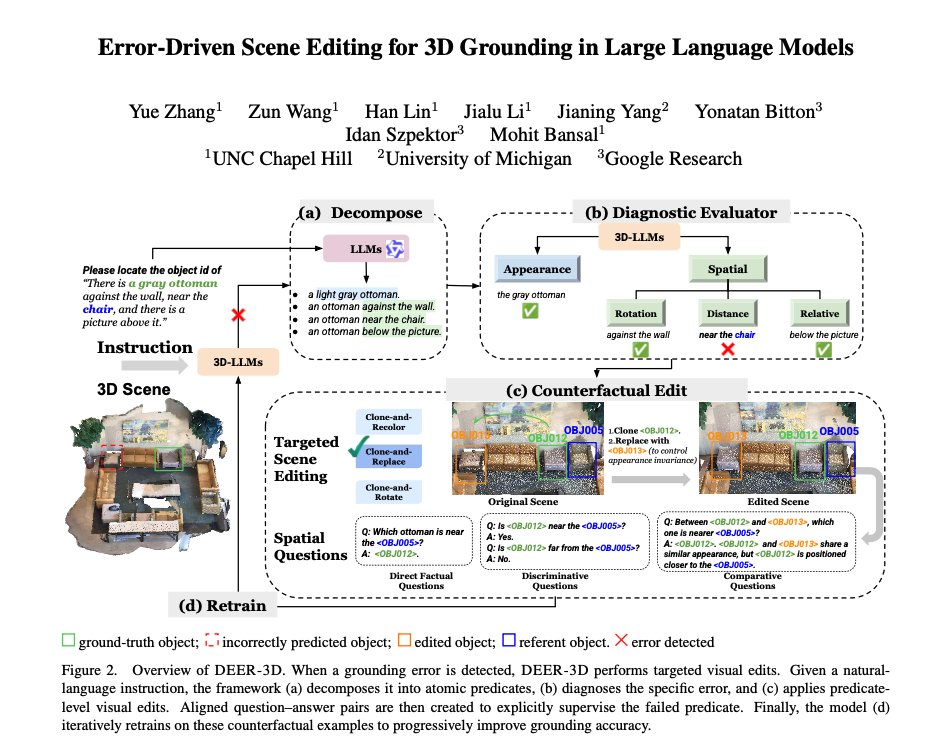

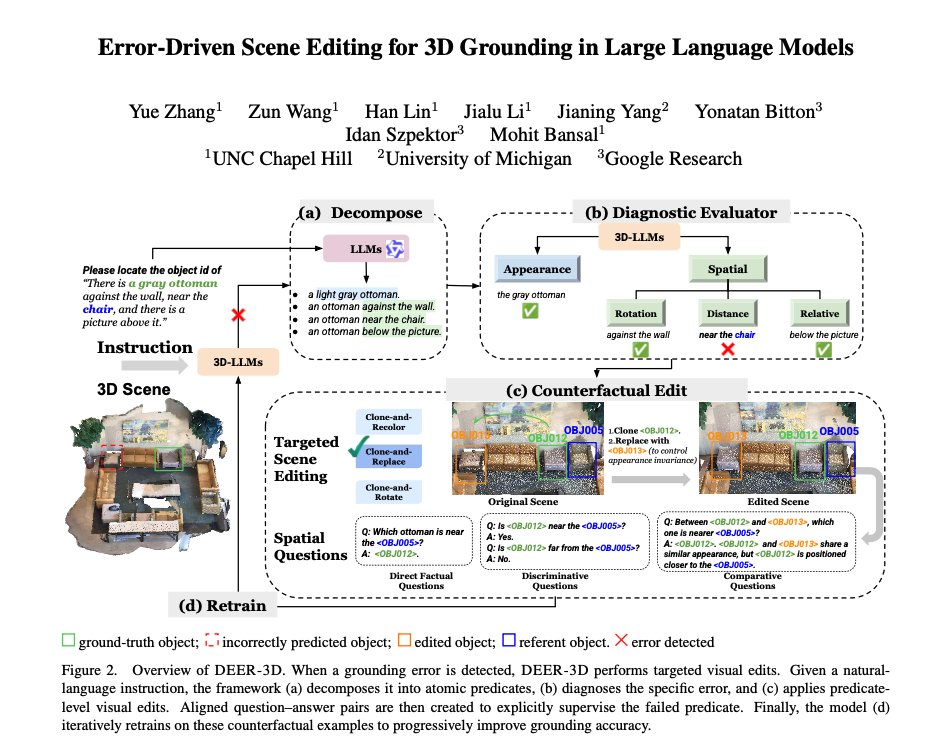

🌟DEER-3D: A Diagnostic-First Framework for Improving 3D Grounding ▶️ Instead of relying on generic or random augmentations, DEER-3D creates targeted 3D counterfactual scenes that are directly linked to the model’s failure patterns. ▶️ It conducts attribute-level diagnosis to…

🚨 Thrilled to introduce DEER-3D: Error-Driven Scene Editing for 3D Grounding in Large Language Models
- Introduces an error-driven scene editing framework to improve 3D visual grounding in 3D-LLMs.
- Generates targeted 3D counterfactual edits that directly challenge the…

🙌 Welcome Dr. Mingyu Ding @unccs to the Editorial Board of the "Robotics, Mechatronics and Intelligent Machines" Section in Machines. mdpi.com/journal/machin… #robotics #AI #HRI #computervision #robotlearning #mechanicaldesign @MDPIEngineering @MDPIOpenAccess

Thanks @_akhaliq for sharing our work "DEER-3D: Error-Driven Scene Editing for 3D Grounding in Large Language Models"! 🙏 For those who are interested, here is the detailed thread: x.com/zhan1624/statu…

Check out our new work: 3D-LLMs need *error-driven* data augmentation!

PhD student @QiLuchao is democratizing generative AI for human faces, giving storytellers the opportunity to use and personalize AI tools for their own applications. Read More: cs.unc.edu/news-article/d…

Thanks @unccs! I am grateful for all the support from the department along the way!
