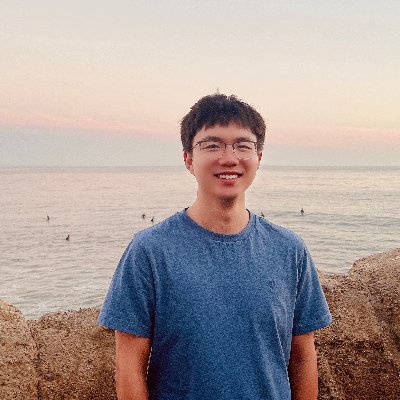

Viraj Prabhu

@virprabh

Research Scientist at Salesforce AI. Georgia Tech PhD. Interested in all things computer vision / machine learning.

Check out our latest work on building Web Agents that Learn Tools (WALT) to get more done faster! 🧵👇🏻

(Thread 1/4) Announcing WALT — Web Agents that Learn Tools 🛠️ WALT reverse-engineers existing web automations (search, comment, filter) → reusable tools that allow agents to focus on higher-level reasoning rather than choreographing clicks. This abstraction transforms the…

🚀 Introducing BLIP3o-NEXT from @SFResearch -- a fully open-source foundation model that unifies text-to-image generation and image editing within a single architecture. Key insights: 1️⃣ Architecture-wise: most design choices show comparable performance — what matters is…

Browser agents — and agents in general — should learn to discover and use higher-level skills rather than executing low-level atomic actions. WALT turns unsupervised web interactions into structured, reusable skills, enabling agents to act with fewer steps and greater…

Humans don’t just use tools — we invent them. That’s the next frontier for AI agents. At @SFResearch, we’re introducing WALT (Web Agents that Learn Tools) — a framework that teaches browser agents to discover and reverse-engineer a website’s hidden functionality into reusable…

(3/4) Outcome: up to 30% higher success rates with 1.4x fewer steps / LLM-calls (new SoTA on VisualWebArena) 📈 Here’s another example of finding stay options on Airbnb: Baseline web agent (left), WALT agent (right).

(4/4) We provide a simple CLI for discovery/serving (MCP) with WALT – try it out with 🚀walt discover <your-url>; walt agent <your-task> --start-url <your-url> 📝 Paper: bit.ly/4nhJf0K 🔗 Code: bit.ly/47gMAXZ Authors: @virprabh, @yutong_dai, Matthew Fernandez,…

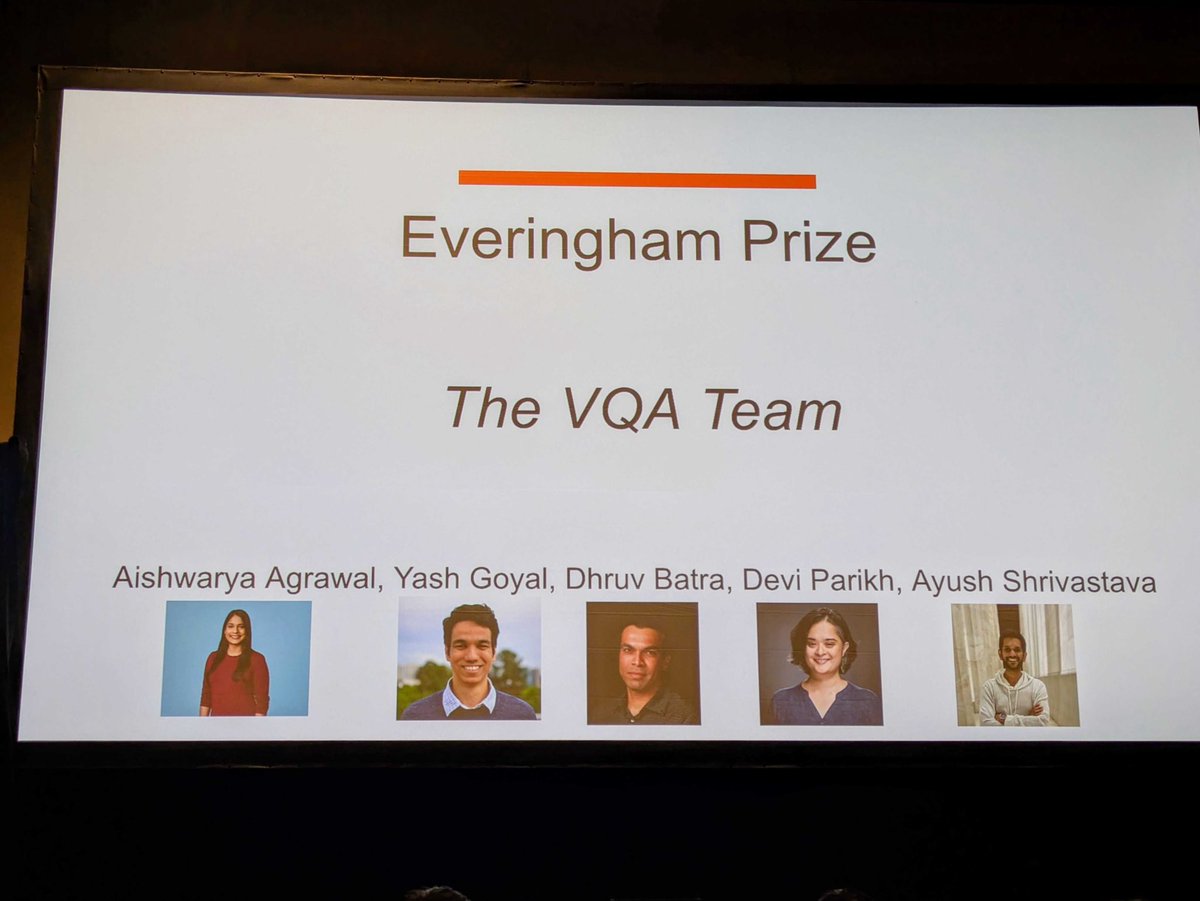

Thank you to the award committee and the broader vision community for the recognition. After all these (21!) years and so many conferences across sub-disciplines in AI, the vision community continues to feel like home. What makes this extra special is that the original VQA…

I'll be presenting this at the first poster session tomorrow (Oct 21, 11.45am, Exhibit Hall I #301) – stop by if you're attending #ICCV2025! 🏖️

💥 Super excited to introduce our latest work on **programmatically** benchmarking vision-language models in the wild 👇

Thank you so much, Caiming! We show that adding coding as a new type of action, alongside GUI actions, can significantly improve the performance of computer-using agents (CUAs) while reducing the total number of actions needed to solve a task. If you are interested in it, please take a look at…

🚀 Computer-using agents represent a powerful new paradigm for human-computer interaction. Over the past year, we’ve explored multiple approaches to tackle the key challenges in building robust CUA systems. 12/2024 we released Aguvis (x.com/CaimingXiong/s…) 07/2024 we released…

Meet AGUVIS: A pure vision-based framework for autonomous GUI agents, operating seamlessly across web, desktop, and mobile platforms without UI code. Key Features & Contributions 🔍 Pure Vision Framework: First fully autonomous pure vision GUI agent capable of performing tasks…

Happening now in 208B, come check out the first EMACS workshop! #CVPR2025

Join us at the first-ever EMACS workshop @CVPR! 🚨 Submissions open March 5: tinyurl.com/emacs25 See you in Nashville! 🎸 #CVPR2025

🚨🚨 Paper submission deadline extended to May 4. Submit your work (in-progress or complete!) to the EMACS workshop @CVPR2025 in Nashville! Submission link: tinyurl.com/emacs2025submit #CVPR2025 #GenerativeAI #bias

🚀 Excited about how generative AI can power experimental (not just observational) audits of ML systems that reveal actionable insights into performance and bias? Join us at the first-ever EMACS workshop @CVPR2025 in Nashville! 🌟 Speakers & submissions: sites.google.com/view/emacs2025/

🚀 Excited about how generative AI can power experimental (not just observational) audits of ML systems that reveal actionable insights into performance and bias? Join us at EMACS (Experimental Model Auditing with Controllable Synthesis) workshop @CVPR! sites.google.com/view/emacs2025/

Introducing Gaze-LLE, a new model for gaze target estimation built on top of a frozen visual foundation model! Gaze-LLE achieves SOTA results on multiple benchmarks while learning minimal parameters, and shows strong generalization. Paper: arxiv.org/abs/2412.09586

Looking forward to some Miami sun this week at #EMNLP2024, my first NLP conference in ~7 years! ☀️ HMU if you’d like to learn more about our work at @SFResearch or just meet/catch up! 🍹

🤔Ever wondered why merging LoRA models is trickier than merging fully-finetuned ones? 🔍We explore this and discover that poor alignment b/w LoRA models leads to subpar merging. 💡The solution? KnOTS🪢, our latest work that uses SVD to improve alignment and boosts SOTA merging methods.

Model merging is tricky when model weights aren’t aligned. Introducing KnOTS 🪢: a gradient-free framework to merge LoRA models. KnOTS is plug-and-play, boosting SoTA merging methods by up to 4.3%🚀 📜: arxiv.org/abs/2410.19735 💻: github.com/gstoica27/KnOTS

Introducing EgoMimic - just wear a pair of Project Aria @meta_aria smart glasses 👓 to scale up your imitation learning datasets! Check out what our robot can do. A thread below👇

Evaluate hallucination in your VLMs using our new benchmark

🚨🚨🚨Introducing PROVE: A new programmatic benchmark for evaluating vision-language models (VLMs). VLMs often provide responses that are unhelpful, contain false claims about the image, or both. However, benchmarking this in the wild can be surprisingly hard! Enter PROVE,…

내가 좋아할 만한 콘텐츠

-

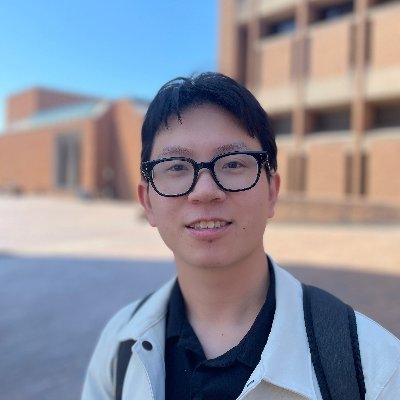

Judy Hoffman

Judy Hoffman

@judyfhoffman -

Ani Kembhavi

Ani Kembhavi

@anikembhavi -

Karan Desai (KD)

Karan Desai (KD)

@kdexd -

Frank Dellaert

Frank Dellaert

@fdellaert -

Danfei Xu

Danfei Xu

@danfei_xu -

Hilde Kuehne

Hilde Kuehne

@HildeKuehne -

Yash Kant

Yash Kant

@yash2kant -

Abhishek Das

Abhishek Das

@abhshkdz -

Ram Ramrakhya

Ram Ramrakhya

@RamRamrakhya -

erikwijmans

erikwijmans

@erikwijmans -

Unnat Jain

Unnat Jain

@unnatjain2010 -

Kiana Ehsani

Kiana Ehsani

@ehsanik -

Ayush Shrivastava

Ayush Shrivastava

@ayshrv -

Andrew Silva

Andrew Silva

@andrewsilva9 -

Purva Tendulkar

Purva Tendulkar

@PurvaTendulkar