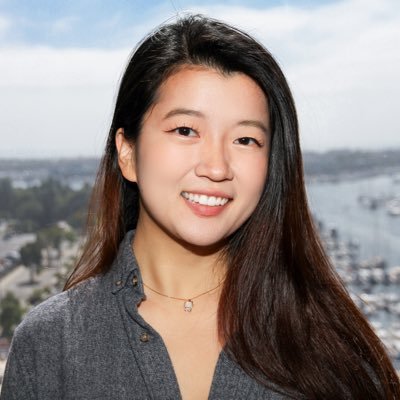

Zeming Wei

@weizeming25

First-year Ph.D. student @PKU1898, ex-visiting student @UCBerkeley, research intern @BytedanceTalk. I focus on developing Trustworthy AI/ML.


🚨False Sense of Security: Our new paper identifies a critical limitation in representation probing-based malicious input detection—purported "high detection accuracy" may confer a false sense of security: arxiv.org/pdf/2509.03888

Mitigating racial bias in LLMs is a lot easier than removing it from humans! Can't believe this happened at the best AI conference, @NeurIPSConf. We have ethical reviews for authors, but missed them for invited speakers? 😡

Yes. AI could even lead to a decline of science, since we may stop asking why and how. We need to make AI more interpretable, not only for AI but also for humanity.

unfortunately, the solution that works best often isn't the one that teaches you the most. cleaning up pretraining data works great for improving LLM perf, and prompt engineering works great for improving agent perf, but you won't learn much by doing these things

Our theory on LLM self-correction has been accepted to #NeurIPS2024! A good time to revisit it after the GPT-o1 release :)

Can LLMs improve themselves by self-correction, just like humans? In this new paper, we take a serious look into the self-correction ability of Transformers. We find that, although possible, self-correction is much harder than supervised learning, so we need a fully armed Transformer!

Excited to share that our LLM self-correction paper received the Spotlight Award (top 3) at the ICML'24 ICL workshop! iclworkshop.github.io


😎 So excited to see that our In-context Attack (ICA) method has been leveraged by Anthropic to break the most prominent LLMs, simply by increasing the number of in-context examples! What a lesson of scaling! 😆 See where this idea originated, w/ @weizeming25: arxiv.org/abs/2310.06387

New Anthropic research paper: Many-shot jailbreaking. We study a long-context jailbreaking technique that is effective on most large language models, including those developed by Anthropic and many of our peers. Read our blog post and the paper here: anthropic.com/research/many-…

Glad to share that I just reached 100 citations according to Google Scholar. Thanks to all my coauthors! scholar.google.com/citations?user…

Jatmo: Prompt Injection Defense by Task-Specific Finetuning "It harnesses a teacher instruction-tuned model to generate a task-specific dataset, which is then used to fine-tune a base model (i.e., a non-instruction-tuned model). Jatmo only needs a task prompt and a dataset of…


You might like

- Yi Zeng 曾祎 (@EasonZeng623)
- Xichen Pan (@xichen_pan)
- Paali (@roopali_vij)
- Yihe Deng (@Yihe__Deng)
- Jiarui Jin (@jerryjiaruijin)
- Tongtian Zhu (@Tongtian_Zhu)
- Ziquan (@Ziquan12)
- Julian Guerreiro (@JJAGuerreiro)
- Jiachen ("Tianhao") Wang (@JiachenWang97)
- Zekai Wang (@wzekai99)
- Yufeng Yang (@yufengyang1999)
- Shiyu Wang (@shiyu04490786)
