Thursday, I will be presenting my open-source LLM tooling work at the London Clojurians online event. I'll go over the components needed to build LLM apps and discuss whether or not these components are already a part of LLM models and APIs. meetup.com/london-clojuri…

Is there a place for LLM orchestration tools? (by Žygimantas Medelis), Tue, Apr 9, 2024, 6:30 PM |...

**THIS IS AN ONLINE EVENT** [Connection details will be shared 1h before the start time] The London Clojurians are happy to present: Title: **Is there a place for LLM orc

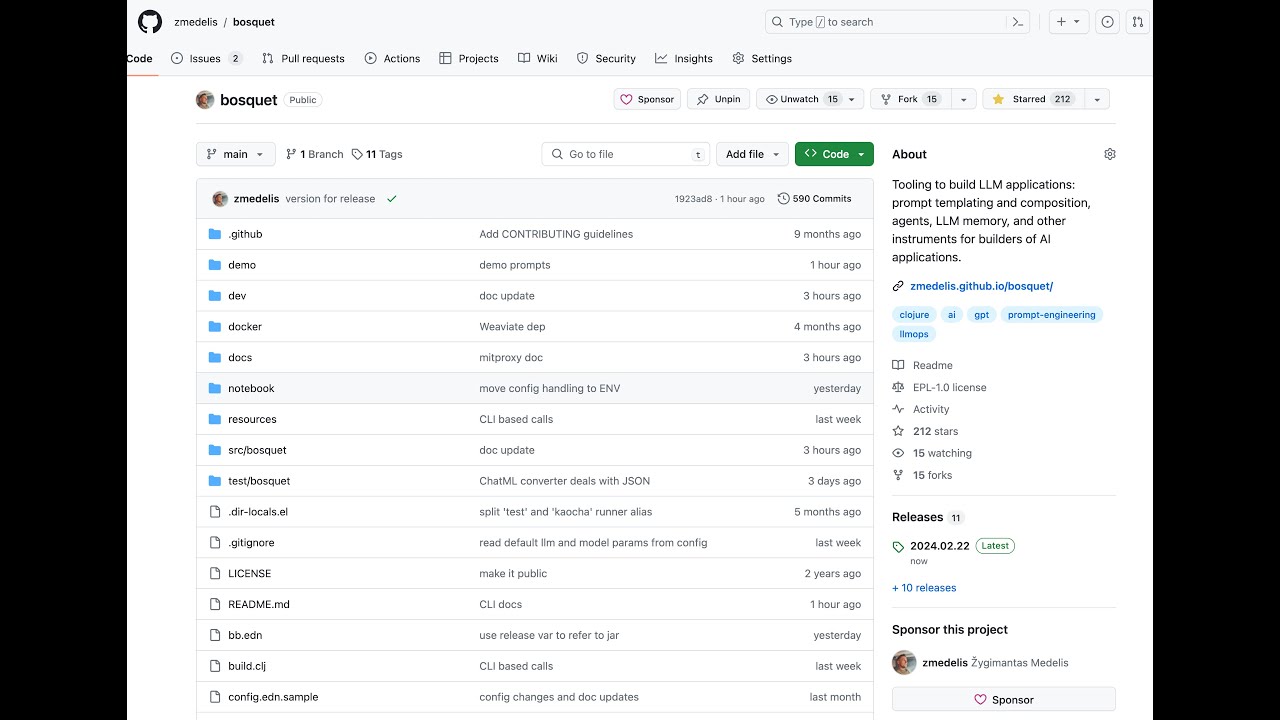

A demo of my latest work on Bosquet - LLM tooling for working with Gen AI github.com/zmedelis/bosqu… youtu.be/ywlNGiD9gCg

Bosquet LLM command line interface and observability tools

The worst take that I have seen these past few days is that long context models like Gemini 1.5 will replace RAG. This couldn't be further from the truth. Let's explore one scenario. Imagine you had data that has a complex structure, changes regularly, and has an important…

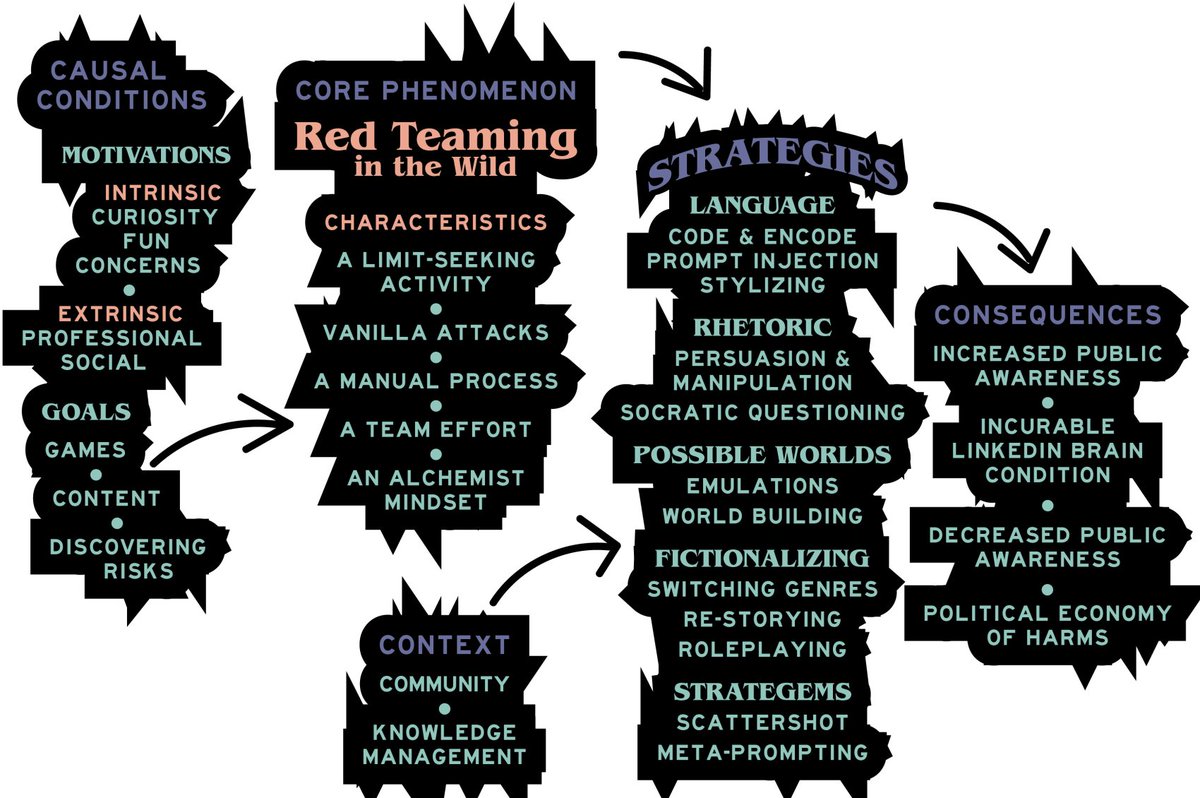

An exploration into LLM Red Teaming: 1. How is LLM jailbreaking defined? 2. What are the motivations and goals of those seeking ways to make LLMs misbehave? 3. What strategies are used to red team? arxiv.org/abs/2311.06237

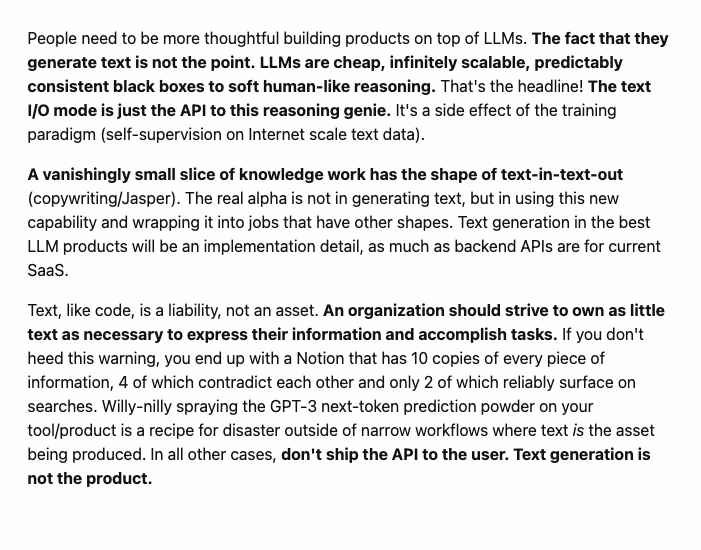

Small rant about LLMs and how I see them being put, rather thoughtlessly IMO, into productivity tools. 📄 TL;DR — Most knowledge work isn't a text-generation task, and your product shouldn't ship an implementation detail of LLMs as the end-user interface stream.thesephist.com/updates/166861…

On Friday, we'll have the next talk of our #LLM meetup series. @zzgmm will demonstrate how recent research papers can be elegantly implemented using #Bosquet in #Clojure. clojureverse.org/t/scicloj-llm-…

Sunday tinkering to help with HuggingFace 🤗 dataset access from Clojure github.com/zmedelis/hfds-…

GitHub - zmedelis/hfds-clj: Access to HuggingFace datasets via Clojure

Following the CoVe implementation, here is another chain, this time for a text summarization task. Chain of Density is a neat technique for producing good summaries. It is interesting to track how summary density increases as the LLM produces successive versions of a summary. zmedelis.github.io/bosquet/notebo…

Chain of Density prompting

The Chain of Density (CoD) technique was introduced in the GPT-4 Summarization with Chain of Density Prompting paper. It aims to produce high-quality, information-dense text summaries.
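
One way to track how density changes across successive summary versions is a rough entities-per-token score. The sketch below is in Python rather than Clojure and is not taken from the Bosquet notebook. It assumes a crude proxy (distinct capitalized words, ignoring the first word) in place of a real named-entity recognizer, and the names `entity_density` and `density_trace` are hypothetical.

```python
import re
from typing import List


def entity_density(summary: str) -> float:
    """Crude density proxy: distinct capitalized words per token.

    Assumption: capitalization stands in for entity detection. A real
    measurement would use a named-entity recognizer instead.
    """
    tokens = re.findall(r"[A-Za-z0-9']+", summary)
    if not tokens:
        return 0.0
    # Skip the first token, which is capitalized only because it opens the text.
    entities = {t for t in tokens[1:] if t[0].isupper()}
    return len(entities) / len(tokens)


def density_trace(summaries: List[str]) -> List[float]:
    """Return the density score of each summary version, in order."""
    return [entity_density(s) for s in summaries]
```

Calling `density_trace` on the list of summaries an LLM produced, oldest first, gives one number per version, so a rising sequence suggests each rewrite packed in more named things at a similar length.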

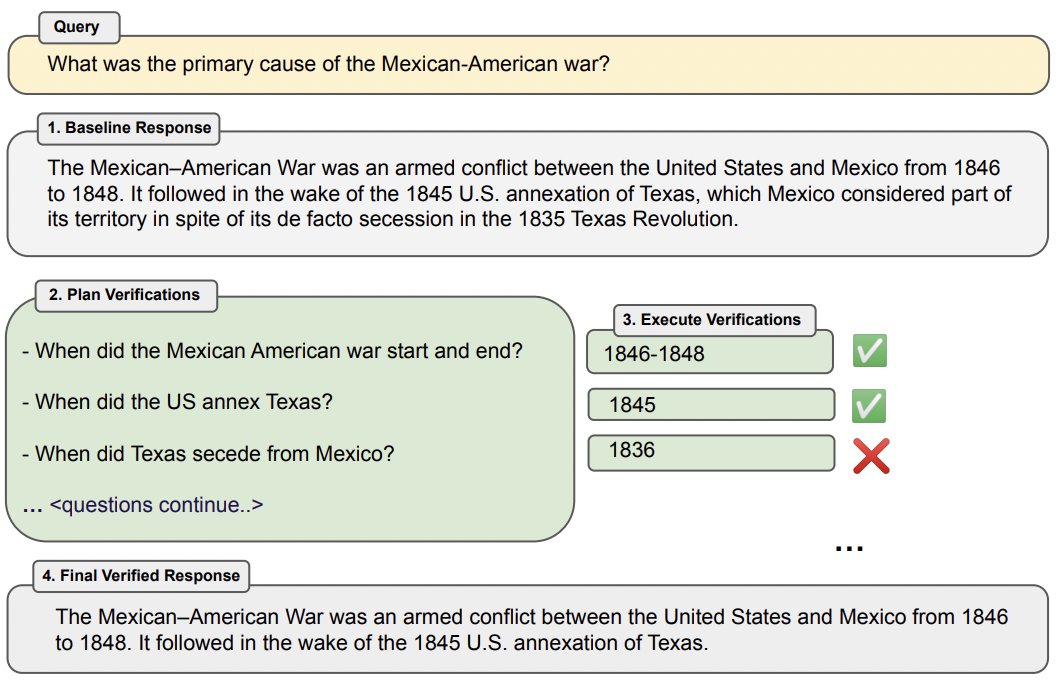

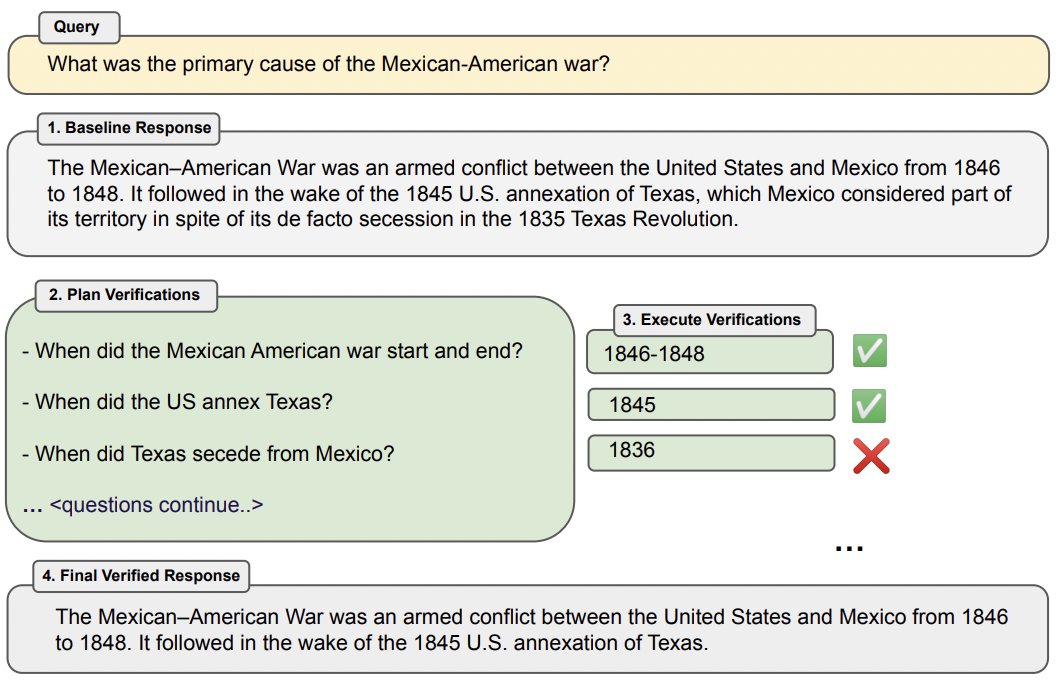

Chain of Verification is a multi-step prompting technique addressing the issue of LLM hallucinations. CoVe can be declared and executed in Bosquet without custom coding and piping zmedelis.github.io/bosquet/notebo…
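
For contrast with the declarative approach mentioned above, here is roughly what hand-written piping for CoVe could look like. This is a minimal Python sketch, not Bosquet's API. It follows the four stages usually described for CoVe (draft, plan verification questions, answer them independently, revise). The function name `chain_of_verification`, the prompt wording, and the `llm` callable (any function from prompt text to response text) are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple


def chain_of_verification(question: str, llm: Callable[[str], str]) -> Dict[str, object]:
    """Run the four CoVe stages with any prompt -> text callable (hypothetical helper)."""
    # 1. Draft a baseline answer, which may contain hallucinations.
    baseline = llm(f"Answer the question.\nQuestion: {question}")

    # 2. Plan verification questions that fact-check the draft.
    plan = llm(
        "List one verification question per line for the claims in this answer.\n"
        f"Question: {question}\nAnswer: {baseline}"
    )
    checks: List[str] = [line.strip() for line in plan.splitlines() if line.strip()]

    # 3. Answer each check on its own, without showing the draft,
    #    so mistakes in the draft are not simply repeated.
    evidence: List[Tuple[str, str]] = [
        (q, llm(f"Answer concisely.\nQuestion: {q}")) for q in checks
    ]

    # 4. Revise the draft so it agrees with the verification answers.
    facts = "\n".join(f"- {q} {a}" for q, a in evidence)
    final = llm(
        "Rewrite the draft so it agrees with the verified facts.\n"
        f"Question: {question}\nDraft: {baseline}\nVerified facts:\n{facts}"
    )
    return {"baseline": baseline, "checks": checks, "evidence": evidence, "final": final}
```

Each stage is a separate prompt whose output feeds the next, which is the kind of plumbing a declarative chain definition is meant to replace.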

LLMs are not great at generating complex structured data. They will often be a lot or a bit off. Even having 50.0% instead of 50 in a financial doc might be misleading. But finetuned smaller models like Llama-7B will outperform big ones in this task. arxiv.org/pdf/2309.08963…

Clojure LLM meetup #5 is tomorrow 📅 Join us to hear about and discuss the LLM tools we are building 💪 clojureverse.org/t/scicloj-llm-… I'll present Bosquet's approach to LLM memory 🧠 handling github.com/zmedelis/bosqu…

An LLM hallucinates when it adds fabricated info. This is not always bad. Precision parsing or completing contracts with an LLM is useful but not that spectacular. Exploring hallucinated what-ifs in the world of the contract is more interesting. arxiv.org/abs/2309.05922

"I did this thing and automated 90% of grunt work on your side, help me fix the hard parts." - is how we're trying to design our AI interfaces. Why? I'm increasingly convinced that our existing UX primitives don't hold up for modern AI workflows. Most our systems designed for…

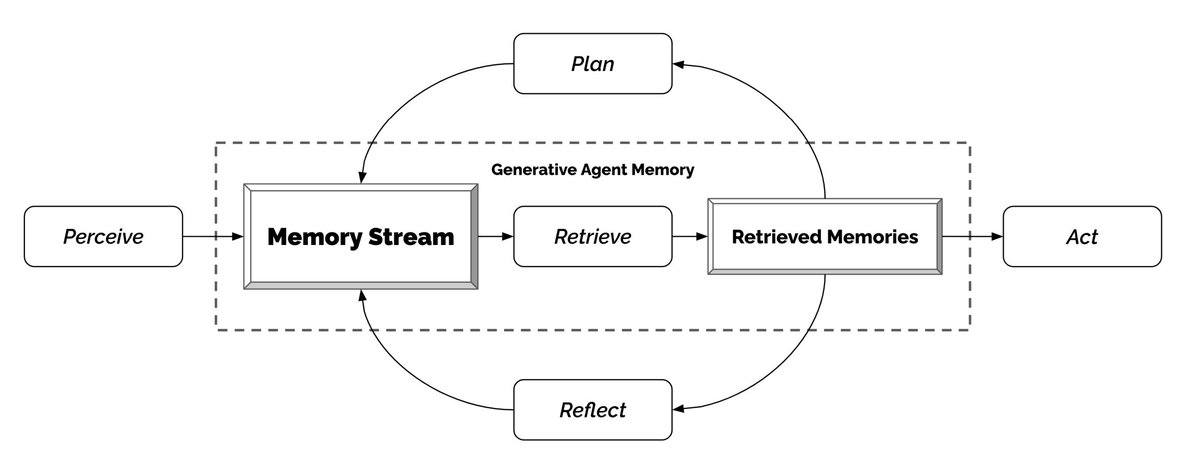

🧠 The Generative Agents paper has a nice approach to LLM memory, showing how memory handling should be added to an LLM-reliant app. It does not focus on how large content is stored, whether in a vector DB or whatnot. Instead, it defines how memory is produced and consumed arxiv.org/pdf/2304.03442…

Bosquet - a Clojure LLM Ops lib. Supports multiple LLM API providers, agents, and prompt chaining github.com/zmedelis/bosqu…

I am delighted to receive Clojurists Together support to continue my work on Bosquet - a Clojure LLM lib for building AI apps. Will be expanding agents and adding memory support. github.com/zmedelis/bosqu…

Clojurists Together is excited to announce our next round of funding for Q3 2023 projects. Congratulations all! clojuriststogether.org/news/q3-2023-f…
