#acl2021nlp search results

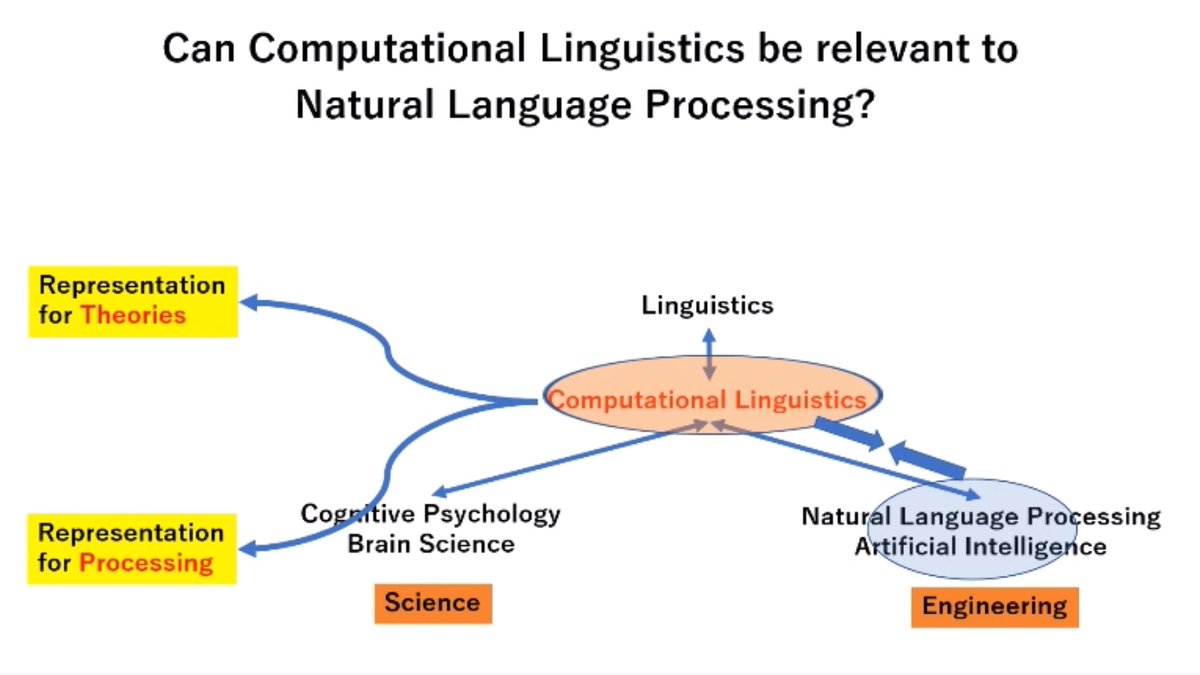

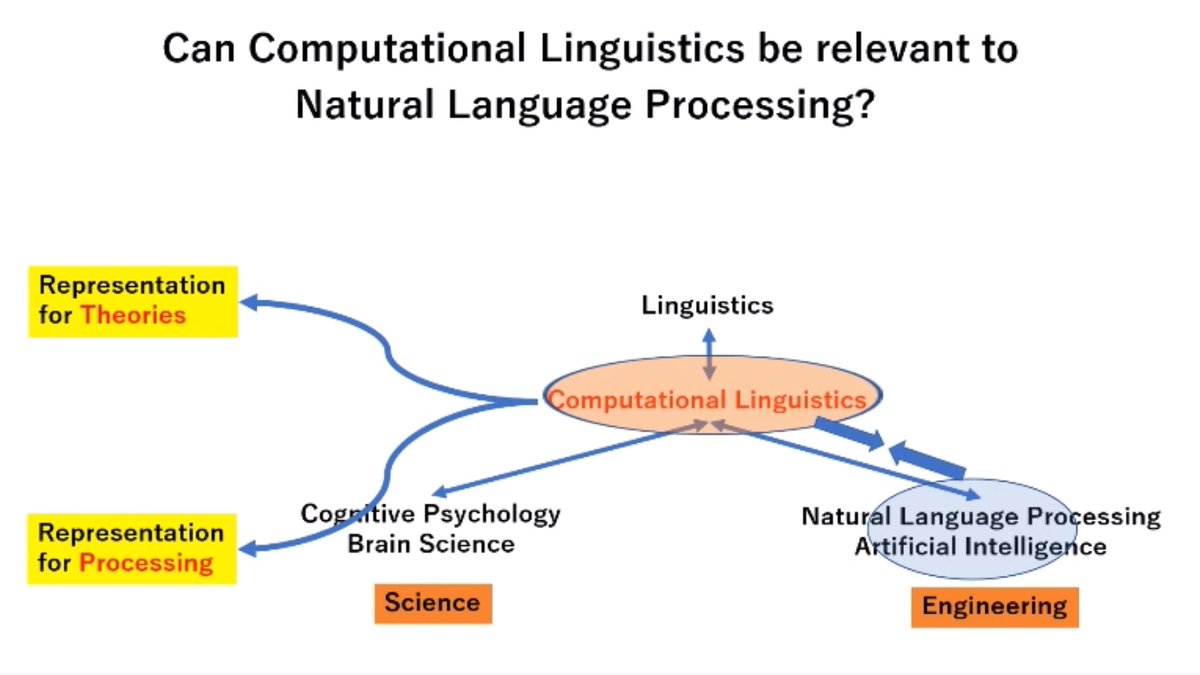

We often ask: what is the difference between Computational Linguistics and Natural Language Processing? A wonderful perspective from Prof. Junichi Tsujii, #ACL2021NLP Lifetime Achievement Award winner, with the message: It is time for us to reconnect NLP and CL!

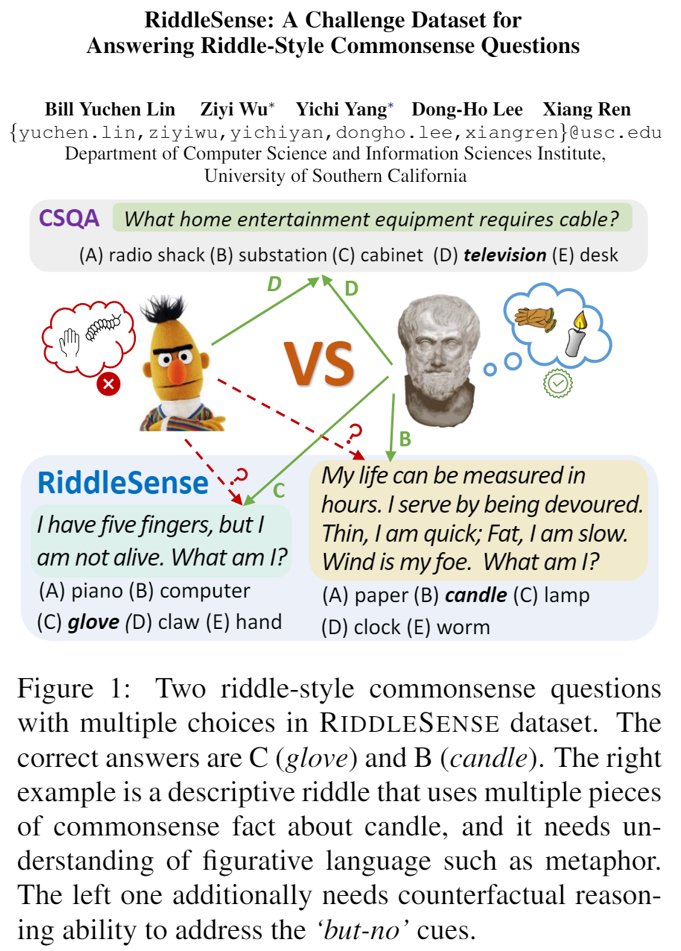

Happy to announce that 2 of our papers on commonsense reasoning got accepted to #ACL2021NLP (1 main + 1 Findings). 🎉 One analyzes and improves multilingual LMs on CSR; the other presents RiddleSense, a QA dataset for answering #riddles! Code, data & papers coming soon! @nlp_usc

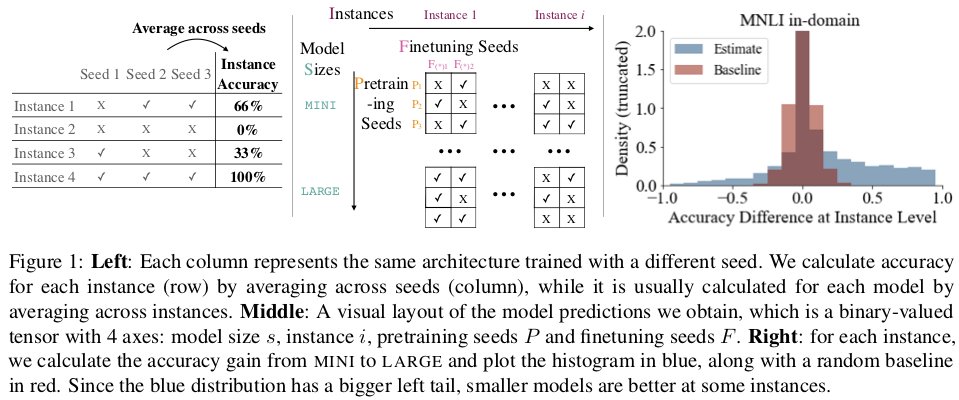

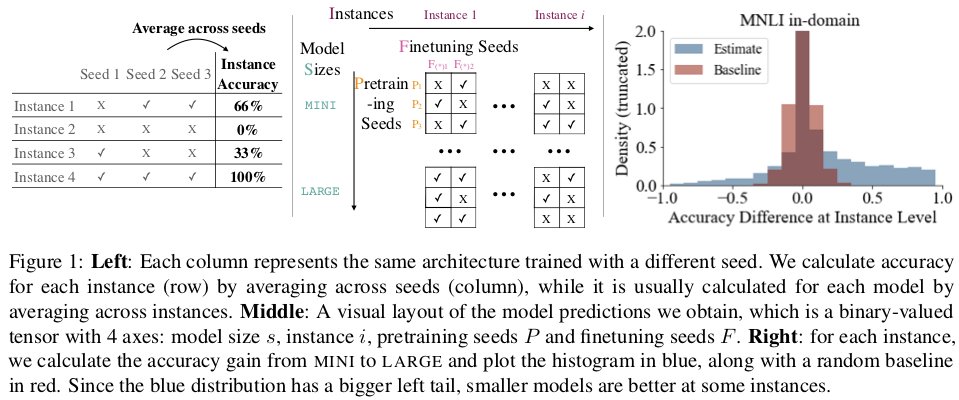

On which datapoints are smaller models better? 🤔Surprisingly, even pinpointing them is challenging and requires new statistical tools!😯 Check out our paper: Are Larger Pretrained Language Models Uniformly Better? Comparing Performance at the Instance Level #NLProc #ACL2021NLP

Wanna have some fun with your NLU models? Try out RiddleSense, our new QA dataset in #ACL2021NLP. It consists of multi-choice questions featuring both linguistic creativity and common-sense knowledge. Project website: inklab.usc.edu/RiddleSense/ Paper: arxiv.org/abs/2101.00376 [1/5]

![billyuchenlin's tweet image](https://pbs.twimg.com/media/E5odW6_VgAQmnzR.jpg)

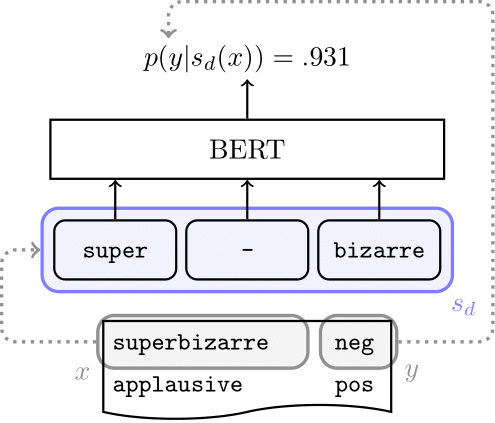

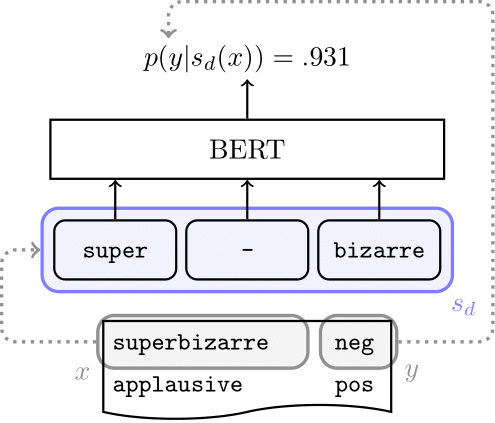

BERT's tokenizations are sometimes morphologically superb-iza-rre, but does that impact BERT's semantic representations of complex words? Our upcoming #ACL2021NLP paper (w/ Janet Pierrehumbert & @HinrichSchuetze) takes a look at this question. arxiv.org/pdf/2101.00403… /1

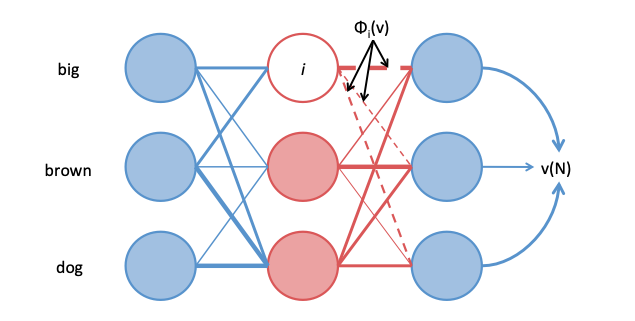

Is there a connection between Shapley Values and attention-based explanations in NLP? Yes! Our #ACL2021NLP paper proves that **attention flows** can be Shapley Value explanations, but regular attention and leave-one-out cannot. arxiv.org/abs/2105.14652 w/ @jurafsky @stanfordnlp

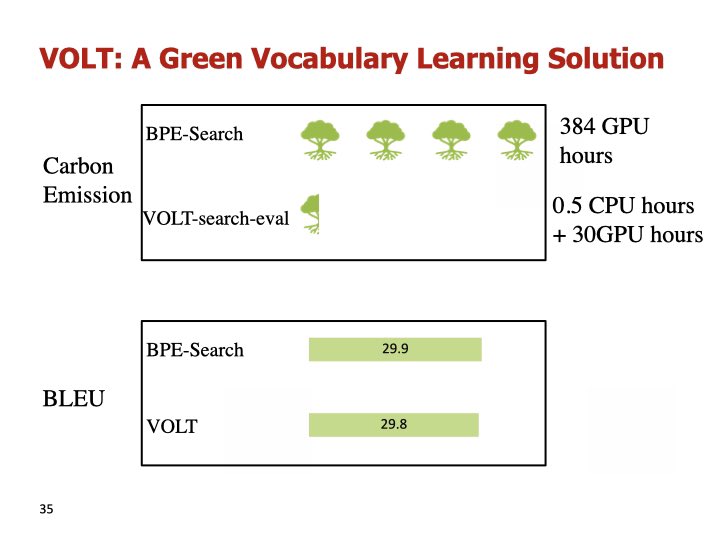

UCSB #NLProc Group welcomes new faculty and co-Director Prof. Lei Li @lileics: sites.cs.ucsb.edu/~lilei/ Don’t forget to check out his #ACL2021NLP Best Paper on optimal transport for vocabulary reduction next week.

Join us for the Workshop on Natural Language Processing for Programming @Nlp4Prog on Aug 6th (9AM-6PM ET) at #ACL2021NLP, featuring a stellar multi-disciplinary lineup of speakers from PL/NLP/HCI/Robotics. Check out the program here nlp4prog.github.io/2021/program/ @osunlp

Thrilled to announce that our paper received the Test of Time Award from the Association for Computational Linguistics!!! @aclmeeting #ACL2021NLP #NLProc 1/n

Introducing our #acl2021nlp work on evaluating and improving multi-lingual language models (ML-LMs) for commonsense reasoning (CSR). We present resources on probing & benchmarking, and a method for improving ML-LMs. [1/6] Paper, code, data, etc.: inklab.usc.edu/XCSR/

![billyuchenlin's tweet image](https://pbs.twimg.com/media/E4gQYZ7UUAMg6Kp.jpg)

Word meaning varies across linguistic and extralinguistic contexts, but so far there has been no attempt in #NLProc to model both types of variation jointly. Our #ACL2021NLP paper fills this gap by introducing dynamic contextualized word embeddings. arxiv.org/pdf/2010.12684… [1/4]

![vjhofmann's tweet image](https://pbs.twimg.com/media/E5IU-5IXoAMRtC9.jpg)

![vjhofmann's tweet image](https://pbs.twimg.com/media/E5IU__sXMAYwMIV.png)

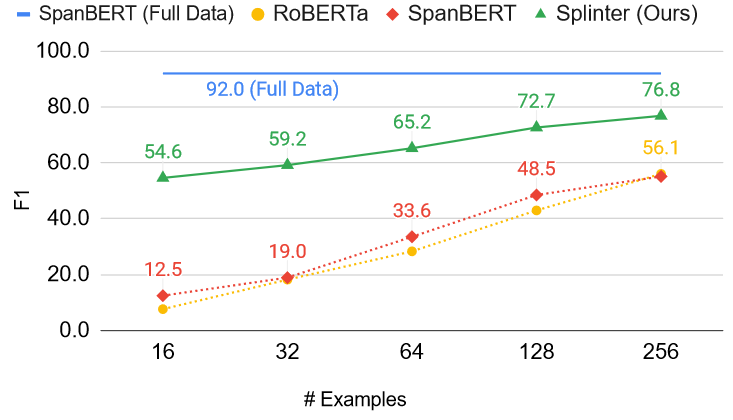

Ever wanted to train a QA model with only 100 examples? Check out Splinter, our new extractive QA model, trained with a novel self-supervised pretraining task, which excels at the few-shot setting 🔥 at #ACL2021NLP Paper: arxiv.org/abs/2101.00438 1/N

Earlier today I gave an invited talk at #ACL2021NLP. My sister took some impromptu pictures. The times we live in...

[#acl2021nlp Note Series] Excited about @radamihalcea's ACL Presidential Address @aclmeeting! Don't just chase "accuracy," but care about "more dimensions": interpretability, generalizability, ethics, NLP for social good, environmental cost of NLP, D&I, etc! /1

![ZhijingJin's tweet image](https://pbs.twimg.com/media/E7vi1EyWQAUDJtX.jpg)

Thank you everyone for attending my talk on "Including Signed Languages" at the #ACL2021NLP Best Paper Session! I am so grateful for all the enthusiasm 😊 We will upload ASL interpretations soon! In the meantime, here's my pre-recording with EN subtitles: youtu.be/AYEIcOsUyWs

PSA people tweeting #NLProc papers. Twitter appears to be classifying #ACL2021 tweets as "Sports". Might have better luck using #ACL2021NLP

#ACL2021NLP folks, I will organize a BoF session on Machine Learning Wednesday morning Aug 4 10am Pacific Time / 17:00 UTC - 18:00 UTC. underline.io/events/167/ses… Everyone is welcome to join and suggest topics to discuss. #NLProc

Honored and flattered to receive the best paper award of #ACL2021NLP. Thanks to my wonderful co-authors Jingjing, Hao, Chun and Zaixiang, to the extraordinary MLNLC team at Bytedance AI Lab, and to Zhenyuan, Weiying, Hang, and the ACL committee for their support! to Olympic sprinter #SuBingtian

Congratulations to the authors who have won our paper awards! 2021.aclweb.org/program/accept/ #ACL2021NLP #NLProc

@nedjmaou, besides working on automated fact-checking for journalists, also created some great tweet round-ups of #EMNLP2021 ift.tt/38d6Nkj and #ACL2021NLP ift.tt/3Afrvvl

Otherwise these are the papers being presented: #acl2021nlp BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Identify Analogies? 👉aclanthology.org/2021.acl-long.… #emnlp2021 Distilling Relation Embeddings from Pretrained Language Models 👉aclanthology.org/2021.emnlp-mai…

Check out our follow-up paper at #acl2021nlp if you're curious how this can be generalized to other forms of sociolinguistic variation!

Stoked to share our latest #aclnlp2021 paper! TL;DR: We argue for the need for #reliability testing as NLP systems start leaving the lab and impacting real lives w/ @JotyShafiq @baxterkb @gabennett45 @ArazTH @knmnyn @SFResearch @NUSComputing @LKYSch tinyurl.com/reliablenlp 1/

Li is an Assistant Prof. @ucsbcs. His publication on Vocab Learning won Best Paper at #ACL2021NLP (link in IG bio). He's looking for student research assistants. Candidates can email/catch him in HFH2122. Read the full spotlight on our IG/FB!

Then, the second talk was given by our own @iAmitGajbhiye who walked us through his recent #acl2021nlp (Findings) paper on "Knowledge distillation for quality estimation"

#acl2021nlp Beyond Goldfish Memory: Long-Term Open-Domain Conversation. (arXiv:2107.07567v1 [cs.CL]) arxiv.org/abs/2107.07567

Our #ACL2021NLP paper "Lower Perplexity is Not Always Human-Like" is now at arxiv.org/abs/2106.01229 The perplexity of language models is getting lower. Does this mean that they better simulate human incremental language processing? The answer is in our title. (@NlpTohoku)

One of our papers has been accepted to ACL-IJCNLP 2021. In addition, two of our papers have been accepted to Findings. #ACL2021NLP #NLProc nlp.ecei.tohoku.ac.jp/news-release/5…

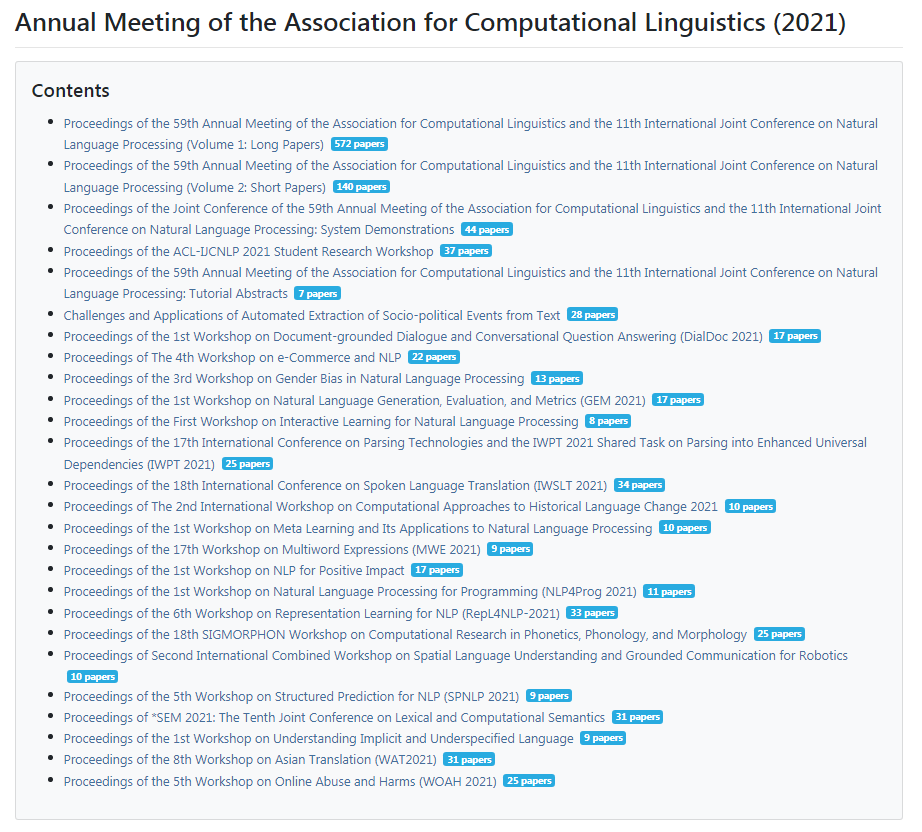

The proceedings of #ACL2021NLP are now available on the @aclanthology! aclanthology.org/events/acl-202… #NLProc

Excited that work on enriching dialog at inference-time with background stories got accepted in @aclmeeting (main)--continuing our series of work on World Knowledge⇄Language Generation. w @harsh_jhamtani, @BergKirkpatrick & Julian #NLProc #ACL2021NLP

Message from @radamihalcea, ACL president: stop chasing accuracy numbers, let's focus on other important aspects! #ACL2021NLP #NLProc

Some suggest that our #NLProc models learn something like humanlike syntax. But do they really? Our #ACL2021NLP paper suggests, actually, they don’t. (with @koustuvsinha @prasannapartha @jpineau1) Paper: arxiv.org/pdf/2101.00010… Repo: github.com/facebookresear…

Congrats to @siddkaramcheti, @RanjayKrishna, @drfeifei & @chrmanning for #ACL2021NLP Outstanding Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering. arxiv.org/abs/2107.02331 Code github.com/siddk/vqa-outl… #NLProc

Have you ever wanted to get word token distributions, but had to throw away OOV items because simply counting frequencies can’t handle them? This paper is for you! Just in time for the party, here is a summary of our #ACL2021NLP findings on Modeling the Unigram Distribution :)

🚨New #ACL2021NLP paper🚨 “Training Adaptive Computation for Open-Domain Question Answering with Computational Constraints” with @PMinervini, Pontus and @riedelcastro at @ucl_nlp. Please join us at ACL2021 on August 3 in Session 8E (09:00 UTC) to learn more about our work🍺 [1/3]

Our third keynote is by @ChrisGPotts (@stanfordnlp and @StanfordAILab): "Reliable Characterizations of NLP Systems as a Social Responsibility" #ACL2021NLP #NLProc
