#acl2023nlp search results

#ACL2023NLP #NLP4ConvAI @5thnlp4convai Our workshop starts with @Diyi_Yang and @larry_heck's invited talks!

I'm on the faculty market in fall 2023! I work on foundations of multimodal ML applied to NLP, socially intelligent AI & health. My research & teaching: cs.cmu.edu/~pliang/ If I could be a good fit for your department, I'd love to chat at #ACL2023NLP & #ICML2023. DM/email me!

Kevin Du on “Generalizing Backpropagation for Gradient-Based Interpretability”, showing how to use semirings to find the maximum-gradient path or to measure how uniform gradients are across paths. #ACL2023NLP #ACL2023

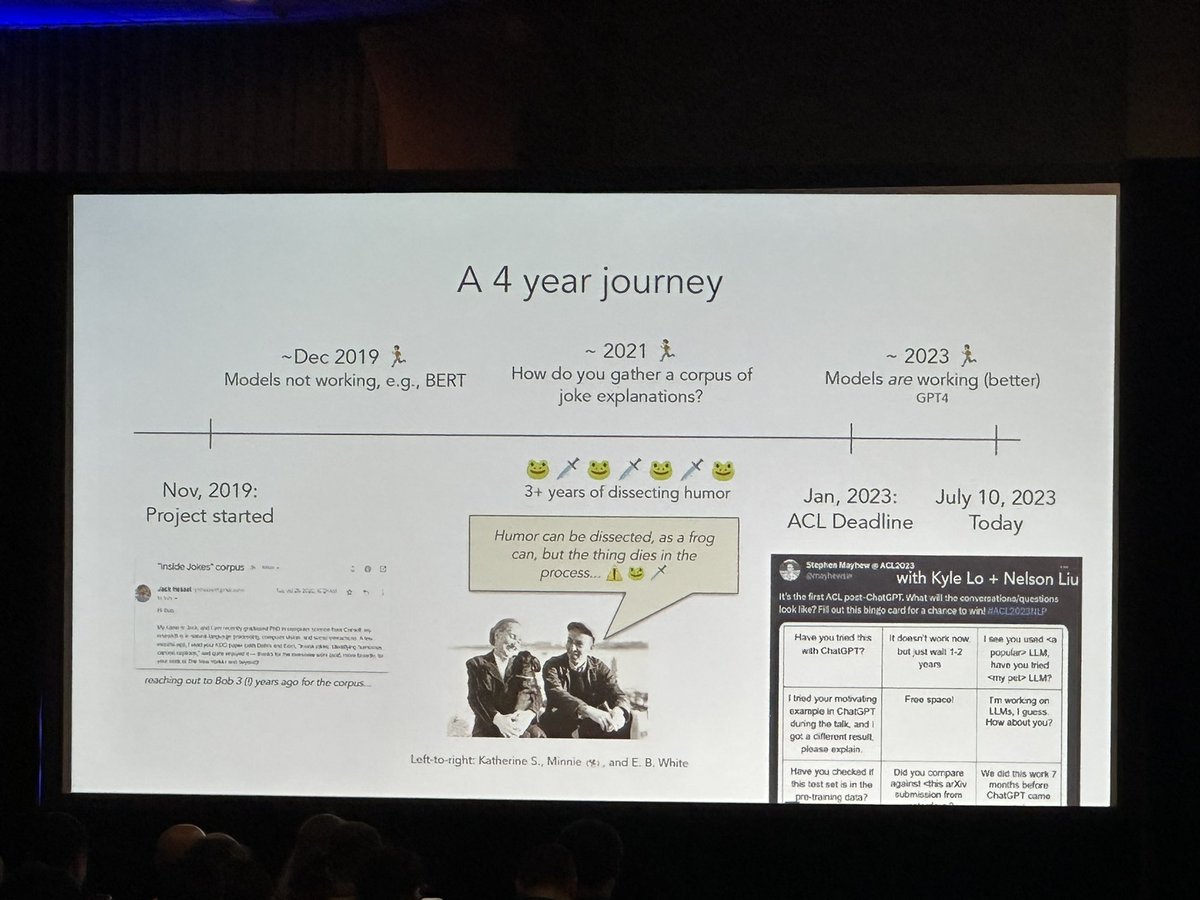

Inspirational words from @jmhessel in his #ACL2023NLP best paper talk: "If you're a PhD student working on a long-term project that you're excited about but still isn't coming together, keep it up...I want to read your paper!" The slide shows the "4 year journey" from idea to award.

When language models produce text, is the text novel or copied from the training set? For answers, come to our poster today at #acl2023nlp! Session 1 posters, 11:00 - 12:30 today Critics* are calling the work "monumental" Link to paper: direct.mit.edu/tacl/article/d… 1/2

Congratulations to Dr. @ZhiyingJ 🎉 who just successfully defended her Ph.D. thesis! What an eventful week, with #ACL2023NLP - that DAAM thing, and that gzip thing. Thanks to an awesome committee!

That's a wrap! The Waterloo (@UWCheritonCS) team had fun attending the ACL 2023 Conference in Toronto, Canada! #ACL2023NLP 🇨🇦 We would like to congratulate @ralph_tang @likicode @ZhiyingJ @lintool et al. for winning the Best Paper Award at ACL 2023!!🏆 Next stop is SIGIR 2023.

All papers deserve quality reviews, no questions asked. It's my first time being recognized as an Outstanding Reviewer at #ACL2023NLP, and I'm beyond grateful to the people behind this nomination 🤗

Talk by Hinrich Schütze (@HinrichSchuetze): 🟠 Extending LLMs "horizontally" to a large subset of the world's languages, focusing on low-resource languages 🟠 The Glot500-m model is trained on more than 500 languages #starsem2023 #ACL2023NLP

Congratulations to @jmhessel @anmarasovic and the team at @ai2_mosaic for the best paper award at ACL2023 :) Humor and creativity are still hard, and GPTs are not there yet. #NLProc #ACL2023NLP

🔥 Just had our first oral presentation done at #ACL2023NLP jointly with my student @BingshengY for our new paper "Are Human Explanations Always Helpful?" TLDR: We propose a new TREU metric to auto-eval the quality of human-crafted explanations (for future data labeling tool.)…

I had an incredible week in Toronto! #ACL2023NLP was an amazing experience where I had the chance to meet many great people and return home with plenty of new ideas. Now it's time to get back to work; I hope I can make it to Bangkok next year!

Here we go Toronto 🇨🇦! Excited to be at #ACL2023NLP and connect with familiar faces and meet new ones. Come say hi!

🎉 Congratulations to all the award winners at #ACL2023NLP! 🌟🏆 Your groundbreaking research is shaping the future of NLP👏 2023.aclweb.org/program/best_p…

Had an awesome #ACL2023NLP experience. Thanks to the organizers. Met new friends. As a memento, here is a pic of the panel discussion, which I enjoyed the most, with the awesome co-panelists @sarahookr and @danqi_chen. Thanks @Francis_YAO_, @vishakh_pk and others for inviting me.

Congrats to @RichardSocher, Alex Perelygin, @jeaneis, @jcchuang, @ChrisGPotts, @AndrewYNg, & @chrmanning on their 10 year @aclmeeting Test of Time award for the Stanford Sentiment Treebank—Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank! #ACL2023NLP

🏆 ACL Test of Time Paper Award: Category 1: 🔟 years ago (2013): - Universal Dependency Annotation for Multilingual Parsing 🔗aclanthology.org/P13-2017/ - Recursive Deep Models for Semantic Compositionality over a Sentiment Treebank 🔗aclanthology.org/D13-1170/ 🧵(6/n)

The poster for "Evaluating Paraphrastic Robustness in Textual Entailment Models," joint work by @dhr_verma, @lal_yash, our @SinhaShreyashee, @azpoliak & @ben_vandurme, is being presented during Poster Session 1 (11 AM EDT) at @aclmeeting #ACL2023NLP #NLProc

📢Please RT! I'll be at @aclmeeting 's #ACL2023NLP ✨Ethics Session✨ Join us on 📅Tue 11 Jul 16:15 for our report on ACL stakeholders & ethics panel. Help us set priorities! @KarnFort1 Yulia Tsvetkov @dirk_hovy @pascalefung @LucianaBenotti @MalvinaNissim Jin-Dong Kim @mdredze

Greatly enjoyed T6 by @danqi_chen's group @ #ACL2023NLP. One interesting insight: with the success of #LLMs, retrieval-based models shift towards retrieval-based prompting / in-context learning, though they still underperform full FT models. More here: acl2023-retrieval-lm.github.io

Thanks @aclmeeting for hosting the conference in Canada while ignoring all the nationalities whose researchers won't be able to participate due to the strict visa procedure! Given this, kindly stop making such big claims about the importance of diversity, equity & inclusion! #ACL2023 #ACL2023NLP

Ever wondered how to continually improve your code LLM? In our new #ACL2023nlp paper, we explore Continual Learning (CL) methods for code domain: CodeTask-CL benchmark & Prompt Pooling with Teacher Forcing for CL in code domain. arxiv.org/abs/2307.02435 @AmazonScience @uncnlp 🧵

ACL is less than a week away. Check out the papers to be presented by our Cardiff NLP group! 🔥 #acl2023nlp @aclmeeting #NLProc

My colleagues and I are at the @Google booth #ACL2023NLP for the next half hour, if you want to chat!

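#ACL2023NLP From the oral presentation of my own TACL paper, to a reunion with NLI researchers, to watching Niagara Falls while talking about life with researchers I met at ACL, and then dinner: I feel like I got to savor the best parts of being a researcher all in one day. I think research is the best job for broadening your horizons. Everyone, go on to a PhD. (Inspired by S-san from PRESTO)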

🚨Paper+Datasets🚨 Our great intern @shivanshug11's work @AmazonScience was accepted at #ACL2023NLP (Findings)🥳 TL;DR: We proposed cross-lingual knowledge distillation to train non-English QA models with NO annotated data🔥 Preprint: arxiv.org/abs/2305.16302 🧵Details+Datasets

Chart captioning is hard, both for humans & AI. Today, we’re introducing VisText: a benchmark dataset of 12k+ visually-diverse charts w/ rich captions for automatic captioning (w/ @angie_boggust @arvindsatya1) 📄: vis.csail.mit.edu/pubs/vistext.p… 💻: github.com/mitvis/vistext #ACL2023NLP

Like everything else, language models get outdated. How can we keep them up to date without retraining from scratch? In this #ACL2023NLP (main conf.) accepted paper, we propose a temporal adaptation method using prompts!

Huge congratulations to Martha Palmer of @BoulderNLP for winning the #acl2023nlp Lifetime Achievement Award! Martha deserves more recognition for her pioneering work in producing labeled datasets for #NLProc semantics in the 1990s/early 2000s, well before things like ImageNet.

🚨Our paper "Faithfulness Tests for Natural Language Explanations" has been accepted to #ACL2023! Stay tuned for more details coming soon! Work with @oanacamb, Christina Lioma, Thomas Lukasiewicz, Jakob Grue Simonsen, @IAugenstein #NLProc #ACL2023NLP @CopeNLU

An ACL @aclmeeting in Thailand must be much easier for those of us down under to attend! All it takes is some papers to submit to ARR! #ACL2023NLP #NLProc

I don’t get it #ACL2023 #ACL2023nlp

#ACLRebuttal I know I am going to encounter some malicious reviewers. There you go!

Heading to #ACL2023NLP, excited to meet folks! We will be presenting work on discourse/QUD, summarization, and probing intergroup bias, see jessyli.com/acl2023 Tagging my students who will be there @HongliZhan @YatingWu96
