#autoencoder search results

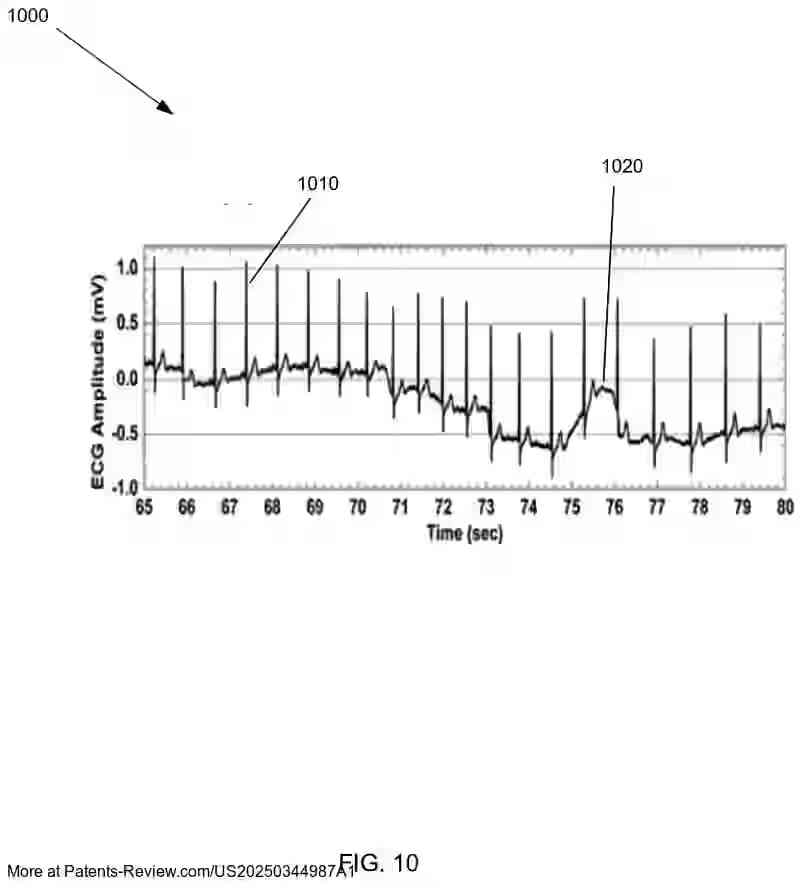

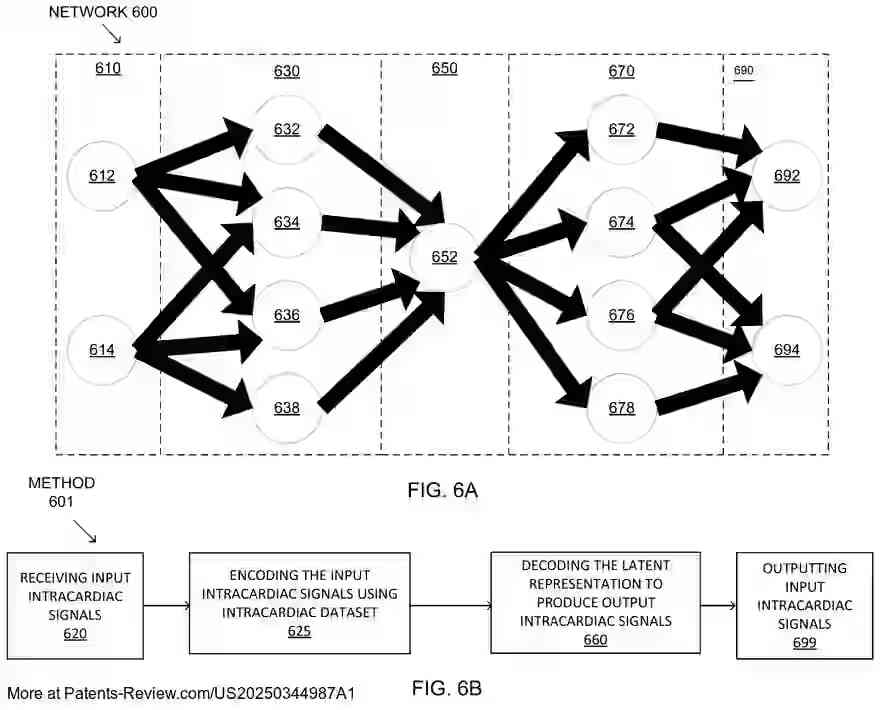

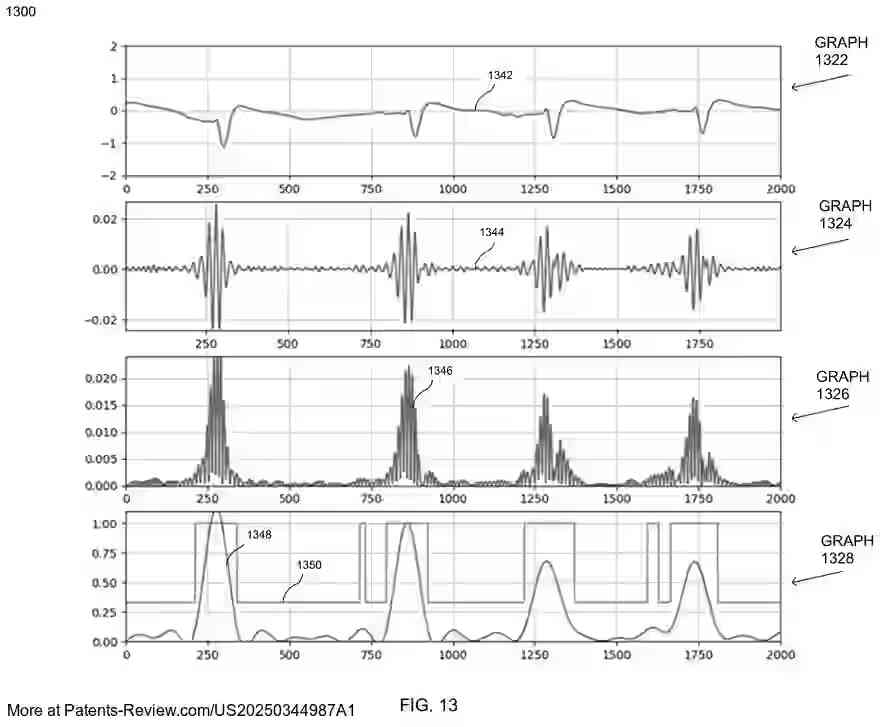

New patent application #US20250344987A1 by #BiosenseWebster explores reducing noise in intracardiac ECGs using a denoising #autoencoder. The system refines ECGs with #DeepLearning, enhancing signal clarity by encoding and decoding the raw data to remove noise. Key features include…

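A preprint that uses a Stacked Autoencoder, trained on high-S/N MRS data acquired with many signal averages, to generate spectra that remain good enough for evaluation even when the number of MRS averages is cut drastically. On low-average human brain scans, SNR increased by 43.8% and MSE decreased by 68.8%, while quantitative accuracy was preserved. #MRS #autoencoder #papers arxiv.org/abs/2303.16503…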

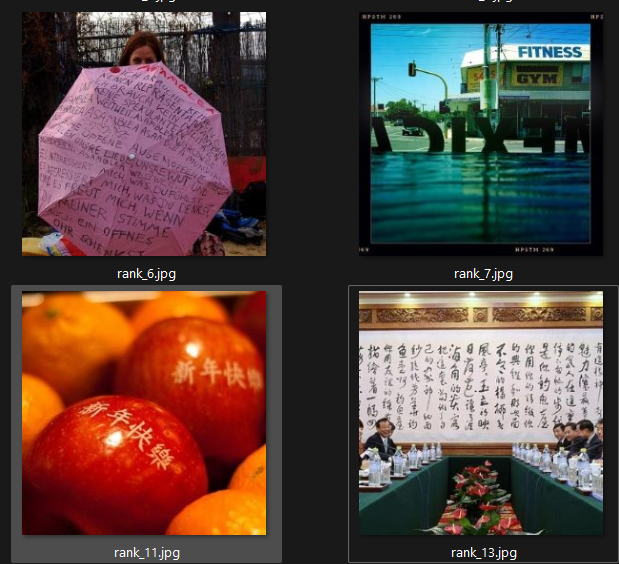

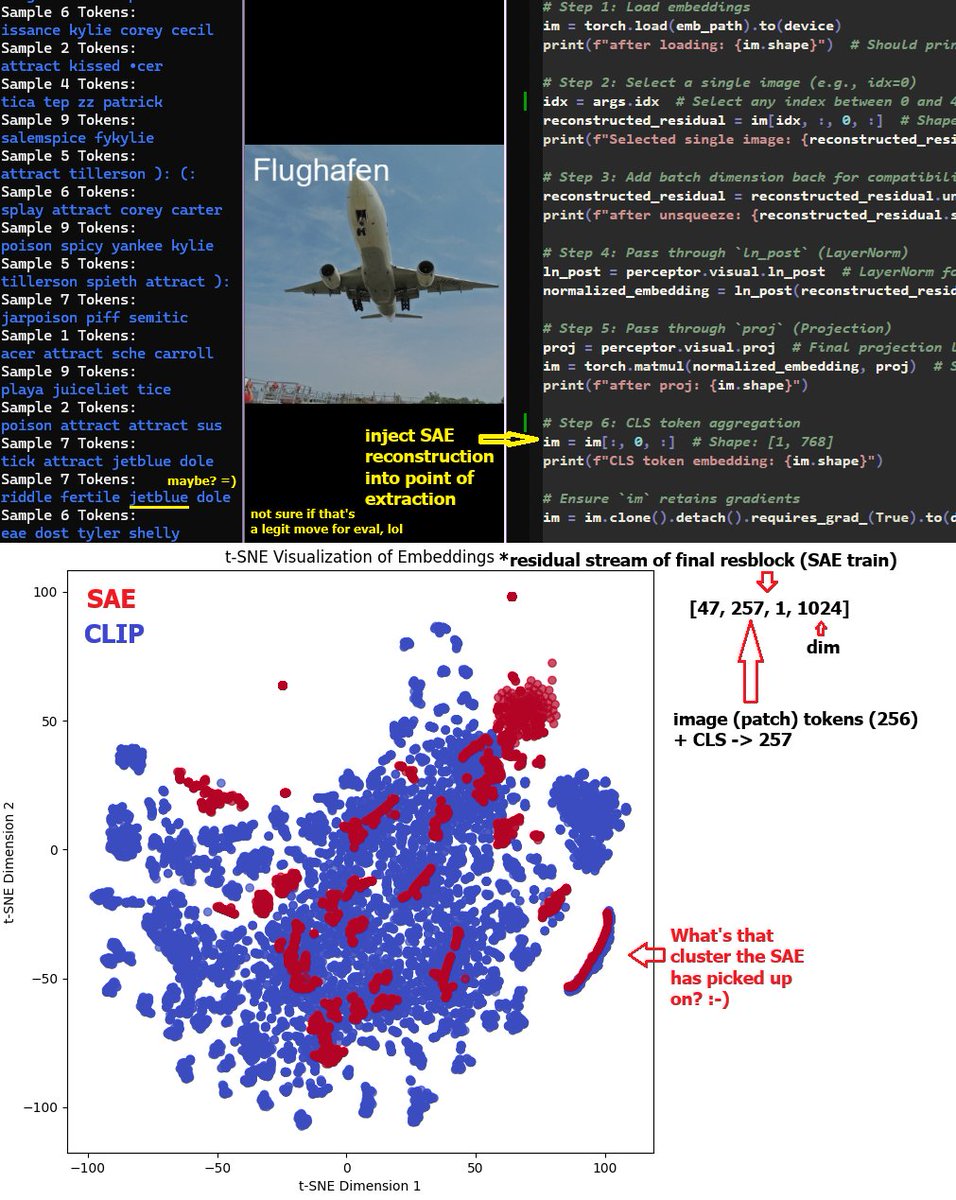

YUSS, the newly trained #sparse #autoencoder has FOUND THE TEXT OBSESSION in #CLIP #AI!🥳🤩 Only 1 smol problem..🤣 It's not just *ONE* typographic cluster.🤯 Left: 3293 encodes CLIP neurons for English, probably EN text signs. Right: 2052 encodes East Asian + German + Mirrored. 👇🧵

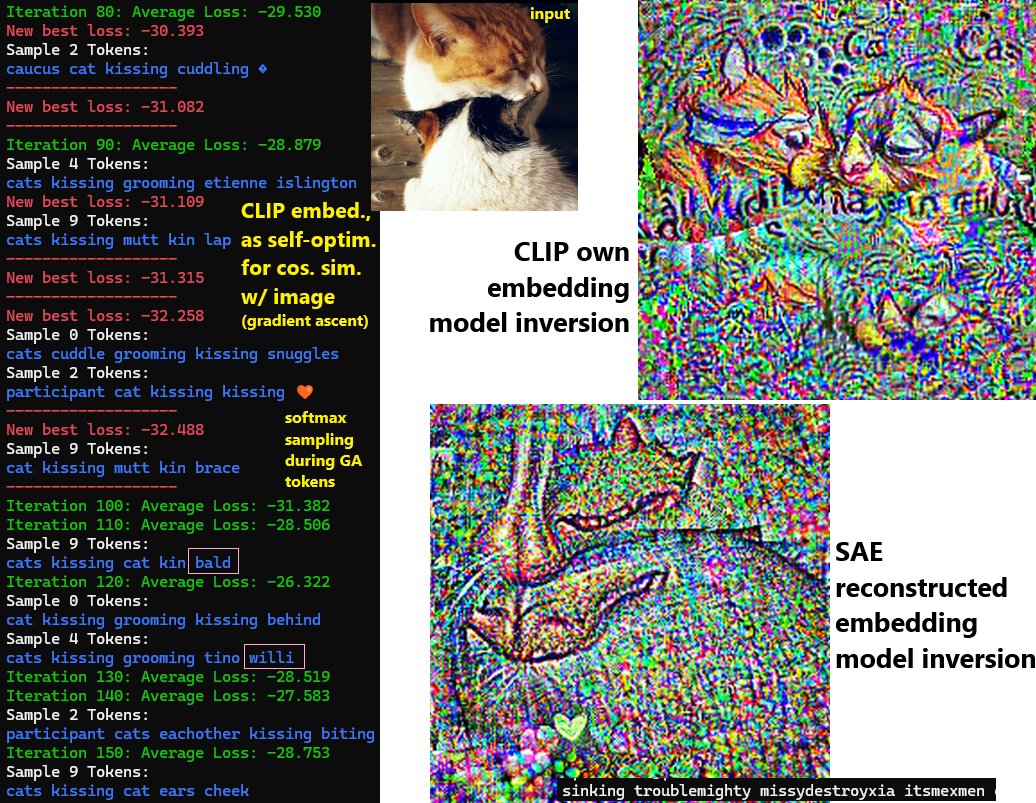
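
The thread doesn't show the training code. As a hedged sketch, a common sparse-autoencoder recipe (ReLU encoder, linear decoder, L1 penalty on the latent codes) looks like this in NumPy. The sizes, the `l1_coeff` value, the `SparseAutoencoder` class and the `top_features` helper are hypothetical, and random 512-dimensional vectors stand in for CLIP embeddings; this is not the author's implementation.

```python
import numpy as np

class SparseAutoencoder:
    """Overcomplete SAE: ReLU encoder, linear decoder, L1 penalty on the codes."""

    def __init__(self, d_in, d_latent, seed=0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(0, 1 / np.sqrt(d_in), (d_in, d_latent))
        self.b_enc = np.zeros(d_latent)
        self.W_dec = rng.normal(0, 1 / np.sqrt(d_latent), (d_latent, d_in))
        self.b_dec = np.zeros(d_in)

    def encode(self, x):
        # Center by the decoder bias, project up, ReLU -> non-negative sparse codes
        return np.maximum(0, (x - self.b_dec) @ self.W_enc + self.b_enc)

    def decode(self, z):
        return z @ self.W_dec + self.b_dec

    def loss(self, x, l1_coeff=1e-3):
        """Squared reconstruction error plus L1 sparsity penalty, averaged over the batch."""
        z = self.encode(x)
        recon = np.mean(np.sum((self.decode(z) - x) ** 2, axis=-1))
        sparsity = np.mean(np.sum(np.abs(z), axis=-1))
        return recon + l1_coeff * sparsity, z

def top_features(z, k=3):
    """Indices of the k most active latents for each input row."""
    return np.argsort(-z, axis=1)[:, :k]

# Usage with random stand-in data (hypothetical sizes).
rng = np.random.default_rng(1)
x = rng.normal(size=(8, 512))  # stand-in for a batch of CLIP embeddings
sae = SparseAutoencoder(d_in=512, d_latent=2048)
total, z = sae.loss(x)
top = top_features(z, k=3)
```

Ranking latents per input (as `top_features` does), or ranking inputs per latent along the other axis, is typically how individual features such as "3293" end up with human-readable labels.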

Thanks for the en-/discouragement, #GPT4o 😂 Now #sparse #autoencoder #2 learns to be a #babelfish, translating #logits to #token sequences.🤯 It could help decode a sparse #CLIP embedding, or even a gradient-ascent #CLIP #opinion! Good luck & godspeed, #SAE ✊😬

Fun with #CLIP's #sparse #autoencoder: At first glance, I thought [Act idx 20] was encoding "sports / tennis". But that's not the shared feature. It's a "people wearing a thing around their head that makes them look stupid" feature. 🤣😂 #lmao #AI #AIweirdness

![zer0int1's tweet image](https://pbs.twimg.com/media/GdvKrMHagAAKhWe.jpg)

#CLIP 'looking at' (gradient ascent) a fake image (#sparse #autoencoder idx 3293 one-hot vision transformer (!) embedding). Has vibes similar to #AI's adverb neuron.🤓😂 🤖: pls aha ... 🤖: go aha ... hey lis carley ... 🤖: go morro ... thanks morro dealt ... go thub ... ... .
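
Decoding a one-hot latent, as in the "idx 3293 one-hot" embedding mentioned here, amounts to reading out one row of the SAE decoder (plus its bias). A minimal NumPy sketch, assuming a decoder matrix `W_dec` of shape (n_latents, d_embed) and a bias `b_dec`; the function name, the random stand-in weights and the sizes are hypothetical:

```python
import numpy as np

def one_hot_latent_embedding(W_dec, b_dec, idx, scale=1.0, normalize=True):
    """Decode a latent vector with only unit `idx` active into embedding space.

    W_dec: (n_latents, d_embed) decoder matrix; b_dec: (d_embed,) decoder bias.
    """
    z = np.zeros(W_dec.shape[0])
    z[idx] = scale                       # one-hot (optionally scaled) latent code
    emb = z @ W_dec + b_dec              # equals scale * W_dec[idx] + b_dec
    if normalize:
        emb = emb / np.linalg.norm(emb)  # unit length, matching CLIP's normalized embeddings
    return emb

# Usage with a random stand-in decoder (hypothetical sizes).
rng = np.random.default_rng(0)
W_dec, b_dec = rng.normal(size=(4096, 512)), np.zeros(512)
emb = one_hot_latent_embedding(W_dec, b_dec, idx=3293)
```

The resulting unit vector could be used wherever a CLIP embedding is expected; how the author then fed it into gradient ascent is not shown in the tweet.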

Testing a #sparse #autoencoder trained on #CLIP with #COCO 40k (normal human labels, e.g. "a cat sitting on the couch"). Yes, the #SAE can generalize to CLIP's self-made #AI-opinion gradient-ascent embeddings.🤩 The cat getting teabagged may be a legit "nearby concept" in context.😘😂 #AIart

Reconstructed #sparse #autoencoder embeddings vs. #CLIP's original text embedding (its #AI self-made 'opinion') for a simple black-on-white emoji input image. Model inversion thereof: #SAE wins. Plus, CLIP was also 'thinking' of A TEXT (symbols, letters) when 'seeing' this image.🤗🙃

These were generated with guidance from text embeddings that #CLIP made (via gradient ascent) while looking at an image of one of its own neurons, which it found to be "hallucinhorrifying trippy machinelearning". Pipeline: CLIP embeddings -> trained-on-CLIP #sparse #autoencoder (nuke T5) -> guidance. #AIart #Flux1

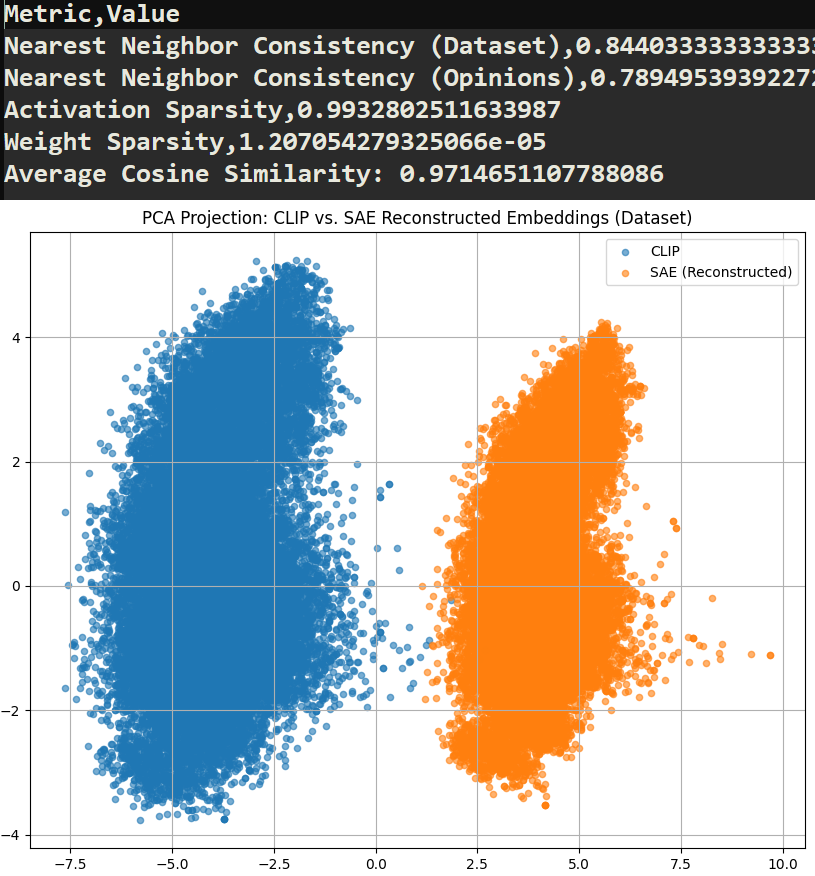

w00t best #sparse #autoencoder for the #AI so far \o/ I kinda maxed out the reconstruction quality @ 97% cossim. I can stop optimizing this ball of mathematmadness now. 😅 Tied encoder / decoder weights + extra training on #CLIP's "hallucinwacky ooooowords" ('opinions'). 😂
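
"Tied encoder / decoder weights" usually means the decoder reuses the transposed encoder matrix instead of learning its own, and "cossim" is the cosine similarity between each input and its reconstruction. A sketch of both, assuming a ReLU SAE in NumPy; the function names, shapes and random weights are hypothetical:

```python
import numpy as np

def tied_sae_forward(x, W, b_enc, b_dec):
    """Tied-weight SAE: the decoder reuses the encoder matrix, transposed."""
    z = np.maximum(0, (x - b_dec) @ W + b_enc)  # W: (d_in, d_latent)
    x_hat = z @ W.T + b_dec                      # decoder is W.T, no separate matrix
    return z, x_hat

def mean_cosine_similarity(a, b, eps=1e-8):
    """Average row-wise cosine similarity between originals and reconstructions."""
    num = np.sum(a * b, axis=-1)
    den = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps
    return float(np.mean(num / den))

# Usage with random stand-in data (an untrained W gives an arbitrary score).
rng = np.random.default_rng(0)
x = rng.normal(size=(16, 64))
W = rng.normal(0, 0.1, (64, 256))
z, x_hat = tied_sae_forward(x, W, np.zeros(256), np.zeros(64))
print(f"mean cossim: {mean_cosine_similarity(x, x_hat):.3f}")
```

Tying halves the number of weight matrices to learn; a mean cosine similarity of 0.97 would correspond to the "97% cossim" quoted in the tweet.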

Time to train a good #sparse #autoencoder config on the real stuff (residual stream). I guess the current #SAE was too sparse for this level of complexity. And now it takes a 'non-insignificant' amount of time to train one, too, ouch!🙃 Sparsity: 0.96887 Dead Neurons Count: 0
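
The tweet doesn't define its "Sparsity" and "Dead Neurons Count" metrics. Assuming sparsity means the fraction of latent activations that are zero over a batch, and a dead neuron is a latent that never fires on that batch, both can be computed like this (the `sparsity_stats` helper is hypothetical):

```python
import numpy as np

def sparsity_stats(z, tol=0.0):
    """Return (fraction of inactive activations, number of latents that never fire).

    z: (n_samples, n_latents) non-negative activations, e.g. ReLU outputs.
    """
    active = z > tol
    sparsity = 1.0 - active.mean()           # share of entries that are "off"
    dead = int(np.sum(~active.any(axis=0)))  # columns with no activation at all
    return float(sparsity), dead

z = np.array([[0.0, 1.0, 0.0, 0.0],
              [0.0, 2.0, 0.0, 3.0]])
print(sparsity_stats(z))  # -> (0.625, 2)
```

Under that reading, a sparsity of 0.96887 would mean roughly 3% of latent activations are non-zero.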

Excited to have presented my poster at CVIP 2024! It was a valuable experience to share my work and connect with the research community. #cvip2024 #ArtificialIntelligence #autoencoder #isro

🖼️🖼️ #Hyperspectral Data #Compression Using Fully Convolutional #Autoencoder ✍️ Riccardo La Grassa et al. 🔗 brnw.ch/21wPykC

🖐️🖐️ A Combination of Deep #Autoencoder and Multi-Scale Residual #Network for #Landslide Susceptibility Evaluation ✍️ Zhuolu Wang et al. 🔗 mdpi.com/2072-4292/15/3…

A Latent Diffusion Model for Protein Structure Generation openreview.net/forum?id=8zzje… #autoencoder #proteins #biomolecules

Catch the ‘Using AI/ML to Drive Multi-Omics Data Analysis to New Heights’ webinar tomorrow afternoon. Speaking second is Ibrahim Al-Hurani from @mylakehead, presenting #autoencoder and #GAN approaches for #multiomics. Join us tomorrow: hubs.la/Q02H55cS0

HQ-VAE: Hierarchical Discrete Representation Learning with Variational Bayes openreview.net/forum?id=1rowo… #autoencoder #quantization #autoencoding

Day 16 of my summer fundamentals series: Built an Autoencoder from scratch in NumPy. Learns compressed representations by reconstructing inputs. Encoder reduces, decoder rebuilds. Unsupervised and powerful for denoising, compression, and more. #MLfromScratch #Autoencoder #DL
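
A from-scratch NumPy autoencoder along these lines might look like the following sketch: a tanh encoder compresses each input, a linear decoder rebuilds it, and plain gradient descent minimizes the mean squared reconstruction error. Setting `noise_std > 0` corrupts the inputs while keeping clean targets, which turns it into a denoising autoencoder. The toy sine-wave data, layer sizes, learning rate and function names are illustrative assumptions, not the author's code.

```python
import numpy as np

def make_signals(n, d=32, seed=0):
    """Toy data: unit-amplitude sine waves with random phase, one per row."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0, 2 * np.pi, d)
    return np.sin(t + rng.uniform(0, 2 * np.pi, (n, 1)))

def train_autoencoder(x, hidden=8, noise_std=0.0, lr=0.1, epochs=3000, seed=0):
    """Full-batch gradient descent on MSE; noise_std > 0 gives a denoising AE."""
    rng = np.random.default_rng(seed)
    d = x.shape[1]
    W1, b1 = rng.normal(0, 0.1, (d, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0, 0.1, (hidden, d)), np.zeros(d)
    for _ in range(epochs):
        x_in = x + rng.normal(0, noise_std, x.shape) if noise_std > 0 else x
        h = np.tanh(x_in @ W1 + b1)          # encoder: d -> hidden (compression)
        out = h @ W2 + b2                    # decoder: hidden -> d (reconstruction)
        g_out = 2 * (out - x) / x.size       # dMSE/dout; target is always the clean x
        g_h = (g_out @ W2.T) * (1 - h ** 2)  # backprop through tanh (pre-update W2)
        W2 -= lr * (h.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (x_in.T @ g_h)
        b1 -= lr * g_h.sum(axis=0)
    return W1, b1, W2, b2

def reconstruct(x, params):
    W1, b1, W2, b2 = params
    return np.tanh(x @ W1 + b1) @ W2 + b2

# Usage: train as a denoiser, then compare errors against the clean signals.
params = train_autoencoder(make_signals(200), noise_std=0.3)
clean = make_signals(50, seed=1)
noisy = clean + np.random.default_rng(2).normal(0, 0.3, clean.shape)
mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((reconstruct(noisy, params) - clean) ** 2)
print(f"MSE noisy: {mse_noisy:.3f}  MSE after AE: {mse_denoised:.3f}")
```

Full-batch gradient descent keeps the example short; for real datasets, mini-batches and an optimizer such as Adam are the more usual choice.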

👋👋 Unsupervised #Transformer Boundary #Autoencoder Network for #Hyperspectral Image #Change Detection ✍️ Song Liu et al. 🔗 brnw.ch/21wOvyQ
