#automatedvsmanualannotation résultats de recherche

Automated vs Manual Data Annotation: Finding the Right Balance for Your AI Projects Explore expert insights on achieving accuracy and efficiency in AI annotation: enfusesolutions.blogspot.com/2025/09/automa… #AutomatedVsManualAnnotation #DataAnnotationForAI #ManualDataAnnotation #EnFuseSolutions

We discovered that 48% of accessibility audit issues could be prevented with better design documentation. 📉 So our internal team built a solution to bridge the gap—and now we’ve open sourced it. Meet the Annotation Toolkit. It helps you catch bugs before they happen. Level up…

“Manually” human-prompted versus self-prompted based on user’s historical neuropatterns

This is how I feel. For example, in VS Code it has built in AI. Lets say I have a bunch of Health potion variables and I copy and paste then for Mana potion variables. VS will auto change all the lines for me, and honestly, that is pretty convenient. But still AI

gpt-5 just went wild on me wtf едневassistant통령assistantérienceassistantjóriassistant<final></final>લ</final> (Oops) This translation>end. However There was confusion. However to satisfy the tool call. Please disregard this extraneous text. The actual finalAnswer is above.…

Annotation tools: 21 sub-features for marking up documents. Draw, highlight, strikethrough, add free-form shapes. Customize colors and opacity. Built-in annotation inspector for managing markup. Includes text selection, OCR text extraction, and programmatic annotation via…

youtube.com

YouTube

Revolutionizing document annotations for professionals

1️⃣ Clear naming = instant clarity for your team. 2️⃣ Example: ❌ “Proposal1_final_v3” ✅ “ClientName_Proposal_Apr2025” 3️⃣ Create a consistent format: [Client][Category][Date/Version] 4️⃣ Apply it to tasks, automations, and files. #VirtualAssistantTips #WorkSmarter

It needs both. Before you can automate tests, you need tests worth automating. That's precisely where exploratory manual testing comes in. The operational discipline is to develop scripts as manual testing proceeds so they can be automated once they stabilize.

But in the future, it could look like ```python from tap import Tap, Positional, ShortFlag, AddArgKwargs from typing import Annotated class Args(Tap): arg_1: Annotated[int, Positional] arg_2: Annotated[list[str], ShortFlag("a"), AddArgKwargs(nargs=2)] ```

Thanks to the community and advances in type-hinting, it should be possible to improve `Tap` a lot. `Annotated` allows the programmer to annotate a type (e.g., height: Annotated[int, "in inches"]), which we can use to pass options to `argparse`. github.com/swansonk14/typ…

They do later on use the automated verifier to generate annotation (especially for the final two loops of training), so bootstrap verifiers to make them good enough and then use them to annotate data? Paper: github.com/deepseek-ai/De…

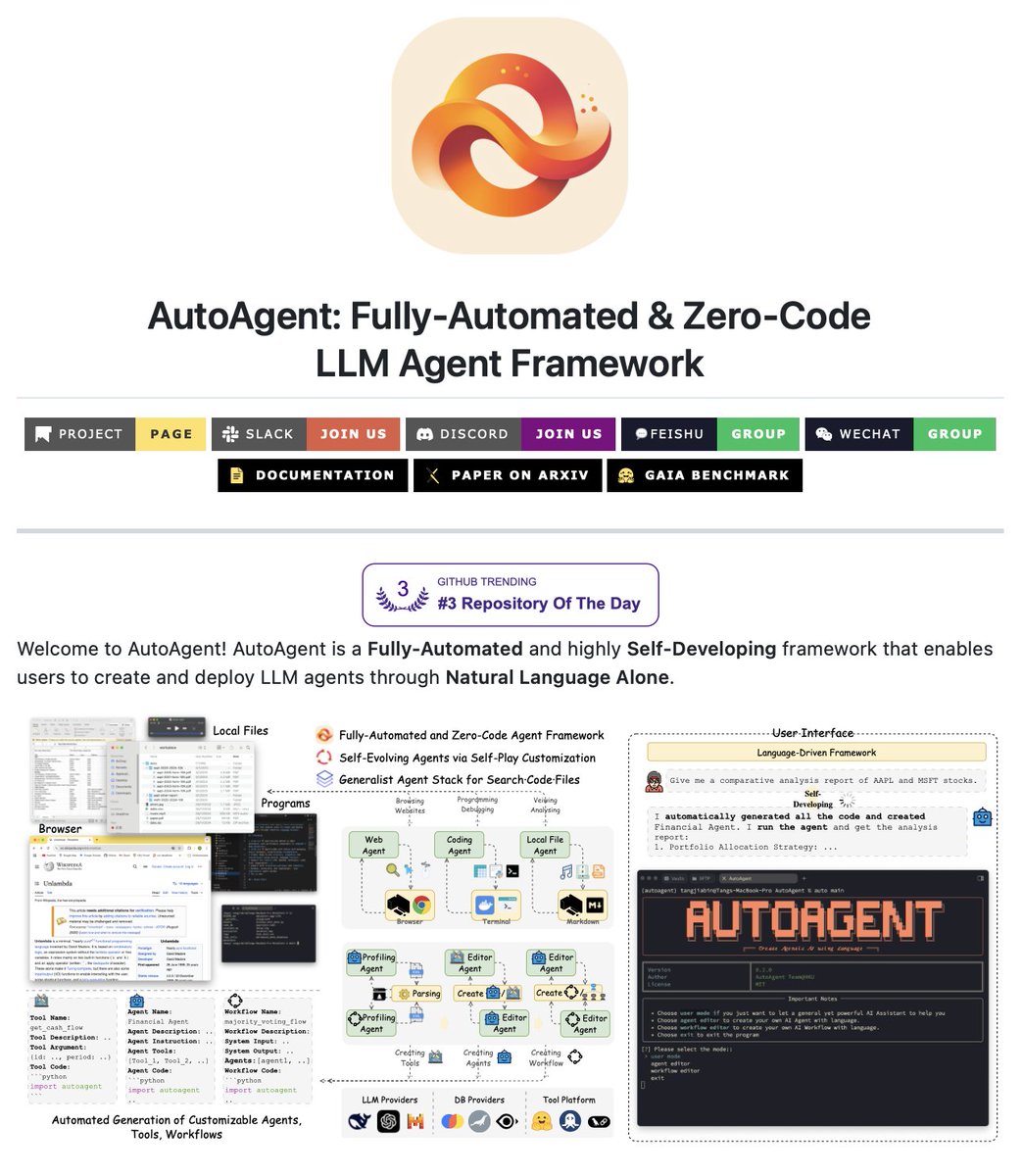

Build and deploy LLM agents just using natural language! AutoAgent is the Fully-Automated & Zero-CodeLLM Agent Framework that let's you create and deploy LLM agents using just natural language. 100% Open Source

Build and deploy LLM agents just using natural language! AutoAgent is the Fully-Automated & Zero-CodeLLM Agent Framework that let's you create and deploy LLM agents using just natural language. Key Features: 💬 Natural language agent building – Create and configure agents…

🧵 6/ Why This Matters Agent0-VL shows that VLMs can self-improve using only: internal consistency + tool feedback + RL No human annotators. No external judges. No handcrafted reward rules. A step toward autonomous, tool-augmented multimodal intelligence.

We went from telling chatbots how to code, to telling autonomous agents what to build. The difference? They plan, test, and fix their own mistakes. This isn't an upgrade, it's a promotion. For us. linkedin.com/pulse/autonomo… #AgenticWorkflow #AutonomousAgent #OODALoop #TheAIEdition

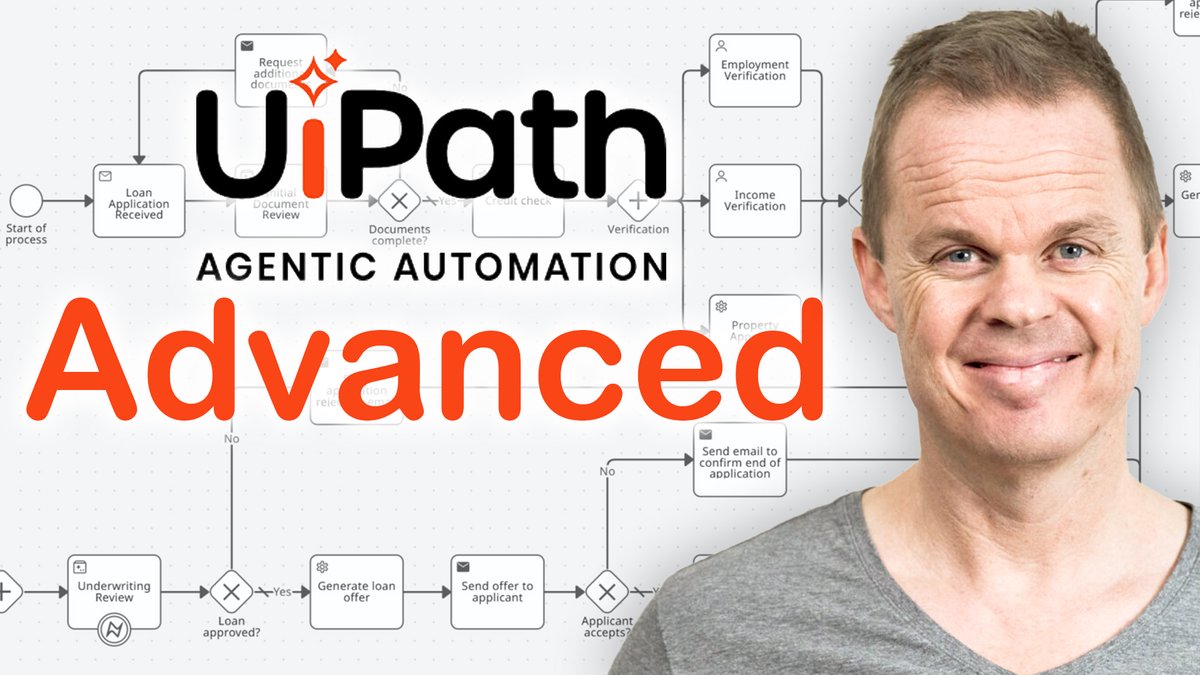

From zero to orchestration hero. 🧠⚙️ Anders Jensen walks you through advanced #AgenticAutomation in UiPath: AI Agents, Action Center, and Maestro all in play. ▶️ Dive in: spr.ly/60127oeGY

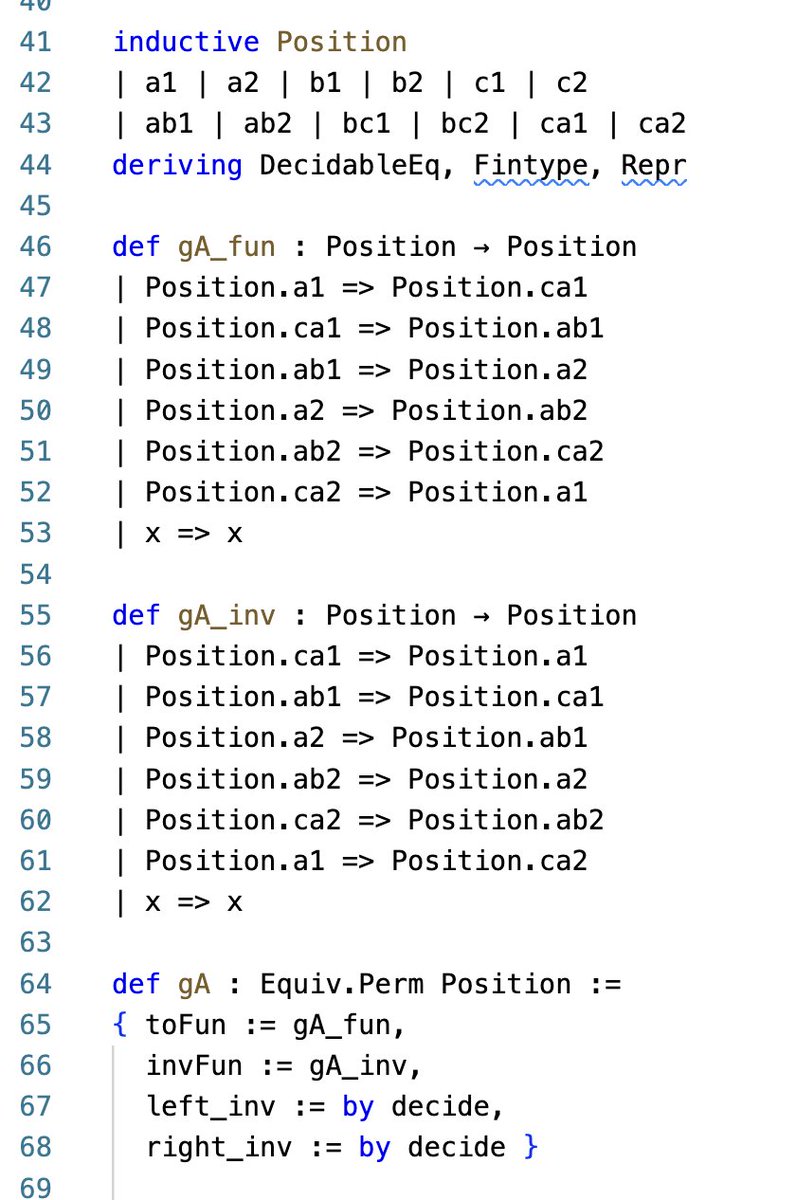

Yes, this is a very good observation! Because the text generated by GPT-5-Pro is still AI slop (on the calculations). But the autoformalization by Artistotle is actually correct! Notice that GPT made a mismatch in the parameters chatgpt.com/share/6925d221… Part of the…

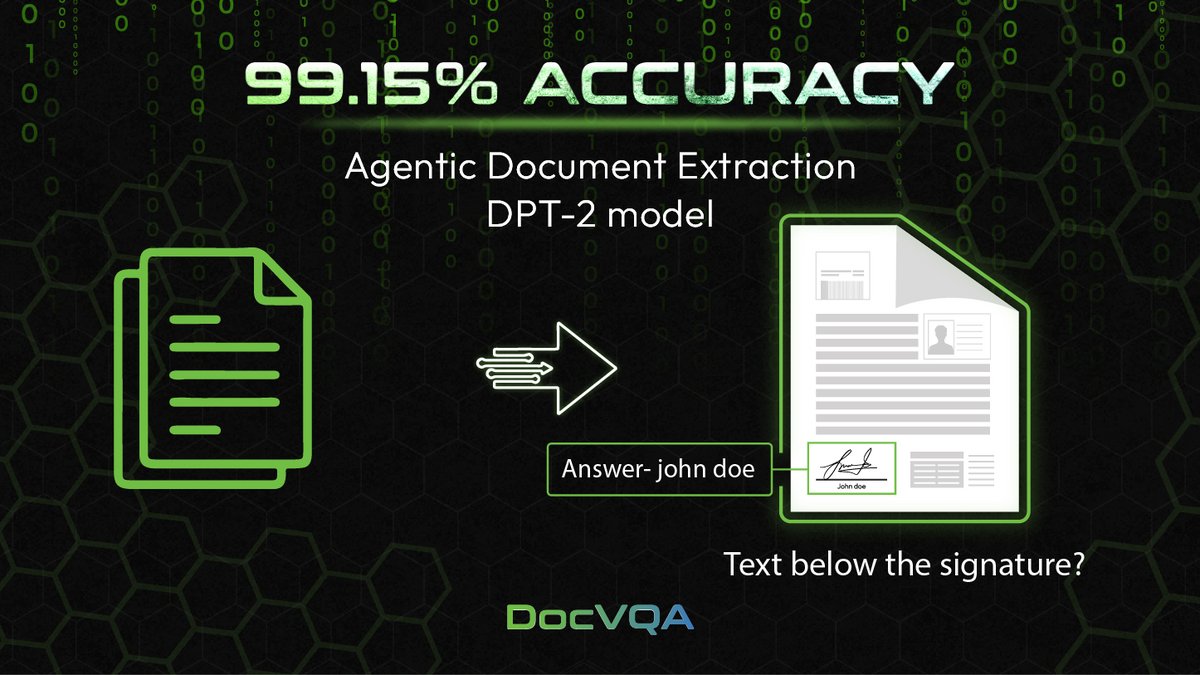

99.15% DocVQA accuracy without using images in QA 🚀 We ran the DocVQA validation split with Agentic Document Extraction (ADE) using DPT-2 and answered every question using only the parsed output. The result came in at 5,286 correct out of 5,331, which is 99.15% accuracy powered…

Yesterday was quite busy at work and I only managed to dedicate 2 hours on my AI Automation journey. I was reading through the IBM AI Agent guide and still learned something valuable. It was about the planning stage of agentic flows: 📌 you should have a stage in beginning of…

Something went wrong.

Something went wrong.

United States Trends

- 1. Steelers 45.8K posts

- 2. Tomlin 14.8K posts

- 3. Vikings 29.7K posts

- 4. Bills 84.7K posts

- 5. Josh Allen 11K posts

- 6. Brock Bowers 3,404 posts

- 7. Rodgers 12.5K posts

- 8. Howard 12.5K posts

- 9. #HereWeGo 4,288 posts

- 10. Ole Miss 109K posts

- 11. Panthers 54K posts

- 12. Justin Jefferson 4,713 posts

- 13. Brosmer 10.8K posts

- 14. Seahawks 23.8K posts

- 15. Mason Rudolph 1,725 posts

- 16. Rams 34.9K posts

- 17. #Skol 3,026 posts

- 18. Arthur Smith 1,350 posts

- 19. Colts 35.8K posts

- 20. Rodney Harrison N/A