#deeplearningtips 검색 결과

Can you guess which activation function is best for handling vanishing gradient issues? Drop your answer! #AI #DeepLearningTips

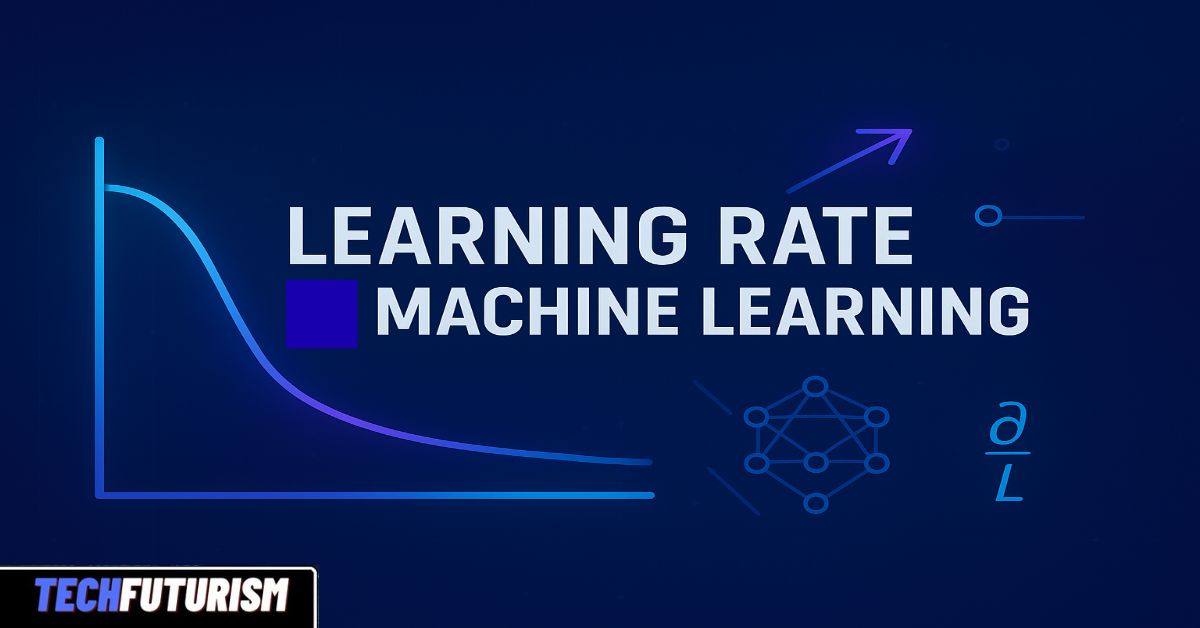

Mastering Learning Rate Machine Learning: A Powerful Guide for Positive Results techfuturism.com/mastering-lear… #DeepLearningTips, #LearningRate, #MachineLearningBasics, #MLTraining

💡 ML Snippet of the Day: Have you tried 'Gradient Clipping'? This technique keeps gradients from exploding, helping models train more smoothly! Useful in deep learning to avoid instability issues. 🚀 #MachineLearning #DeepLearningTips

Why is prompt engineering crucial? 🤔 It helps in maximizing the utility of a model, especially in zero-shot or few-shot scenarios. The better the prompt, the better the result! #DeepLearningTips

Hacking on an instance segmentation deep learning project? Check out the Cityscapes and COCO datasets. #DeepLearningTips

3. Non-Zero-Centered Output Sigmoid outputs only positive values, which can create unbalanced gradients. During backprop, this can lead to zig-zagging in gradient updates, making training slower. Alternative functions like ReLU can avoid this. #DeepLearningTips #Sigmoid

Beyond backprop: Weight mirroring at initialization. Forces rapid convergence, avoids local minima. Few understand the power of symmetry. #AISymmetry #ModelAlchemy #DeepLearningTips

8. Using Pre-Trained Layers in Deep Learning youtu.be/OI-XyyCEnOc?si… via @YouTube #AIModel, #DeepLearningTips, #TransferLearningForBeginners, #MachineLearningTips, #PreTrainedLayers, #AIProjects, #DataScienceTips, #AICommunity, #NeuralNetworkTraining, #ML

youtube.com

YouTube

8. Using Pre-Trained Layers in Deep Learning

#deeplearning #deeplearningtips #learningtips #transformers #gpt3 #gpt2 #gpt #bert #learning #tips youtu.be/bvBK-coXf9I

So you don't have money but you want to start doing some deep learning? #deeplearning #deeplearningtips #hardware #cloud #budgetpc #budgetdeeplearning #cheaphardware #cheap youtu.be/rcIrIhNAe_c

youtube.com

YouTube

Cheapest (0$) Deep Learning Hardware Options | 2021

Beyond backprop: Weight mirroring at initialization. Forces rapid convergence, avoids local minima. Few understand the power of symmetry. #AISymmetry #ModelAlchemy #DeepLearningTips

Can you guess which activation function is best for handling vanishing gradient issues? Drop your answer! #AI #DeepLearningTips

3. Non-Zero-Centered Output Sigmoid outputs only positive values, which can create unbalanced gradients. During backprop, this can lead to zig-zagging in gradient updates, making training slower. Alternative functions like ReLU can avoid this. #DeepLearningTips #Sigmoid

💡 ML Snippet of the Day: Have you tried 'Gradient Clipping'? This technique keeps gradients from exploding, helping models train more smoothly! Useful in deep learning to avoid instability issues. 🚀 #MachineLearning #DeepLearningTips

Why is prompt engineering crucial? 🤔 It helps in maximizing the utility of a model, especially in zero-shot or few-shot scenarios. The better the prompt, the better the result! #DeepLearningTips

#deeplearning #deeplearningtips #learningtips #transformers #gpt3 #gpt2 #gpt #bert #learning #tips youtu.be/bvBK-coXf9I

So you don't have money but you want to start doing some deep learning? #deeplearning #deeplearningtips #hardware #cloud #budgetpc #budgetdeeplearning #cheaphardware #cheap youtu.be/rcIrIhNAe_c

youtube.com

YouTube

Cheapest (0$) Deep Learning Hardware Options | 2021

Hacking on an instance segmentation deep learning project? Check out the Cityscapes and COCO datasets. #DeepLearningTips

Can you guess which activation function is best for handling vanishing gradient issues? Drop your answer! #AI #DeepLearningTips

Mastering Learning Rate Machine Learning: A Powerful Guide for Positive Results techfuturism.com/mastering-lear… #DeepLearningTips, #LearningRate, #MachineLearningBasics, #MLTraining

Something went wrong.

Something went wrong.

United States Trends

- 1. Cyber Monday 47.7K posts

- 2. TOP CALL 11.4K posts

- 3. Adam Thielen 2,398 posts

- 4. GreetEat Corp. N/A

- 5. #Rashmer 18.3K posts

- 6. Shakur 5,829 posts

- 7. Alina Habba 31.7K posts

- 8. #IDontWantToOverreactBUT 1,472 posts

- 9. #GivingTuesday 2,881 posts

- 10. Shopify 4,832 posts

- 11. $MSTR 17.3K posts

- 12. Check Analyze N/A

- 13. Token Signal 3,590 posts

- 14. Hartline 2,737 posts

- 15. John Butler N/A

- 16. UCLA 6,869 posts

- 17. Market Focus 2,932 posts

- 18. #MondayMotivation 10.5K posts

- 19. Chesney 2,618 posts

- 20. Melania Trump 38.3K posts