#distributedparallelsystems search results

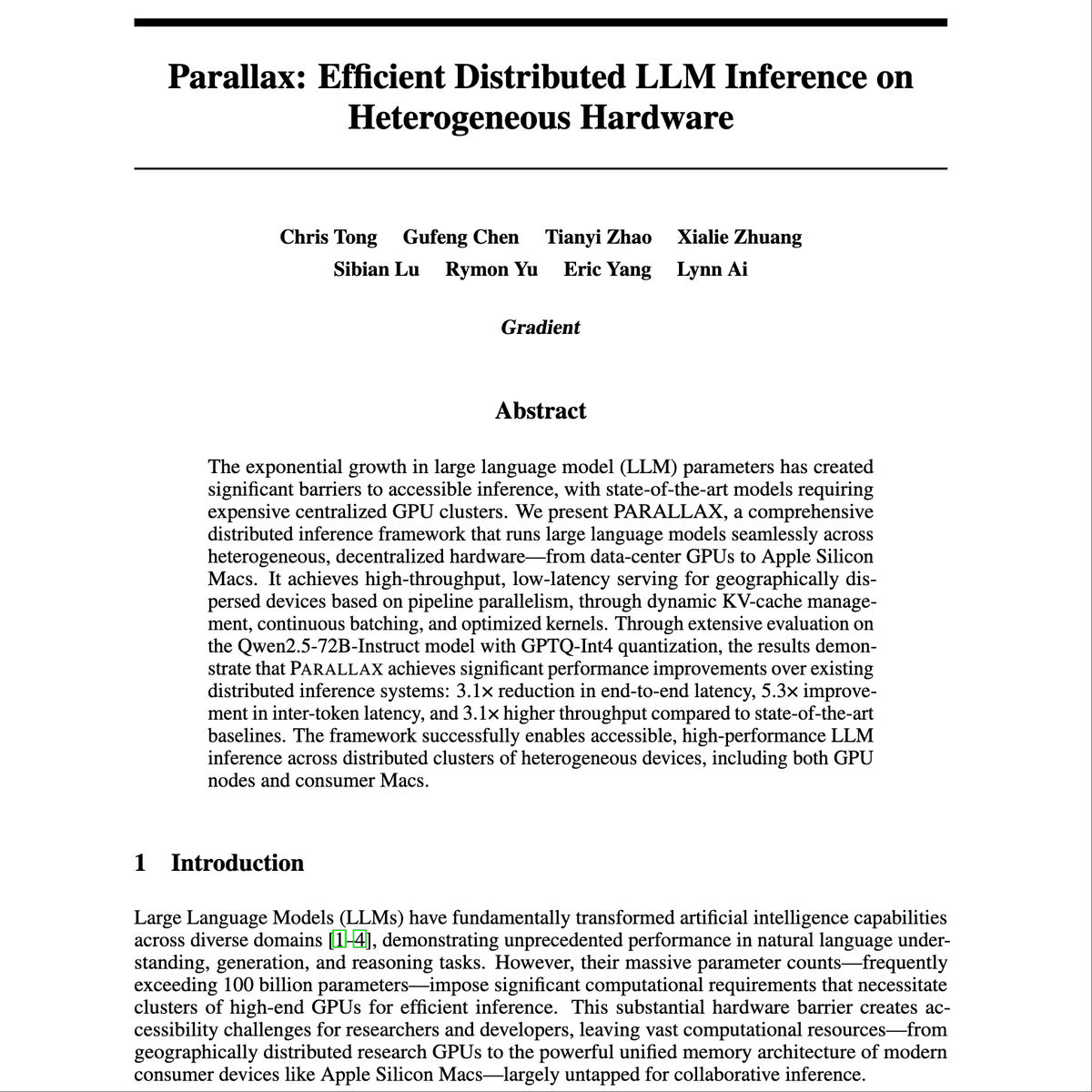

Ever wondered how our chatbot replies in seconds without a central server? It runs on Parallax’s Swarm: a fully decentralized mesh where your prompt is tokenized, segmented, and routed across nodes holding model shards. Each node executes its assigned layers of the LLM, passing…
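The post describes pipeline-style model sharding: each node holds a contiguous slice of the model's layers and passes activations on to the next node. Here is a minimal sketch of that idea. It uses toy affine functions in place of real transformer layers and a hypothetical word-length "tokenizer". The names `tokenize`, `make_layer`, `shard_layers` and `run_pipeline` are illustrative and are not Parallax's API.

```python
from typing import Callable, List

Layer = Callable[[List[float]], List[float]]

def tokenize(prompt: str) -> List[float]:
    # Hypothetical toy tokenizer: one "embedding" per word (its length).
    return [float(len(word)) for word in prompt.split()]

def make_layer(scale: float, bias: float) -> Layer:
    # Toy stand-in for a transformer layer: an element-wise affine map.
    return lambda xs: [scale * x + bias for x in xs]

def shard_layers(layers: List[Layer], num_nodes: int) -> List[List[Layer]]:
    # Give each node a contiguous slice of layers (pipeline parallelism).
    per_node, extra = divmod(len(layers), num_nodes)
    shards, start = [], 0
    for i in range(num_nodes):
        end = start + per_node + (1 if i < extra else 0)
        shards.append(layers[start:end])
        start = end
    return shards

def run_pipeline(shards: List[List[Layer]], activations: List[float]) -> List[float]:
    # Each "node" runs its own layers, then hands the activations to the next node.
    for shard in shards:
        for layer in shard:
            activations = layer(activations)
    return activations

layers = [make_layer(1.0 + i / 10, float(i)) for i in range(5)]
shards = shard_layers(layers, num_nodes=2)
print([len(s) for s in shards])  # [3, 2]
x = tokenize("hello distributed world")
print(run_pipeline(shards, x) == run_pipeline([layers], x))  # True
```

The shards run in order, so splitting the layers across nodes does not change the result. What sharding buys you is that no single node has to hold the whole model.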

I just went through all the projects on the @DeSciNews site and found something really interesting. Of the 17 projects on the site, 11 have a DAO and/or voting, and 15 take part in or work with external DAOs. DAOs are super difficult to navigate and aren’t even…

Introducing RND1, the most powerful base diffusion language model (DLM) to date. RND1 (Radical Numerics Diffusion) is an experimental DLM with 30B params (3B active) with a sparse MoE architecture. We are making it open source, releasing weights, training details, and code to…
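"30B params (3B active)" means each token passes through only a small subset of the experts. The post does not say how RND1 routes tokens. The sketch below assumes common top-k softmax gating and uses toy scalar experts. The gate logits are made up and stand in for a learned router.

```python
import math
from typing import Callable, List, Sequence, Tuple

Expert = Callable[[float], float]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: float, gate_logits: Sequence[float],
                experts: Sequence[Expert], k: int) -> Tuple[float, List[int]]:
    # Pick the k experts with the highest gate scores; only those experts run.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top

# Eight toy experts: expert i just multiplies its input by i (hypothetical).
experts = [lambda x, s=i: s * x for i in range(8)]
logits = [0.1, 3.0, 0.2, 0.0, 2.0, 0.3, 0.1, 0.0]
out, used = moe_forward(1.0, logits, experts, k=2)
print(used)  # [1, 4]: only 2 of the 8 experts ran for this input
```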

Intelligence has been locked in walled gardens. Today, we’re opening the gates. Parallax now runs in Hybrid mode, with Macs and GPUs serving large models together in a truly distributed framework.

Goodnight Friends 🌌 Can I get a GN? Most rollups still rely on centralized sequencers: fast, but fragile. @espressosys changes the game with shared sequencing that’s decentralized by design. It lets multiple rollups plug into a common layer, enabling fair ordering, atomic…
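To make "shared sequencing" concrete, here is a single-process toy. Bundles of transactions from several rollups go into one global ordering, and a cross-rollup bundle is included atomically. Ordering by arrival time is an assumed stand-in for "fair ordering". Nothing here reflects how Espresso actually implements it. The real system is decentralized and this sketch is not. `Tx`, `Bundle` and `sequence` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class Tx:
    rollup: str   # which rollup this transaction belongs to
    payload: str
    arrival: int  # hypothetical arrival time observed by the sequencer

@dataclass(frozen=True)
class Bundle:
    txs: Tuple[Tx, ...]  # included all together or not at all

def sequence(bundles: List[Bundle], valid: Callable[[Tx], bool]) -> List[Tx]:
    # Stand-in for fair ordering: earliest arrival first, across ALL rollups.
    ordered = sorted(bundles, key=lambda b: min(tx.arrival for tx in b.txs))
    log: List[Tx] = []
    for bundle in ordered:
        # Atomic inclusion: a single invalid tx drops the whole bundle.
        if all(valid(tx) for tx in bundle.txs):
            log.extend(bundle.txs)
    return log

def split_by_rollup(log: List[Tx]) -> Dict[str, List[str]]:
    # Each rollup reads its own slice of the single shared ordering.
    per_rollup: Dict[str, List[str]] = {}
    for tx in log:
        per_rollup.setdefault(tx.rollup, []).append(tx.payload)
    return per_rollup

bundles = [
    Bundle((Tx("A", "a1", 3),)),
    Bundle((Tx("A", "swap-out", 1), Tx("B", "swap-in", 2))),  # cross-rollup bundle
    Bundle((Tx("B", "bad", 0), Tx("A", "a2", 4))),            # contains an invalid tx
]
log = sequence(bundles, valid=lambda tx: tx.payload != "bad")
print(split_by_rollup(log))  # {'A': ['swap-out', 'a1'], 'B': ['swap-in']}
```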

Verde from @gensynai: when model computations can be verified as transactions 🔐 𝟏/𝟐. In the world of distributed learning, one of the main problems has always been trust: how can you be sure that everything you ran on someone else's equipment was done honestly and…
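The thread is cut off before it explains Verde's mechanism. The sketch below therefore shows a generic bisection-style dispute, which is an assumption and not Gensyn's protocol. A worker publishes hashes of every intermediate state. A challenger binary-searches for the first step where the traces diverge. A referee then re-executes only that one step. The "training step" is a toy deterministic function, and all names are hypothetical.

```python
import hashlib
from typing import Callable, List

Step = Callable[[int], int]

def digest(state: int) -> str:
    return hashlib.sha256(str(state).encode()).hexdigest()

def run_trace(step: Step, start: int, n: int) -> List[int]:
    # Record every intermediate state of an n-step deterministic computation.
    states = [start]
    for _ in range(n):
        states.append(step(states[-1]))
    return states

def first_divergence(claimed: List[str], reference: List[str]) -> int:
    # Binary search over hash traces; assumes they agree at index 0 and
    # disagree at the last index. Invariant: agree at lo, disagree at hi.
    lo, hi = 0, len(claimed) - 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if claimed[mid] == reference[mid]:
            lo = mid
        else:
            hi = mid
    return hi

def referee(step: Step, claimed_states: List[int], i: int) -> bool:
    # Re-execute ONLY step i from the last agreed state; True if the claim holds.
    return step(claimed_states[i - 1]) == claimed_states[i]

P = 1_000_003

def step(s: int) -> int:
    return (s * 31 + 7) % P  # hypothetical deterministic "training step"

honest = run_trace(step, 1, 100)
cheat = honest[:]
cheat[42] = (cheat[42] + 1) % P    # the worker corrupts step 42 ...
for j in range(43, 101):
    cheat[j] = step(cheat[j - 1])  # ... and continues honestly from the bad state
i = first_divergence([digest(s) for s in cheat], [digest(s) for s in honest])
print(i, referee(step, cheat, i))  # 42 False
```

The referee's work is one step instead of the full 100-step computation. That cost asymmetry is what makes this style of dispute attractive.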

Do you want to make your programs easier to parallelise? Yes, you do. Do you enjoy debugging concurrency? I bet you don't. Good news: you can now turn your favourite sequential structures into high-throughput concurrent ones without writing a single line of concurrent code ⇒
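The post does not name its technique. For contrast, the simplest way to reuse sequential code under concurrency is a coarse-grained wrapper that serializes every method call behind one lock. That approach is correct but not high-throughput, which is the gap such techniques aim to close. `Synchronized` and `SeqCounter` are illustrative names.

```python
import threading
from typing import Any

class Synchronized:
    """Coarse-grained wrapper: every method call on the wrapped object holds one lock."""

    def __init__(self, obj: Any) -> None:
        self._obj = obj
        self._lock = threading.Lock()

    def __getattr__(self, name: str) -> Any:
        # Called only for names not found on the wrapper itself.
        attr = getattr(self._obj, name)
        if not callable(attr):
            return attr  # plain attribute reads are NOT locked
        def locked(*args: Any, **kwargs: Any) -> Any:
            with self._lock:
                return attr(*args, **kwargs)
        return locked

class SeqCounter:
    # Plain sequential code whose read-modify-write is unsafe under threads.
    def __init__(self) -> None:
        self.n = 0

    def incr(self) -> None:
        self.n = self.n + 1

counter = Synchronized(SeqCounter())

def worker() -> None:
    for _ in range(10_000):
        counter.incr()

threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter.n)  # 80000
```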

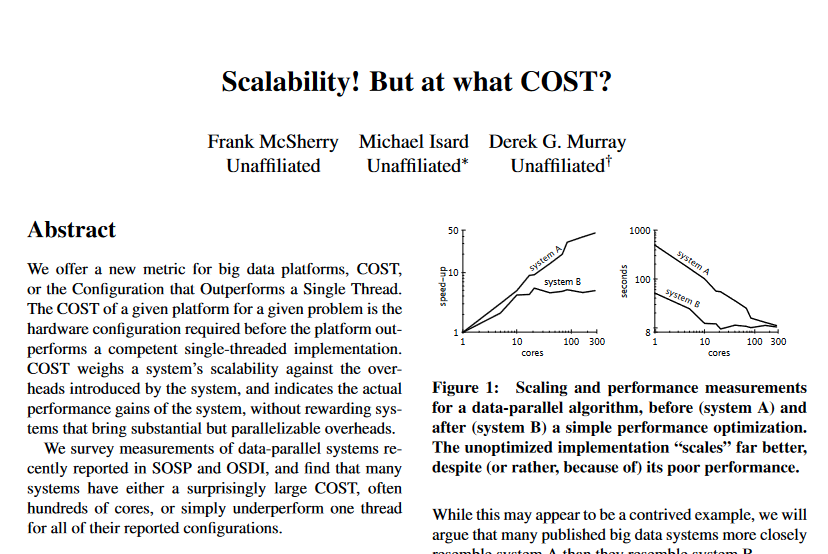

If you truly enjoy distributed systems and haven't seen this paper, you should check it out.

We've reached a major milestone in fully decentralized training: for the first time, we've demonstrated that a large language model can be split and trained across consumer devices connected over the internet, with no loss in speed or performance.

Introducing Paris, the world's first decentrally trained open-weight diffusion model. We named it Paris after the city that has always been a refuge for those creating without permission. Paris is open for research and commercial use.

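Tried decentralized, parallel-driven development with blockchain × #kamui4d! Current state: data is held in a distributed way on the blockchain with no central server, and git handles creating worktrees and archiving past history. Machine resources are split, and each machine does its own generation. Windows and Mac are connected with tailscale +…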

The Sovereign AI OS everyone deserves. Parallax lets you set up a local AI cluster across Macs and PCs, and host your own models and applications without performance compromises. Watch @alex_mirran and the crew get @Alibaba_Qwen 235B running in minutes.

Introducing Parallax, the first fully distributed inference and serving engine for large language models. Try it now: chat.gradient.network 🧵

Scalability! But at what cost? This paper is an absolute classic because it explores the underappreciated tradeoffs of distributing systems. It asks about the COST of distributed systems: the Configuration that Outscales a Single Thread. The question is, how many cores does a…
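As the post spells it out, COST is the smallest configuration that outperforms a single-threaded implementation. The sketch below computes it from runtime measurements. The timings are hypothetical and are not results from the paper.

```python
from typing import Dict, Optional

def cost(single_thread_secs: float, runs: Dict[int, float]) -> Optional[int]:
    # COST: the smallest core count whose runtime beats the single-threaded baseline.
    # None means no measured configuration beats it (an "unbounded" COST).
    for cores in sorted(runs):
        if runs[cores] < single_thread_secs:
            return cores
    return None

# Hypothetical measurements in seconds, not taken from the paper.
single_thread = 300.0
runs = {1: 1500.0, 16: 400.0, 64: 250.0, 128: 180.0}
print(runs[1] / runs[128])        # self-relative speedup, about 8.3x
print(cost(single_thread, runs))  # 64
```

In this made-up example, the system scales about 8x relative to itself yet needs 64 cores just to beat one thread. That is the kind of tradeoff the post says the paper exposes.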

Distributed Systems Basics (CAP Theorem, Consistency Models)

What are Distributed Systems?
→ A distributed system is a group of computers working together as a single system
→ They communicate over a network to share tasks and data
→ Benefits → Scalability, reliability,…
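The post is truncated before its consistency-model section. Quorum replication is used here as an illustrative example; the post does not name it. A read is guaranteed to see the latest acknowledged write when every read quorum overlaps every write quorum, which holds exactly when R + W > N. The sketch checks that rule against brute force and shows a stale replica being outvoted. The version/value layout of `replicas` is an assumption.

```python
from itertools import combinations
from typing import List, Sequence, Tuple

def overlap_rule(n: int, r: int, w: int) -> bool:
    # Every read quorum intersects every write quorum iff R + W > N.
    return r + w > n

def brute_force_overlap(n: int, r: int, w: int) -> bool:
    # Check the rule by enumerating every possible read and write quorum.
    nodes = range(n)
    return all(set(rq) & set(wq)
               for rq in combinations(nodes, r)
               for wq in combinations(nodes, w))

def quorum_read(replicas: List[Tuple[int, str]], read_set: Sequence[int]) -> str:
    # Return the value carrying the highest version among the replicas contacted.
    return max((replicas[i] for i in read_set), key=lambda vv: vv[0])[1]

assert all(overlap_rule(n, r, w) == brute_force_overlap(n, r, w)
           for n in range(1, 6) for r in range(1, n + 1) for w in range(1, n + 1))

# N=3, W=2: the write of version 2 reached replicas 0 and 1; replica 2 is stale.
replicas = [(2, "new"), (2, "new"), (1, "old")]
print([quorum_read(replicas, rs) for rs in combinations(range(3), 2)])  # ['new', 'new', 'new']
print(quorum_read(replicas, [2]))  # old, because with R=1, R + W = 3 is not > N = 3
```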

The future of AI isn’t just in “big models”; it’s in distributed scale. Gradient Parallax is the real game changer: host LLMs across your own devices (Mac, Linux, GPU clusters) with no central cloud needed. Build, share, and deploy faster and cheaper. @gradientintern @Gradient_HQ

Have you read this book? If not, read it now; you will thank me later. Buy here: amazon.com/Think-Distribu… (aff)

Distributed 5000x5000 matrix multiplication on my two laptops, collaborating in the execution. I’m simulating multiple peers connected to a network, where tinyp2p distributes the computational load: one node submits the task to the network, where it is processed and divided into…
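The post cuts off before describing how tinyp2p splits the work, and its API is not shown. The sketch below therefore assumes a simple row-block partition. The submitting node splits A into row blocks, each "peer" multiplies its block by B, and the blocks are stitched back together in order. Threads stand in for networked peers here, so the sketch shows the decomposition but not a real speedup. All names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]

def matmul_rows(a_rows: Matrix, b: Matrix) -> Matrix:
    # Work done by one peer: multiply its block of A's rows by all of B.
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a_rows]

def split_rows(a: Matrix, peers: int) -> List[Matrix]:
    # Contiguous row blocks of near-equal size (ceiling division, at least 1 row).
    size = max(1, -(-len(a) // peers))
    return [a[i:i + size] for i in range(0, len(a), size)]

def distributed_matmul(a: Matrix, b: Matrix, peers: int) -> Matrix:
    # The submitter splits A, each worker computes one block, and
    # pool.map returns the results in submission order so the rows line up.
    blocks = split_rows(a, peers)
    with ThreadPoolExecutor(max_workers=peers) as pool:
        results = list(pool.map(lambda blk: matmul_rows(blk, b), blocks))
    return [row for block in results for row in block]

A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8], [9, 10]]
print(distributed_matmul(A, B, peers=2))  # [[25, 28], [57, 64], [89, 100]]
```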

Async APIs for scalable AI. Fault proofs for secure AI. WebGPU for accessible AI. It all connects here. 👀

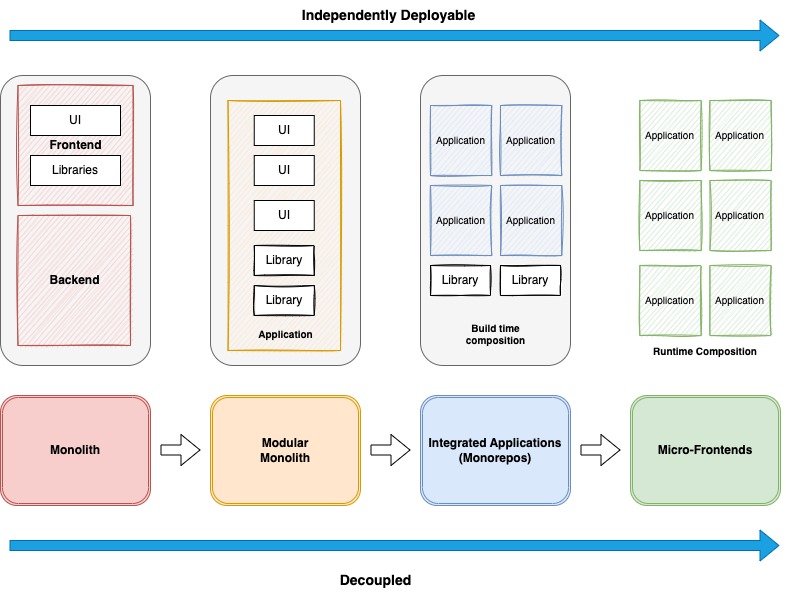

You (probably) don't need Micro-Frontends. This is the "Distributed and Decoupled Spectrum". Make sure that you explore all the other options before trying to implement a fully distributed system.
