#explainai search results

Delightful to be at #SiliconFlatirons @ColoLaw for #ExplainAI w/ @MargotKaminski & @Sharon_B_Jacobs!

8. 📚 “Explain this complex topic (AI/Finance) in Gen Z language using [ExplainLikeImFive or ChatGPT].” #ExplainAI #SmartSimplicity

Explain anything on your screen with AI "Search With Google Lens" Option on Chrome browser allows you to explain anything on your screen with AI. #googlelens #explainai #ai

Back at #ExplainAI at #SiliconFlatirons: Comparative legal approaches to explainability. @MargotKaminski moderates all-star panel of @megleta, @JcMalgieri, @aselbst, @caffsouza, & @mikarv

.@HarrySurden helpfully explains that "'zed' is Australian for 'z'" at #explainai. #loveit.

.@JcMalgieri asks: what have EU member states done to implement EU GDPR's explainability requirement? - Just 9've implemented Art. 22 (incl a bunch of big ones) - big diffs (how significant effects trigger?) - Slovenia just covers negative effects! #ExplainAI #SiliconFlatirons

Netherlands just implements in public decisions. Germany has other sectorial restrictions. France has 3 levels: judicial (automated decisions forbidden), administrative (some safeguards), private (more things allowed) Hungary: future law will permit...we'll see! #ExplainAI

"The biggest difference between the EU and the US is that in the US we don't regulate any more." @aselbst #SiliconFlatirons #ExplainAI

Data are made available by institutions...but these tend to be *interested* institutions, and we should be careful with those data. Pierre Schlag #siliconflatirons #explainAI

"By observing this [#ExplainAI] panel, you would discover that engineers wear glasses because they are cool, and lawyers do not because they are cool. Observing the previous panel, you would come to a different conclusion." Indeed. Joshua Kroll #SiliconFlatirons

"To the extent that the US has an explanation regime, it's from credit laws" (e.g., adverse action notice from Fair Credit Reporting Act) @aselbst #SiliconFlatirons #ExplainAI

Fairness is nice, but we disagree on how to operationalize it. Callout to @ArtDecider, which has solved that other contestable concept. Kroll @ #ExplainAI

Motivations around EU automated-decision rules have to be seen in light of concerns about incorrect data. @mikarv @ #ExplainAI

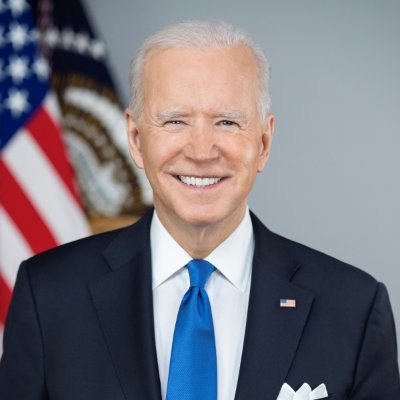

When it comes to AI, we must both support responsible innovation and ensure appropriate guardrails to protect folks’ rights and safety. Our Administration is committed to that balance, from addressing bias in algorithms – to protecting privacy and combating disinformation.

The right to a human in the loop is a regulatory feature of EU that is completely foreign to US rules--and has been for a while. @MegLeta @ #ExplainAI

Want ability to intervene in a machine in real time, not just contest after the fact a decision that is handed to us. @DMulliganUCB #siliconflatirons #explainAI

Yeh: I have a one year old and a 7 year old. For my one year old, if something looks like this <<points to water bottle>> he will speak to it #siliconflatirons #explainAI

"Where is my tool to check data for fairness?" is not the way to operationalize these concepts. Our model is more mental than technological. Look at development of tech lifecycle, all the way to deployment, what are questions we shd be asking? Philips #siliconflatirons #explainAI

I'm so pleased that @MargotKaminski is masterfully livetweeting #explainAI @ #SiliconFlatirons so I don't have to worry about conveying content and can just focus on occasional snark.

Explainable AI dashboards visualize mood drivers (sleep, social, work stress), empowering users and clinicians to co-create treatment plans. #ExplainAI #Dashboard

@ #100DaysOfCode #doesnt have... #explainAI

Why do you use Twitter? 1. Education 2. Entertainment 3. Monetisation 4. Networking 5. Social 6. Other... I use Twitter for a combination of all of the above.

Series of great audience qs. Lessons from voting? Microsoft's review as a "checklist of audits on top of checklist of audits". Would you be open to additional scrutiny by third parties? #siliconflatirons #explainAI

Desai: Product counsel plays a role. Lawyers who aren't just antagonistic but understand tech #siliconflatirons #explainAI

*very important* Impact Assessments work only if you have people with enough knowledge and tools for contesting within organizations that use them, and incentives for making meaningful. @DMulliganUCB #siliconflatirons #explainAI

Algorithmic Impact Assessments are not just a tool for transparency, but to force the process for questions/tool applications. Philips #siliconflatirons #explainAI (with HT to @aselbst)

How are you defining your problem statement? This will require a toolkit beyond technology and also involving questions. Philips #siliconflatirons #explainAI

Case studies provide us with some of the rich/necessary knowledge, @DMulliganUCB points to Goodman & Brauneis piece about algorithm acquisitions by governments papers.ssrn.com/sol3/papers.cf… #siliconflatirons #explainAI

Fairness exists not just within a technical system but a socio-technical system @DMulliganUCB #siliconflatirons #explainAI

Counterfactual example provides fantastic way to address certain issues. But it's a tool and does some things very well and certain things won't help you with at all. Using counterfactuals doesn't give a free pass. What else is going on? Philips #siliconflatirons #explainAI

Really galls @DMulliganUCB that SG said of risk recidivism software in Wisconsin Loomis case: "not ready to review it because we don't know how gender is being used" #siliconflatirons #explainAI nytimes.com/2017/05/01/us/…

Real reason to think about contestability, not explainability, if the goal is domain-specific knowledge production. @DMulliganUCB #explainAI #siliconflatirons

Part II: Gleb Sizov defending his PhD thesis on "Automating Problem Analysis Using Knowledge from Text" #CBR #explainAI @iefakultetet
