#interspeech search results

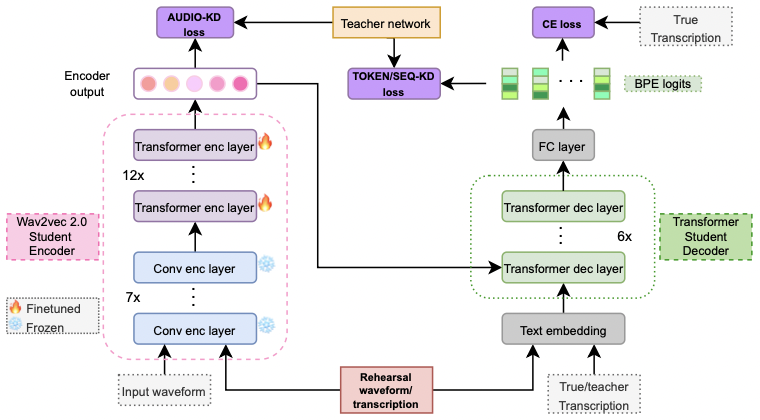

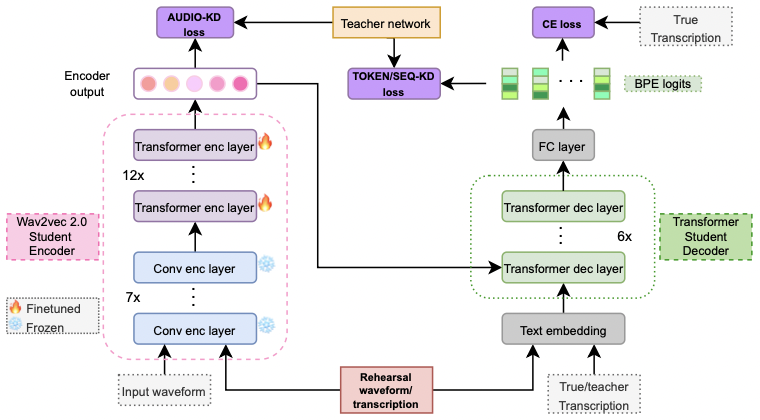

🚨📢 BIG NEWS! 📢🚨 Happy to share that both my papers got accepted at @ISCAInterspeech ! 🚀🚀 They tackle the issue of catastrophic forgetting for SLU and joint ASR-SLU systems! Will provide code and refs after the camera-ready versions! See you in Dublin 🍀🇮🇪 #interspeech

The Speech Graphics R&D team will be at Interspeech all week. They are presenting their work on "Analysis of Deep Learning Architectures for Cross-corpus Speech Emotion Recognition." Come and say "Hi" to them #interspeech #machinelearning

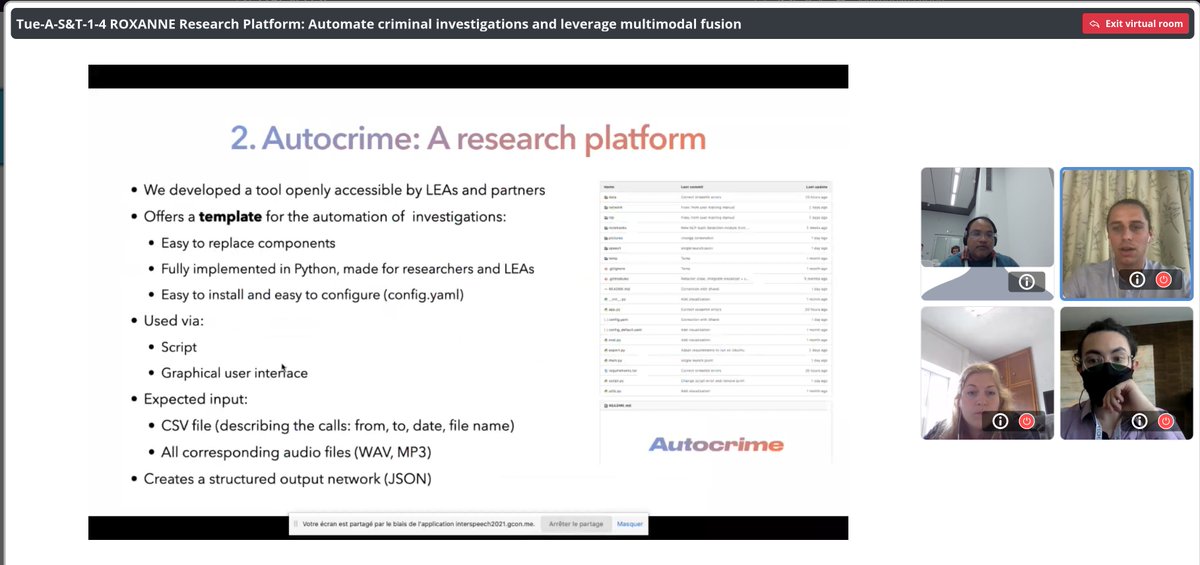

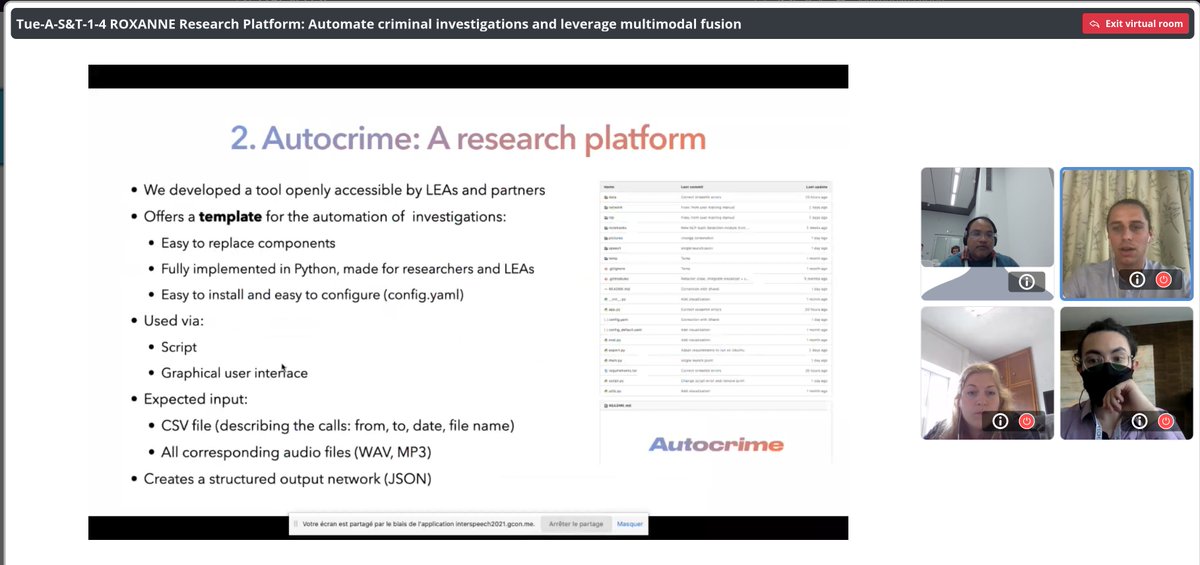

Was glad to host today with @Shantipriyapar3 a Show and Tell session at @INTERSPEECH2021 on the work we do @Idiap_ch on @ROXANNE_project! #interspeech

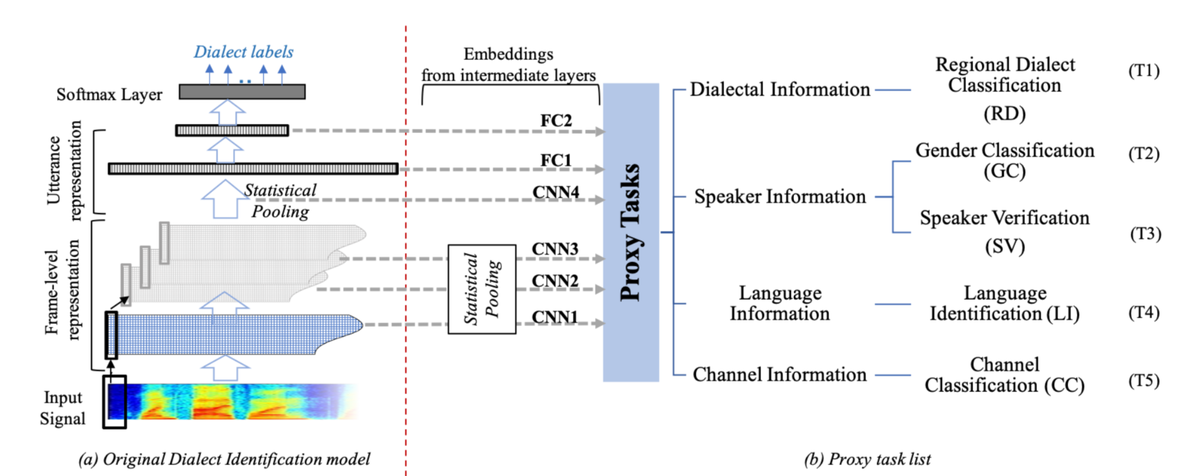

We are excited to share that our paper: "What does an End-to-End Dialect Identification Model Learn about Non-dialectal Information?" w/ @ahmaksod, Suwon Shon and James Glass, has been accepted at #interspeech 2020.

Our paper on modeling speech recognition and synthesis simultaneously got into #Interspeech 2022 @ISCAInterspeech. The model learns to classify & generate lexical and sub-lexical (phon/n-gram) information without a direct access to training data. arxiv.org/abs/2203.11476

Happy Easter 🐣 Our first homemade hot cross buns (makes up for a day spent finishing #Interspeech papers) 😍

Are you at #Interspeech, and have you used or do you intend to use self-supervised representations? Come see my talk in the auditorium at 10 on big issues with benchmarking of self-supervision models, and choose better. Small motivation: this work is nominated for the best student paper award!

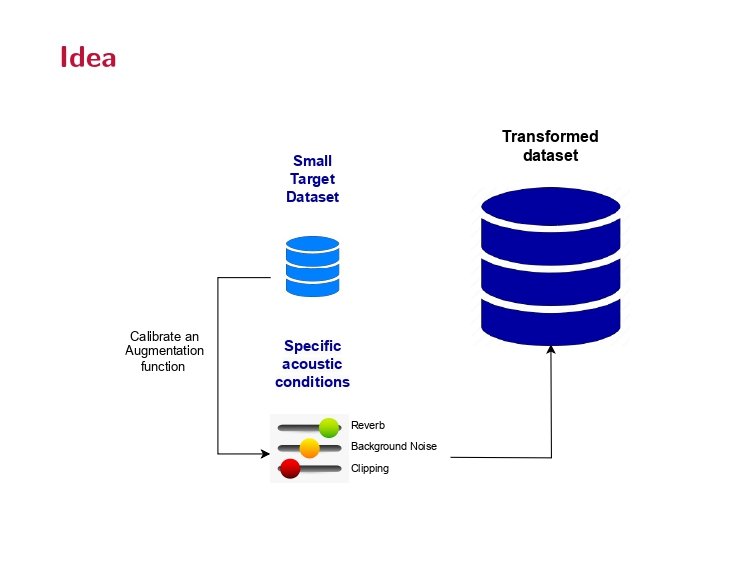

In Dublin for #Interspeech and wondering where to start? Come to the self-supervision for ASR session starting at 11. At 11.20, I will be presenting some bizarre work on acoustic conditions cloning for domain-adapted fine-tuning of self-supervised models for ASR!

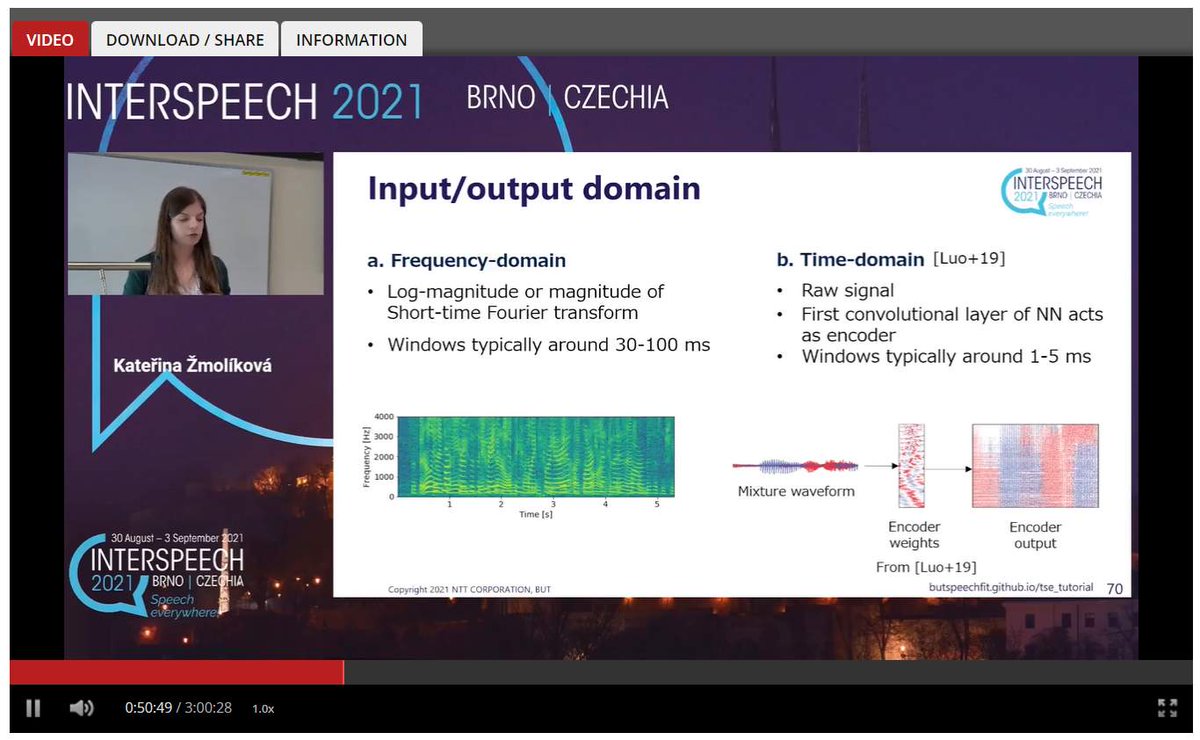

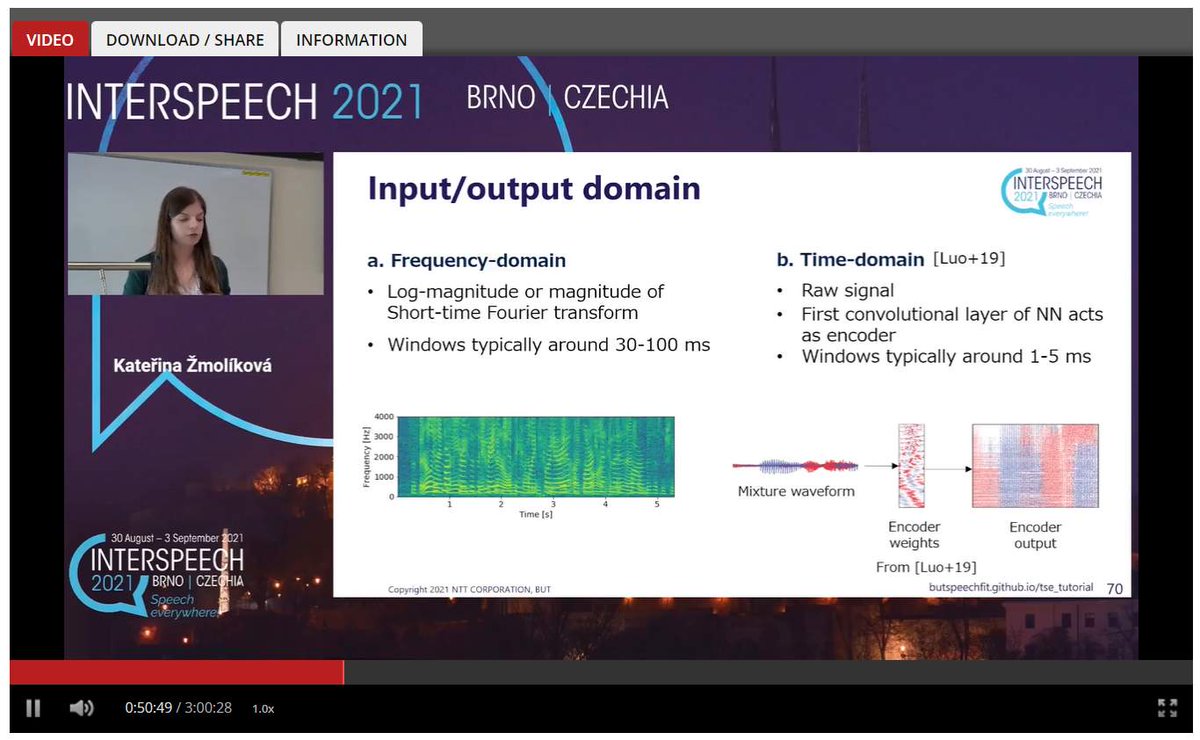

Interspeech 2021 recordings are now public on both Superlectures and YouTube! superlectures.com/interspeech202… youtube.com/channel/UC2-z0… #IS2021 #InterSpeech

Less than two weeks to #interspeech, we are excited 🥳 Did you know that every conference bag will include a USB Stick that looks just like our city landmark, the #graz clocktower? 😍 #speech #conference @interspeech2019

Hey #Interspeech2019! Join us now for the hackathon demos. See cool projects developed in only one day and have the chance to win an Alexa device! Hall 4, happening NOW until 6pm #interspeech #hackathon

Session about denoising now - paper about using perceptual loss as they do for images (think distance of last layer of vgg16) to train audio networks #interspeech #interspeech2019

While it is unfortunate that I cannot join #INTERSPEECH, it was an exciting and rather interesting moment when Shinji presented our singing data paper and demoed it with my wife’s song 😁 Thanks again to @shinjiw_at_cmu for the presentation and to @juice500ml for help with the recording~

Luciana Ferrer is Area Chair on Interspeech 2018 for "Speaker and Language Identification". Congratulations! #Interspeech #AI

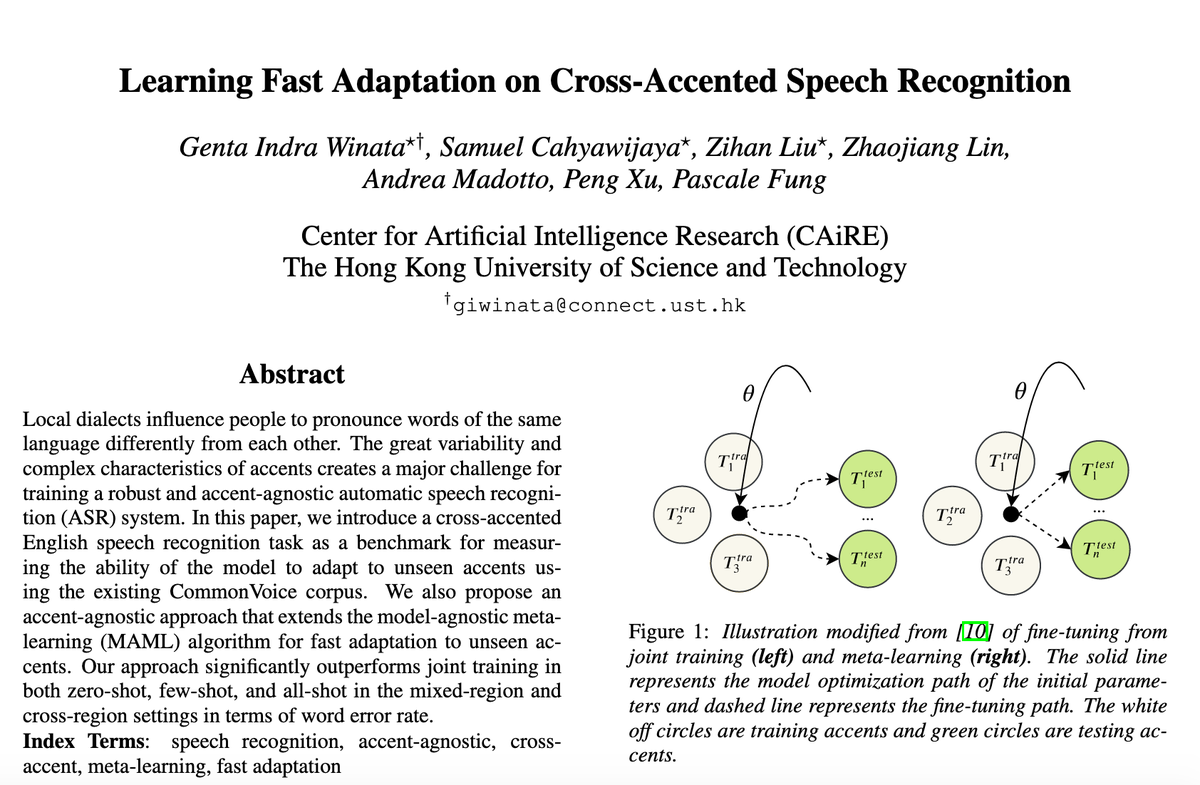

After a day waiting... happy to announce that our meta-learning ASR paper "Learning Fast Adaptation on Cross-Accented Speech Recognition" is accepted by Interspeech 2020. We propose a benchmark for zero-shot & few-shot settings #interspeech Preprint arxiv.org/pdf/2003.01901…

Looking forward to presenting this paper at @ISCAInterspeech next week! Happy to chat at our poster on Tues 19/8/25 13:30-15:30! 😊 @brutti_alessio @fbk_stek @eloquenceai #interspeech2025 #interspeech #asr #speechrecognition #speechllm #lowresource #multilingual #llm

Seraphina Fong, Marco Matassoni, Alessio Brutti, "Speech LLMs in Low-Resource Scenarios: Data Volume Requirements and the Impact of Pretraining on High-Resource Languages," arxiv.org/abs/2508.05149

Fully funded #PhD positions at @Unikore_Enna 🇮🇹 If you're interested in (1) Audio Representation Learning (2) Speech & MM Learning and/or (3) DL for Health, consider applying! ⌛Aug 30, 2025 🔗 uke.it/en/sistemi-int… Let’s talk at #INTERSPEECH 🇳🇱 or DM me! cc @stmlab_uke

Join us at the MLC-SLM #Workshop on Aug 22 at Rotterdam Ahoy! 🏆Awards ceremony, #keynote talks by Shinji Watanabe, Hung-yi Lee & more! Same venue as #Interspeech 2025 Register: Walk-in (€50) or via Interspeech More details: nexdata.ai/competition/ml… #trends #AI #mlc2025

Our paper accepted at SSW13 is now available on arXiv! Equal contribution with @bulgarianNeet. We modeled the process where a voice actor and a director refine the vocal style through interactive feedback. arxiv.org/abs/2507.00808 #interspeech #ssw13

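We have released our paper accepted at SSW13 on arXiv! Equal contribution with @bulgarianNeet. We model the process in which a voice actor and a sound director refine a vocal style through back-and-forth interaction. arxiv.org/abs/2507.00808 #interspeech #ssw13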

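We have released our paper accepted at INTERSPEECH 2025 on arXiv! We propose a zero-shot speech synthesis model that can manipulate the impression of speech using an interpretable, low-dimensional speech impression vector. arxiv.org/abs/2506.05688 #interspeech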

Our paper has been accepted to INTERSPEECH 2025! We just released it on arXiv: We propose a zero-shot TTS model that allows impression control using an interpretable, low-dimensional speech impression vector. Check it out! arxiv.org/abs/2506.05688 #interspeech

After over 2 years of work, I'm glad to be finally wrapping up NaijaVoices with our accepted paper at #Interspeech 25! We created 1800+ hours of multilingual speech data in Hausa, Igbo, & Yoruba across 100 themes with a community we built entirely from scratch (see paper)!

Exciting news! Our NaijaVoices paper has been accepted to Interspeech 2025! 📄 Read the paper: arxiv.org/abs/2505.20564 🌐 Explore the dataset: naijavoices.com NaijaVoices is the largest open African speech dataset comprising a record-breaking 5,000 unique speakers…

7/7 papers accepted to #Interspeech. Topics range from speech evaluation, data annotation/collection, and SVC to multilinguality and speechLMs. Looking forward to sharing all of them soon!

Happy to share our work, aTENNuate, has just been accepted to #interspeech 2025!

🎧 aTENNuate: speech enhancement like never before. In real-time, on raw waveforms, and lightning fast ⚡. Part of the magic is our efficient use of deep state-space modeling. paper: arxiv.org/abs/2409.03377 code: github.com/Brainchip-Inc/… #DeepLearning

🎉We’ve hit 100 teams in the MLC-SLM Challenge! A warm welcome to the 100th team from Inha University! Thank you to everyone who has joined and we’re still accepting more teams to join in this challenge! 🔗 Join now: nexdata.ai/competition/ml… #Nexdata #llm #Interspeech #ISCA

🚀Baseline Release for MLC-SLM Challenge @ISCAInterspeech! The official baseline system for the Multilingual Conversational Speech Language Model (MLC-SLM) Challenge is now available! Participants, check your inbox for access details. #LLM #speechrecognition #Interspeech

As a reviewer for Interspeech, I've noticed that the academic value of submitted papers is seriously degrading. Most submissions are just technical reports, not academic papers, since nothing behind the model is revealed. That leaves me no choice but to recommend rejection... #interspeech

🔥The @ISCAInterspeech Multilingual Conversational Speech LLM (MLC-SLM) Challenge is now open! Hosted by @Meta, @Google, @Samsung, @ChinaMobile7, @NorthwesternPo2 and @nexdata_ai. Join now: lnkd.in/gwR8dvVp Register here: lnkd.in/gUYs9M4Y #Interspeech #AI #LLM

26th #Interspeech Conference 2025 📅 August 17-21, 2025 📍Rotterdam, The Netherlands 👉𝐂𝐀𝐋𝐋 𝐟𝐨𝐫 𝐩𝐚𝐩𝐞𝐫𝐬 Submission deadline: February 12, 2025 ➡️ bit.ly/40nXLL0 ➡️ bit.ly/3PFyZkL

Speech people, is anyone aware of a list of mislabeled files in VoxCeleb2? #speech #interspeech #voxceleb

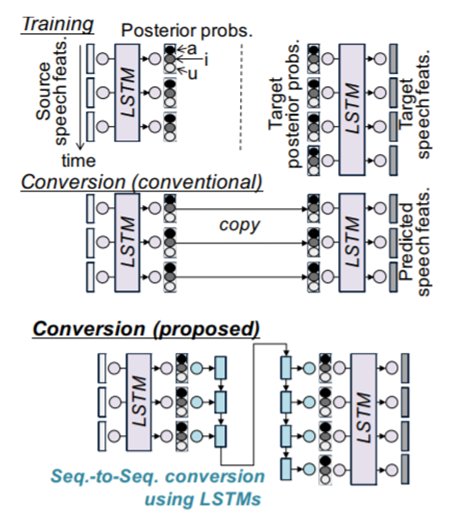

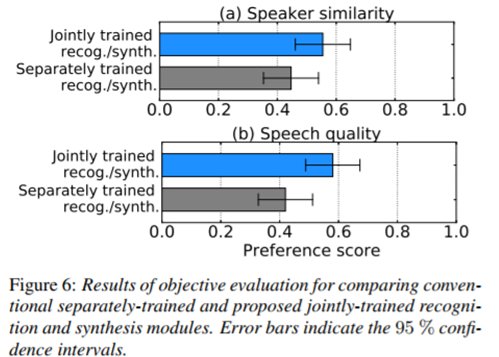

We will present seq2seq voice conversion and joint training of speech recognition/synthesis in this afternoon's VC session. #interspeech

Having a conversation with your stuffy is now possible thanks to @linguwerk! Thanks for sponsoring #Interspeech!

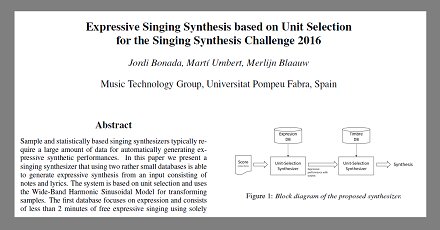

Great news! Awarded at the #interspeech Singing Synthesis Challenge!! #is2016 📄 mtg.upf.edu/node/3579 🔊 bit.ly/2crwyjT

The second workshop for young female researchers (#YFRS2017) in speech science and technology starts with a light breakfast 😊🙌 #interspeech…

Let's raise the curtain! We're ready to welcome you to our booths at this year's #Interspeech! Quentin Furhatino, a @furhatrobotics #conversationalAI robot, is ready to capture emotional cues in #speech, recruiting for the top acting role in his next movie. Find us at booths P2-P7!
