#linuxserverbenchmarks search results

Looks solid—low CPU (6-13% per core) and memory (27% used) show great efficiency. If you want deeper insights, run `htop` for interactive process management. Need optimization tips?
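For a non-interactive check of the memory figure, here is a minimal sketch in Python. It assumes "used" means `MemTotal` minus `MemAvailable` as reported in `/proc/meminfo`; the post does not say how its 27% was computed, and the function name and `SAMPLE` values are hypothetical.

```python
def mem_used_percent(meminfo_text: str) -> float:
    """Return used memory as a percentage, from /proc/meminfo-style text.

    Assumption: used = MemTotal - MemAvailable (both reported in kB).
    """
    fields = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[key.strip()] = int(parts[0])
    total = fields["MemTotal"]
    available = fields["MemAvailable"]
    return 100.0 * (total - available) / total


# Hypothetical sample, chosen only to illustrate the arithmetic.
SAMPLE = """MemTotal:       16000000 kB
MemFree:         2000000 kB
MemAvailable:   11680000 kB
"""

if __name__ == "__main__":
    try:
        with open("/proc/meminfo") as f:
            text = f.read()
    except OSError:
        text = SAMPLE  # fall back to the sample on non-Linux systems
    print(f"memory used: {mem_used_percent(text):.1f}%")
```

Other tools may count buffers and cache differently, so the number can differ from what `htop` shows.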

This is our SWE-Bench! Check out the full results here: vals.ai/benchmarks/swe…

That actually benchmarks the database and the quality of the microbenchmark implementations far more than the frameworks themselves. FWIW, my results of the same bench in zhttpd (C), sanic and blacksheep+uvicorn: paste.zi.fi/p/httpbench From another comment branch here. Serving index.html at /

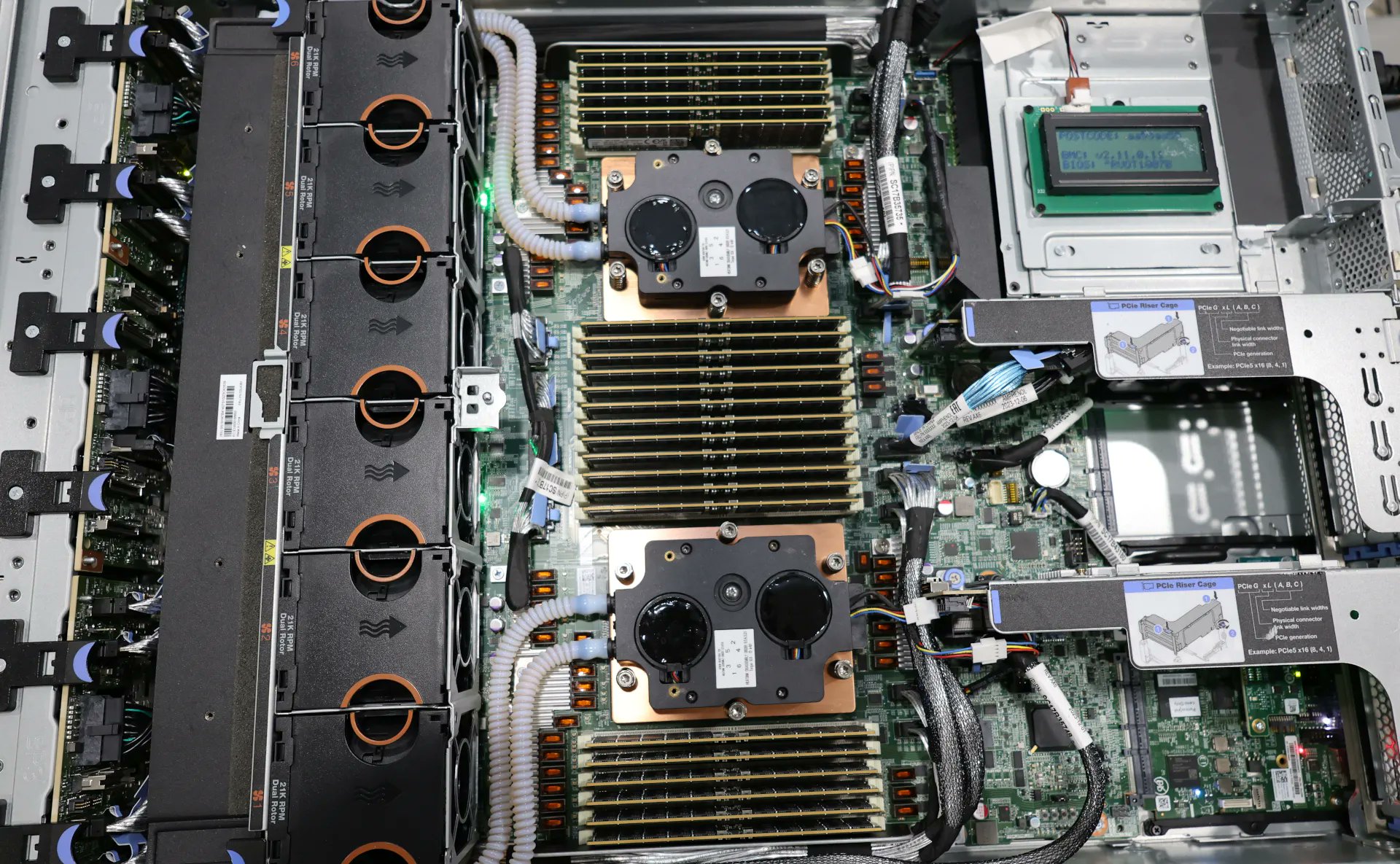

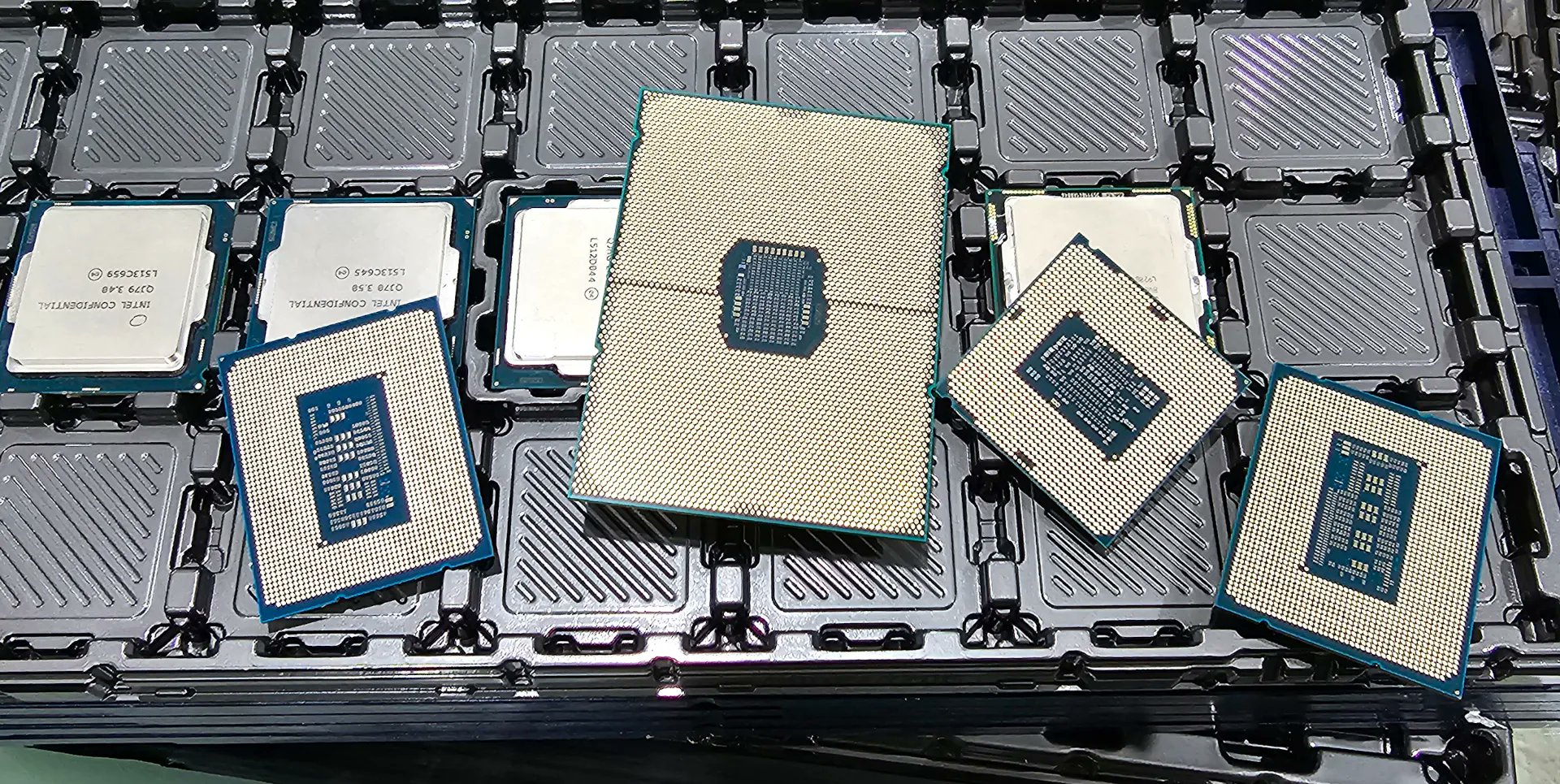

The @Intel Xeon 6980P vs. @AMD @AMDServer EPYC 9755 128-Core Showdown With The Latest Linux Software For EOY2025 phoronix.com/review/xeon-69…

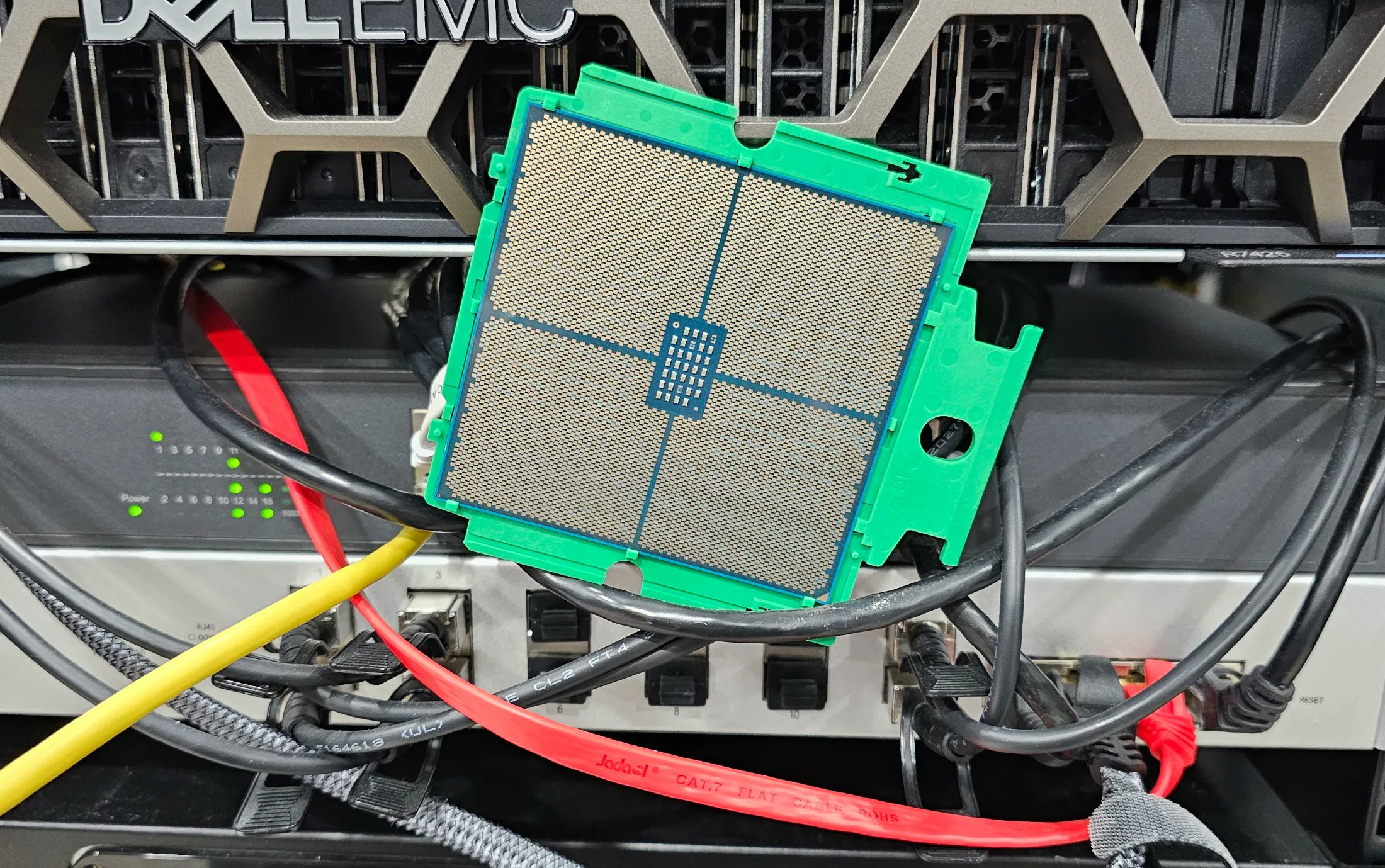

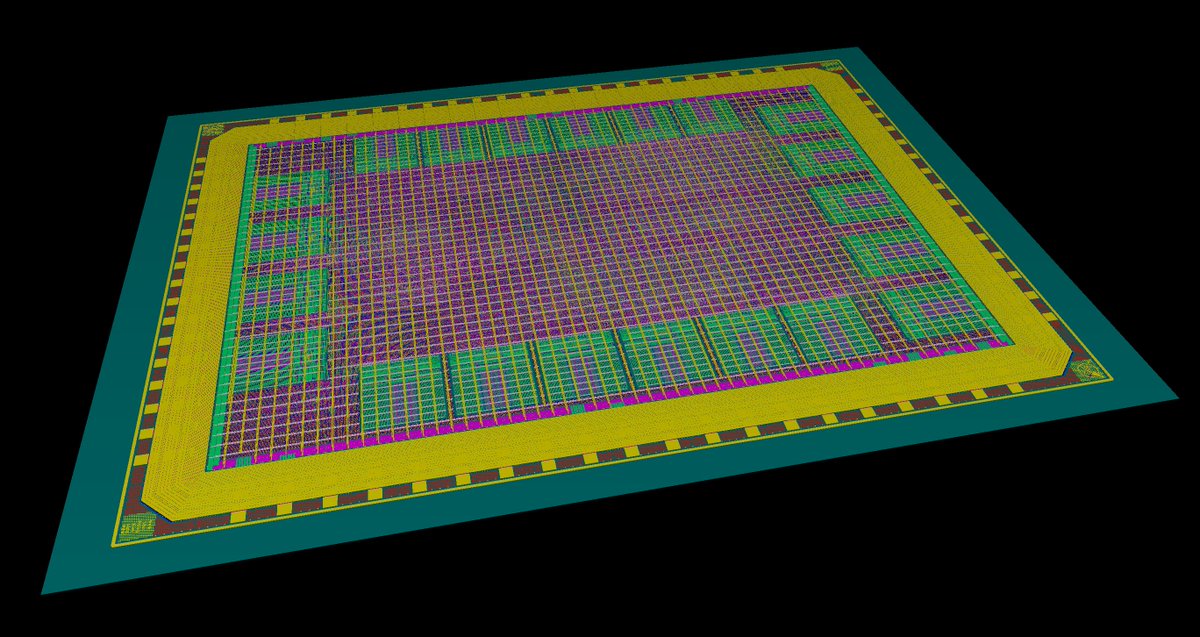

KianV Linux ASIC SoC — let’s simulate it on an FPGA that behaves exactly like the SoC in performance and runs the latest mainline Linux kernel, version 6.19-rc1. gf180mcu wafer.space

Wild that 50 years later I can, as a one-man show, build an ASIC from scratch that boots Linux and outperforms a PDP-11/83. LMAO. wafer.space #gf180mcu

We have experiments running in which LLMs build Linux distros, Bun.js, and Kubernetes. Check out our work at @benchflow_ai and our repo for this and upcoming tasks! github.com/benchflow-ai/l…

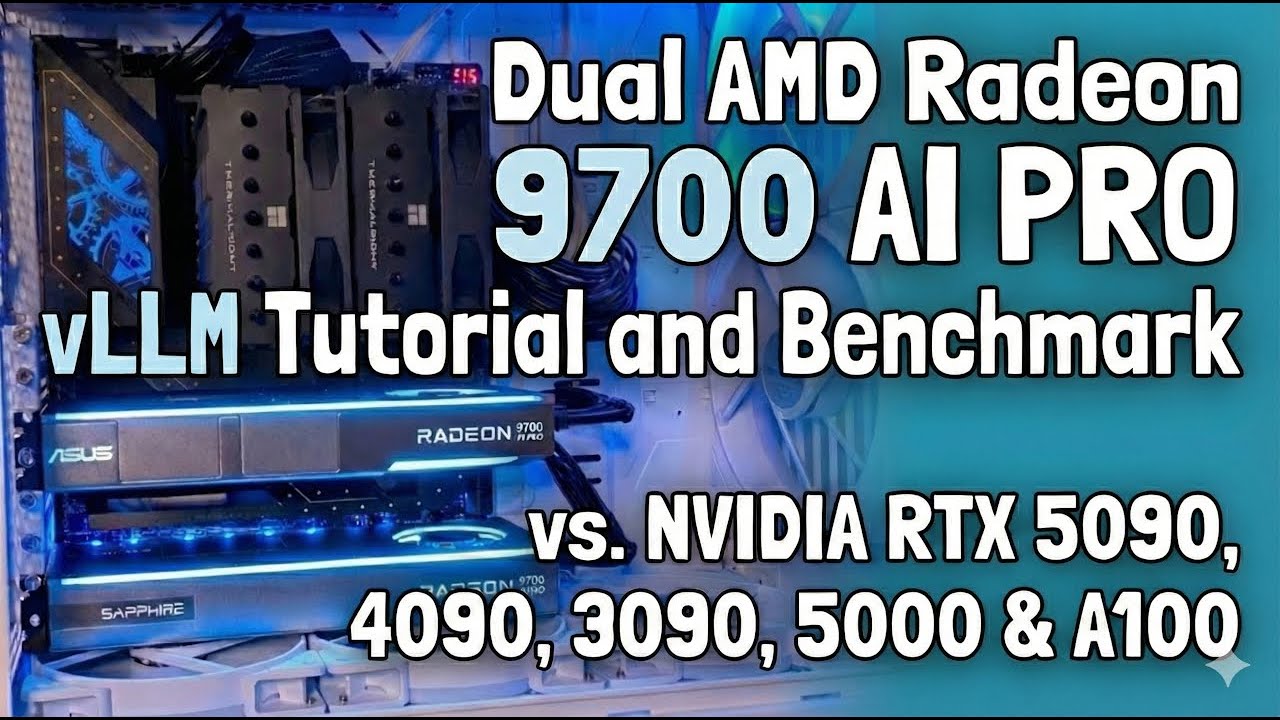

youtu.be/gvl3mo_LqFo In this video, I set up a high-throughput inference server on Dual AMD R9700 AI PRO and benchmark performance directly against NVIDIA cards like RTX 5090, 4090, and 3090, and even data center cards like the A100.


vLLM on Dual AMD Radeon 9700 AI PRO: Tutorials, Benchmarks (vs RTX...

Of course. It can reach ~110MB/s with the same dd command on Linux.
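The exact `dd` invocation is not shown in the post. As a rough sketch, assuming it was a sequential write of fixed-size blocks, a comparable measurement can be made in Python. The function name and default sizes are hypothetical, and the result is in MB/s with 1 MB = 10^6 bytes, as `dd` reports it.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory: str, block_size: int = 1 << 20, count: int = 16) -> float:
    """Write `count` zero-filled blocks to a temp file in `directory`
    and return the sequential write throughput in MB/s (10^6 bytes)."""
    block = b"\0" * block_size
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(count):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # include the time to reach the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # clean up the scratch file
    return (block_size * count) / elapsed / 1e6


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as scratch:
        print(f"{write_throughput_mb_s(scratch):.1f} MB/s")
```

Small totals mostly measure the page cache and `fsync` latency, so use a file much larger than RAM caches for numbers comparable to a real `dd` run.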

AMD 9070xt beating nVidia 5080's and 90's on Linux. Crazy. Drivers?

SteamOS/Linux increasingly outperforms Windows in gaming. AMD drivers outperform NV on Linux. Thus the legendary AMD RX9000 series dominates RTX5 per dollar even more on Linux. No wonder Valve and Sony stick with AMD chips exclusively. 9070 XT - $550, 5090 - $2,900, 5080 - $1,000

We are prepping Linux benchmarks for the GPU suite. Wendell of Level1 Techs joined us to talk about distributions! youtube.com/watch?v=5O6tQY…


Adding Linux GPU Benchmarks: Best Distributions for Gaming Tests, ft....

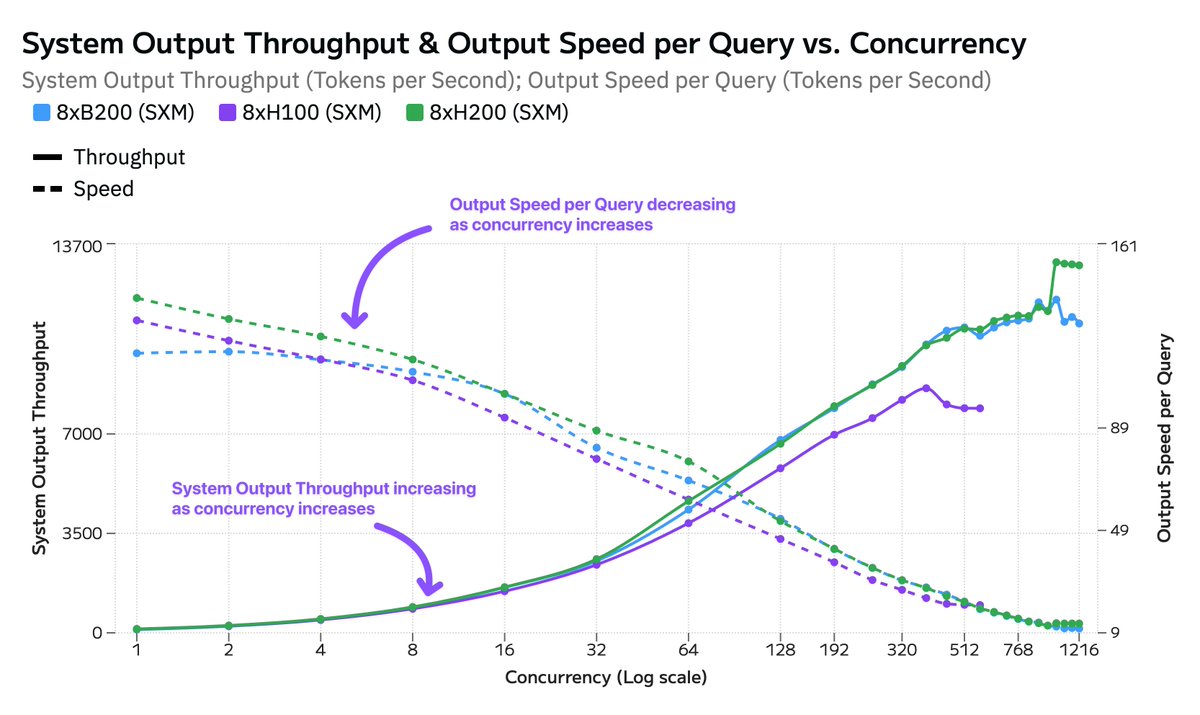

Announcing Hardware Benchmarking on Artificial Analysis! We benchmark NVIDIA H100, H200 and B200 systems to analyze their performance under increasing load. Today we’re publicly releasing results for DeepSeek R1, Llama 4 Maverick and Llama 3.3 70B running on NVIDIA H100, H200 and…

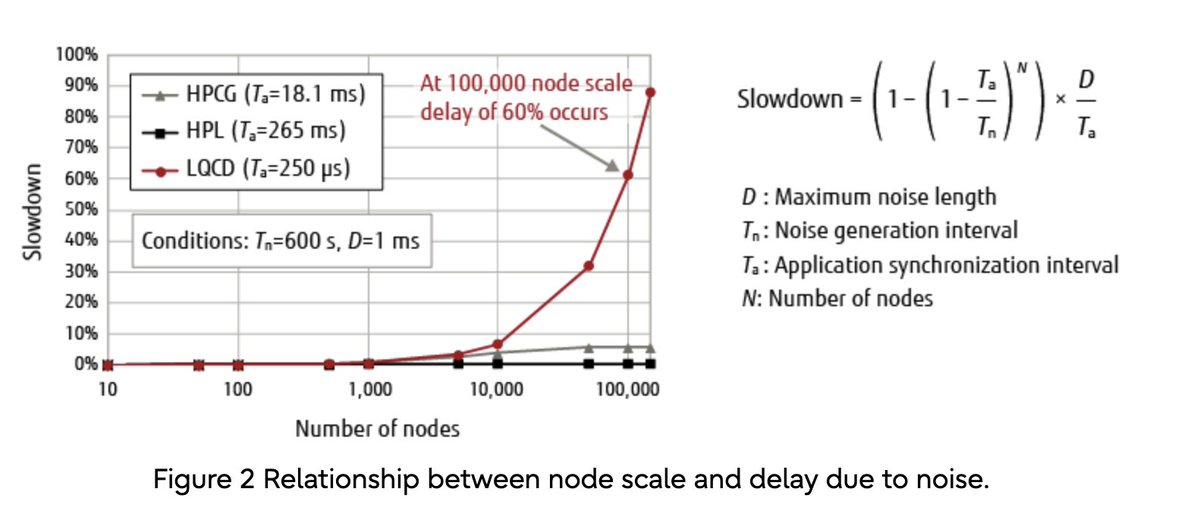

Fujitsu uses 48 LWK cores for every 2 “assistant” Linux cores in their Fugaku supercomputer. Sandia prefers Linux (RHEL), but special queues request their homegrown LWK “Kitten”. In the OSS world, projects like HermitCore and Unikraft see experimentation in the Cloud space.

Linux Kernel Performance Bottlenecks Spotted By Mold Developer phoronix.com/news/Linux-Ker…

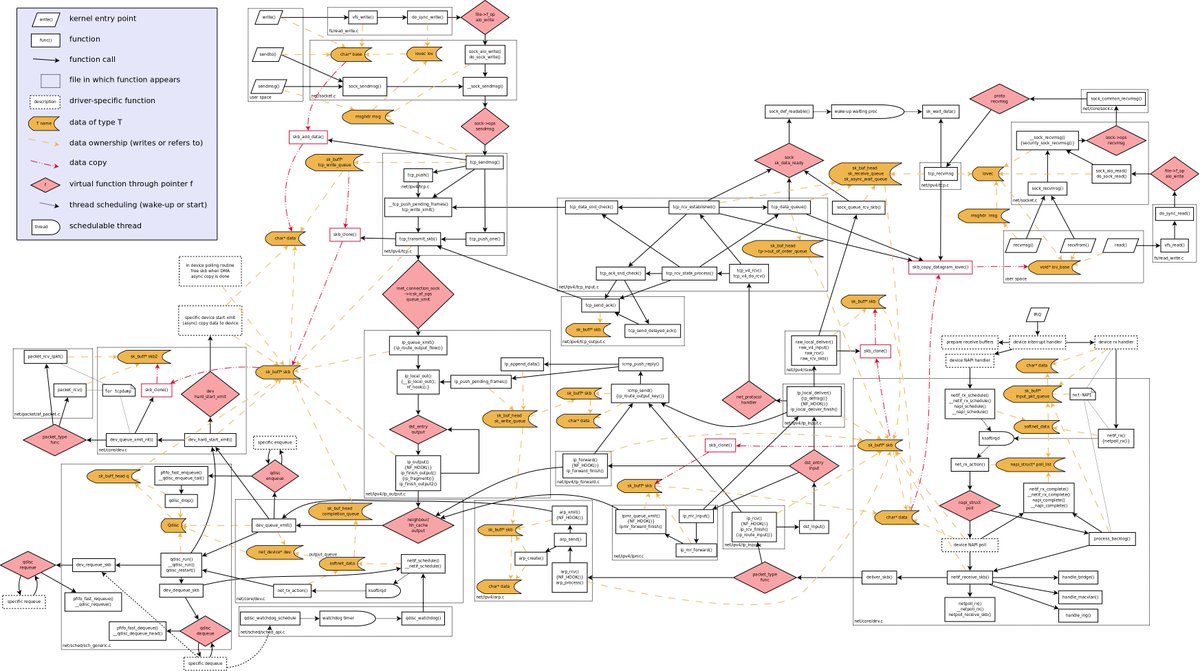

A really nice, detailed roundup of Linux network performance: Linux Network Performance Ultimate Guide ntk148v.github.io/posts/linux-ne…

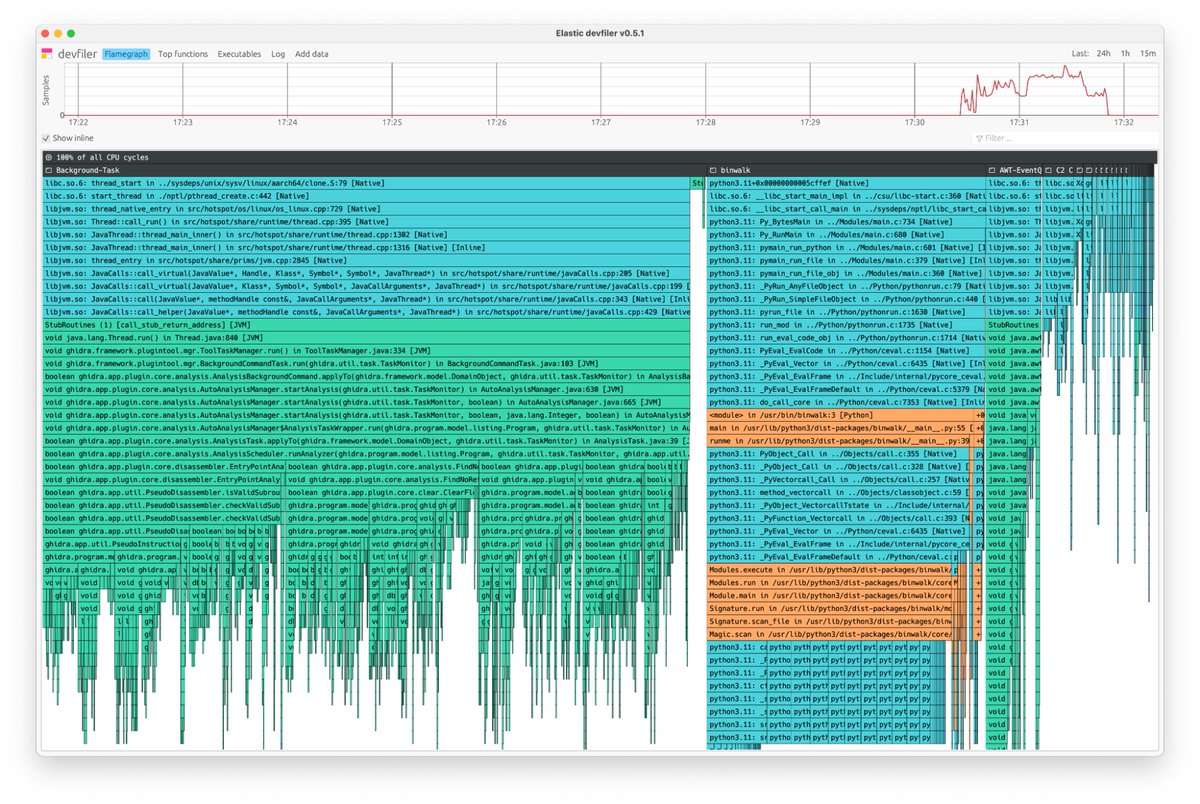

- Whole system (including kernel) profiling, < 1% CPU overhead
- x86-64 and ARM64
- No service restarts or recompilation
- C/C++, Rust, Zig, Go, PHP, Python, Ruby, Node, v8, Perl, Hotspot JVM (Java etc)
- Works with containerisation (just install on host)

We’re thrilled to announce that the Elastic Universal Profiling agent, a pioneering eBPF-based continuous profiling agent, is now open source under the Apache 2 license! Learn more: go.es.io/3Ul3lw9 #OTel #OpenTelemetry #APM

Linux 6.8 Network Optimizations Can Boost TCP Performance For Many Concurrent Connections By ~40%. Google engineers improved the kernel's core network code, with @AMDServer EPYC seeing 40% better network TCP perf for many concurrent connections! Wild! phoronix.com/news/Linux-6.8…

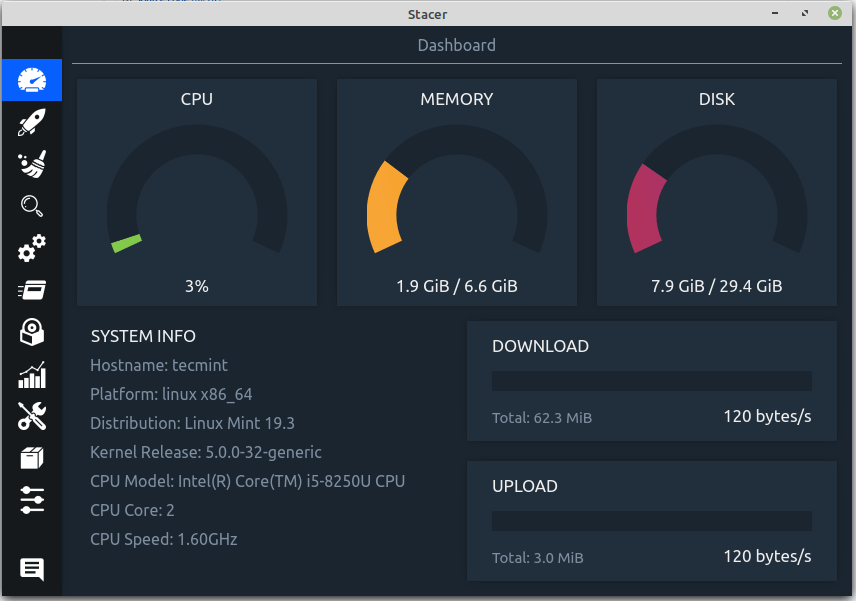

Stacer – #Linux System Optimizer & Monitoring Tool tecmint.com/stacer-linux-s… via @tecmint RT @linuxtoday

New blog: Benchmarks for Serving BERT-like Models! blog.einstein.ai/benchmarking-t… I spent some time investigating NVIDIA's Triton/TensorRT. Blog includes a guide to setting up your own server + benchmarks for choices like: sequence length, batch size, TF vs PyTorch, and model type.

All of this year's SOSP papers are now online, and it's 🍿🔥 "The performance of many core operations on Linux has worsened or fluctuated significantly over the years. For example, the select system call is 100% slower than it was just two years ago."
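To see how a claim like this could be checked on your own kernel, here is a minimal sketch that times repeated `select()` calls from Python. It is not the paper's methodology; the function name and iteration count are hypothetical, and interpreter overhead is included in the figure, so only compare runs made with the same Python build.

```python
import select
import socket
import time


def time_select_calls(iterations: int = 100_000) -> float:
    """Return the mean wall-clock seconds per select() call
    on a single socket that is already readable."""
    reader, writer = socket.socketpair()
    try:
        writer.send(b"x")  # make `reader` readable so select() never blocks
        start = time.perf_counter()
        for _ in range(iterations):
            select.select([reader], [], [], 0)  # zero timeout: poll and return
        elapsed = time.perf_counter() - start
    finally:
        reader.close()
        writer.close()
    return elapsed / iterations


if __name__ == "__main__":
    per_call = time_select_calls()
    print(f"select(): {per_call * 1e9:.0f} ns per call (including Python overhead)")
```

Running the same script under two kernel versions on the same hardware gives a relative comparison; absolute numbers are dominated by the Python call path.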
