#llamacpp search results

The best local ChatGPT just dropped. A new interface for Llama.cpp lets you run 150K open-source models locally — no limits, no censorship, full privacy. Works on any device, supports files, and it’s FREE. 🔗 github.com/ggml-org/llama… #LlamaCpp

Whoa! Just booted up the local Linux server and realized that I was playing with #llamacpp last year! Thanks to @ollama, it's now a daily occurrence on my Mac!

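【Good news for high-spec PC owners! 🎉】 The day may finally come when the huge #Qwen3Next model runs on your PC! ✨ #llamacpp has started verifying support for Qwen3-Next-80B! No cloud needed! It may soon be possible to use high-performance AI safely and locally! No API fees, so you can try high-accuracy AI as much as you like, and the cost-effectiveness is excellent ◎…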

I am gonna be a #pinokio script writing fool once @cocktailpeanut releases v5! Currently using the new agent feature to help me build a super lightweight LLM chat app using #llamacpp to serve models which are dynamically downloaded from @huggingface

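【Good news】 Is the conventional wisdom about local AI about to change!? 🚀 "GroveMoE" has been integrated into llama.cpp! Good news for anyone who gave up, thinking "running high-performance AI locally is too hard…" ⚡️ "GroveMoE" has finally been merged into "#llamacpp"! The era of powerful AI running smoothly even on a PC may be coming! Truly the dawn of "smart, energy-efficient AI" ✨ 💡…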

We need llama.cpp for voice models. Is anyone doing it already? #llamacpp #llamacpp4voice

Just a simple chatbot based on #LLM for #ROS2. It is controlled by a YASMIN state machine that uses #llamacpp + #whispercpp + #VITS.

Currently geeking out with PrivateGPT and ingesting a bunch of PDFs (#langchain #GPT4all #LLamaCPP). What's totally cool about PrivateGPT is that it's "private": no data sharing or leaks, which is mandatory for corporate/company documents. Repo => github.com/imartinez/priv… I'll…

Are you also tired of too many open browser tabs? I built a Tabulous Chrome extension to help! It summarizes tab contents, along with other handy features. Fully local, fully open source. Thanks! @LangChainAI @FastAPI @huggingface @hwchase17 #llamacpp #llama2 github.com/naveen-tirupat…

Experimental #LlamaCPP Code Completion Project for JetBrains IDEs using Java and any GGUF model 🤩 🔥 GitHub @ github.com/stephanj/Llama… /Cc @ggerganov @DevoxxGenie

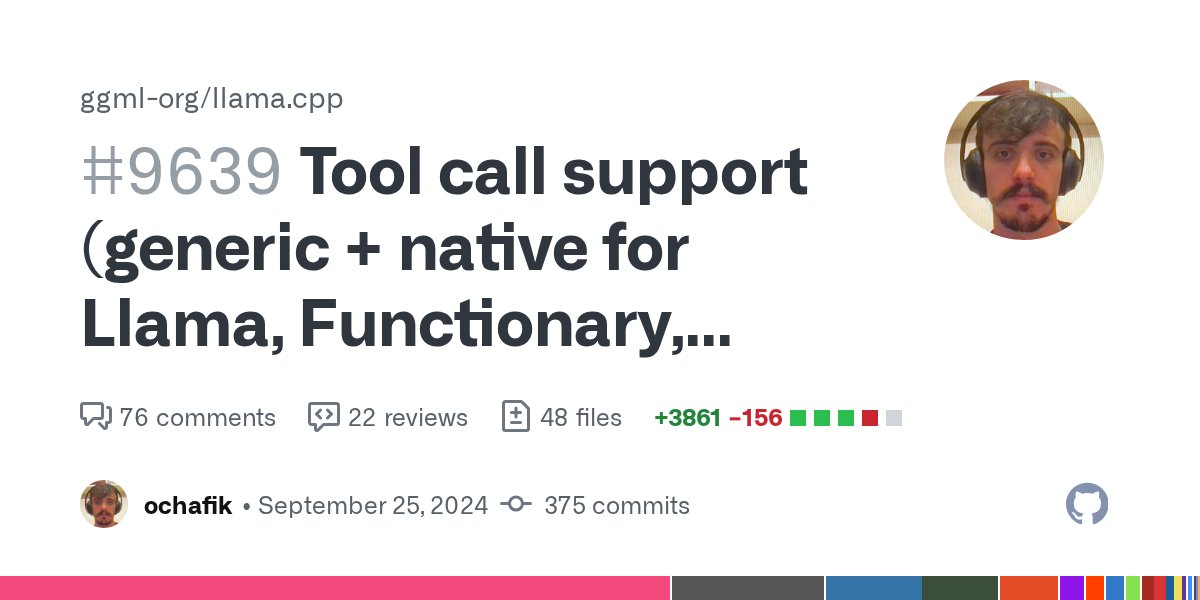

llama.cpp now supports tool calling (OpenAI-compatible) github.com/ggerganov/llam… 🧵 #llamacpp
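To illustrate what "OpenAI-compatible" tool calling means in practice, here is a minimal sketch that only builds the request body in the OpenAI chat-completions `tools` format. It assumes a `llama-server` started with `--jinja` (as the project's function-calling docs use) listening on the default `http://localhost:8080`; the `get_weather` tool and its schema are hypothetical and exist purely for illustration.

```python
import json


def build_tool_call_request(user_message: str) -> dict:
    """Build an OpenAI-style chat-completions request body that offers one tool."""
    # Hypothetical tool: the name, description, and JSON Schema are illustrative only.
    weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "messages": [{"role": "user", "content": user_message}],
        "tools": [weather_tool],
    }


if __name__ == "__main__":
    body = build_tool_call_request("What's the weather in Lisbon?")
    # Assumption: POST this JSON to http://localhost:8080/v1/chat/completions
    # (llama-server's default host/port). If the model decides to call the tool,
    # the reply carries choices[0].message.tool_calls instead of plain text.
    print(json.dumps(body, indent=2))
```

Because the body follows the OpenAI schema, the same payload should work unchanged with OpenAI client libraries pointed at the local server's base URL.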

llama.cpp has a new UI, give it a try ✨ #llamacpp #newui #llm #runllmlocally youtu.be/HX1wUis68GQ

YouTube: llama.cpp HAS A NEW UI | Run LLM Locally | 100% Private

Want an even easier way to play with #llamacpp on your #M1 (or #M2) with the #LLaVA 1.5 #multimodal #model fine-tuned on top of #Llama2? Download #llamafile and spin up the web UI. Here, I asked it what the components in the image were.

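Time to test the political stance of the TAIDE-LX-7B-Chat Traditional Chinese language model. Good: the Republic of China (Taiwan) is indeed a country. This new AI model's answers no longer go off the rails the way CKIP-LlaMa-2-7B's did. #llamacpp #llama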

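Got llamacpp running in Unity. With the model that llamacpp.swift uses by default, it felt as fast as the native app, so the slowness really was a model issue. So now I have an environment where llamacpp runs in Unity. #LLAMA #llamacpp #Unity #madewithunity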

AI adoption is on the rise in India, with significant investments in AI technologies. Generative AI is expected to unlock between US $2.6 trillion and US $4.4 trillion in additional value. #AI #OpenSource #LlamaCpp

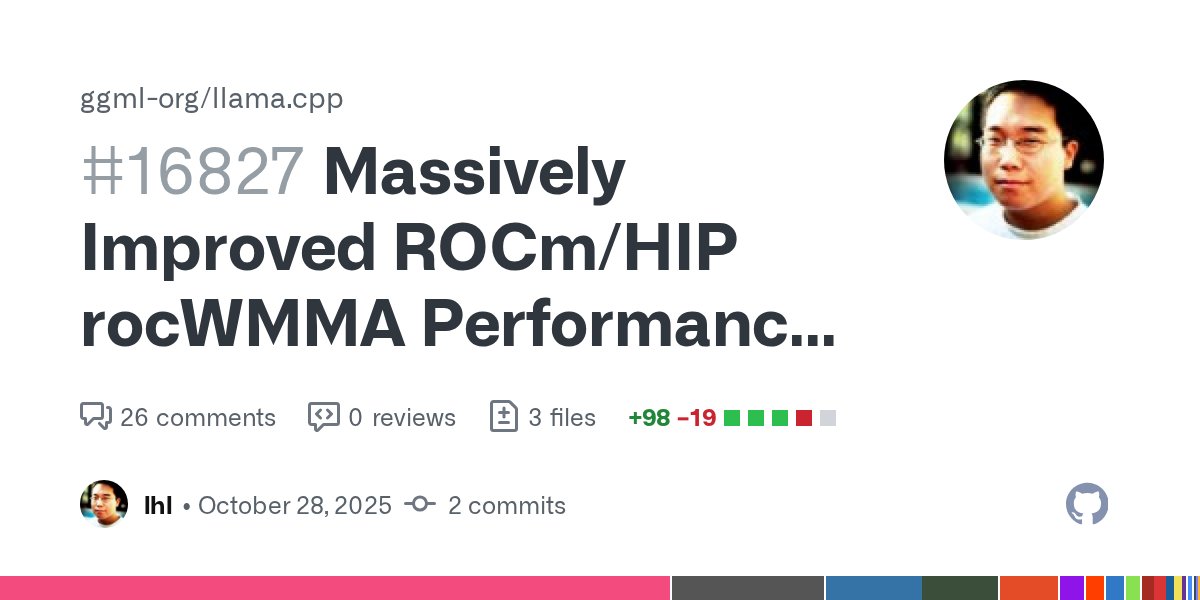

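#EVOX2 #llamacpp A 2x speedup is impressive. It looks like they increased parallelism (running at least two blocks per SM... is that right?) and switched the FlashAttention memory allocation from fixed-length to variable-length. github.com/ggml-org/llama…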

Had a great chat with @BrodieOnLinux; if you want to learn about Docker Model Runner, tune in. Push, pull, and encapsulate AI models as simply as you run a container. We dive into many different tangential topics. #DockerModelRunner #llamacpp #ai #LLMs

Today we have @ericcurtin17 on the show to talk about the Docker Model Runner project and running LLMs on your own hardware #Linux #Podcast Audio: creators.spotify.com/pod/profile/te… Video: youtube.com/watch?v=gzgUDG…

YouTube: #297 Docker Model Runner Is Awesome | Eric Curtin

🚫 No GPU? No problem. Sergiu Nagailic hit 92+ tokens/sec on llama.cpp with a CPU-only Threadripper setup. Great insights for low-resource LLM devs. Read: bit.ly/4ofrYGY #llamacpp #OpenSourceAI #DrupalAI #LLMDev

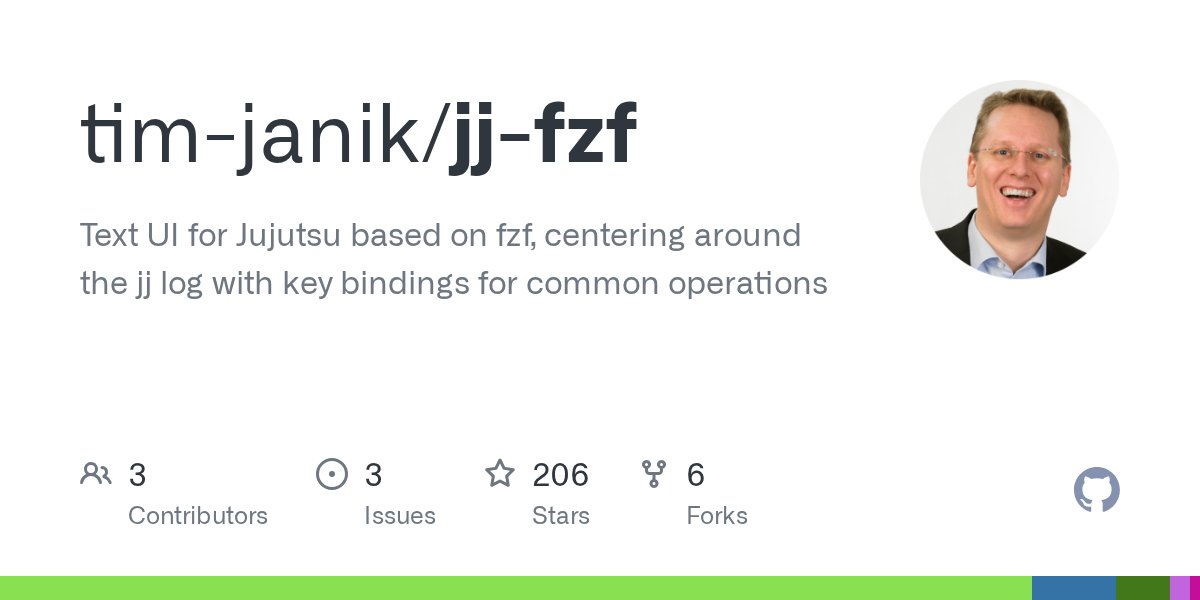

For #LLM-generated #Commit messages using #Llamacpp from @ggml_org, #Gemini, or #OpenAI, see: github.com/tim-janik/jj-f… #Jujutsu #VCS #jjfzf #AI #LLM #BuildInPublic #100DaysOfCode #Git #CLI #DevTools #ShellScript #OpenSource

#DevLog: jj-fzf ✨ Alt-S: Start Interactive Restore ⏪ Oplog Alt-V: Revert Operation 📝 Ctrl-D: Automatic Merge Messages 🏷️ New: Bookmark Untrack / Push-New 🧠 Ctrl-S: LLM Commit Messages → #LLamacpp #Gemini #OpenAI #Jujutsu #VCS #jjfzf #AI #LLM #100DaysOfCode #DevTools

Llama.cpp now pulls GGUF models directly from Docker Hub. By using OCI-compliant registries like Docker Hub, the AI community can build more robust, reproducible, and scalable MLOps pipelines. Learn more: docker.com/blog/llama.cpp… #Docker #llamacpp #GGUF

An engineer's guide to running local LLMs with #llamacpp on Ubuntu, with @Alibaba_Qwen Coder 30B running locally alongside QwenCode in your terminal: dev.to/avatsaev/pro-d…

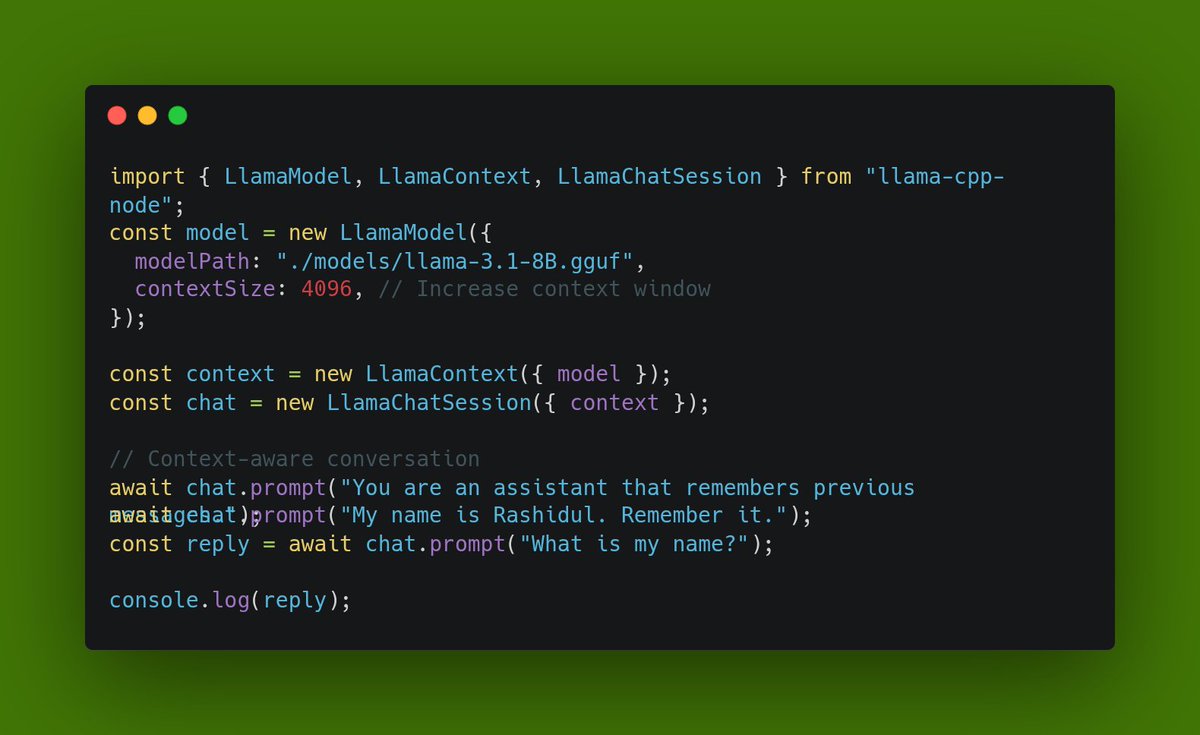

Context matters. 🧠 Using llama-cpp-node, you can boost model accuracy just by increasing the context window. Longer memory = smarter offline AI. Super useful for chatbots & agents. ⚡ #LlamaCpp #NodeJS #AI #LLM #JavaScript

llama_sampler_sample crashes. Is it because I bound it to Dart? It's a mystery. Experts, please help! #Flutter #Dart #llamacpp #GGUF #有識者求む (experts wanted) #プログラミング (programming)

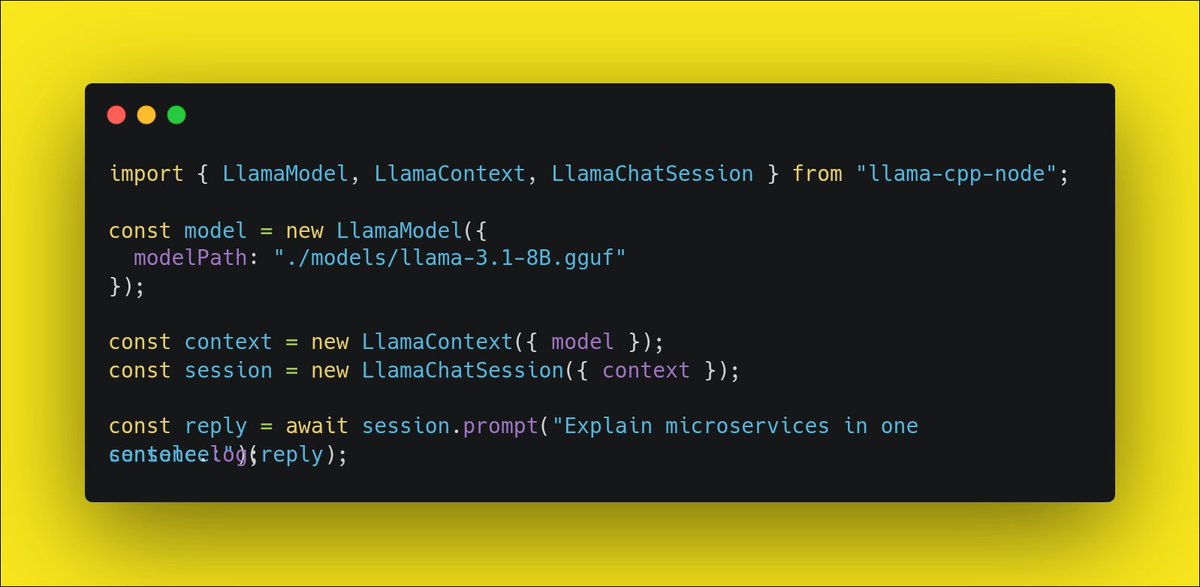

🔥 Running local LLMs in Node.js just got EASY! Been using llama-cpp-node — super lightweight, offline, and insanely fast. That’s literally it. No APIs. No costs. 100% local. 🧠⚡ #NodeJS #AI #LlamaCpp #LLM #JavaScript #OpenSource

Web embedding using Llama.cui + #Qwen2 #llamacpp, you can ask it anything github.com/dspasyuk/llama…

Well, for some reason I am unable to trigger Alpaca's tinfoil hat mode. Too bad. But lots of fun anyway. 🤣🤣 #llama #alpaca #llamacpp #serge

First version of the #python #boost #llamacpp #llamaplugin: linking and calling Python. github.com/ggerganov/llam… Next is to pass in variables.

So @LangChainAI, it's working pretty decently with CodeLlama v2 34B (based on @llama v2) on my two P40s. It's a bit slow but usable: 15 t/s on average, but #llamacpp context caching makes it usable even with large system prompts :D I made a Telegram bot to make the interaction easier to test.

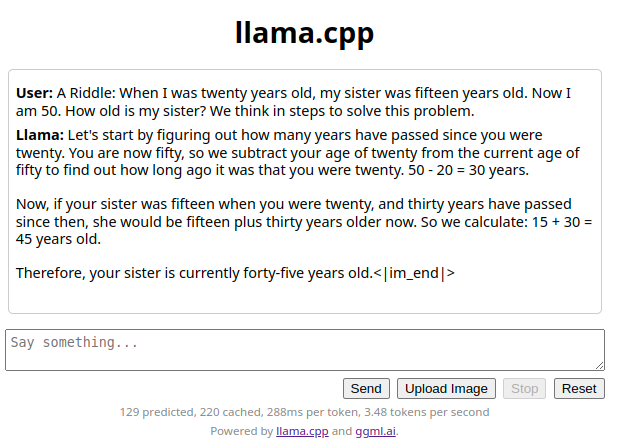

As a comparison, this is Yi. Running on llama.cpp, using TheBloke's 'yi-34b-chat.Q5_K_M.gguf' quant. (Note that the strange "<|im_end>" is due to the built-in HTML GUI of llama.cpp not honoring the chat template of the model.) #llm #yi #llamacpp @ggerganov