#mltips search results

✅New to #MachineLearning? ⛔️Avoid common mistakes with this concise guide! It covers 5 stages: pre-modeling prep, building, evaluating, comparing, and reporting results. It's perfect for research students and anyone looking to reach valid conclusions. #MLtips

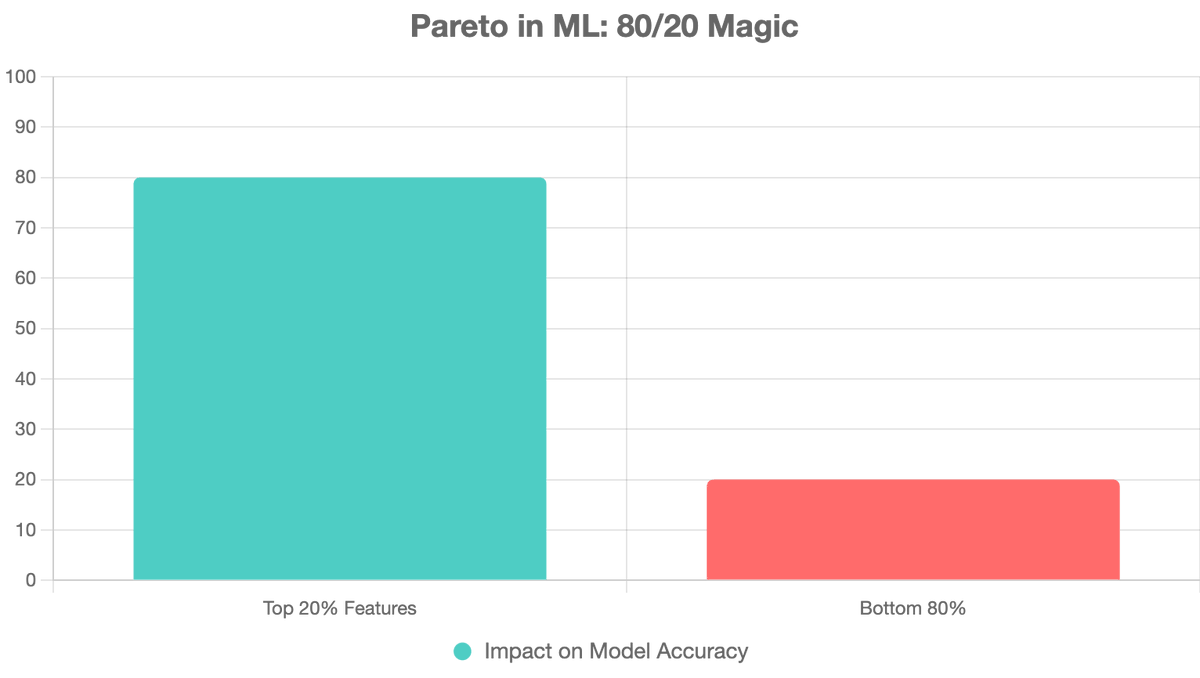

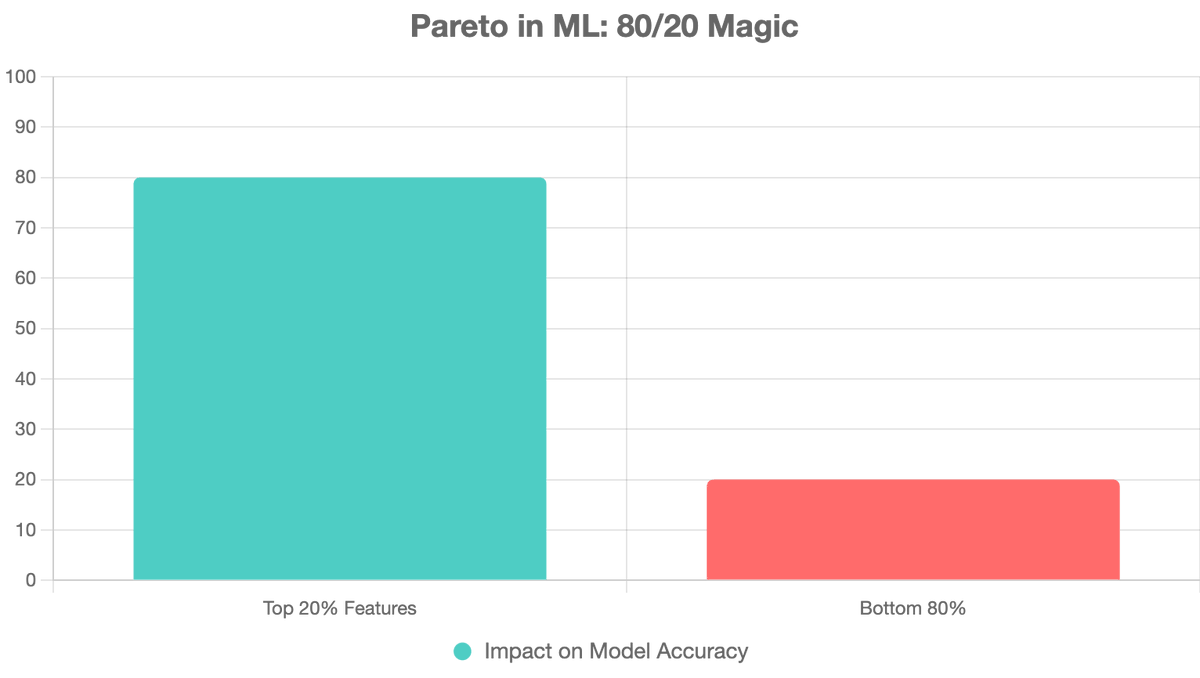

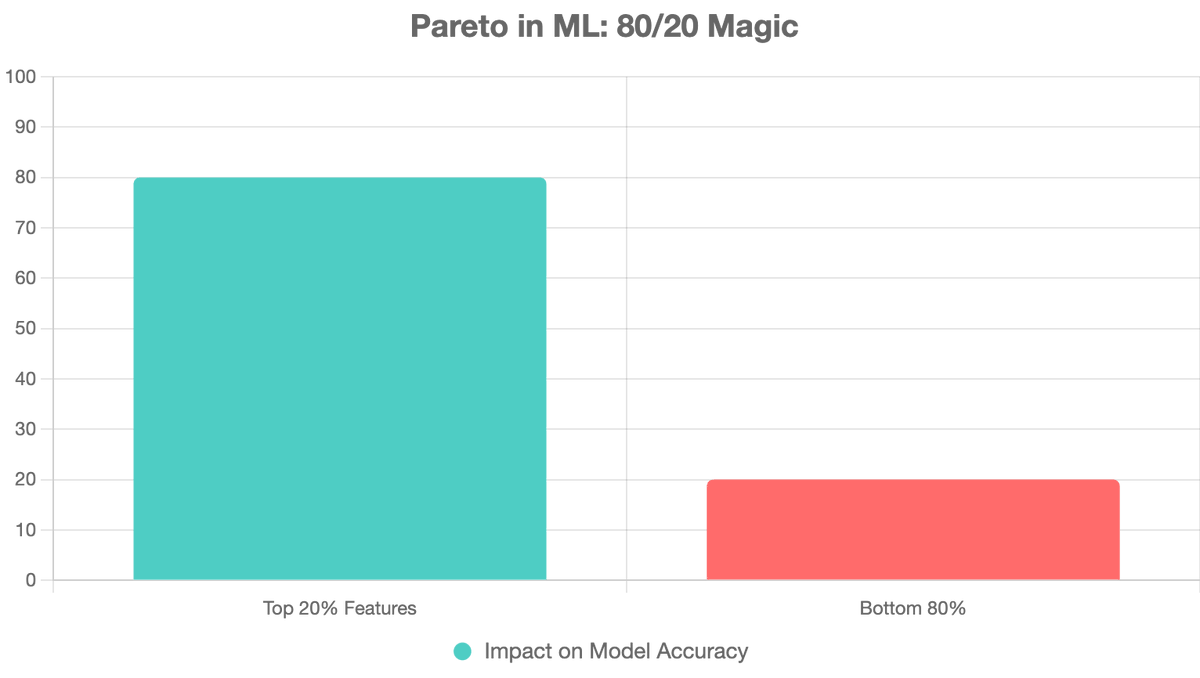

Pareto Principle in DS: roughly 80% of your model's value often comes from 20% of your features/data. Ditch the fluff; focus wins! Did you prioritize on your last project? Spill! #DataScience #MLTips

Bro, this one's wild: in ML, we use random seeds to lock in reproducibility! Fix a seed and your "random" results come out the same every time. It's like a game of Ludo where you always get the same sequence of dice rolls! 🎲 #MLTips
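
A minimal sketch of the seeding idea, using the standard library's `random.Random` as the generator. `noisy_samples` is a hypothetical helper made up for illustration. In a real training script you would typically also seed NumPy (e.g. `np.random.default_rng(seed)`) and your framework (e.g. `torch.manual_seed(seed)`), and even then some GPU operations can remain nondeterministic.

```python
import random

def noisy_samples(seed, n=5):
    # Hypothetical helper: a dedicated generator seeded once,
    # so the same seed always yields the same sequence
    rng = random.Random(seed)
    return [rng.randint(1, 6) for _ in range(n)]  # like rolling a die n times

first = noisy_samples(seed=42)
second = noisy_samples(seed=42)
print(first == second)  # True: same seed, same "random" rolls
```

Using a local `random.Random(seed)` instead of seeding the global module keeps other code from silently consuming numbers from your sequence.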

"Boost your ML skills! Quick tip: Use early stopping to prevent overfitting in your models. New library: Hugging Face's Transformers 4.21 released! Shortcut: Use libraries like TensorFlow or PyTorch for efficient model training. #MachineLearning #MLTips #DeepLearning…

"Boost your ML skills! * Use Transfer Learning to speed up model training * Try Gradient Boosting for robust predictions * Update yourself on the latest TensorFlow & PyTorch releases #MachineLearning #MLTips #DeepLearning #AI"

"Boost Your ML Skills! Tip: Use early stopping to prevent overfitting in neural networks. News: Google announces new AI chips for edge devices. Shortcut: Try LSTM layers for time-series forecasting. #MachineLearning #AI #MLTips #DataScience"

Your ML model might be underperforming because of unscaled features. 📉 Start scaling. Start winning. 💡 #FeatureScaling #MLTips #DataScience
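
Scale matters mostly for distance-based and gradient-trained models (k-NN, SVMs, linear models fit by gradient descent); tree-based models are largely insensitive to it. Below is a small z-score standardization sketch on made-up data; scikit-learn's `StandardScaler` does the same thing, and in practice the mean and standard deviation should be computed on the training split only.

```python
from statistics import mean, pstdev

def standardize(column):
    # z-score: subtract the mean, divide by the (population) standard deviation
    mu = mean(column)
    sigma = pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]  # a constant feature carries no information
    return [(x - mu) / sigma for x in column]

# Hypothetical features on very different scales
incomes = [30_000, 45_000, 60_000, 75_000, 90_000]
ages = [22, 35, 41, 29, 53]

scaled_incomes = standardize(incomes)
scaled_ages = standardize(ages)
print(scaled_incomes)
print(scaled_ages)
```

After scaling, both features have mean 0 and standard deviation 1, so neither dominates a distance computation just because of its units.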

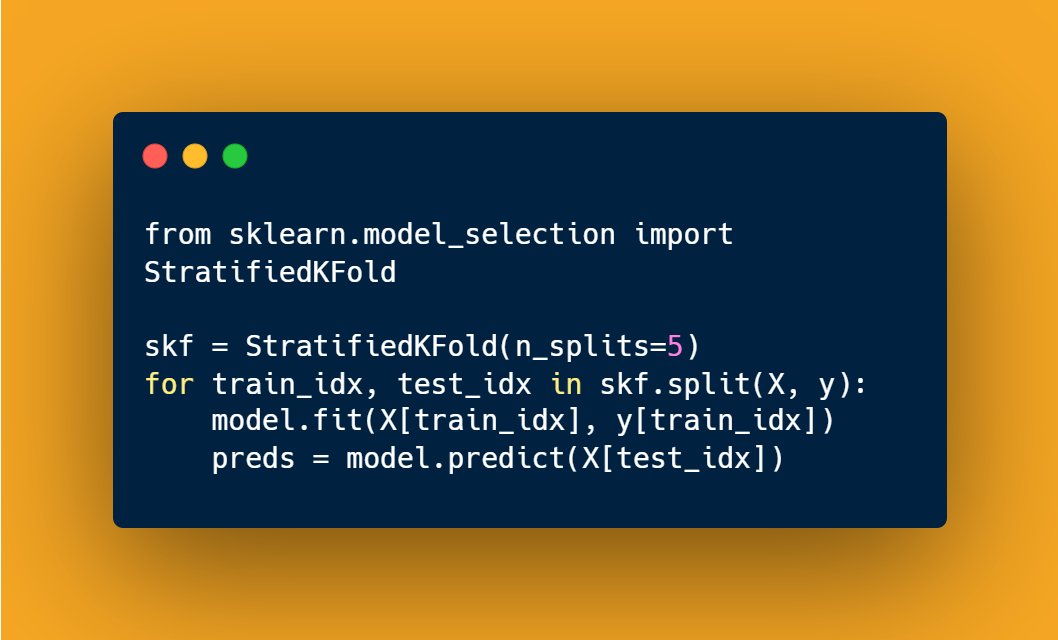

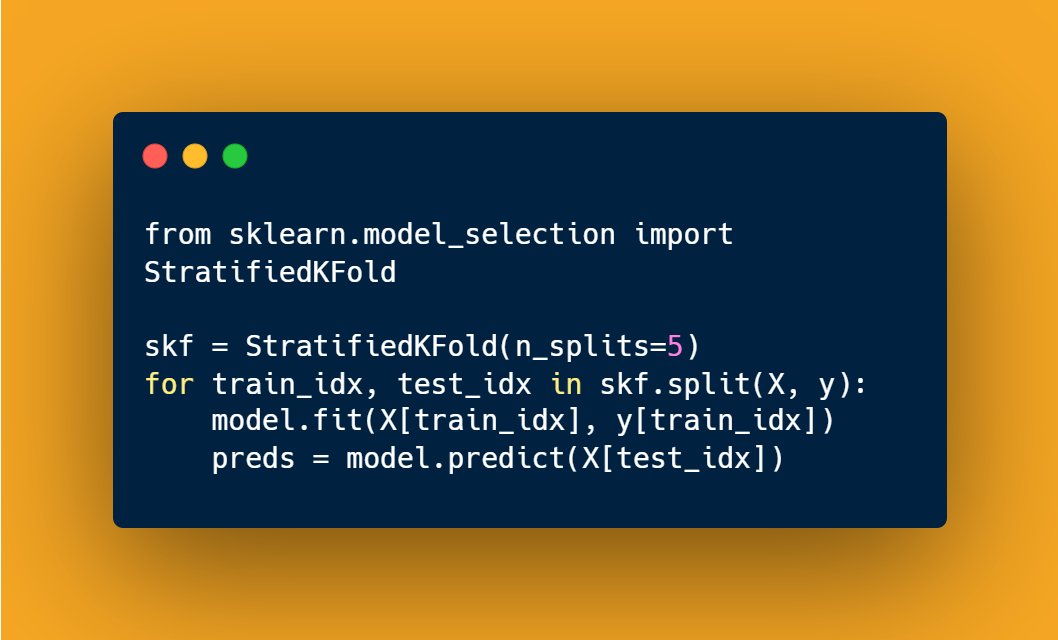

Use cross-validation with stratified sampling to avoid biased model evaluation on imbalanced datasets! #MachineLearning #MLTips #AI #Python #DataScience #ModelTraining #SEO #MLOps #DeepLearning
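
A from-scratch sketch of how stratified folds are built, assuming a toy label list with a 90/10 class imbalance. Indices are grouped by class and dealt round-robin, so each fold keeps roughly the original class ratio. This version does not shuffle; scikit-learn's `StratifiedKFold(n_splits=5, shuffle=True, random_state=...)` is the usual ready-made option.

```python
from collections import defaultdict

def stratified_folds(labels, k):
    # Group sample indices by class label
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    # Deal indices class by class in one continuous round-robin,
    # so every fold gets a proportional share of each class
    position = 0
    for indices in by_class.values():
        for idx in indices:
            folds[position % k].append(idx)
            position += 1
    return folds

# Hypothetical imbalanced labels: 90 negatives, 10 positives
labels = [0] * 90 + [1] * 10
folds = stratified_folds(labels, k=5)
for fold in folds:
    print(len(fold), sum(labels[i] for i in fold))  # each fold: 20 samples, 2 positives
```

With plain (unstratified, unshuffled) k-fold on this ordering, the last fold would hold every positive and the others none, which is exactly the biased evaluation the tip warns about.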

Use early stopping in model training to prevent overfitting — it's a game-changer for model generalization! 🎯 💡 Train smarter, not longer. #MachineLearning #MLTips #AI #DataScience #Python #ScikitLearn #ModelOptimization #SEO #DevTips #MLOps
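
Early stopping watches a validation metric and halts once it stops improving for `patience` epochs. The sketch below replays that logic on a hypothetical list of validation losses; in Keras the equivalent is roughly `EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)`.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: remember this epoch and reset the counter
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # Stop training; you would restore the weights from best_epoch
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical curve: validation loss falls, then rises as the model overfits
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
best, stopped = early_stopping_epoch(val_losses, patience=3)
print(best, stopped)  # 3 6
```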

🤖 Mastering Machine Learning? Use Pipeline + GridSearchCV in scikit-learn to streamline preprocessing and model tuning in one go. ⚡ Clean. Efficient. Tuned. #MachineLearning #MLTips #ScikitLearn #DataScience #Python #AI #MLPipeline
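
A minimal scikit-learn sketch of the Pipeline + GridSearchCV pattern, assuming the built-in iris dataset and a logistic-regression model chosen purely for illustration. Putting the scaler inside the pipeline means every cross-validation split refits it on its own training fold, which avoids leaking validation statistics into preprocessing.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Preprocessing and model chained into one estimator
pipe = Pipeline([
    ("scaler", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Parameters of a pipeline step are addressed as <step name>__<parameter>
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}

search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

After fitting, `search.best_estimator_` is the whole refit pipeline, so new data goes through the same scaling automatically.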

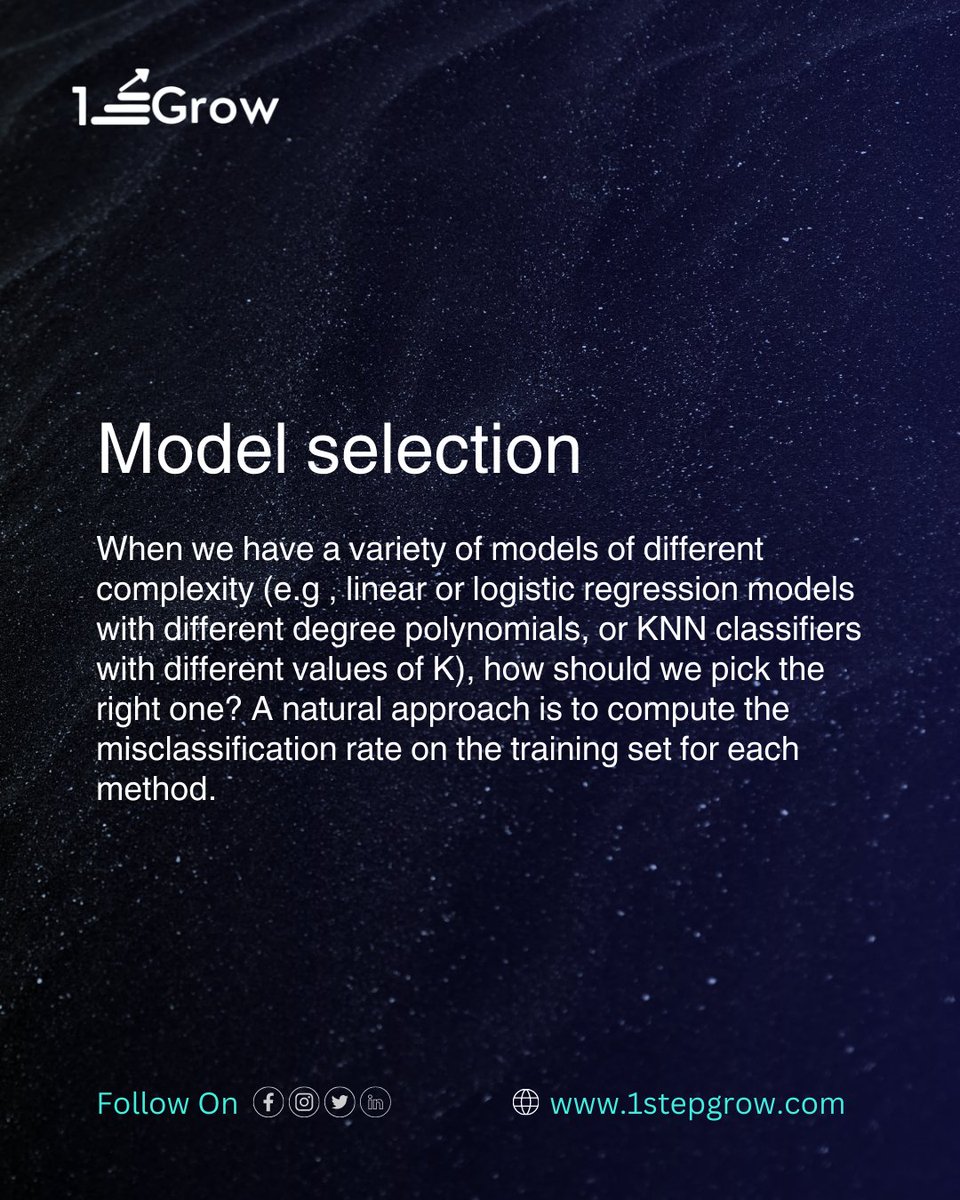

🧠 Unveiling Machine Learning Secrets! 🤖 Discover the core of ML in Part 3 of our Cheat Sheet: 🔍 Optimization 🤖 K-nearest neighbors 🔄 Cross-validation 🔍 Model selection Follow @1stepGrow for continuous learning! #MachineLearning #TechEd #MLTips #LearnWith1StepGrow

🚀 ML Wisdom Unveiled! 🧠 Boost your #MachineLearning journey with Cheat Sheet 21. Dive into model uncertainty, robust predictions, and master the art of balancing complexity. 📚 Follow @1stepGrow for the latest in ML evolution. #MLTips #DataScience

🛠️ **The 80/20 Rule in ML:** We spend 80% of the time on data cleaning and feature engineering, and 20% on model training. If your dataset is messy, your model will be weak. What's your top data cleaning tool (Pandas, Dask, Spark, etc.)? #DataScience #MLTips #DataEngineering

Great roadmap share! After 15+ years building ML systems, I'd add: focus heavily on linear algebra & statistics first. They're the foundation for everything else. The math seems daunting but it clicks when you apply it to real projects. #MLtips

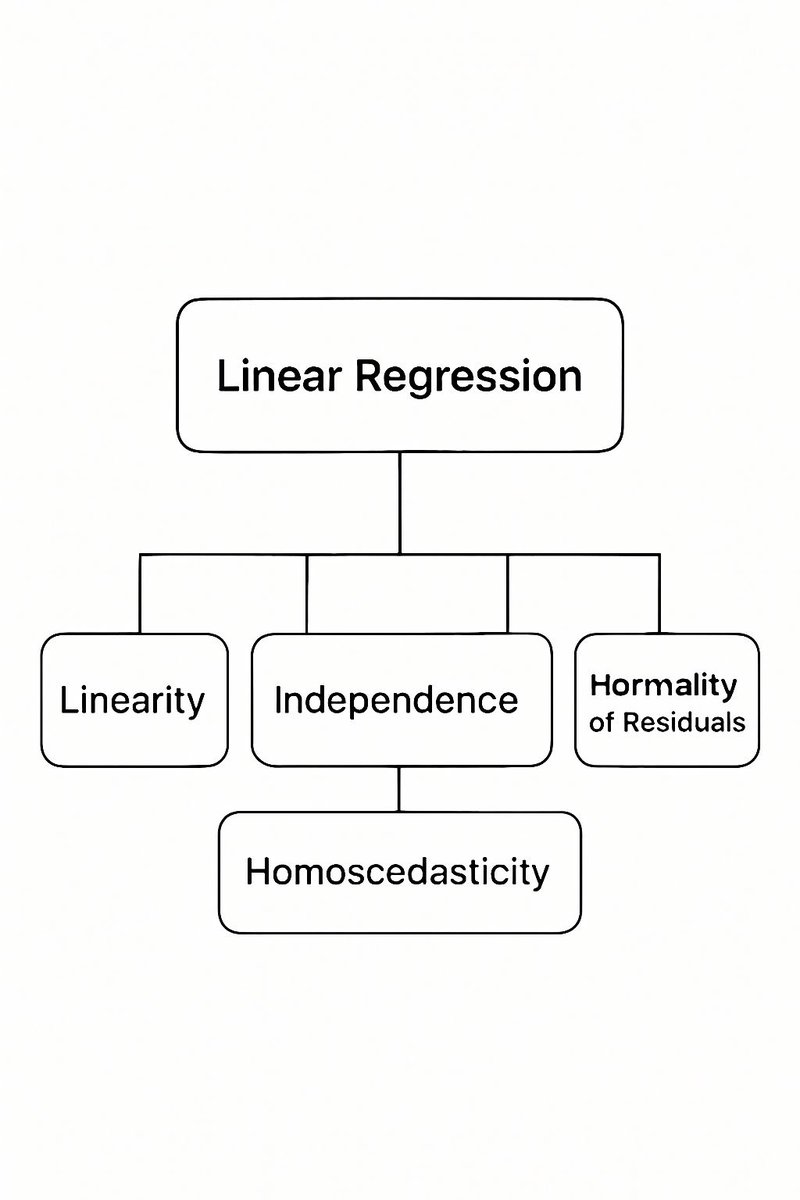

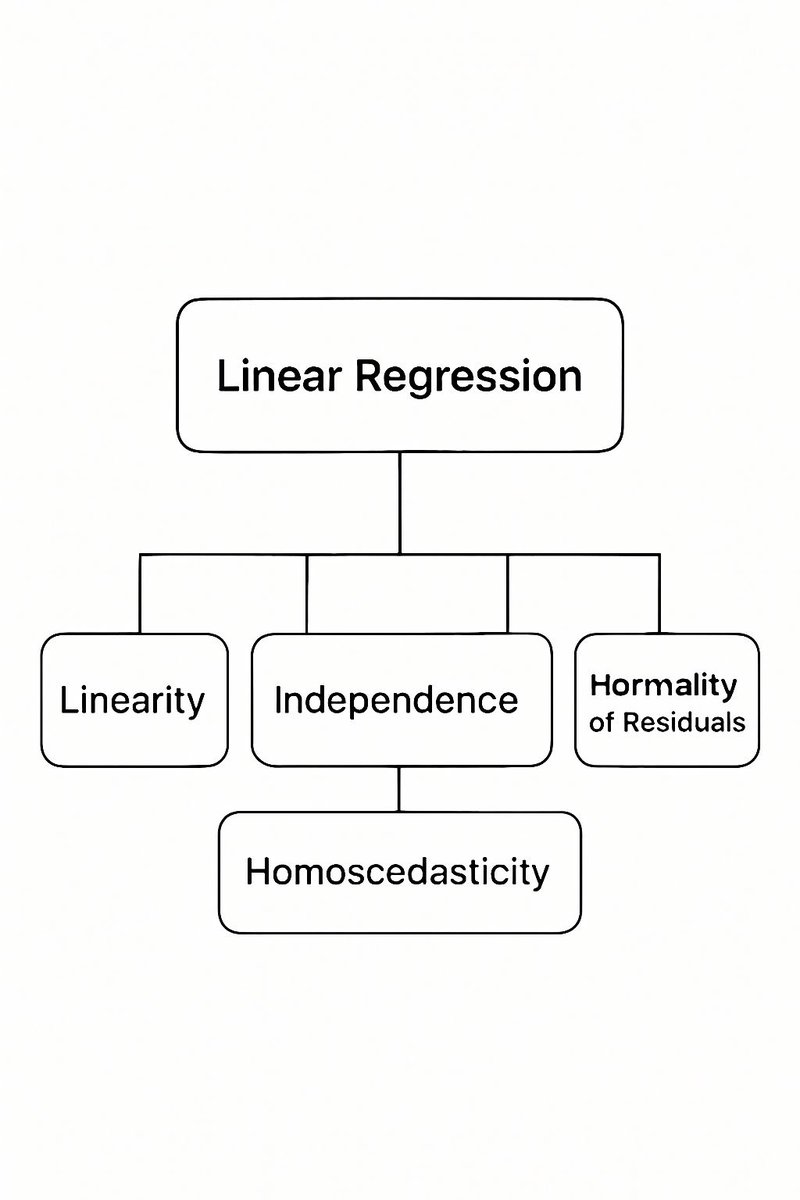

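Multicollinearity happens when predictors in linear regression are highly correlated with each other. It inflates the variance of the coefficient estimates, making them unstable and hard to interpret! Check the variance inflation factor (VIF) and address it before trusting your results. #DataScience #MLTips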
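
The VIF of predictor j is 1 / (1 - R²_j), where R²_j comes from regressing predictor j on all the other predictors. Here is a NumPy sketch on synthetic data where `x2` is deliberately almost a copy of `x1`; the threshold of 5 or 10 often quoted for a "high" VIF is a rule of thumb, not a hard limit. statsmodels also ships `variance_inflation_factor` in `statsmodels.stats.outliers_influence`.

```python
import numpy as np

def vif(X):
    """Variance inflation factor for each column of a 2-D array X."""
    n, p = X.shape
    scores = []
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress column j on the remaining columns, with an intercept
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        residuals = target - A @ coef
        r2 = 1.0 - residuals.var() / target.var()
        scores.append(1.0 / (1.0 - r2))
    return scores

# Synthetic predictors
rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + rng.normal(scale=0.1, size=200)  # nearly a copy of x1
x3 = rng.normal(size=200)                   # independent of both
scores = vif(np.column_stack([x1, x2, x3]))
print([round(v, 1) for v in scores])  # x1 and x2 large, x3 close to 1
```

Typical fixes are dropping one of the correlated predictors, combining them, or switching to a regularized model such as ridge regression.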

Label smoothing = teaching your model humility 😌 Instead of “this class = 100%,” you say “this class = 90%, and the other classes share the remaining 10%.” It reduces overconfidence, handles noisy labels & boosts generalization! #MachineLearning #AI #MLTips
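
Two common ways to build smoothed targets, sketched in plain Python with `epsilon = 0.1`. The first matches the tweet's numbers (true class 0.9, the other classes split 0.1). The second is the formulation used by, for example, PyTorch's `CrossEntropyLoss(label_smoothing=...)` and Keras' `CategoricalCrossentropy(label_smoothing=...)`: it mixes the one-hot target with a uniform distribution, so every class, including the true one, receives `epsilon / num_classes`.

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    # Variant from the tip: true class keeps 1 - epsilon,
    # the remaining epsilon is split evenly across the other classes
    off_value = epsilon / (num_classes - 1)
    return [1.0 - epsilon if c == true_class else off_value for c in range(num_classes)]

def smooth_labels_uniform(true_class, num_classes, epsilon=0.1):
    # Uniform-mixture formulation: (1 - epsilon) * one_hot + epsilon / num_classes
    return [(1.0 - epsilon) * (1.0 if c == true_class else 0.0) + epsilon / num_classes
            for c in range(num_classes)]

print(smooth_labels(0, 3))          # true class 0.9, the others 0.05 each
print(smooth_labels_uniform(0, 3))  # true class about 0.933, the others about 0.033
```

Both versions still sum to 1, so they remain valid probability targets for a cross-entropy loss.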

Never settle for the first model you train 🚀 Always compare multiple models: simple vs. complex, interpretability vs. performance, accuracy vs. efficiency. One dataset, multiple perspectives = smarter ML decisions 🤖💡 #AI #MachineLearning #MLTips
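
A sketch of a fair side-by-side comparison with scikit-learn, assuming the built-in breast-cancer dataset and three candidates picked for illustration: a scaled logistic regression and a shallow tree (simple, interpretable) against a random forest (more complex). Passing `cv=5` with a classifier uses the same deterministic stratified folds for every model, so the scores are directly comparable.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

candidates = {
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "shallow tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

results = {}
for name, model in candidates.items():
    # Same dataset, same 5 folds, same metric (accuracy) for every candidate
    scores = cross_val_score(model, X, y, cv=5)
    results[name] = (scores.mean(), scores.std())
    print(f"{name}: {scores.mean():.3f} ± {scores.std():.3f}")
```

If a simpler model lands within the fold-to-fold spread of a complex one, its interpretability and lower cost may make it the better choice.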

📉 Overfitting in DL? Use dropout, data augmentation, early stopping, and weight decay to generalize better. #DeepLearning #Overfitting #MLTips
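
Of the four techniques listed, dropout is the easiest to show in a few lines. Below is a sketch of "inverted" dropout in plain Python (`inverted_dropout` is a hypothetical name): during training each unit is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`, so nothing needs rescaling at inference. Framework layers such as PyTorch's `nn.Dropout` and Keras' `Dropout` follow the same scheme.

```python
import random

def inverted_dropout(activations, rate, rng, training=True):
    # At inference time dropout is a no-op
    if not training or rate == 0.0:
        return list(activations)
    keep_prob = 1.0 - rate
    # Zero each unit with probability `rate`; scale survivors by 1 / keep_prob
    # so the expected activation matches what the network sees at inference
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]

rng = random.Random(0)
acts = [1.0] * 10_000
dropped = inverted_dropout(acts, rate=0.5, rng=rng)
print(sum(dropped) / len(dropped))  # close to 1.0 on average
```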

💡 Quick feature engineering hacks: dates → day/week/month; text → word counts & sentiment; missing values → don’t panic, just impute! Better features, better predictions. 🚀 #MLTips #FeatureEngineering #AI
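
A standard-library sketch of three of these hacks; the helper names and sample inputs are hypothetical. Sentiment is left out because it needs a lexicon or a trained model. For imputation, compute the fill value on the training data only and reuse it on new data.

```python
from datetime import date
from statistics import median

def date_features(d):
    # Split one date into parts a model can use directly
    return {"day": d.day, "weekday": d.weekday(), "iso_week": d.isocalendar()[1], "month": d.month}

def text_features(text):
    # Simple length-based text features
    words = text.split()
    return {"word_count": len(words), "char_count": len(text)}

def impute_median(values):
    # Replace missing entries (None) with the median of the observed ones
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]

print(date_features(date(2024, 3, 15)))   # a Friday (weekday 4) in ISO week 11
print(text_features("great product, fast shipping"))
print(impute_median([3.0, None, 5.0, 4.0, None]))  # [3.0, 4.0, 5.0, 4.0, 4.0]
```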

Great models start with great features: normalize, encode, combine, transform. A small tweak can boost performance! #MachineLearning #FeatureEngineering #MLTips
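
Normalizing is covered by ordinary scaling; encoding is the step that trips people up. Below is a minimal one-hot encoding sketch, with hypothetical helper names and data. The vocabulary is learned from training data only, and unseen categories map to an all-zero row, similar to scikit-learn's `OneHotEncoder(handle_unknown="ignore")`.

```python
def fit_one_hot(values):
    # Learn the category vocabulary from training data (sorted for a stable column order)
    return sorted(set(values))

def transform_one_hot(values, categories):
    # Unseen categories become an all-zero row instead of raising an error
    return [[1 if v == c else 0 for c in categories] for v in values]

train_colors = ["red", "green", "blue", "green"]
categories = fit_one_hot(train_colors)   # ['blue', 'green', 'red']
encoded = transform_one_hot(["green", "purple"], categories)
print(encoded)  # [[0, 1, 0], [0, 0, 0]]
```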

Contributing to the ML community: in this post I covered the real mindset that helped me move forward. 🔗bhavy7.substack.com/p/code-first-o… #MachineLearning #MLCareer #MLTips #GenerativeAI

Evaluate the model: Accuracy will vary with different 'k' values and datasets. #ModelEvaluation #MLTips
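
Assuming the 'k' here is the neighbour count in k-nearest neighbours, this sketch shows how to measure accuracy for several values of k on one held-out split. The two overlapping Gaussian blobs are made-up toy data, and the helpers are hypothetical. Only odd k are used, which rules out voting ties with two classes.

```python
import math
import random
from collections import Counter

def knn_predict(train_X, train_y, x, k):
    # Indices of the k training points closest to x (Euclidean distance)
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    # Majority vote among their labels
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]

def accuracy(train_X, train_y, test_X, test_y, k):
    hits = sum(knn_predict(train_X, train_y, x, k) == label for x, label in zip(test_X, test_y))
    return hits / len(test_y)

# Hypothetical toy data: two overlapping 2-D Gaussian blobs
rng = random.Random(1)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), 0) for _ in range(50)]
data += [((rng.gauss(2, 1), rng.gauss(2, 1)), 1) for _ in range(50)]
rng.shuffle(data)
train, test = data[:70], data[70:]
train_X, train_y = [p for p, _ in train], [c for _, c in train]
test_X, test_y = [p for p, _ in test], [c for _, c in test]

scores_by_k = {k: accuracy(train_X, train_y, test_X, test_y, k) for k in (1, 3, 5, 15)}
print(scores_by_k)
```

A single split is noisy; to actually pick k, average these accuracies over cross-validation folds.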

🧮 Why does your model perform worse on new data? A common culprit is overfitting: the model learned the noise, not the pattern. Tip: ✅ Use cross-validation ✅ Simplify features ✅ Regularize. A good model predicts; it doesn't memorize. #MLTips #Overfitting
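
To illustrate the "Regularize" tip, here is a NumPy sketch of ridge (L2) regression in closed form, w = (XᵀX + αI)⁻¹Xᵀy, on synthetic data with many features but only one that matters. The intercept is omitted for brevity, and `ridge_fit` is a hypothetical helper. Increasing `alpha` shrinks the weight vector, which limits how much noise the model can fit.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    # Closed-form ridge regression (no intercept): solve (X^T X + alpha*I) w = X^T y
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

# Synthetic data: few samples, many features, so plain least squares overfits easily
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 10))
true_w = np.zeros(10)
true_w[0] = 2.0  # only the first feature actually matters
y = X @ true_w + rng.normal(scale=0.5, size=30)

norms = {}
for alpha in (0.0, 1.0, 10.0):
    w = ridge_fit(X, y, alpha)
    norms[alpha] = float(np.linalg.norm(w))
    print(alpha, round(norms[alpha], 3))  # the weight norm shrinks as alpha grows
```

In practice `alpha` is tuned with cross-validation rather than fixed by hand.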

Bias = Oversimplification. A model with high #bias: 1⃣ Makes simplistic assumptions 2⃣ Misses essential patterns 3⃣ Performs poorly on both training and unseen data (underfitting) 4⃣ Is, in short, too lazy to learn enough. (2/n) #AIModels #MLTips
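
A quick NumPy demonstration of point 3⃣, using synthetic data with a curved (quadratic) pattern. A straight line is too simple to capture the curve, so its error is high even on the training data. A degree-2 fit brings the training error down to roughly the noise variance (0.5² = 0.25 here).

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 100)
y = x ** 2 + rng.normal(scale=0.5, size=x.size)  # the true pattern is curved

def train_mse(degree):
    # Fit a polynomial of the given degree and measure error on the same (training) data
    coeffs = np.polyfit(x, y, deg=degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

mse = {d: train_mse(d) for d in (1, 2)}
print("degree 1:", round(mse[1], 2))  # high bias: large error even on training data
print("degree 2:", round(mse[2], 2))  # close to the noise variance
```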
