#probabilitymodels search results

buff.ly/2b28qmq #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b27RJt #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b284wk #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b27QVW #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

Introduction to Probability Models - Sheldon M. Ross - 10th Edition ➖➖➖ bit.ly/2LBXBdC ➖➖➖ #EstadísticayProbabilidad #IntroductiontoProbabilityModels #ProbabilityModels #EstadísticaInferencial #elsolucionario #pdf #librosgratis #universidad

buff.ly/2b28LW6 #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

📚 Introduction to Probability Models - Sheldon M. Ross - 12th Edition ➖➖➖ ✅ bit.ly/3bmpd1E ➖➖➖ #EstadísticayProbabilidad #Probability #ProbabilityModels #EstadísticaInferencial #elsolucionario #pdf #librosgratis #universidad #ebooks

buff.ly/2b28u5A #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

📚 Introduction to Probability Models - Sheldon M. Ross - 6th Edition ➖➖➖ ✅ bit.ly/32UrAXw ➖➖➖ #EstadísticayProbabilidad #Probability #ProbabilityModels #EstadísticaInferencial #elsolucionario #librosgratis #librosPdf #escuela #estudiante

buff.ly/2b28DpJ #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b28xyt #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b28suu #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b28uCM #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

Is the English Premier League competitive? Prof @NialFriel in his Insight Winter Lecture explains football #probabilitymodels without equations 🤔 ⚽️ #BayesianStatistics #football @ucddublin @DCU @UCC @uniofgalway @scienceirel

buff.ly/2b28Vgt #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b28sL4 #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b28kLu #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

buff.ly/2b284MQ #DataScience #Probabilitymodels #relationalDBMS #PNR #adventure #predictstore #TeamBuilding…

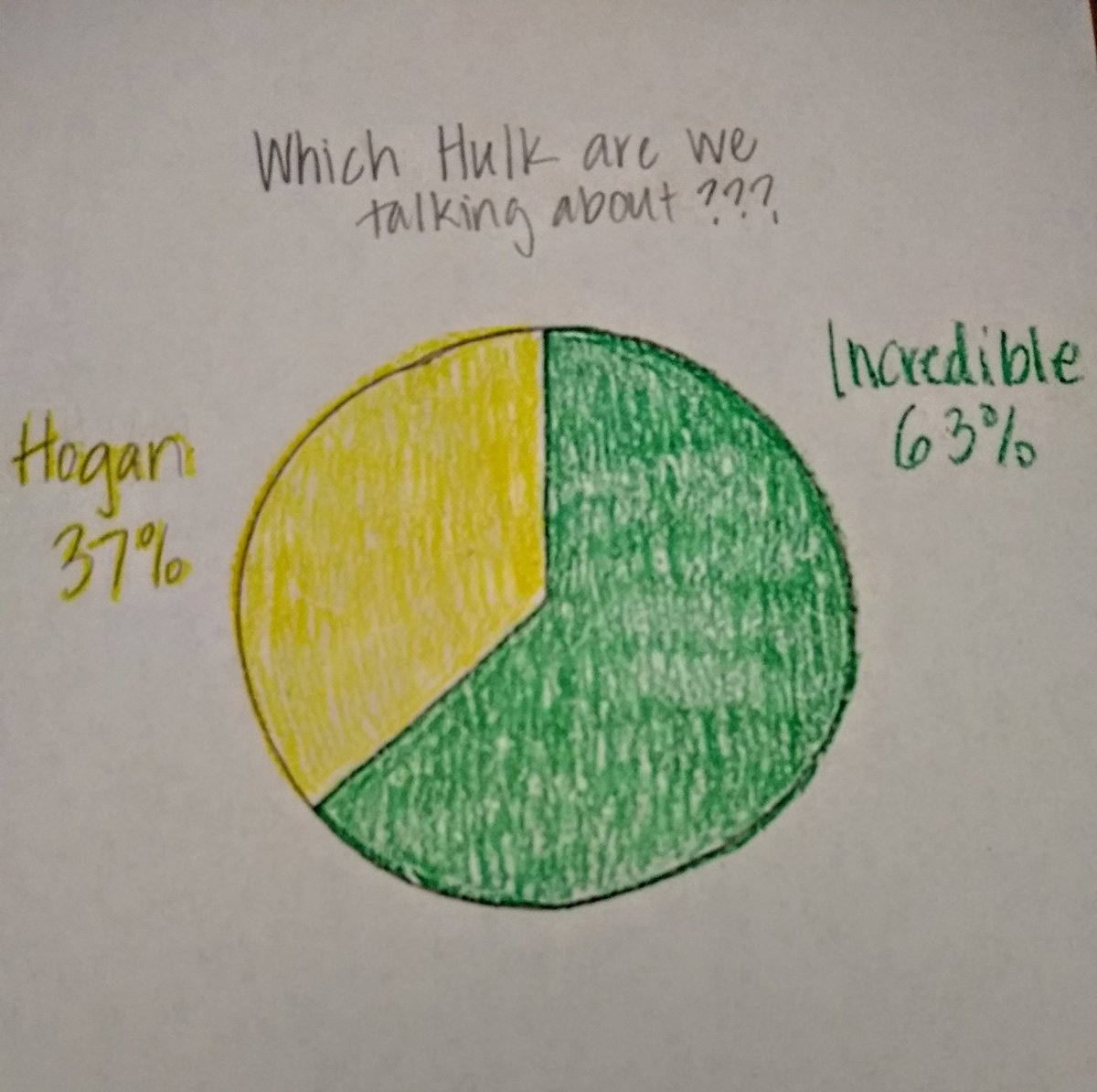

I often have to rely on previous data to figure out which Hulk my kids are talking about since context tells me it could go either way. #boymom #forecasting #probabilitymodels

Integrated Probability Model (Powell + Age of Disclosure + Grok/Copilot) open.substack.com/pub/jackpowell…

If I understood correctly, your overall point for that one is that the probability method isn't reliable because there are times when you can excuse one option as always being the cause even if there's evidence that it's NOT the cause. This is actually a very good point; my (c)

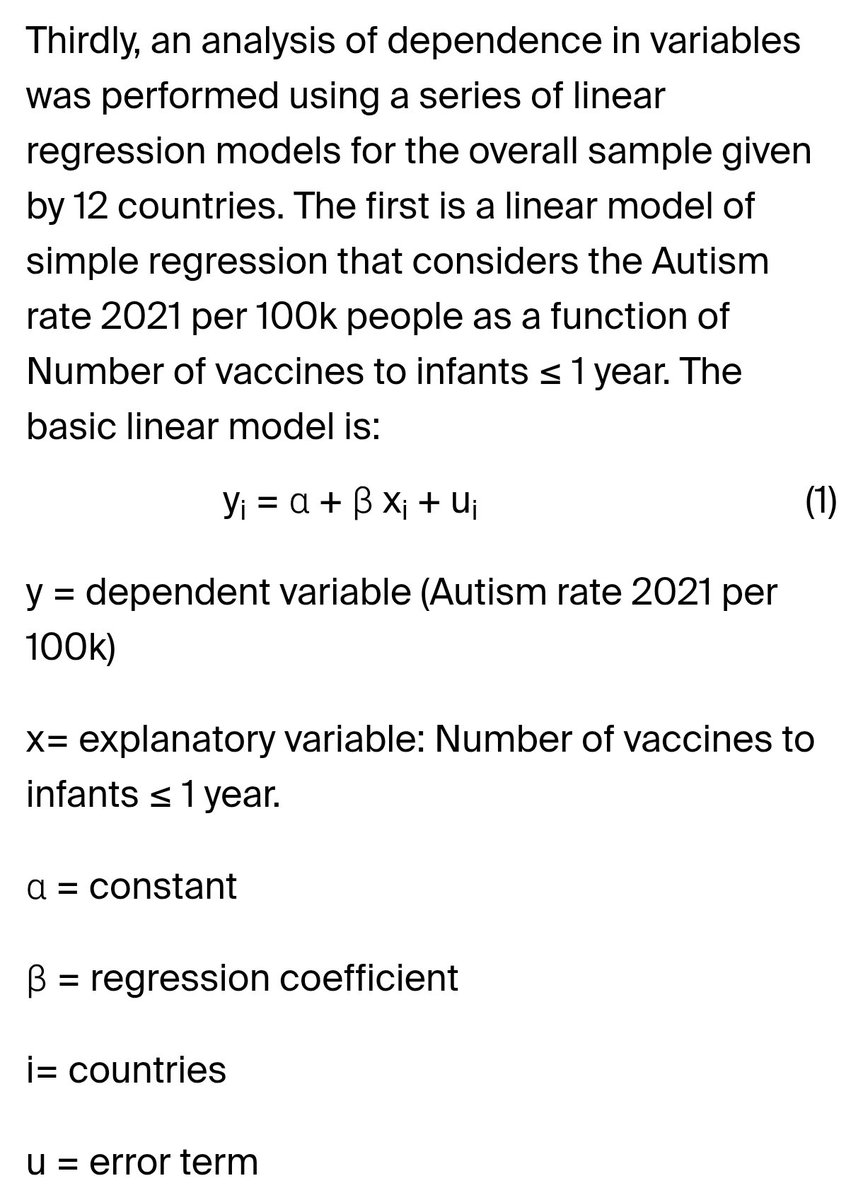

Even from a quick scroll I can already see something wrong here: the linear model given in the formula certainly violates some of the assumptions on which the validity of the results depends, whatever comes out.

"Probabilistic Machine Learning" by Kevin P. Murphy Download link can be found at: probml.github.io/pml-book/book1…

10-Page Probability Cheat-Sheet. #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #Python #RStats #TensorFlow #JavaScript #ReactJS #CloudComputing #Serverless #Linux #Statistics #Programming #Coding #100DaysofCode geni.us/Probability-Ch

Even five independent results would mean the odds that the model is false are 1 in 3.2 million. Ten independent results make it 1 in 10 trillion. How else do you suggest I calculate the odds if not by the quantity of existing data supporting it?
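The figures in this post match a simple product rule if each independent result is assumed to have a 1-in-20 chance (p = 0.05) of turning up when the model is false. The post does not state that rate, so treat it as an assumption. A minimal sketch of the arithmetic, using exact fractions:

```python
from fractions import Fraction

# ASSUMPTION: each independent supporting result would occur with
# probability 1/20 if the model were false. The post does not say this;
# it is the per-result rate that reproduces the quoted figures.
P_RESULT_IF_FALSE = Fraction(1, 20)


def chance_all_results_if_false(n_results: int) -> Fraction:
    """Probability of seeing n independent supporting results if the model is false."""
    return P_RESULT_IF_FALSE ** n_results


for n in (5, 10):
    p = chance_all_results_if_false(n)
    # Print as "1 in N" with thousands separators.
    print(f"{n} results: 1 in {p.denominator:,}")
```

This prints "1 in 3,200,000" for five results and "1 in 10,240,000,000,000" (about 10 trillion) for ten. Strictly, the quantity computed is the probability of the results given that the model is false; turning it into odds that the model is false would, by Bayes' theorem, also need a prior and the probability of the same results if the model is true.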

The more consistent the accuracy of a model’s predictions, the more likely that model is to be true. The odds of the current model being false given observed reliability are astronomically low. Why should we assume that by default?

Here is a nice introduction to how people use imprecise probabilities / non-Bayesian uncertainty in practice (used to be on the EA forum but now only seems to be on LessWrong), per the field of "decision making under deep uncertainty": lesswrong.com/posts/nHspXJyL…

I didn't phrase it all that well either, but you get the idea. The probability of having the condition given a positive test (the positive predictive value) is sensitivity × prevalence / [sensitivity × prevalence + (1 - specificity) × (1 - prevalence)]
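The formula in this post is Bayes' theorem applied to a diagnostic test. A minimal sketch that evaluates it term by term; the sensitivity, specificity and prevalence values in the usage line are illustrative assumptions, not from the post:

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """P(condition | positive test) via Bayes' theorem."""
    true_pos = sensitivity * prevalence               # P(positive and condition)
    false_pos = (1 - specificity) * (1 - prevalence)  # P(positive and no condition)
    return true_pos / (true_pos + false_pos)


# Illustrative numbers only: a 90%/90% test for a condition with 1% prevalence.
ppv = positive_predictive_value(sensitivity=0.9, specificity=0.9, prevalence=0.01)
print(f"PPV = {ppv:.3f}")
```

With these assumed inputs the result is 0.009 / (0.009 + 0.099) = 1/12, about 0.083: a fairly accurate test still yields mostly false positives when the condition is rare.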

You're not alone in that respect. If you haven't seen it before, the piece at the link below provides a thoughtful discussion of the robustness of those probabilities: tandfonline.com/doi/abs/10.108…

isn't any more impossible. P(picking computable) = 0% while P(picking uncomputable) = 100%, sure, but for all x computable and y uncomputable, P(picking x) = P(picking y) = 0%, no?
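A worked version of this point, assuming X is drawn uniformly from [0, 1] (the post does not name the distribution) and writing C for the computable reals in [0, 1], which form a countable set:

```latex
% Assumption: X ~ Uniform[0, 1]; C = computable reals in [0, 1] (countable).
\begin{align*}
P(X = x) &= \int_x^x 1 \, dt = 0 \quad \text{for every } x \in [0, 1], \\
P(X \in C) &= \sum_{c \in C} P(X = c) = 0 \quad \text{(countable additivity, since } C \text{ is countable)}, \\
P(X \in [0, 1] \setminus C) &= 1 - P(X \in C) = 1.
\end{align*}
```

Every individual point, computable or not, has probability zero; only the sets differ in measure.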

I'm aware you're a CS professor, but have you not taken any classes on Bayesian approaches to probability theory? This is a perfectly reasonable thing! en.wikipedia.org/wiki/Bayesian_…

The code runs correctly. Output: Prior mean: 0.200; Posterior mean: 0.350; 95% Credible Interval: [0.163, 0.566]; P(θ > 0.5 | data): 0.081. It shows a moderate Bayesian update from the skeptical prior (0.2) toward the data (5/10 successes), with a low probability that θ > 0.5.…
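The code behind that output is not shown, so here is a minimal conjugate Beta-Binomial sketch under one stated assumption: a Beta(2, 8) prior. That choice is inferred, not quoted: it is the only Beta prior with mean 0.2 whose posterior mean after 5 successes in 10 trials is 0.35. It uses only the standard library, computing the Beta CDF for integer parameters through the binomial identity P(X ≤ x) = P(Binomial(a + b - 1, x) ≥ a) and inverting it by bisection for the interval.

```python
from math import comb


def beta_cdf_int(x: float, a: int, b: int) -> float:
    """CDF of Beta(a, b) for integer a, b: P(X <= x) = P(Binomial(a + b - 1, x) >= a)."""
    n = a + b - 1
    return sum(comb(n, k) * x**k * (1 - x) ** (n - k) for k in range(a, n + 1))


def beta_quantile_int(q: float, a: int, b: int, tol: float = 1e-10) -> float:
    """Invert the (increasing) Beta CDF on [0, 1] by bisection."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if beta_cdf_int(mid, a, b) < q:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


prior_a, prior_b = 2, 8    # ASSUMED prior: Beta(2, 8), mean 0.2
successes, trials = 5, 10  # data from the post

# Conjugate update: add successes to a, failures to b.
post_a = prior_a + successes
post_b = prior_b + trials - successes

prior_mean = prior_a / (prior_a + prior_b)
post_mean = post_a / (post_a + post_b)
ci = (beta_quantile_int(0.025, post_a, post_b), beta_quantile_int(0.975, post_a, post_b))
p_above_half = 1 - beta_cdf_int(0.5, post_a, post_b)

print(f"Prior mean: {prior_mean:.3f}")
print(f"Posterior mean: {post_mean:.3f}")
print(f"95% Credible Interval: [{ci[0]:.3f}, {ci[1]:.3f}]")
print(f"P(θ > 0.5 | data): {p_above_half:.3f}")
```

The posterior is Beta(7, 13), so the means match the quoted output exactly; the interval and tail probability are computed exactly here and may differ slightly from the quoted figures if the original code used sampling or a different setup.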

You say the probability is irreducible? No, that doesn't correspond to experimental data. Details: howallworks.github.io/theoryofeveryt…

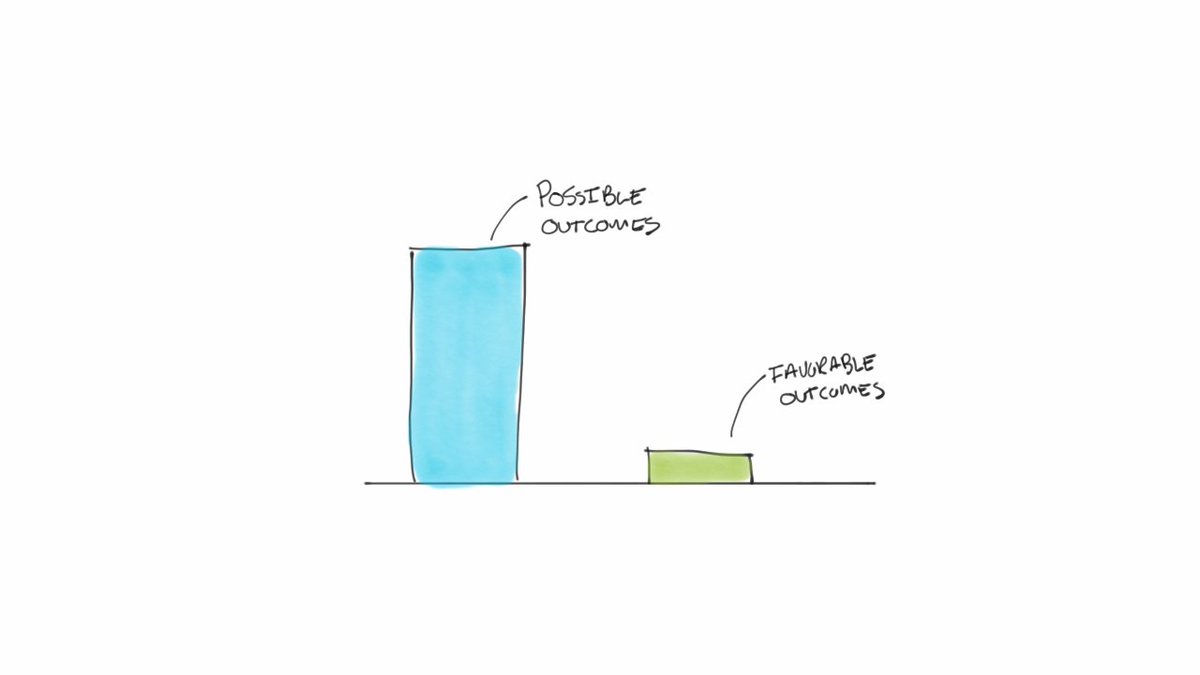

Probabilistic prediction uses statistical models to estimate outcome likelihoods under uncertainty. Methodology: 1) Gather data on variables. 2) Build a model (e.g., Bayesian networks or ML algos) to compute probabilities. 3) Incorporate priors and updates via Bayes' theorem. 4)…
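Steps 1 to 3 of that methodology can be sketched with the simplest possible model: a discrete prior over a few candidate success probabilities, updated one observation at a time with Bayes' theorem. The post mentions Bayesian networks or ML algorithms for step 2; this swaps in a plain grid of hypotheses, and the grid and observations below are hypothetical.

```python
def bayes_update(prior: dict[float, float], outcome: int) -> dict[float, float]:
    """Return the posterior over hypotheses after one 0/1 observation."""
    unnormalized = {
        theta: p * (theta if outcome == 1 else 1 - theta)  # prior x likelihood
        for theta, p in prior.items()
    }
    evidence = sum(unnormalized.values())  # P(outcome), the normalizing constant
    return {theta: w / evidence for theta, w in unnormalized.items()}


def predictive_success(belief: dict[float, float]) -> float:
    """Probability that the next observation is a success."""
    return sum(theta * p for theta, p in belief.items())


hypotheses = [0.1, 0.3, 0.5, 0.7, 0.9]                          # hypothetical grid
belief = {theta: 1 / len(hypotheses) for theta in hypotheses}  # uniform prior
observations = [1, 0, 1, 1, 1]                                  # hypothetical data, 1 = success

for obs in observations:
    belief = bayes_update(belief, obs)

print({theta: round(p, 3) for theta, p in belief.items()})
print(f"P(next success) = {predictive_success(belief):.3f}")
```

After four successes and one failure the posterior is proportional to θ⁴(1 - θ), so θ = 0.7 becomes the most probable hypothesis on this grid.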

This model thinks you should go for it. An extra 7.9% of win probability.

The balance of probabilities is 51% probable.

Probabilistic, I think, in the sense that if you train a probe from the hidden state to the latent (in an experimental scenario where you have it), you have progressively less uncertainty the more context you consider. If for this problem there is a number of observations that would…

Get best MATH 4431/6604 Probability Models Assignment Help at Expertsmind. expertsmind.com/library/comput… #MATH4431 #MATH6604 #ProbabilityModels #AssignmentHelp #OnlineTutor #EngineeringMathematics #CourseHelp #HomeworkHelp #YorkUniversity #Toronto #Canada