#semisupervisedlearningalgorithms search results

With countless tools and steps across the fab floor, robust process time models are critical to maintaining throughput and accuracy. Discover how the industry is leveraging machine learning to improve scheduling performance with @Semi_Dig: tinyurl.com/mv5shz7x

I think semi-supervised would prevent overoptimizing for bias. That's just my two cents.

Autoencoder-based Semi-Supervised Dimensionality Reduction and Clustering for Scientific Ensembles. arxiv.org/abs/2512.11145

Solving Semi-Supervised Few-Shot Learning from an Auto-Annotation Perspective. arxiv.org/abs/2512.10244

SetAD: Semi-Supervised Anomaly Learning in Contextual Sets. arxiv.org/abs/2512.07863

If you're looking for inspiration, I have quite a long list of semi supervised learning tasks for computer vision, many of which have natural analogues in NLP. Here you go ---> fast.ai/posts/2020-01-…

An aux task for LLM to predict the order of next chunk of tokens. Haha this is so 2018! In computer vision this kind of task was all the rage for "self-supervised" learning! This specific one was called "jigsaw". There was also "relative patch location" and "rotation…

Semi-supervised Vision Transformers at Scale abs: arxiv.org/abs/2208.05688 SemiViT-Huge achieves an impressive 80% top-1 accuracy on ImageNet using only 1% labels, which is comparable with Inception-v4 using 100% ImageNet labels

The performance of supervised learning tasks improves with more high-quality labels. However it is expensive to collect many such labels. Semi-supervised learning is one of the paradigms for dealing with label scarcity: lilianweng.github.io/lil-log/2021/1…
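For readers who want something concrete: below is a minimal sketch of one common semi-supervised recipe, self-training with pseudo-labels. It is not taken from the linked post. The nearest-centroid classifier, the function names `centroids` and `self_train`, the `margin` threshold, and the toy data are all assumptions chosen to keep the example small.

```python
from math import dist


def centroids(points, labels):
    """Return the mean point of each class among the labeled examples."""
    sums, counts = {}, {}
    for p, y in zip(points, labels):
        acc = sums.setdefault(y, [0.0] * len(p))
        for i, v in enumerate(p):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: tuple(v / counts[y] for v in acc) for y, acc in sums.items()}


def self_train(labeled, unlabeled, margin=1.0, max_rounds=10):
    """Self-training with a toy nearest-centroid classifier (illustrative).

    Each round, an unlabeled point is pseudo-labeled only if its nearest
    centroid beats the runner-up by at least `margin`; otherwise it stays
    in the unlabeled pool. Requires at least two classes in `labeled`.
    """
    points = [p for p, _ in labeled]
    labels = [y for _, y in labeled]
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(points, labels)
        remaining, added = [], 0
        for p in pool:
            ranked = sorted((dist(p, c), y) for y, c in cents.items())
            (d1, y1), (d2, _) = ranked[0], ranked[1]
            if d2 - d1 >= margin:
                # Confident prediction: treat it as a label from now on.
                points.append(p)
                labels.append(y1)
                added += 1
            else:
                remaining.append(p)
        pool = remaining
        if added == 0 or not pool:
            break
    return centroids(points, labels), pool


# Two labeled points, five unlabeled ones (hypothetical toy data).
labeled = [((0.0, 0.0), "a"), ((4.0, 4.0), "b")]
unlabeled = [(0.5, 0.0), (3.5, 4.0), (1.0, 1.0), (3.0, 3.0), (2.0, 2.0)]
final_centroids, still_unlabeled = self_train(labeled, unlabeled)
```

In this toy run the point `(2.0, 2.0)` sits midway between the two classes, so it never clears the margin and is left unlabeled. Real systems usually threshold on a model's predicted probability instead of a distance gap.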

Glad to share our recent success in applying semi-supervised learning to Google's most fundamental product, Search. Our approach, semi-supervised distillation (SSD), is a simplified version of Noisy Student and an alternative to product distillation. Blog: ai.googleblog.com/2021/07/from-v…

Understanding how to best use unlabeled examples in real-world applications of #ML is often challenging. Today we present a semi-supervised learning approach that can be applied at scale to achieve performance gains beyond that of fully-supervised models. goo.gle/3r7OfJY

Let's talk about learning problems in machine learning: ▫️ Supervised Learning ▫️ Unsupervised Learning ▫️ Reinforcement Learning And some hybrid approaches: ▫️ Semi-Supervised Learning ▫️ Self-Supervised Learning ▫️ Multi-Instance Learning Grab your ☕️, and let's do this👇

Should you deploy and use Semi-Supervised Learning in production? Here are some of our learnings at @uizardIO written by our talented Varun: medium.com/@nairvarun18/f…

Some new progress on Semi-supervised learning for speech recognition. Noisy student training improves accuracy on the LibriSpeech benchmark. The gains are especially big on the noisy test set (“Test-other”). Link: arxiv.org/abs/2005.09629

Here's a notebook showing how to perform semi-supervised classification w/ GANs: colab.research.google.com/github/sayakpa…. Done w/ @TensorFlow 2 & most importantly `tf.keras`. I could not figure out how to override `train_step` for the matter, so decided to keep everything inside a custom loop.

There are now 3 papers that successfully use Self-Supervised Learning for visual feature learning: MoCo: arxiv.org/abs/1911.05722 PIRL: arxiv.org/abs/1912.01991 And this, below. All three use some form of Siamese net.

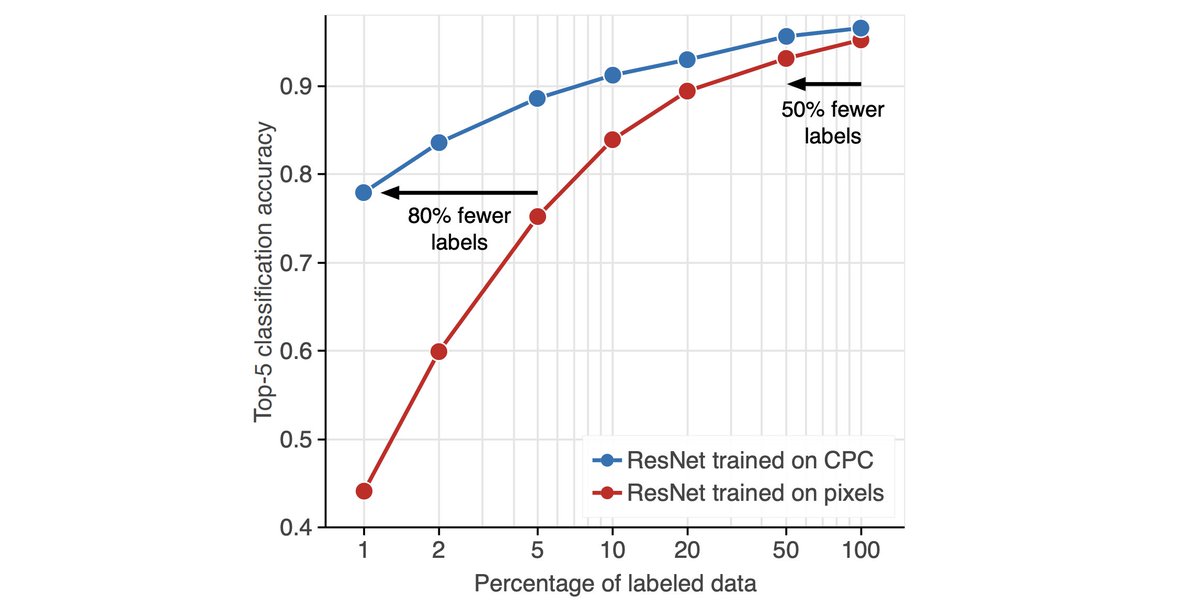

Unsupervised pre-training now outperforms supervised learning on ImageNet for any data regime (see figure) and also for transfer learning to Pascal VOC object detection arxiv.org/abs/1905.09272…

Billion-scale semi-weakly-supervised learning yields record results on image and video recognition. And it's open source. ai.facebook.com/blog/billion-s…

We're developing alternative ways to train our AI systems so that we can do more with less labeled training data overall. Learn how our “semi-weak supervision” method is delivering state-of-the-art performance for highly efficient, production-ready models. ai.facebook.com/blog/billion-s…

First paper published for Special Issue "Humanistic Data Mining: Tools and Applications": An Auto-Adjustable Semi-Supervised Self-Training Algorithm mdpi.com/1999-4893/11/9… mdpi.com/journal/algori… #Semisupervisedlearningalgorithms #selflabeling #selftraining

New #ACL2018 paper on StructVAE, semi-supervised learning with structured latent variables: arxiv.org/abs/1806.07832 A new shift-reduce method for seq2tree neural models, semantic parsing results robust to small data, and nice analysis of why semi-supervised learning works!
