#stochasticprogramming risultati di ricerca

Fine-Tuning Discrete Diffusion Models with Policy Gradient Methods. arxiv.org/abs/2502.01384

Unless that selection/choice/sense of was written in stone/encoded/predetermined itself 💀 Even giving leeway to probabilistic/reactionary programming/code/information instead of 'strictly confined', it's acting within (just) a (larger) predetermined and confined set of

We analyze the implicit bias of constant step stochastic subgradient descent (SGD). We consider the setting of binary classification with homogeneous neural networks -a large class of deep neural networks with ReLU-type activation functions such as MLPs and CNNs without biases.…

unless reasoning might be continuous in a sufficiently higher dimension latent space, and what you perceived as combinatorial is only the result of projecting it to a lower dimension. The blessing of dimensionality :) btw, you can still optimize in a discrete space, e.g., word…

This pertains to dimensionality. So higher dimensionality SC becomes problematic computation-wise, and monte carlo may not work for generalized "policy“ optimization in this cases. DL tries to tackle this via function approximation, and RL basically learns policy directly.…

Iterative Sampling Methods for Sinkhorn Distributionally Robust Optimization. arxiv.org/abs/2512.12550

The training process moves the state of the network toward a manifold attractor in high dimensional vector space, changing the state of the system doesn’t change that process, or the manifold in vector space the system state is heading for, just the starting point going forward…

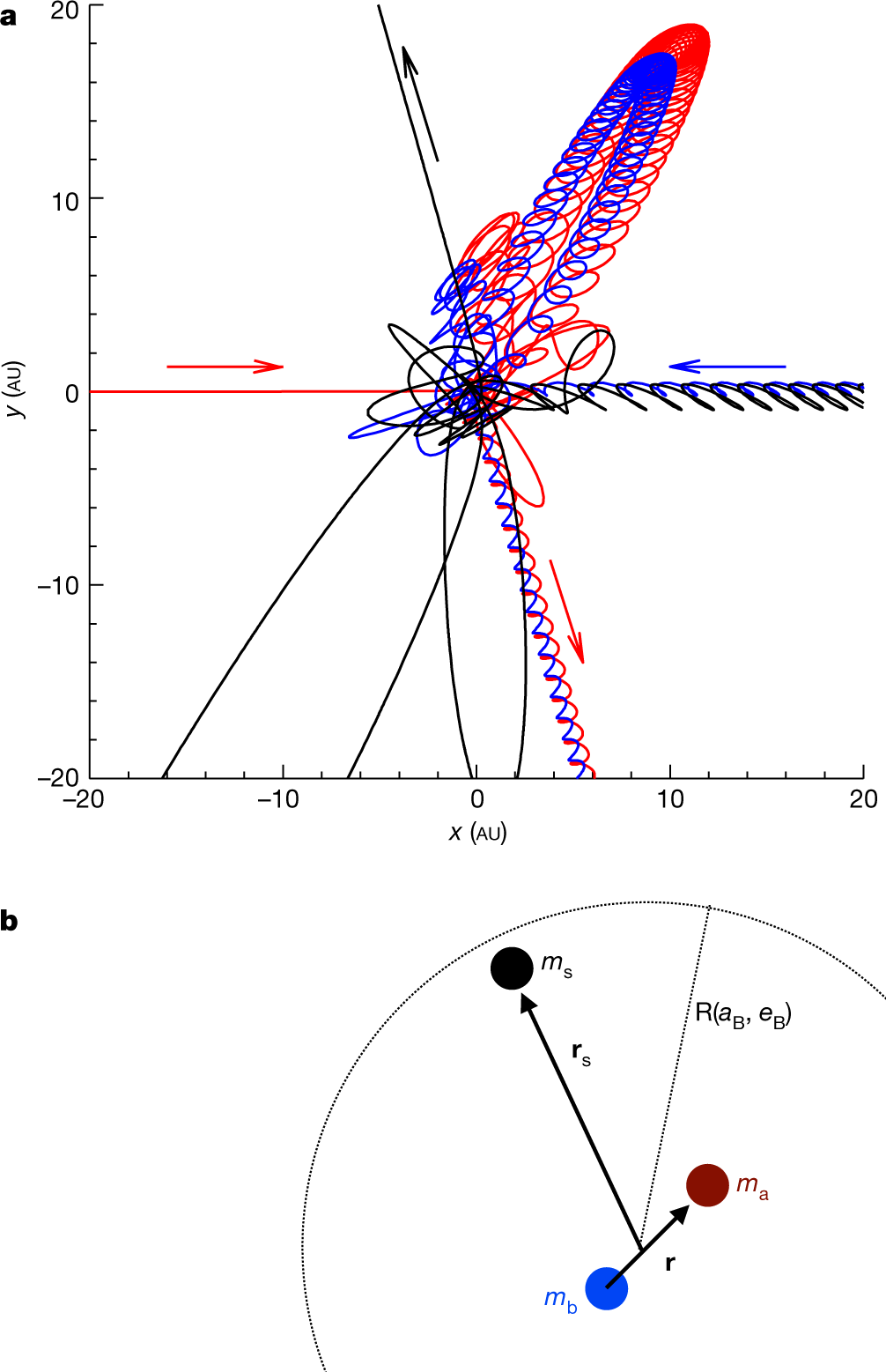

This concept of stochastic determinism appears to be a property of complex systems. There is an excellent article on the classical three‑body problem that illustrates a similar idea: A statistical solution to the chaotic, non-hierarchical three-body problem | Nature…

reasoning traces in LLMs are complicated so lets try reducing it to a geometric problem that u can then approximate with a stochastic differential equation arxiv.org/pdf/2506.04374

Stochastic calculus + ito calculus + entropy + survival analysis... and in an ensemble learning = predictive models

Friends, At least some of you follow me for my research. So please note that the latest draft of my book "Stochastic Algorithms for Nonconvex Optimization and Reinforcement Learning" is now available at: people.iith.ac.in/m_vidyasagar/R… It is about 75% complete.

Maria Bazotte (@MBazotte), one of our brilliant PhD students at the SCALE-AI Chair at @polymtl, has just been awarded the Dupačová-Prékopa Best Student Paper Prize in #StochasticProgramming at #ICSP2025!

ok so dont freak out but i'm getting nontrivial performance improvements on SFT by scaling the loss by avg probability of all tokens in dataset... represented as a scalar between 0 and 1, where 0 is a perfect prediction ...which doubles as an implicit learning rate scheduler?

it turns out you can actually bias LLM finetuning in the direction you want for a metric if you can bound it between 1 and 0, and use that as a multiplier for the cross entropy loss. implicitly, in an emergent way w/o hard penalties! so, i can make SFT better on "hard tokens"

🔍 Learn how to handle infeasible scenarios in stochastic programming using penalty terms or chance constraints. Author: Thana B. #StochasticProgramming #Optimization #MonteCarloModeling ift.tt/BFo1K5y

New paper! We cast reward fine-tuning as stochastic control. 1. We prove that a specific noise schedule *must* be used for fine-tuning. 2. We propose a novel algorithm that is significantly better than the adjoint method*. (*this is an insane claim) arxiv.org/abs/2409.08861

Plenary talk Jim Luedtke (@jim_luedtke) U. Wisconsin-Madison (@UWMadison), USA "Generator Expansion to Improve Power Grid Resiliency and Efficiency: A Case Study in Location Analysis Under Uncertainty" #StochasticProgramming #NonLinearOpt #MachineLearning

Dr Michael A H Dempster: "Bill Ziemba's legacy consists of a wide range of contributions to the literature ranging from pure mathematics and theoretical physics to engineering, economics, politics, finance and professional baseball." #StochasticProgramming #RiskManagement

Dive into the World of Multistage Stochastic Programs 📊: Ever heard of Epi-convergent Discretizations? 🔄 These cutting-edge techniques ensure convergence to optimal solutions, providing a robust framework for decision-making under uncertainty. #StochasticProgramming…

The 2nd Copenhagen PhD School of Stochastic Programming will take place during the days before EURO 2024. Guest lecturers: - @Francy_Maggioni - Asgeir Tomasgard - Alois Pichler - Michal Kaut Info and registration math.ku.dk/english/calend… #StochasticProgramming #Optimization

Something went wrong.

Something went wrong.

United States Trends

- 1. Jake Paul 285K posts

- 2. #GMMTVStarlympics2025 5.09M posts

- 3. #Scambodia N/A

- 4. #Scammer N/A

- 5. Bama 41.8K posts

- 6. jennie 303K posts

- 7. WILLIAMEST NO LIMITS 583K posts

- 8. Good Saturday 18.6K posts

- 9. PERTHSANTA DOMIIA WEWILLWIN 842K posts

- 10. Clinton 280K posts

- 11. Oklahoma 68.1K posts

- 12. #엑소_2025멜론뮤직어워드 44K posts

- 13. EXO THE MOMENT 32.1K posts

- 14. #EXO_MMA2025 45.8K posts

- 15. Dave Chappelle 4,973 posts

- 16. NAMTANFILM CHAMPION LUV 185K posts

- 17. jay park 9,610 posts

- 18. Vivek 54.6K posts

- 19. Tyson 30K posts

- 20. Mateer 12.8K posts