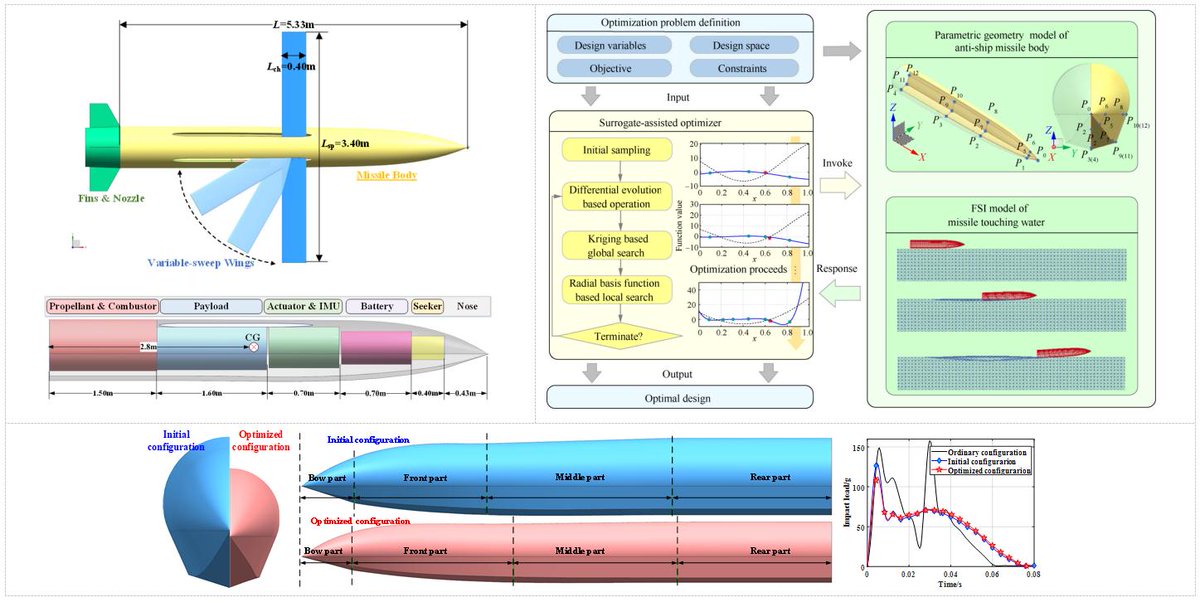

#surrogate_assisted_optimization search results

Agencies charge $150K/yr for this. This AI CMO did it in 15 mins. This AI system replaces your entire marketing team and ships campaigns in hours instead of weeks. No agencies. No freelancers. No $150K/year teams. Just complete marketing campaigns from research to…

Since compute grows faster than the web, we think the future of pre-training lies in the algorithms that will best leverage ♾ compute. We find simple recipes that improve the asymptote of compute scaling laws to be 5x more data efficient, offering better perf w/ sufficient compute.

built my own vector db from scratch with - linear scan, kd_tree, HNSW, IVF indexes just to understand things from first principles. all the way from: > recursive BST insertion with d cycling split > hyperplane splitting perpendicular to the axis at depth % d > branch and bound pruning…
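A minimal sketch of the k-d tree pieces that post lists: recursive BST-style insertion with the split axis cycling as `depth % d`, plus a nearest-neighbour search that prunes the far subtree when the splitting hyperplane is farther away than the current best match. The names `Node`, `insert`, `sq_dist`, and `nearest` are illustrative assumptions, not the author's code.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    point: Sequence[float]
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(node, point, depth=0):
    """Recursive BST insertion; the split axis cycles through the d dimensions."""
    if node is None:
        return Node(point)
    axis = depth % len(point)
    if point[axis] < node.point[axis]:
        node.left = insert(node.left, point, depth + 1)
    else:
        node.right = insert(node.right, point, depth + 1)
    return node


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node, query, depth=0, best=None):
    """Nearest neighbour of `query`, pruning subtrees that cannot contain a closer point."""
    if node is None:
        return best
    if best is None or sq_dist(query, node.point) < sq_dist(query, best):
        best = node.point
    axis = depth % len(query)
    diff = query[axis] - node.point[axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, query, depth + 1, best)
    # Visit the far side only if the axis-aligned splitting hyperplane
    # is closer to the query than the best point found so far.
    if diff ** 2 < sq_dist(query, best):
        best = nearest(far, query, depth + 1, best)
    return best
```

Comparing `nearest` against a plain linear scan over the same points is a quick way to check the pruning logic.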

Φ-SO : Physical Symbolic Optimization - Learning Physics from Data 🧠 The Physical Symbolic Optimization package uses deep reinforcement learning to discover physical laws from data. Here is Φ-SO discovering the analytical expression of a damped harmonic oscillator.

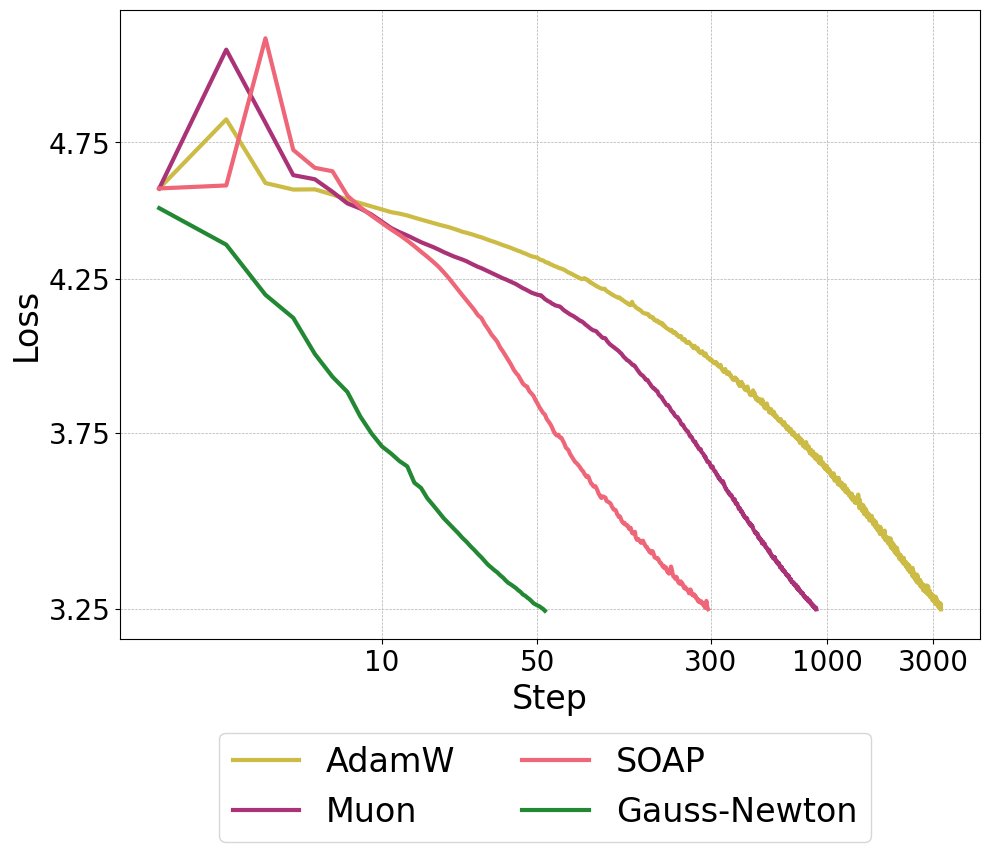

1/8 Second Order Optimizers like SOAP and Muon have shown impressive performance on LLM optimization. But are we fully utilizing the potential of second order information? New work: we show that a full second order optimizer is much better than existing optimizers in terms of…

The key to unlocking genuine physical intelligence is the **Surrogate Model**. It's how we embed the laws of nature into the machine learning loop. #AI #PIML #SurrogateModel #CFD

The Dobby Report by @SentientAGI is one of the most fascinating deep dives into AI fine-tuning I’ve read recently. ➥ It explains how Sentient created the “Dobby” family ~ models that combine hard skills (like math, logic, reasoning, instruction-following) with soft skills (like…

Nov 2 Paper 2/30: Evolution Strategies at Scale: LLM Fine-Tuning Beyond Reinforcement Learning This paper revisits Evolution Strategies (ES) as an alternative to RL for fine-tuning LLMs. Instead of exploring in action space (token-level rollouts like PPO/GRPO), ES perturbs…
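The post is truncated, so the following is only a sketch of the standard parameter-space form of Evolution Strategies, not necessarily the paper's exact recipe. It assumes a toy reward function in place of an LLM; `evolution_strategies` and its parameters (`sigma`, `lr`, `pop`, `iters`, `seed`) are hypothetical names chosen for illustration.

```python
import random
import statistics


def evolution_strategies(reward_fn, theta, sigma=0.1, lr=0.05, pop=50, iters=200, seed=0):
    """Search parameter space with Gaussian perturbations (no backprop, no action-space rollouts)."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(iters):
        # Sample one noise vector per population member.
        noises = [[rng.gauss(0.0, 1.0) for _ in theta] for _ in range(pop)]
        # Score each perturbed copy of the parameters.
        rewards = [reward_fn([t + sigma * e for t, e in zip(theta, eps)]) for eps in noises]
        mean, std = statistics.fmean(rewards), statistics.pstdev(rewards)
        if std == 0:
            continue  # all perturbations scored the same; no signal this round
        advantages = [(r - mean) / std for r in rewards]
        # Move theta along the reward-weighted average of the noise directions.
        for j in range(len(theta)):
            theta[j] += lr * sum(a * eps[j] for a, eps in zip(advantages, noises)) / pop
    return theta


# Toy usage (assumption): the reward is the negative squared distance to a target vector.
target = [1.0, -2.0, 0.5]
reward = lambda x: -sum((a - b) ** 2 for a, b in zip(x, target))
theta = evolution_strategies(reward, [0.0, 0.0, 0.0])
```

The update only needs scalar rewards for whole perturbed parameter vectors, which is the contrast with token-level exploration the post draws.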

In the age of LLMs, smart people are getting smarter, while dumb people are getting dumber.

LLMs as Optimizers

- Optimization task described in natural language

At each step:

- LLM generates new solutions w/ previous solutions & values
- New solutions evaluated
- Added to prompt

LLMs optimize their prompts & outperform human-designed prompts. arxiv.org/abs/2309.03409
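The loop in that post can be sketched roughly as follows. No real LLM is called here: `propose` is a hypothetical stand-in for the model, and `make_stub_proposer` simply perturbs the best solution so far so the example runs offline. All names are assumptions for illustration.

```python
import random


def optimize(propose, evaluate, initial, steps=20, keep=5):
    """Propose -> evaluate -> add to history; the top of the history plays the role of the prompt."""
    history = [(initial, evaluate(initial))]
    for _ in range(steps):
        # "Prompt" context: the best solutions found so far, with their values.
        context = sorted(history, key=lambda pair: pair[1], reverse=True)[:keep]
        for candidate in propose(context):
            history.append((candidate, evaluate(candidate)))
    return max(history, key=lambda pair: pair[1])


def make_stub_proposer(seed=0, n=4):
    """Hypothetical placeholder for an LLM: returns n random tweaks of the best known solution."""
    rng = random.Random(seed)

    def propose(context):
        best, _ = context[0]
        return [best + rng.uniform(-1.0, 1.0) for _ in range(n)]

    return propose


# Toy usage (assumption): maximise -(x - 3)^2 starting from x = 0.
evaluate = lambda x: -(x - 3.0) ** 2
best, score = optimize(make_stub_proposer(), evaluate, 0.0)
```

Swapping the stub for a function that formats `context` into a natural-language prompt and parses the model's reply would give the setup the post describes; that wiring is left out because it depends on the model API in use.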

We wrote 'The Annotated Diffusion Transformer'. OpenAI's Sora uses a diffusion transformer to generate video. DiTs answer the question: what if we replaced the U-net in a diffusion model with a Transformer. Link in comments.

you think you know math, try understanding TRPO math with surrogate objective, approximations.

You send a query to @SentientAGI. Everything finishes in just a few seconds. But behind the scenes, here’s what really happens: User → Agent Router → Specialized Agents → GRID → ROMA → Output Layer 🟢 1️⃣ Router: Analyzes the query and determines which agents should…

This was one of the best ML freelance projects I have ever worked on. It combines multiple feature vectors into a single large vector embedding for match-making tasks. It contains so many concepts - > YOLO > GrabCut Mask > Color histograms > SIFT ( ORB as alternate ) > Contours…

LLMs can now self-optimize. A new method allows an AI to rewrite its own prompts to achieve up to 35x greater efficiency, outperforming both Reinforcement Learning and Fine-Tuning for complex reasoning. UC Berkeley, Stanford, and Databricks introduce a new method called GEPA…

A bot can optimize a variable. AI Xovia is designed to understand the entire equation. It doesn't just ask "what if price goes up?" It asks "why would it?" and builds its strategy on the answer. $AIX $SOL

Analysis on pass@k training. This shows how advantage shaping for pass@k training relates to maximizing surrogate reward of pass@k, and how it relates to upsampling of harder samples.

I am SUPER EXCITED to publish the 130th episode of the Weaviate Podcast featuring Xiaoqiang Lin (@xiaoqiang_98), the lead author of REFRAG from Meta Superintelligence Labs! 🎙️🎉 Traditional RAG systems use vectors to retrieve relevant context, but then throw away the vectors,…

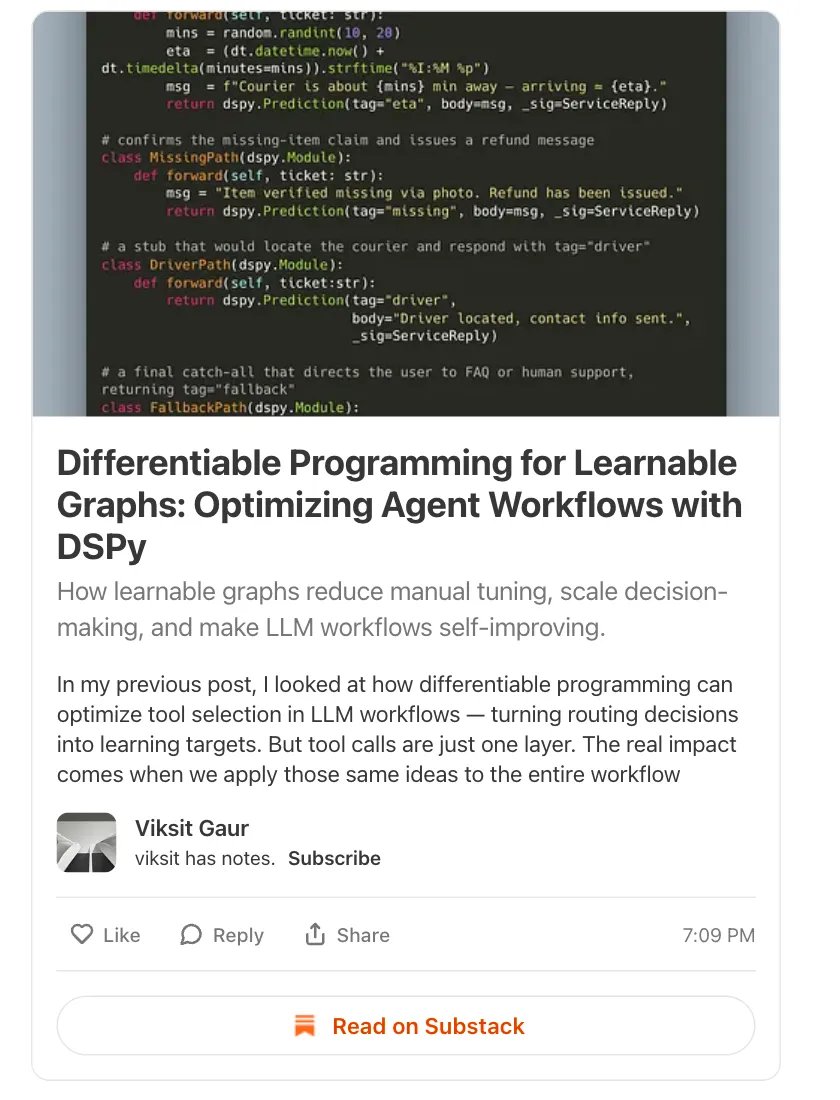

New post! Optimizing End to End Agent Workflows with @DSPyOSS optimizers: Differentiable Programming for Learnable Graphs How learnable graphs reduce manual tuning, scale decision-making, and make LLM workflows self-improving. (Link below)

Keywords: #Surrogate_assisted_optimization #Fluid_structure_interaction #Arbitrary_Lagrange_Eulerian #Missile #Conceptual_design Link: doi.org/10.1016/j.cja.…

✨CJA Highlighted Article📷: Surrogate-assisted optimization for anti-ship missile body configuration considering high-velocity water touching Link: doi.org/10.1016/j.cja.…… @BIT1940
