#traditionalml search results

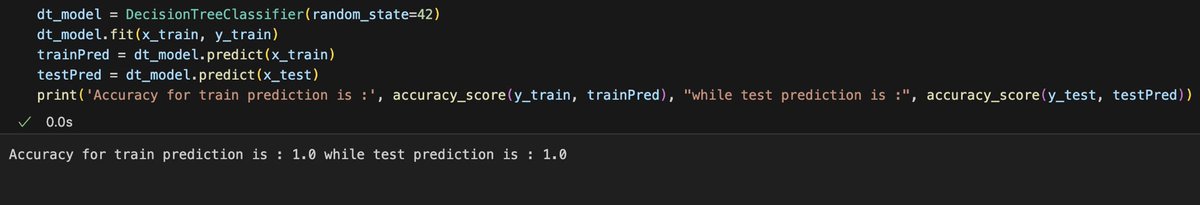

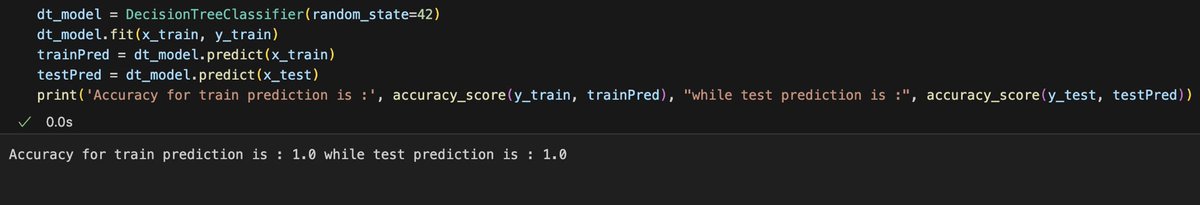

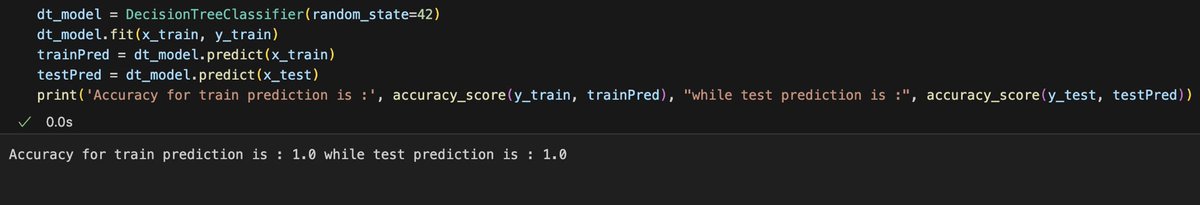

#TraditionalML 😦 100% scores come from Kaggle datasets, even with a base model, even with a model susceptible to high variance. #nodataleakage

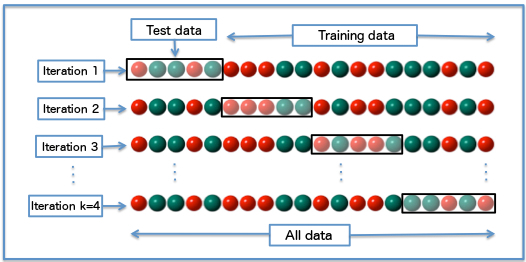

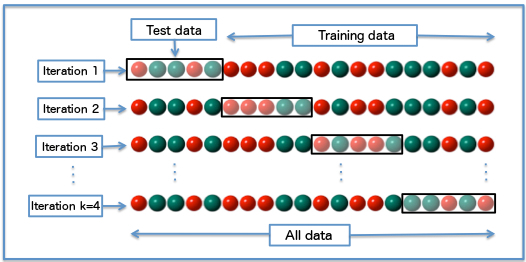

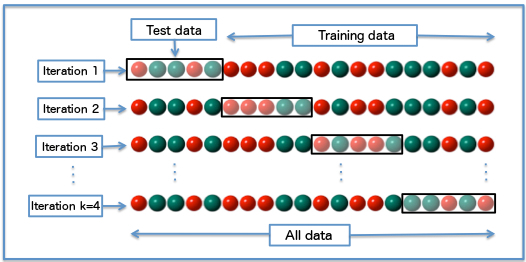

#TraditionalML It's k-fold cross-validation. Since every sample is used for training in some folds and for testing in exactly one, the score no longer depends on a single, possibly biased or skewed train/test split.
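A minimal sketch of the k-fold idea from the post above, in plain Python with no ML library. The helper name `k_fold_indices` and the sample count are hypothetical; the point is only that each index lands in exactly one test fold and in the training set of every other fold.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous, unshuffled folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


# Hypothetical example: 10 samples, 3 folds.
folds = list(k_fold_indices(10, 3))
for train_idx, test_idx in folds:
    print("train:", train_idx, "test:", test_idx)
```

In practice you would usually shuffle (or stratify) the indices before splitting; this sketch keeps them in order so the fold boundaries are easy to read.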

🚀 In #TraditionalML, online inference means predicting after a request arrives; batch inference means precomputing predictions for predictable inputs, as in rec systems. But with open-ended #FoundationModels, precomputing for every possible prompt is tough! #AIApplications 🧠✨ #BatchInference
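The batch vs. online distinction in the post above can be sketched roughly like this. Everything here is hypothetical: `model` is a stand-in for an expensive recommender, and `known_users` plays the role of the "predictable inputs" that can be precomputed.

```python
def model(user_id):
    # Hypothetical stand-in for an expensive model call.
    return [f"item-{(user_id * 7 + i) % 100}" for i in range(3)]


# Batch inference: inputs are known ahead of time, so predict before any request.
known_users = [1, 2, 3]
precomputed = {user_id: model(user_id) for user_id in known_users}


def serve(user_id):
    """Return (recommendations, mode) for a request."""
    if user_id in precomputed:
        # Cheap lookup of a prediction made earlier.
        return precomputed[user_id], "batch"
    # Online inference: run the model only after the request arrives.
    return model(user_id), "online"


print(serve(1))
print(serve(99))
```

With open-ended prompts there is no finite `known_users` list to enumerate, which is why the lookup branch rarely applies to foundation models.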

Article: “Do not sleep on traditional machine learning: Simple and interpretable techniques are competitive to deep learning for sleep scoring.” lnkd.in/g79CRfU3 #MachineLearning #TraditionalML #DataScience #DeepLearning #ArtificialIntelligence #ML #DL #Research 5/5

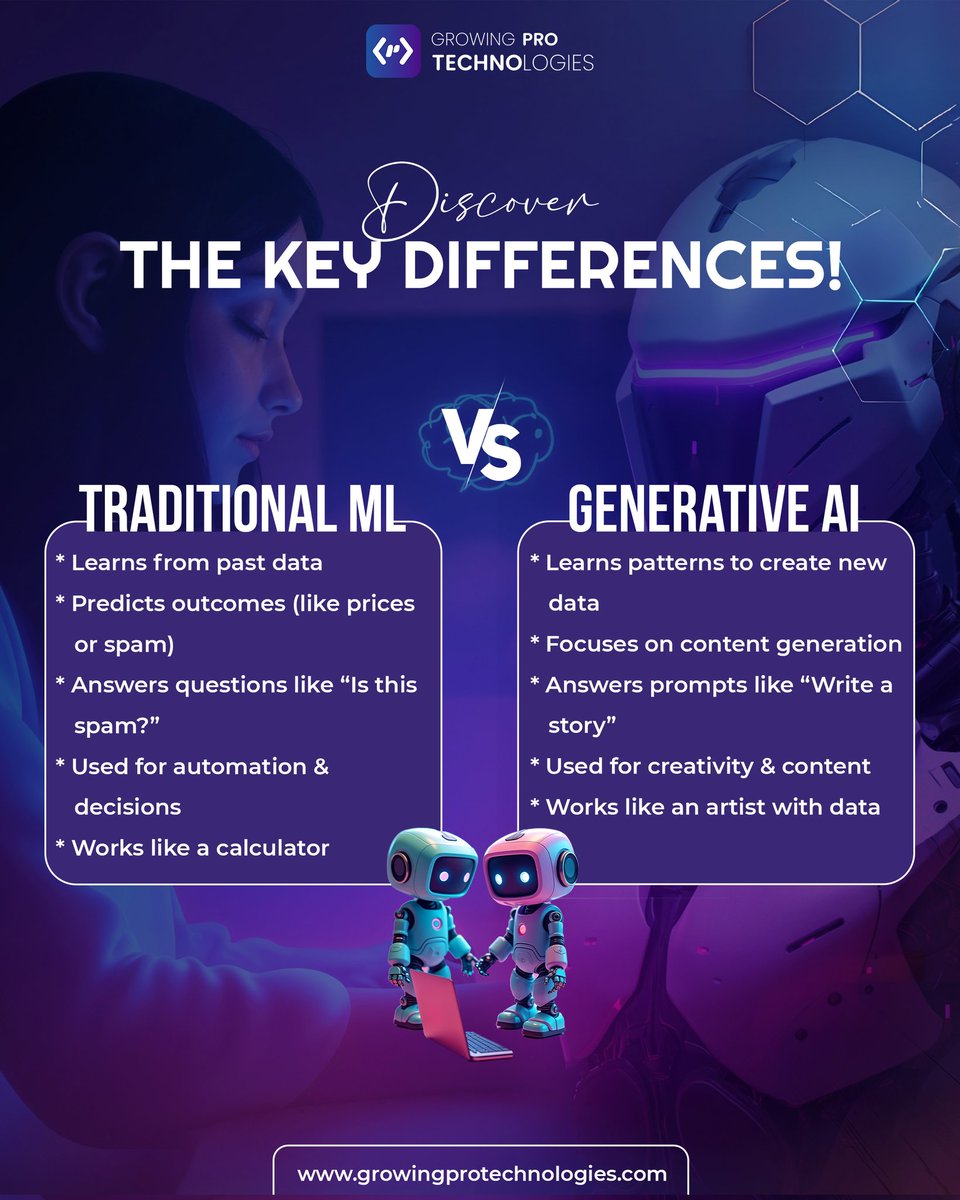

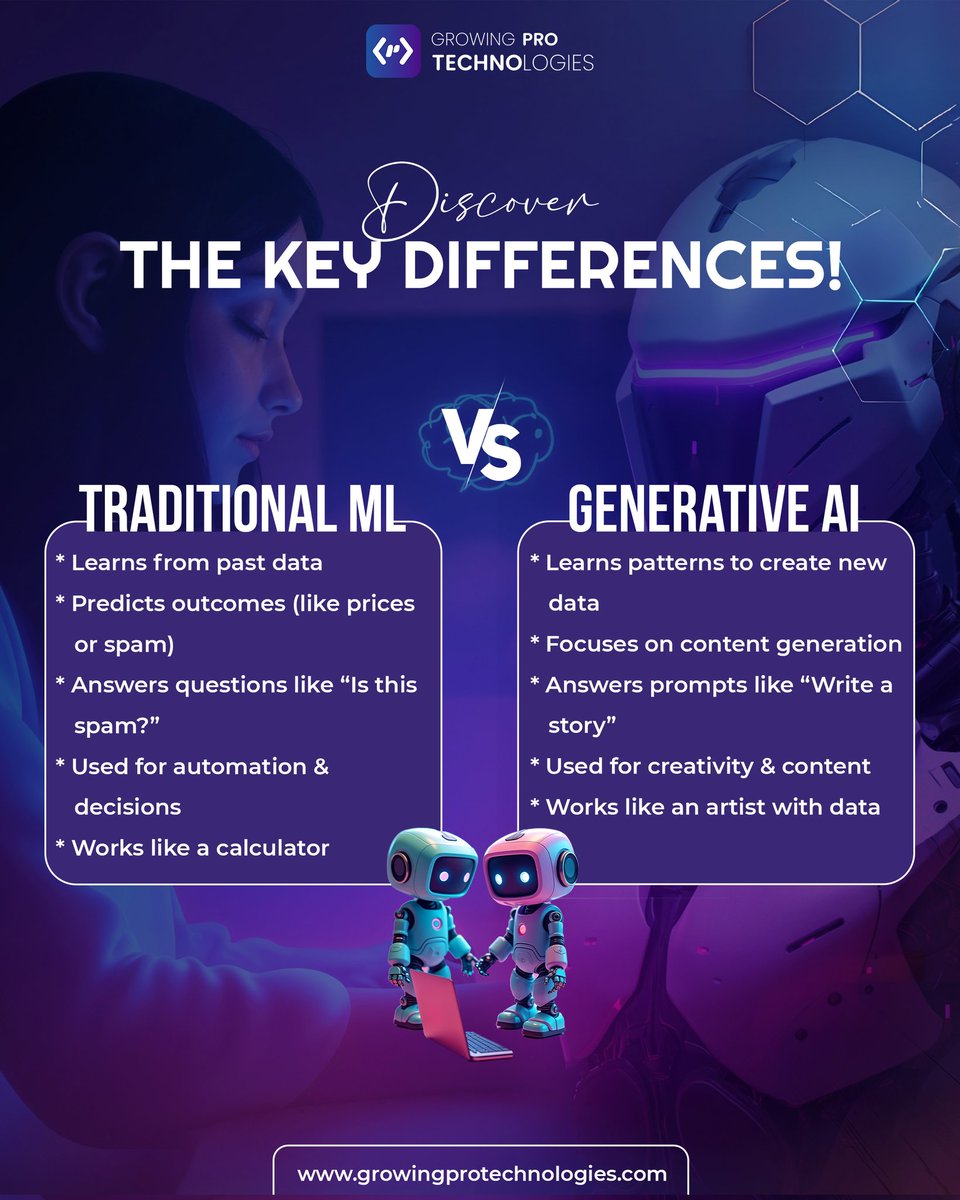

Think all AI is the same? Think again! 🤔 Read on to discover the key differences and how both can power the future of your business. #traditionalml #generativeai #aiinnovation #machinelearning #futureofai #aitechnology #artificialintelligence #techrevolution #businesssolutions

#TraditionalML Gradient Boosting + Regularization = XGBoost
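For readers who want the equation behind that shorthand, the regularized objective is commonly written as below, following the notation of the XGBoost paper. Here $l$ is the training loss, $f_k$ is the $k$-th tree, $T$ is its number of leaves, $w$ its leaf weights, and $\gamma$, $\lambda$ are the regularization strengths.

$$
\mathcal{L} = \sum_{i} l\left(y_i, \hat{y}_i\right) + \sum_{k} \Omega\left(f_k\right),
\qquad
\Omega(f) = \gamma T + \frac{1}{2} \lambda \lVert w \rVert^2
$$

Setting $\gamma = \lambda = 0$ drops the penalty term and leaves the plain gradient boosting objective.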

#TraditionalML Train the base model first before tuning model parameters, then compare performance.

#TraditionalML The rf_model's performance just improved after applying parameter tuning [grid_search].
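A rough sketch of the "baseline first, then grid search, then compare" workflow from the two posts above. To stay dependency-free it swaps the random forest for a tiny k-nearest-neighbours classifier on made-up 1-D data; the data, the parameter grid, and all function names are assumptions for illustration only.

```python
from itertools import product

# Hypothetical toy data: (feature, label). The point (0.8, 1) is deliberate noise.
train = [(0.0, 0), (0.5, 0), (1.0, 0), (1.4, 1), (2.0, 1), (2.5, 1), (3.0, 1), (0.8, 1)]
valid = [(0.2, 0), (0.9, 0), (1.1, 0), (2.2, 1), (2.8, 1)]


def knn_predict(x, k, weighted):
    """Predict a label from the k nearest training points."""
    neighbors = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = {0: 0.0, 1: 0.0}
    for fx, label in neighbors:
        # Optionally weight each vote by inverse distance.
        votes[label] += 1.0 / (abs(fx - x) + 1e-9) if weighted else 1.0
    return max(votes, key=votes.get)


def accuracy(k, weighted):
    """Fraction of validation points predicted correctly."""
    hits = sum(knn_predict(x, k, weighted) == y for x, y in valid)
    return hits / len(valid)


# Step 1: score the untuned base model.
baseline_params = (1, False)
baseline_score = accuracy(*baseline_params)

# Step 2: exhaustive grid search over a small parameter grid.
grid = list(product([1, 3, 5], [False, True]))
best_params = max(grid, key=lambda params: accuracy(*params))
best_score = accuracy(*best_params)

# Step 3: compare.
print("baseline:", baseline_params, baseline_score)
print("tuned:   ", best_params, best_score)
```

Because the baseline setting is part of the grid, the tuned score can never be lower than the baseline on this validation set. A real comparison would tune with cross-validation and report on a separate held-out test set.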

#TraditionalML DecisionTree models are ensembled into a RandomForest, which is more robust to overfitting and usually a better-performing model.

#TraditionalML At least traditional ML models are interpretable and can handle small-to-medium-sized datasets. Start with them first!

#TraditionalML Tree-based algorithms are prone to overfitting, why? Decision Trees learn deeply (memorise a lot) during training. Random Forest, as an ensemble, automatically reduces overfitting. However, Random Forest is still prone to overfitting when the data is too noisy.

Through zero-shot and few-shot methods, this technical use case unpacks #ObjectDetection and shares a Dataiku demo that can be easily reused with your own images: | bit.ly/40QwOyo | #TrainingData #TraditionalML #AdvancedDataScience

Made-up terminology in AI: "#TraditionalML", a term that has become commonplace in the last couple of years. No proper definition!! It has created so much confusion for people outside the field, like Gen AI vs ML, AI vs ML. (fyi: GenAI ⊂ ML ⊂ AI)

Humans, but Not Deep Neural Networks, Often Miss Giant Targets in Scenes #HardAi #SoftAi #TraditionalMl ow.ly/SN8Q30fH2Sg

