#vanishinggradientproblem search results

✅ Challenges: Training difficulty: deep RNNs can be hard to train due to the #vanishinggradientproblem; using LSTM or GRU units can alleviate this issue. Computation cost: more layers increase cost and training time, especially for long sequences. Code - colab.research.google.com/drive/1c4eN4cP…
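A minimal sketch of the training difficulty, assuming a toy single-unit tanh RNN with recurrence `h_t = tanh(w * h_{t-1} + u * x_t)`. The recurrence, the parameters `w` and `u`, and the helper `rnn_gradient_norms` are illustrative assumptions, not taken from the linked notebook. Backpropagation through time multiplies in one factor `w * (1 - h_t**2)` per step, so with `|w| < 1` the gradient reaching early steps shrinks geometrically:

```python
import math


def rnn_gradient_norms(w, u, xs, h0=0.0):
    """Return |dh_T/dh_t| for t = 0..T in a toy single-unit tanh RNN.

    Assumed recurrence (illustrative only): h_t = tanh(w * h_{t-1} + u * x_t).
    """
    # Forward pass: store every hidden state for the backward pass.
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + u * x))

    # Backward pass through time: dh_t/dh_{t-1} = w * (1 - h_t**2).
    grads = []
    g = 1.0  # dh_T/dh_T
    for t in range(len(xs), 0, -1):
        grads.append(abs(g))          # |dh_T/dh_t|
        g *= w * (1.0 - hs[t] ** 2)   # now dh_T/dh_{t-1}
    grads.append(abs(g))              # |dh_T/dh_0|
    return list(reversed(grads))      # index t holds |dh_T/dh_t|


grads = rnn_gradient_norms(w=0.5, u=1.0, xs=[0.1] * 50)
print(grads[-1], grads[0])  # 1.0 at the last step, below 0.5**50 at step 0
```

Gated cells such as LSTM and GRU replace this purely multiplicative path with a gated state update, which is why they are the usual remedy.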

Learn about Backpropagation deepai.org/machine-learni… #VanishingGradientProblem #Backpropagation

deepai.org

Backpropagation

Backpropagation, short for backward propagation of errors, is a widely used method for calculating derivatives inside deep feedforward neural networks.
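A minimal sketch of backpropagation on a toy network with one sigmoid hidden unit and squared-error loss. The network shape, the values, and the function names are illustrative assumptions. The backward pass applies the chain rule from the loss back to each weight, and a central finite difference checks the result:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward_backward(x, y, w1, w2):
    """Toy 1-1-1 network: h = sigmoid(w1*x), y_hat = w2*h, L = 0.5*(y_hat - y)**2."""
    # Forward pass, keeping the intermediates the backward pass needs.
    h = sigmoid(w1 * x)
    y_hat = w2 * h
    loss = 0.5 * (y_hat - y) ** 2
    # Backward pass: chain rule from the loss back to each weight.
    d_yhat = y_hat - y               # dL/dy_hat
    d_w2 = d_yhat * h                # dL/dw2
    d_h = d_yhat * w2                # dL/dh
    d_w1 = d_h * h * (1.0 - h) * x   # dL/dw1, since sigmoid'(z) = s * (1 - s)
    return loss, d_w1, d_w2


def numeric_grad(f, v, eps=1e-6):
    """Central finite difference of a scalar function f at v."""
    return (f(v + eps) - f(v - eps)) / (2 * eps)


x, y, w1, w2 = 0.7, 1.0, 0.3, -0.8
loss, g1, g2 = forward_backward(x, y, w1, w2)
num_g1 = numeric_grad(lambda a: forward_backward(x, y, a, w2)[0], w1)
num_g2 = numeric_grad(lambda b: forward_backward(x, y, w1, b)[0], w2)
print(g1, num_g1)
print(g2, num_g2)
```

Note the factor `h * (1.0 - h)`: it never exceeds 0.25, and stacking many sigmoid layers multiplies such factors together, which is one route to vanishing gradients.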

From the Machine Learning & Data Science glossary: Long Short-Term Memory deepai.org/machine-learni… #VanishingGradientProblem #LongShortTermMemory

deepai.org

Long Short-Term Memory

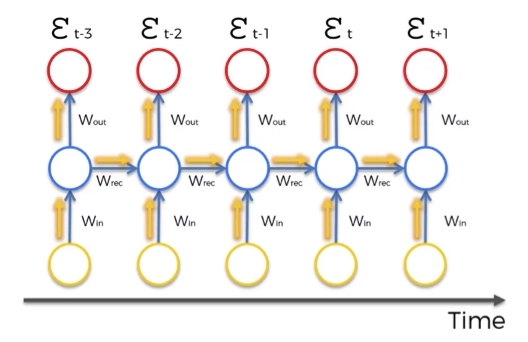

LSTMs are a form of recurrent neural network invented in the 1990s by Sepp Hochreiter and Juergen Schmidhuber, and now widely used for image, sound and time series analysis, because they help solve...
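A minimal sketch of one LSTM step, assuming a single scalar unit and the widely used variant with a forget gate. The parameter layout and values are illustrative assumptions. Along the direct cell-state path, `c_t = f_t * c_{t-1} + i_t * g_t` gives `dc_t/dc_{t-1} = f_t`, so a forget gate held near 1 lets gradient flow across many steps:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM with a forget gate.

    `p` maps gate names to illustrative (w_x, w_h, b) scalar triples.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))      # forget gate
    i = sigmoid(pre("i"))      # input gate
    o = sigmoid(pre("o"))      # output gate
    g = math.tanh(pre("g"))    # candidate cell value
    c = f * c_prev + i * g     # additive cell-state update
    h = o * math.tanh(c)
    return h, c, f


# Forget gate biased toward "keep" (illustrative values).
params = {"f": (0.0, 0.0, 5.0), "i": (0.5, 0.5, 0.0),
          "o": (0.5, 0.5, 0.0), "g": (1.0, 0.5, 0.0)}
h, c, path_grad = 0.0, 0.0, 1.0
for x in [0.1] * 50:
    h, c, f = lstm_step(x, h, c, params)
    path_grad *= f  # gradient along the direct cell-state path only
print(path_grad)
```

This tracks only the direct cell-to-cell path; gradients also flow through `h_prev` into the gates, which the sketch ignores.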

From the Machine Learning & Data Science glossary: RMSProp deepai.org/machine-learni… #VanishingGradientProblem #RMSProp
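RMSProp scales each step by a running root-mean-square of recent gradients, so a parameter whose gradients are consistently tiny still takes a step of reasonable size. A minimal sketch of the commonly cited update rule on a scalar parameter; the helper name and the defaults `lr=0.01`, `rho=0.9`, `eps=1e-8` are assumptions for illustration:

```python
import math


def rmsprop_step(theta, grad, sq_avg, lr=0.01, rho=0.9, eps=1e-8):
    """One RMSProp update for a scalar parameter; returns (theta, sq_avg)."""
    sq_avg = rho * sq_avg + (1.0 - rho) * grad * grad   # running mean of grad**2
    theta = theta - lr * grad / (math.sqrt(sq_avg) + eps)
    return theta, sq_avg


# First step from sq_avg = 0 for a tiny and a large gradient.
small_theta, _ = rmsprop_step(0.0, 1e-6, 0.0)
large_theta, _ = rmsprop_step(0.0, 1.0, 0.0)
print(-small_theta, -large_theta)  # both roughly 0.03; plain SGD would step 1e-8 vs 0.01
```

RMSProp only rescales the step; it does not change the gradient that backpropagation computes.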

DeepAI Term of the Day: Vanishing Gradient Problem deepai.org/machine-learni… #Backpropagation #VanishingGradientProblem

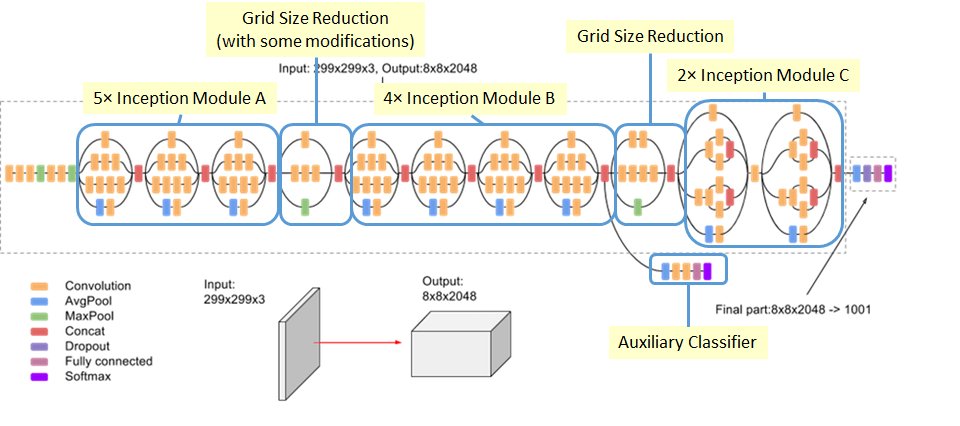

Everything you need to know about Inception Module deepai.org/machine-learni… #VanishingGradientProblem #InceptionModule

Read about ReLu deepai.org/machine-learni… #VanishingGradientProblem #ReLu

deepai.org

ReLu

ReLu is a non-linear activation function that is used in multi-layer neural networks or deep neural networks. The output of ReLu is the maximum value between zero and the input value.
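A minimal sketch of ReLU and its derivative (function names are illustrative). For positive inputs the local derivative is exactly 1, so ReLU itself contributes no shrinking factor to the chain-rule product; for negative inputs it is 0, so those units pass no gradient at all:

```python
def relu(x):
    """ReLU: the maximum of zero and the input."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU; the value at exactly x == 0 is a convention (0 here)."""
    return 1.0 if x > 0 else 0.0


print([relu(v) for v in (-2.0, 0.0, 3.5)])       # [0.0, 0.0, 3.5]
print([relu_grad(v) for v in (-2.0, 0.0, 3.5)])  # [0.0, 0.0, 1.0]
```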

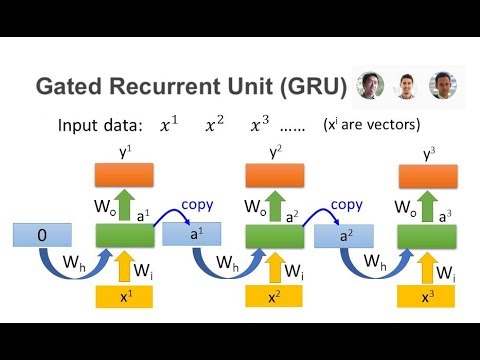

Read about Gated Recurrent Unit deepai.org/machine-learni… #VanishingGradientProblem #GatedRecurrentUnit

deepai.org

Gated Recurrent Unit

A gated recurrent unit is a gating mechanism in recurrent neural networks similar to a long short-term memory unit but without an output gate.
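A minimal sketch of one GRU step with a single scalar unit. The weight names and values are illustrative assumptions, and papers and libraries differ on whether `z` weights the old state or the candidate; this sketch uses `h_t = (1 - z) * h_{t-1} + z * h_tilde`. The update gate interpolates between the old state and a candidate, and the result is emitted directly because there is no output gate:

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_step(x, h_prev, p):
    """One step of a single-unit GRU; `p` holds illustrative scalar weights."""
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])   # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])   # reset gate
    h_tilde = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])  # candidate
    # Interpolate between old state and candidate; no separate output gate.
    return (1.0 - z) * h_prev + z * h_tilde


# Update gate biased toward "keep the old state" (illustrative values).
params = dict(wz=0.0, uz=0.0, bz=-5.0, wr=0.0, ur=0.0, br=0.0,
              wh=0.0, uh=0.0, bh=0.0)
h = 1.0
for _ in range(10):
    h = gru_step(0.0, h, params)
print(h)  # most of the initial state survives 10 steps
```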

From the Machine Learning & Data Science glossary: Gated Neural Network deepai.org/machine-learni… #VanishingGradientProblem #GatedNeuralNetwork

Learn about Activation Level deepai.org/machine-learni… #VanishingGradientProblem #ActivationLevel

deepai.org

Activation Level

The activation level of an artificial neural network node is the output generated by the activation function, or directly given by a human trainer.
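For a node computed by the network (rather than an input set by a trainer), the activation level is the activation function applied to the node's weighted input. A minimal sketch, assuming a weighted sum plus bias and `tanh` as the default activation (both illustrative choices, as is the helper name):

```python
import math


def activation_level(inputs, weights, bias, activation=math.tanh):
    """Activation level of one node: activation(weighted sum of inputs + bias)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


print(activation_level([1.0, 2.0], [0.5, -0.25], 0.0))   # tanh(0.0) = 0.0
print(activation_level([1.0, 2.0], [0.5, 0.25], -0.5,
                       activation=lambda v: max(0.0, v)))  # ReLU(0.5) = 0.5
```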

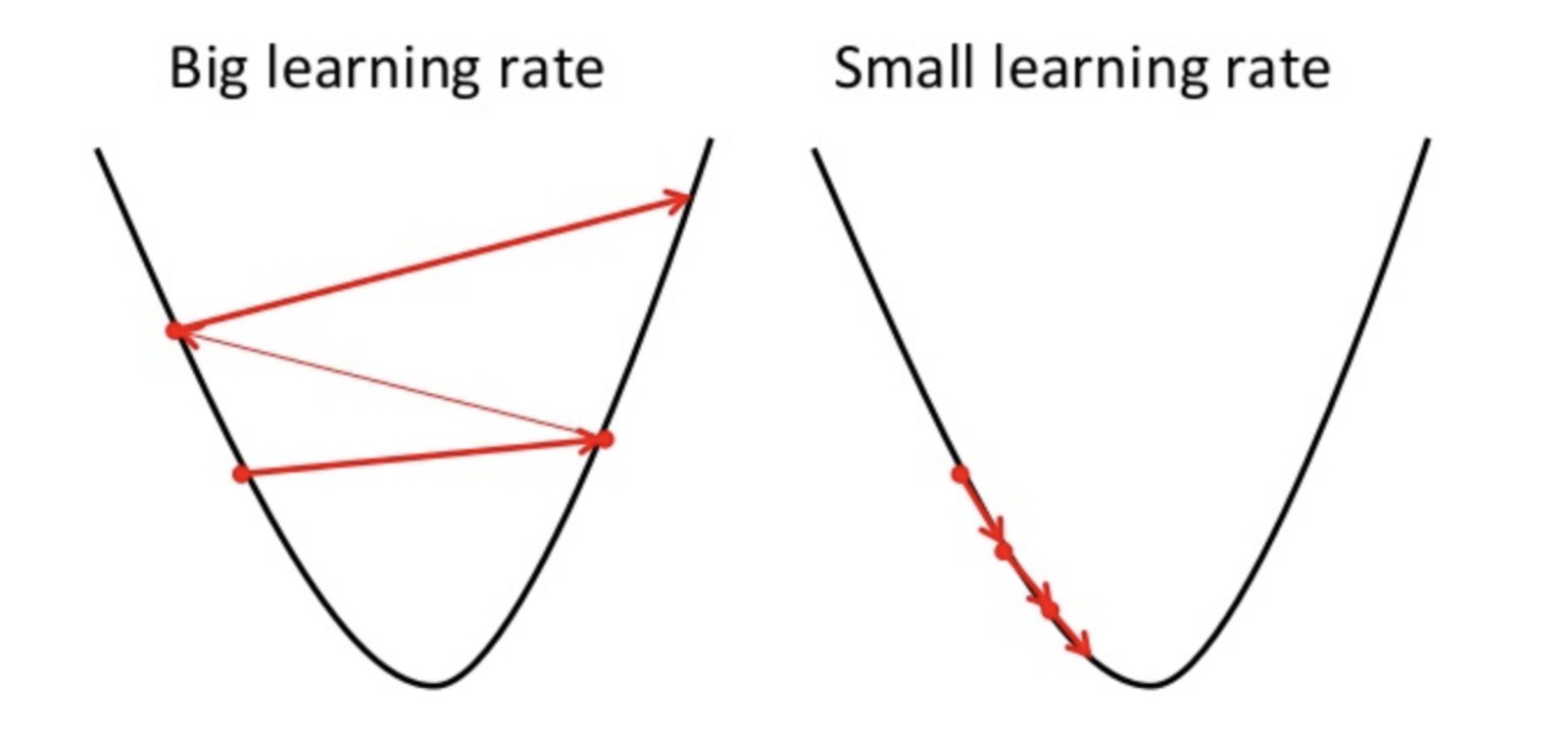

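The Vanishing Gradient Problem in AI refers to the issue where the gradients of the loss function with respect to a network's earlier weights become vanishingly small as they are propagated backward through many layers (or time steps), slowing or stalling learning in those layers. #VanishingGradientProblem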
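A minimal sketch of the effect in a deep stack, under a deliberately simplified assumption: unit weights and every layer at the same pre-activation, so the gradient reaching the first layer is just the product of the local activation derivatives. The sigmoid derivative peaks at 0.25, so the product falls off at least as fast as `0.25**depth`, while ReLU on a positive input contributes a factor of 1 (helper names are illustrative):

```python
import math


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # maximum value 0.25, reached at z == 0


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


def chain_gradient(grad_fn, z, depth):
    """Product of `depth` identical local derivatives (unit weights assumed)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(z)
    return g


for depth in (1, 5, 10, 20):
    print(depth, chain_gradient(sigmoid_grad, 0.0, depth),
          chain_gradient(relu_grad, 1.0, depth))
```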

Level up your data science vocabulary: Activation Function deepai.org/machine-learni… #VanishingGradientProblem #ActivationFunction

deepai.org

Activation Function

An activation function sets the output behavior of each node, or “neuron” in an artificial neural network.
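Whether an activation function feeds the vanishing gradient problem depends on its derivative, because backpropagation multiplies in one such derivative per layer. With $\sigma(z) = 1/(1 + e^{-z})$, the standard derivatives of three common choices are:

$$
\sigma'(z) = \sigma(z)\bigl(1 - \sigma(z)\bigr) \le \tfrac{1}{4}, \qquad
\tanh'(z) = 1 - \tanh^{2}(z) \le 1, \qquad
\operatorname{ReLU}'(z) = \begin{cases} 1 & z > 0 \\ 0 & z < 0 \end{cases}
$$

For a toy scalar chain $a_k = f(w_k a_{k-1})$, $k = 1, \dots, n$ (an illustrative assumption), the chain rule gives

$$
\left|\frac{\partial a_n}{\partial a_0}\right| = \prod_{k=1}^{n} |w_k|\,\bigl|f'(w_k a_{k-1})\bigr| \le \left(\tfrac{1}{4}\right)^{n} \prod_{k=1}^{n} |w_k| \quad \text{for } f = \sigma,
$$

so unless the weights are large, sigmoid layers shrink the gradient geometrically with depth.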

Who said learning about machine learning had to be boring? 🤓 Check out the vanishing gradient problem and get ready to be amazed! 🤩 #VanishingGradientProblem deepai.org/machine-learni… 🤓🤩

DeepAI Term of the Day: Vanishing Gradient Problem deepai.org/machine-learni… #ActivationFunction #VanishingGradientProblem

DeepAI Term of the Day: Vanishing Gradient Problem deepai.org/machine-learni… #MachineLearning #VanishingGradientProblem