#algorithmic_aesthetics search results

Awesome ML development visual storytelling, love it! youtu.be/RNnKtNrsrmg #algorithmic_aesthetics #ml #visual_analytics

The machine comes to life. I created this AI video using Kaiber + stills from a TV show. Any guesses which one? It's wild how fast the community is moving. A few months ago you'd need to wrangle a disco diffusion colab to pull this off, and now it's a couple of clicks and bam:

HAWP - Line Detection (ONNX) Even though it is a bit old, I want to use it as an initial step for another model I am working on. [Code] github.com/ibaiGorordo/ON… [Video] youtu.be/AKdwQwBCaTk [HAWP] github.com/cherubicXN/hawp

LERF: Language Embedded Radiance Fields TL;DR: Grounding CLIP vectors volumetrically inside a NeRF allows flexible natural language queries in 3D abs: arxiv.org/abs/2303.09553 project page: lerf.io

Images can be ambiguous, so let's cast traditional point-representations to distributions, w/o requiring additional architecture. 👇 A Non-isotropic Probabilistic Take on Deep Metric Learning arxiv.org/abs/2207.03784 #ECCV2022 with @confusezius on #contrastive #MachineLearning

Excellent new fine-grained tracking from DeepMind: TAPIR: Tracking Any Point with per-frame Initialization and temporal Refinement arxiv: arxiv.org/abs/2306.08637 project: deepmind-tapir.github.io tldr: TapNet for localization then PIPs-style refinement; outperforms everything!

Check out our #CVPR2023 paper Recurrent Vision Transformers for Object Detection with #eventcameras! We achieve sota performance (47.2% mAP) while reducing inference time by 6x (<12ms) & improving parameter efficiency 5x! Paper, Code, Video: github.com/uzh-rpg/RVT @MathiasGehrig

OmniMotion: A game-changing method for dense, long-range motion estimation in videos. It ensures global consistency, tracks occlusions, and accurately estimates motion for all pixels. Outperforming existing techniques, OmniMotion revolutionizes video analysis.

A look at each joint visualized from #ARKit hand tracking from the #WWDC Session "Meet ARKit for spatial computing" developer.apple.com/videos/play/ww…

The machine comes to life. I created this AI video using Kaiber + stills from a TV show. Any guesses which one? It's wild how fast the community is moving. A few months ago you'd need to wrangle a disco diffusion colab to pull this off, and now it's a couple of clicks and bam:

Today we’re sharing details on Meta Lattice, a new model architecture that improves Meta’s ads systems performance and efficiency. More on this new work ➡️ bit.ly/3W07lkO Three ways that this new work is enhancing our ads system 🧵

YOLO NAS is a phenomenon. A real-time object detector with <5 millisecond latency. This will eventually be integrated into mobile cameras, and have “click to buy this item” functionality. Would not be surprised to see Apple integrate this soon.

To make neural networks as modular as brains, We propose brain-inspired modular training, resulting in modular and interpretable networks! The ability to directly see modules with naked eyes can facilitate mechanistic interpretability. It’s nice to see how a “brain” grows in NN!

Announced by Mark Zuckerberg this morning — today we're releasing DINOv2, the first method for training computer vision models that uses self-supervised learning to achieve results matching or exceeding industry standards. More on this new work ➡️ bit.ly/3GQnIKf

This is insane. We can now detect feelings in real-time through facial expressions using AI. (Open-sourced code in the next tweet)

Meta coming in hot with SAM Segment Anything Model (SAM) is a promptable segmentation system. It can "cut out" any object, in any image, with a single click. Masks can be tracked in videos, enable image editing apps, and even be lifted to 3D 🧵Quick tour and test

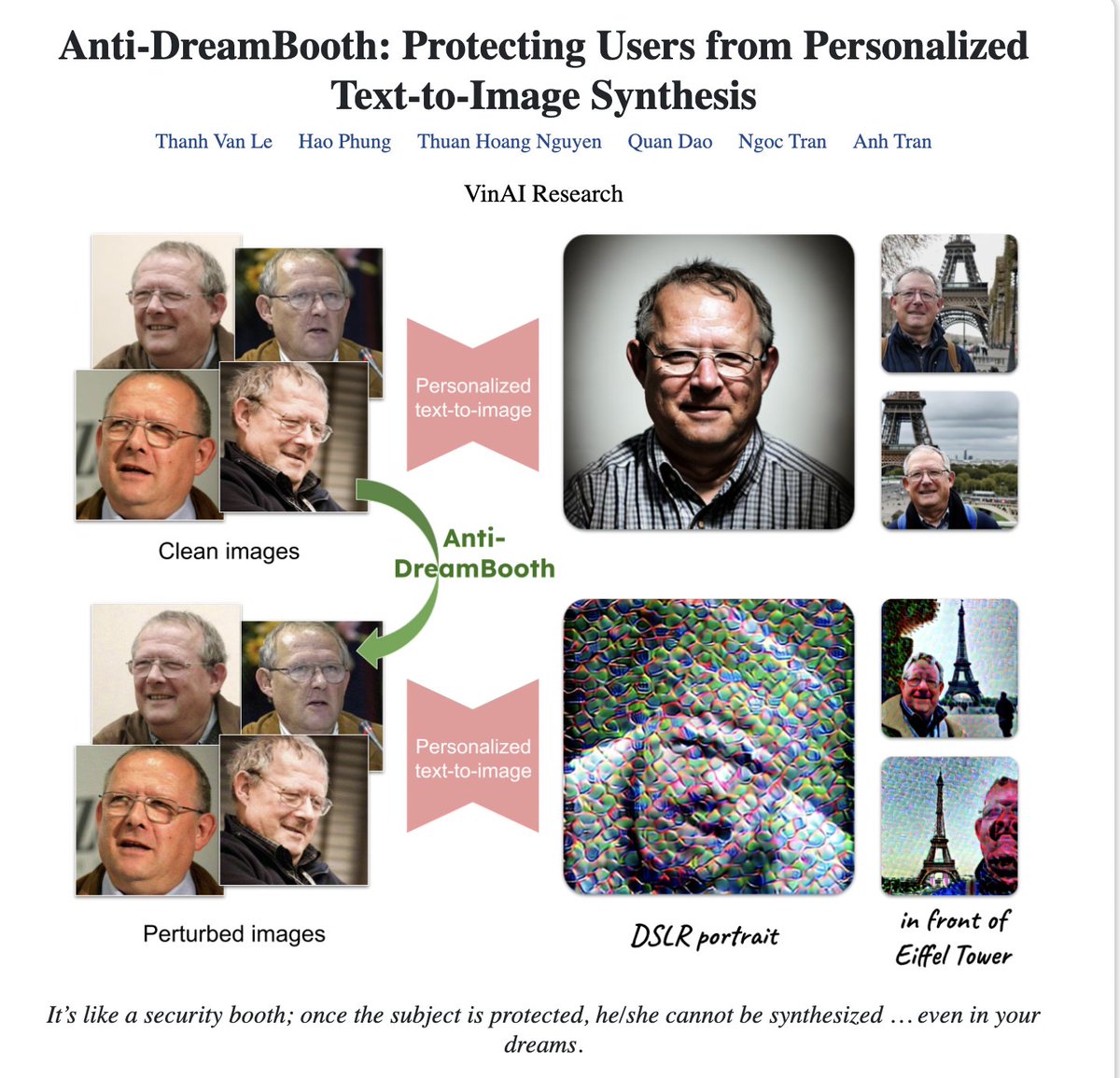

Anti-DreamBooth: Protecting users from personalized text-to-image synthesis abs: arxiv.org/abs/2303.15433 project page: anti-dreambooth.github.io github: github.com/VinAIResearch/…

Real-world cloud applications are using #opensource #computervision. CV-CUDA Beta announced at #GTC23 optimizes higher cloud throughput at a quarter of the cost and energy. Read how @Microsoft, @TencentGlobal, @Baidu, and @runwayml are adopting CV-CUDA: ➡️ nvda.ws/3neeiS3

(1/2) Check out Mask3D, a new pre-training method that embeds 3D priors into ViT backbones. Mask3D formulates a pretext reconstruction task by masking RGB and depth patches in individual RGB-D frames. arxiv.org/abs/2302.14746 youtube.com/watch?v=s0ITbs… Great work by @sekunde_ #CVPR

LERF: Language Embedded Radiance Fields TL;DR: Grounding CLIP vectors volumetrically inside a NeRF allows flexible natural language queries in 3D abs: arxiv.org/abs/2303.09553 project page: lerf.io

Just created a new Figure for a survey paper, I am quite satisfied.

NeRF update: Dollyzoom is now possible using @LumaLabsAI I shot this on my phone. NeRF is gonna empower so many people to get cinematic level shots Tutorial below - - #NeRF #NeuralRadianceFields #artificialintelligence #LumaAI

Something went wrong.

Something went wrong.

United States Trends

- 1. #River 5,860 posts

- 2. Jokic 28.4K posts

- 3. Rejoice in the Lord 1,296 posts

- 4. Good Thursday 19K posts

- 5. Lakers 52.5K posts

- 6. Namjoon 74.3K posts

- 7. FELIX VOGUE COVER STAR 10.2K posts

- 8. #FELIXxVOGUEKOREA 10.6K posts

- 9. #FELIXxLouisVuitton 9,755 posts

- 10. #ReasonableDoubtHulu N/A

- 11. #AEWDynamite 51.9K posts

- 12. Simon Nemec 2,413 posts

- 13. Mikey 66.7K posts

- 14. Clippers 15.4K posts

- 15. New Zealand 14.8K posts

- 16. Shai 16.6K posts

- 17. Thunder 39.9K posts

- 18. Visi 7,902 posts

- 19. Rory 8,476 posts

- 20. Ty Lue 1,303 posts