#batchlearning search results

Batch Learning vs Online Learning ⚡ 𝐋𝐞𝐚𝐫𝐧 𝐌𝐨𝐫𝐞 👉 futureskillsacademy.com/blog/batch-vs-… #MachineLearning #AI #BatchLearning #OnlineLearning #ArtificialIntelligence #DataScience #MLModels #AIForBusiness #FutureOfWork #TechTrends #AIInnovation #ModelTraining #DataDriven #MLTechniques

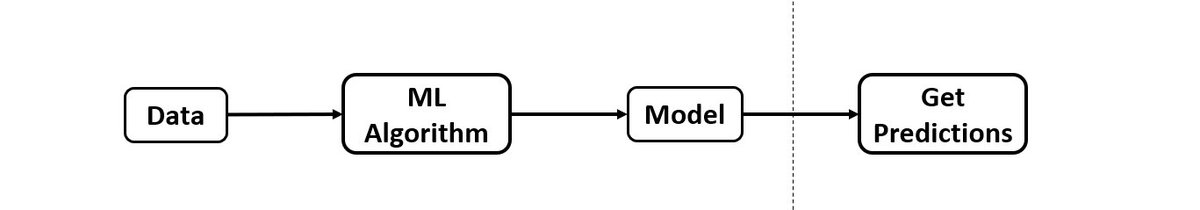

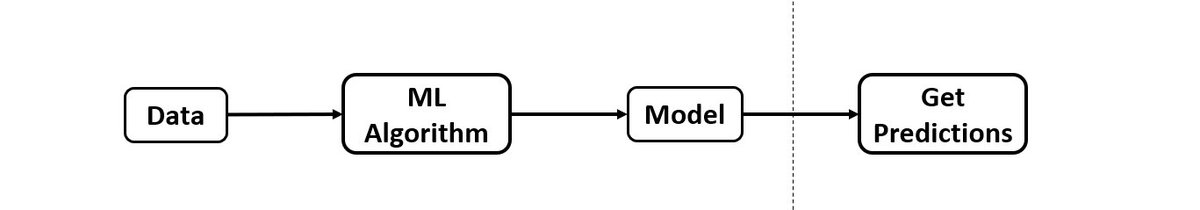

📝#Batchlearning is also called offline learning. Models trained with batch (offline) learning are moved into production only at regular intervals, based on the performance of models trained with new data.

#Batchlearning means training #models at regular intervals, such as weekly, bi-weekly, monthly, or quarterly. In batch learning, the system is not capable of #learning incrementally: the models must be retrained on all the available #data every single time.
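To make the batch/online contrast concrete, here is a minimal sketch, assuming a 1-D least-squares line `y = a + b*x` stands in for "the model" (the posts do not name one). `fit_batch` retrains from scratch on all data, as the posts describe for batch learning; the hypothetical `OnlineLinearFit` instead keeps running sums and absorbs each new sample in O(1), which is one simple way to learn incrementally.

```python
"""Batch (offline) vs online (incremental) least-squares fit of y = a + b*x.

Assumption: a 1-D linear model is used for illustration only.
"""


def fit_batch(xs, ys):
    """Batch learning: refit from scratch on ALL available data every time."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


class OnlineLinearFit:
    """Online learning: keep running sufficient statistics, update per sample.

    Note: raw running sums can lose precision on large or ill-conditioned data;
    Welford-style centered updates are more robust in practice.
    """

    def __init__(self):
        self.n = 0
        self.sx = self.sy = self.sxx = self.sxy = 0.0

    def update(self, x, y):
        # O(1) work per new sample; earlier samples are never revisited.
        self.n += 1
        self.sx += x
        self.sy += y
        self.sxx += x * x
        self.sxy += x * y

    def params(self):
        denom = self.n * self.sxx - self.sx ** 2
        b = (self.n * self.sxy - self.sx * self.sy) / denom
        a = (self.sy - b * self.sx) / self.n
        return a, b


if __name__ == "__main__":
    history = [(0, 1.0), (1, 3.0), (2, 5.0)]  # points on y = 1 + 2x
    online = OnlineLinearFit()
    for x, y in history:
        online.update(x, y)
    # A new sample arrives.
    history.append((3, 7.0))
    online.update(3, 7.0)
    print(fit_batch(*zip(*history)))  # batch: retrain on all 4 samples -> (1.0, 2.0)
    print(online.params())            # online: one O(1) update -> (1.0, 2.0)
```

Both reach the same line here; the difference is cost. The batch learner touches every stored sample on each retrain, while the online learner never needs the old samples again.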

Batch learning👻 The system is incapable of learning incrementally. It must be trained using all available data. #offlinelearning #batchlearning

Batch vs Online Learning in Machine Learning 🤖 𝐋𝐞𝐚𝐫𝐧 𝐌𝐨𝐫𝐞 👉 futureskillsacademy.com/blog/batch-vs-… #MachineLearning #OnlineLearning #BatchLearning #AITraining #MLModels #DataScience #AIforBeginners #FutureSkills #AIProfessionals #MLStrategy #ArtificialIntelligence

🚀 Ready to initiate your inaugural batch session? Explore numerous advantages at no cost for schools and institutions! 📚✨ #AccessibleEducation #BatchLearning #NoCostLearning #EducationalInstitutions #ExploreAdvantages #cosmosiq #schools #institutions

Batch Learning vs. Online Learning: A Comparative Guide 🧠 𝐋𝐞𝐚𝐫𝐧 𝐌𝐨𝐫𝐞 👉 buff.ly/4jDqCUL #MachineLearning #AI #BatchLearning #OnlineLearning #MLModels #AIApplications #TechInnovation #DataScience #MLTraining #TechCareers #Automation #AIResearch #FutureTech

Batch Learning vs Online Learning – What’s the Real Difference? 🤖 𝐋𝐞𝐚𝐫𝐧 𝐌𝐨𝐫𝐞 👉 futureskillsacademy.com/blog/batch-vs-… #MachineLearning #OnlineLearning #BatchLearning #AITraining #DataScience #MLOps #AIForBusiness #AICertification #UpSkillAI

Find out here! 👇 medium.com/@bharataameriy… #ML #AI #BatchLearning #OnlineLearning #100DaysOfCode #DataScience

medium.com

Batch Learning vs. Online Learning in Machine Learning

In machine learning, how models learn from data significantly impacts their efficiency and performance. Two primary learning paradigms are…

My kid wanted to read that one over and over. #batchlearning

Do you know what #BatchLearning, #OnlineLearning, #InstanceBasedLearning and #ModelBasedLearning mean? Read the full article here 👉nomidl.com/machine-learni… Start learning today! #MachineLearning #DeepLearning #Nomidl

Batching → teaching many examples at once to fully utilize the GPU. Parallelism (Data, Tensor, Pipeline) → multiple GPUs working like a team, dividing the workload. This is why we need GPUs, TPUs, and clusters.
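Of the three parallelism styles listed, data parallelism is the easiest to show in a few lines. This is a sketch, assuming a scalar model `y_hat = w * x` with mean-squared-error loss, and plain Python loops standing in for GPUs (no real multi-device API is used). Each "worker" computes a gradient on its shard, and a size-weighted average (the role an all-reduce plays on real hardware) recovers the full-batch gradient. Tensor and pipeline parallelism are not covered here.

```python
"""Data-parallelism sketch: shard one batch across workers, then average gradients.

Assumptions: scalar model y_hat = w * x, MSE loss, and Python loops as "workers".
"""


def mse_grad(w, batch):
    """Gradient of mean((w*x - y)^2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def split(batch, num_workers):
    """Shard the batch round-robin so each worker gets a slice."""
    return [batch[i::num_workers] for i in range(num_workers)]


def data_parallel_grad(w, batch, num_workers):
    shards = [s for s in split(batch, num_workers) if s]
    # Each worker computes a local gradient on its own shard
    # (these would run concurrently on separate devices).
    local = [(mse_grad(w, s), len(s)) for s in shards]
    # Weight by shard size so uneven shards still reproduce the full-batch gradient.
    return sum(g * n for g, n in local) / sum(n for _, n in local)


if __name__ == "__main__":
    batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
    print(mse_grad(1.5, batch))               # one device, whole batch
    print(data_parallel_grad(1.5, batch, 2))  # two "workers", same value up to rounding
```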

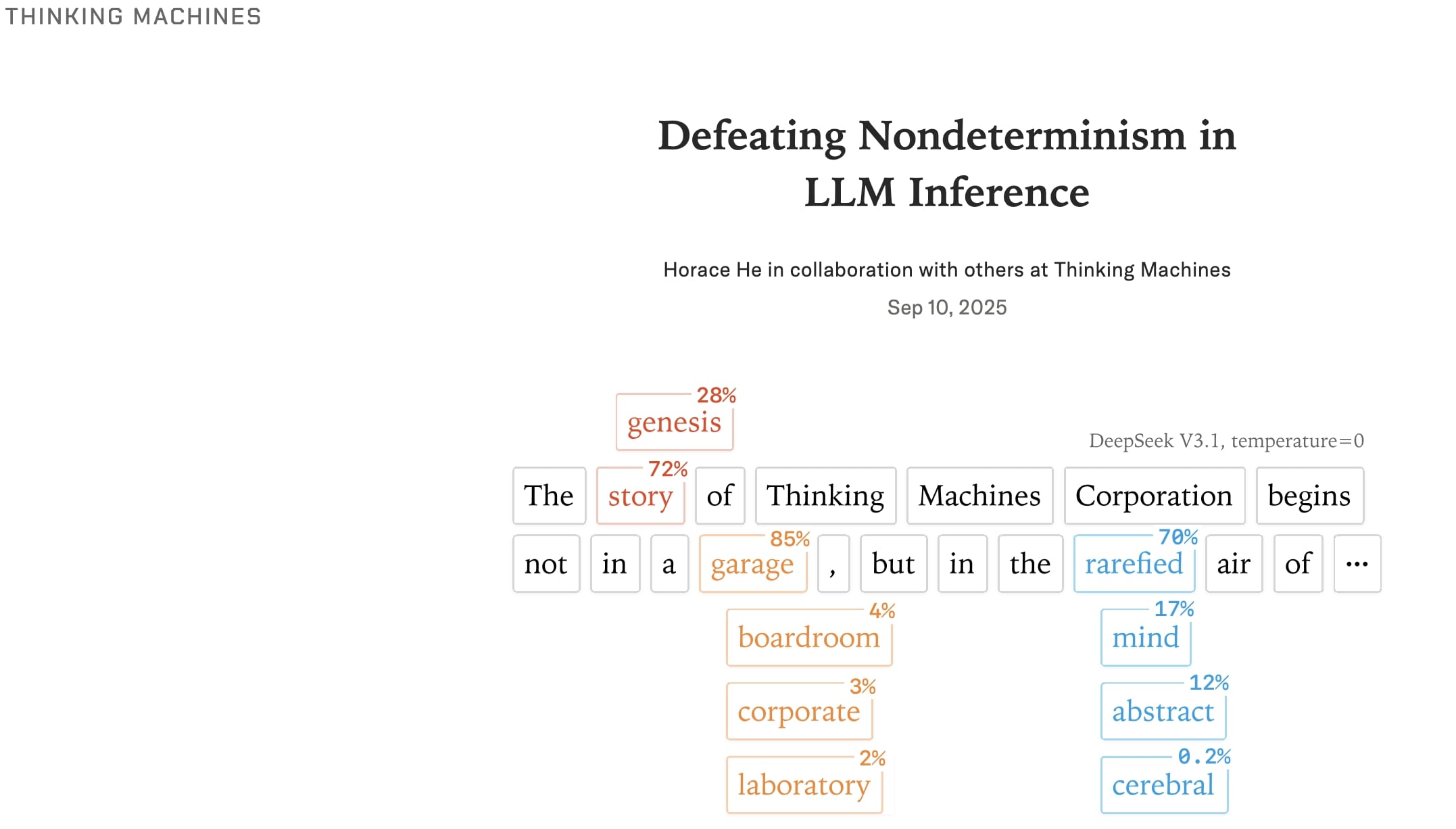

Ever wondered why LLMs vary even at temperature 0? It’s not sampling—it's lack of batch invariance. We built batch-invariant RMSNorm, Matmul, and Attention kernels, boosting reproducibility from ~80 unique outputs to 1000 identical.

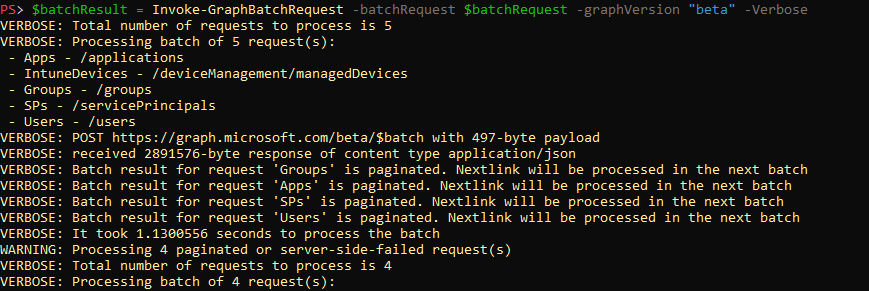

I have added batch processing for all tools.

Whenever I open my analytics, I always find these two countries. The interesting part is that I am not getting any clicks on Google. This is confusing and amazing at the same time.

Building Machine Learning Systems with a Feature Store: Batch, Real-Time, and LLM Systems clcoding.com/2025/11/buildi…

Defeating Non-Determinism in LLMs via Batch Invariance in Inference Kernels nextbigfuture.com/2025/11/defeat…

Select: Epistemic Neural Networks + EMAX pick the optimal small batch to make, explicitly accounting for model uncertainty. Retrospective tests: ~3× time & cost savings

Relaunching BatchPro today. Spent the last few months rebuilding it to accomplish one simple task: be your own personal AI Analyst for every @ycombinator batch. Looking forward to hearing feedback! Link is below.

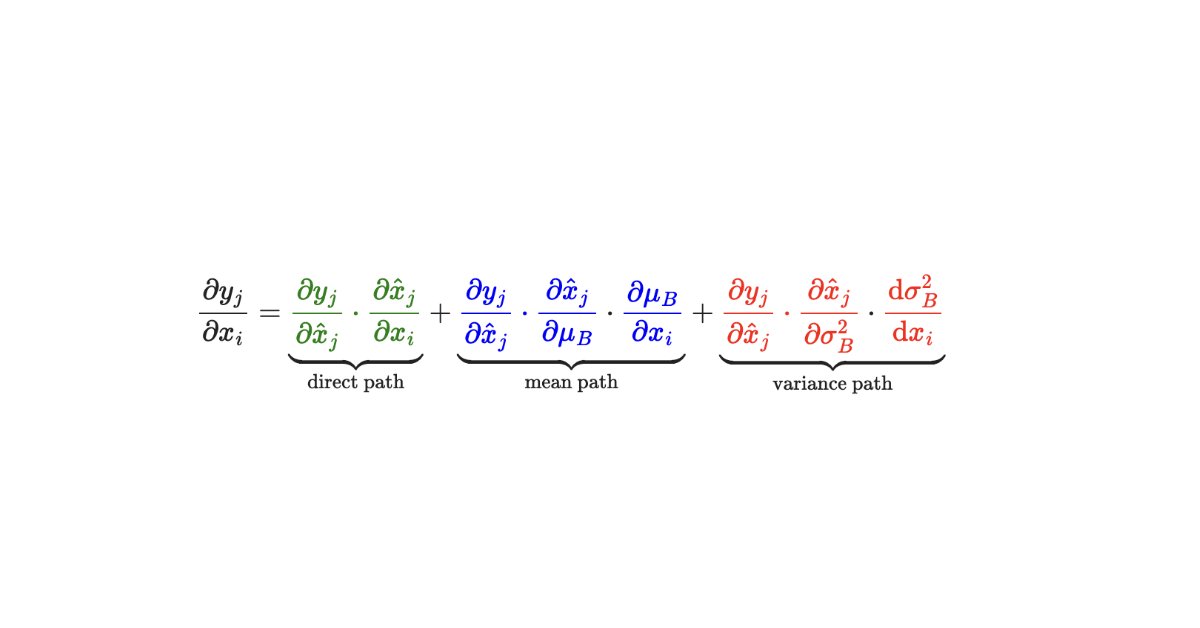

An Analytical Approach to Batch Normalization Gradients namrapatel.github.io/blog/batch-nor…

Practical takeaways:

Don't:
- Train attention-only
- Use FullFT learning rates
- Assume higher rank = better

Do:
- Train all layers (MLP + attention)
- 10x your learning rate
- Start with rank 256
- Keep batch sizes under 256
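The takeaways do not spell out what "rank" costs. A small arithmetic sketch, assuming "rank" means LoRA rank `r`: a frozen weight `W` of shape `d_out x d_in` gets a trainable update `B @ A` with `B: d_out x r` and `A: r x d_in`, so the adapter adds `r * (d_in + d_out)` parameters. The hidden size 4096 below is a hypothetical example, not a figure from the post.

```python
"""Trainable-parameter arithmetic for a LoRA adapter on one weight matrix.

Assumption: "rank" refers to LoRA rank r with update B @ A,
B: d_out x r, A: r x d_in (W itself stays frozen).
"""


def lora_params(d_in, d_out, r):
    # A contributes r * d_in, B contributes d_out * r.
    return r * (d_in + d_out)


def full_params(d_in, d_out):
    return d_in * d_out


if __name__ == "__main__":
    d = 4096  # hypothetical hidden size for a square projection
    for r in (16, 64, 256):
        frac = lora_params(d, d, r) / full_params(d, d)
        print(f"rank {r:>3}: {lora_params(d, d, r):,} trainable params ({frac:.2%} of full)")
```

Even at rank 256, the adapter for a 4096 x 4096 projection is one eighth the size of the full matrix, which is part of why training all layers (MLP + attention) with adapters stays affordable.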

6/ The Approach: Use batch-invariant kernels that enforce a fixed reduction order regardless of batch size. This ensures consistency but may reduce efficiency slightly (e.g., 1.6-2x slowdown in tests on a single GPU with Qwen3-235B), as it limits adaptive load balancing.
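Why does reduction order matter at all? Floating-point addition is not associative, so splitting the same sum differently can change the result. The sketch below is illustrative only: `adaptive_chunk` is a hypothetical heuristic that picks a finer split for small batches (standing in for a kernel's load balancing), while the batch-invariant path always uses `FIXED_CHUNK`. Explicit loops are used instead of `sum()`, because recent Python versions use compensated summation in `sum()` for floats, which would hide the effect.

```python
"""Split-dependent float reductions vs a fixed, batch-invariant reduction order.

Assumptions: `adaptive_chunk` and `FIXED_CHUNK` are hypothetical; real kernels
choose splits on the GPU, not in Python.
"""


def chunked_sum(values, chunk_size):
    """Reduce fixed-size chunks left to right, then reduce the partial sums."""
    partials = []
    for start in range(0, len(values), chunk_size):
        acc = 0.0
        for v in values[start:start + chunk_size]:
            acc += v  # plain IEEE-754 addition, no compensation
        partials.append(acc)
    total = 0.0
    for p in partials:
        total += p
    return total


def adaptive_chunk(batch_size, n):
    # Hypothetical: split the reduction more finely when the batch is small,
    # to keep more cores busy. The split now depends on batch size.
    return 2 if batch_size < 8 else n


FIXED_CHUNK = 4  # batch-invariant: identical split regardless of batch size


def reduce_row(row, batch_size, batch_invariant):
    chunk = FIXED_CHUNK if batch_invariant else adaptive_chunk(batch_size, len(row))
    return chunked_sum(row, chunk)


if __name__ == "__main__":
    row = [1e16, 1.0, -1e16, 1.0]  # 1e16 + 1.0 rounds back to 1e16
    print(reduce_row(row, 1, False), reduce_row(row, 32, False))  # 0.0 1.0
    print(reduce_row(row, 1, True), reduce_row(row, 32, True))    # 1.0 1.0
```

The same row gives different answers depending on how many other requests share its batch, unless the split is pinned. Pinning it is also what costs speed, since the kernel can no longer rebalance work for small batches.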

Shows naive batch speculative decoding can violate output equivalence from ragged tensors; adds synchronization and dynamic grouping to address this and improve throughput (up to 3x at bs=8) without custom kernels. Useful for LLM serving engineers; probably not for end...
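A sketch of where the ragged shapes come from, assuming greedy (temperature 0) verification: each sequence accepts the longest prefix of draft tokens that matches the target model's own greedy choices, then takes the target's next token. Different sequences accept different counts, so a batch advances by uneven lengths. Token IDs are precomputed lists here, and `group_by_length` is a hypothetical grouping step for illustration, not the method from the paper.

```python
"""Greedy speculative-decoding verification over a batch, showing ragged acceptance.

Assumptions: `target[i]` is the target model's greedy token at draft position i,
plus one extra "bonus" position; `group_by_length` is hypothetical.
"""
from collections import defaultdict


def verify_greedy(draft, target):
    """Accept the longest matching draft prefix, then append the target's next token."""
    if len(target) != len(draft) + 1:
        raise ValueError("target must have one more token than draft")
    n = 0
    while n < len(draft) and draft[n] == target[n]:
        n += 1
    # The result equals what greedy decoding with the target model alone would emit.
    return draft[:n] + [target[n]]


def verify_batch(drafts, targets):
    return [verify_greedy(d, t) for d, t in zip(drafts, targets)]


def group_by_length(outputs):
    """Hypothetical dynamic grouping: bucket sequence indices by tokens advanced."""
    groups = defaultdict(list)
    for i, out in enumerate(outputs):
        groups[len(out)].append(i)
    return dict(groups)


if __name__ == "__main__":
    drafts = [[5, 7, 9], [1, 2, 3], [4, 4, 4]]
    targets = [[5, 7, 2, 8], [1, 2, 3, 6], [0, 4, 4, 4]]
    outs = verify_batch(drafts, targets)
    print(outs)                   # ragged: lengths 3, 4 and 1
    print(group_by_length(outs))
```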

For anyone interested this is how to do batching doitpshway.com/how-to-use-mic…

Safer (and Sexier) Chatbots, Better Images Through Reasoning, The Dawn of Industrial AI, Forecasting Time Series - DeepLearning. AI👇 deeplearning.ai/the-batch/issu…

Exactly — but once AI agents scale, API batching becomes the bottleneck. I published a production-ready async batch processor pattern for n8n (safe HTTP, auto-retry, dynamic waits). Works perfectly for multi-agent systems: → workflowslab.gumroad.com/l/batch-proces…
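For readers who want the general pattern outside n8n, here is a generic Python `asyncio` sketch of bounded-concurrency batch processing with automatic retries and backoff waits. It is not the workflow sold in the post; `call` is any async function you supply, and the defaults (`max_concurrency=5`, `attempts=3`, `base_delay=0.1`) are arbitrary assumptions.

```python
"""Bounded-concurrency async batch processor with retries and exponential backoff.

Generic sketch; `call` is a user-supplied async function (e.g. an HTTP request).
"""
import asyncio
import random


async def with_retry(call, item, attempts=3, base_delay=0.1):
    for attempt in range(attempts):
        try:
            return await call(item)
        except Exception:  # narrow this to transient errors in real code
            if attempt == attempts - 1:
                raise
            # Exponential backoff with jitter: roughly base, 2*base, 4*base, ...
            await asyncio.sleep(base_delay * 2 ** attempt * (1 + random.random()))


async def process_batch(call, items, max_concurrency=5, **retry_kwargs):
    sem = asyncio.Semaphore(max_concurrency)

    async def worker(item):
        async with sem:  # cap in-flight requests to respect API rate limits
            return await with_retry(call, item, **retry_kwargs)

    # return_exceptions=True: one failed item does not cancel the rest of the batch.
    return await asyncio.gather(*(worker(i) for i in items), return_exceptions=True)


if __name__ == "__main__":
    calls = {}

    async def flaky(x):
        # Fails once per item, then succeeds: simulates a transient API error.
        calls[x] = calls.get(x, 0) + 1
        if calls[x] < 2:
            raise RuntimeError("transient")
        return x * 10

    print(asyncio.run(process_batch(flaky, [1, 2, 3], max_concurrency=2, base_delay=0.01)))
```

Results come back in input order, and items that exhaust their retries appear as exception objects rather than aborting the whole batch.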

Batch processing (focused):
- Batch 1: House chores (laundry + dishes)
- Batch 2: Career tasks (CV + all job applications)

Do you see the difference? Your brain isn't constantly switching modes. You stay focused, finish faster, and actually feel productive ✅

You think multitasking is the best way to be productive? Hi, meet batch processing.✨ Batch processing is grouping similar tasks together and doing them in one focused block instead of jumping from one unrelated task to another. For example, you have to do your laundry,

When it comes to being productive, I talk about to-do lists a lot (maybe I'm obsessed😂). But have you ever heard of:
- eat the frog
- the 2-minute rule
- time blocking
- batch processing?

Range-based batching seems way more practical: pay once for a range, get a session token, and query freely within that range. It reduces payment overhead massively and makes more sense for how people actually use historical data, since you're usually looking at related blocks/transactions anyway.
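The proposal can be sketched as a tiny gateway, assuming everything below is hypothetical: `RangeGateway`, `open_session`, and `query` are illustrative names, the "payment" is just a counter, and the token is a random hex string rather than any real protocol. It shows the key property: one payment covers every query inside the range, and queries outside it are refused.

```python
"""Hypothetical range-based access: pay once for a block range, query it freely."""
import secrets
from dataclasses import dataclass


@dataclass
class RangeSession:
    token: str
    start_block: int
    end_block: int  # inclusive

    def covers(self, block):
        return self.start_block <= block <= self.end_block


class RangeGateway:
    def __init__(self):
        self.payments = 0  # stands in for whatever payment step a real service uses
        self.sessions = {}

    def open_session(self, start_block, end_block):
        self.payments += 1  # one payment for the whole range
        token = secrets.token_hex(16)
        self.sessions[token] = RangeSession(token, start_block, end_block)
        return token

    def query(self, token, block):
        session = self.sessions.get(token)
        if session is None or not session.covers(block):
            raise PermissionError(f"block {block} not covered by this session")
        return f"data for block {block}"  # placeholder for the real lookup


if __name__ == "__main__":
    gw = RangeGateway()
    tok = gw.open_session(100, 199)
    for b in range(100, 200):  # 100 related queries...
        gw.query(tok, b)
    print(gw.payments)  # ...but only one payment
```

With per-query payment, the same loop would incur 100 payments; the range session trades that for a single up-front cost, which fits access patterns that cluster around related blocks.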

Batch your tasks to boost productivity! Research, record, edit, and schedule in chunks. Analyze performance and tailor content accordingly. What's your favorite batching method? #Productivity #ContentCreation

