#benefitofsmallanguagemodels search results

After ~4 years building SOTA models & datasets, we're sharing everything we learned in ⚡The Smol Training Playbook We cover the full LLM cycle: designing ablations, choosing an architecture, curating data, post-training, and building solid infrastructure. We'll help you…

In the age of LLMs, smart people are getting smarter, while dumb people are getting dumber.

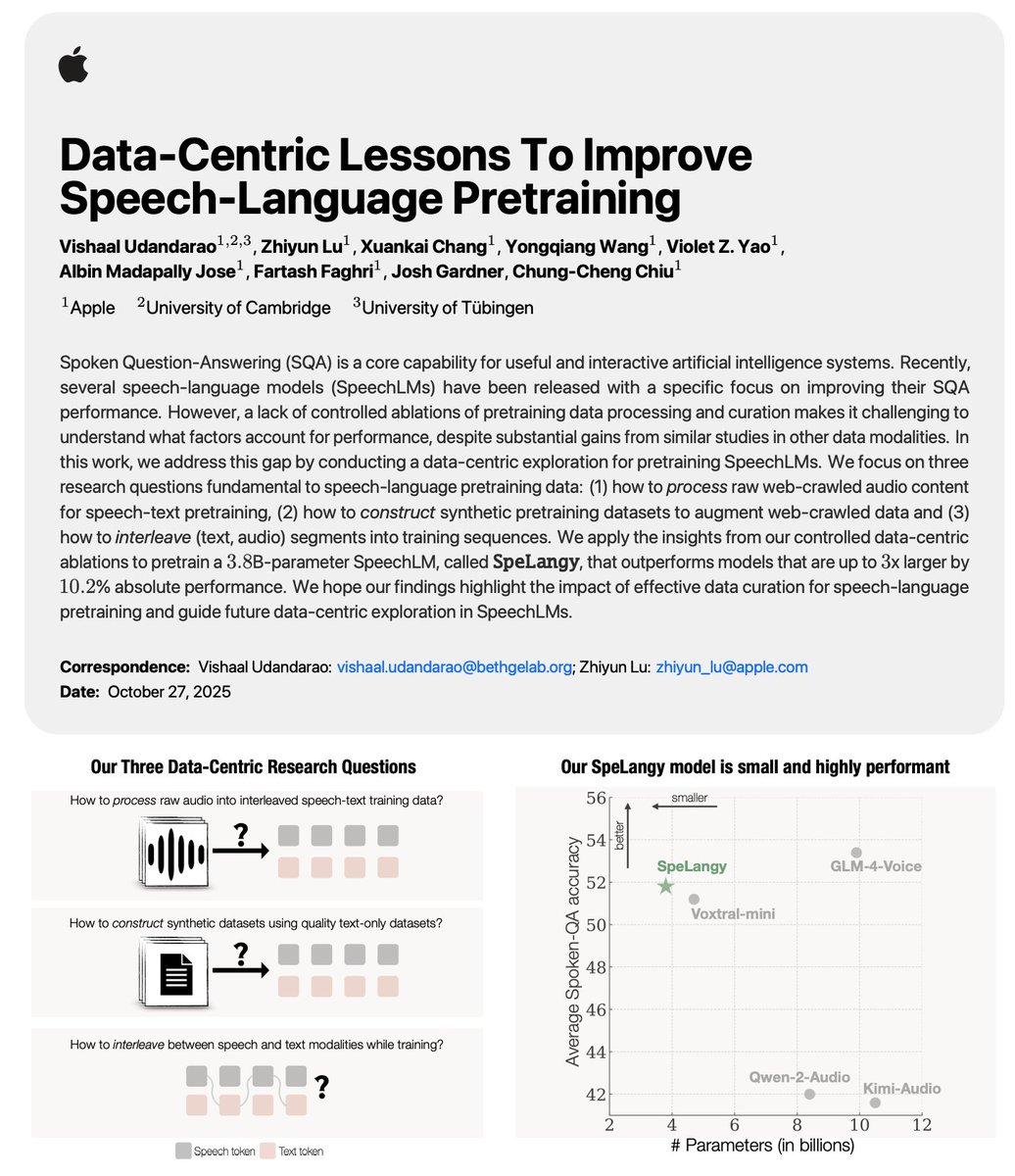

🚀New Paper arxiv.org/abs/2510.20860 We conduct a systematic data-centric study for speech-language pretraining, to improve end-to-end spoken-QA! 🎙️🤖 Using our data-centric insights, we pretrain a 3.8B SpeechLM (called SpeLangy) outperforming 3x larger models! 🧵👇

Small language models (SLMs) are revolutionizing enterprise AI. These compact powerhouses deliver domain-specific capabilities with reduced computational needs. From automating customer support to enhancing fraud detection, SLMs offer cost-effective, secure, and efficient AI…

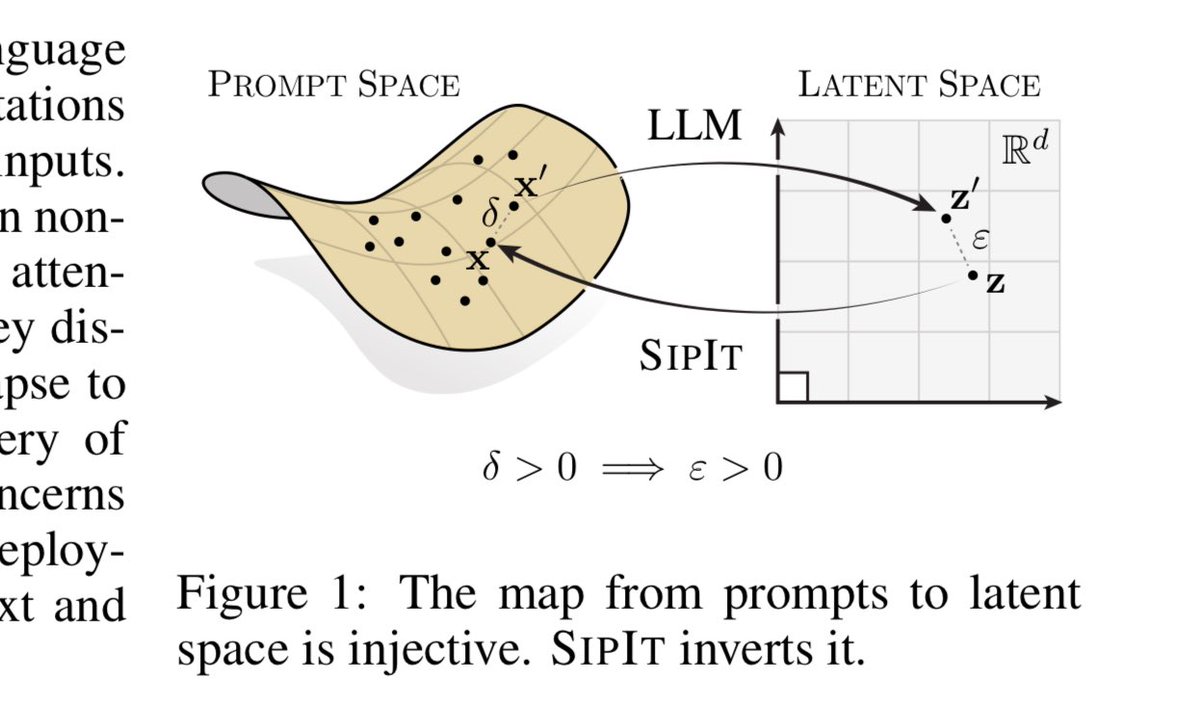

The Shocking Truth About What AI Remembers… LLMs Never Forget! Just as I stated in 2023 and began my research into this issue. It is now fully open and in an academic paper. So what is the big deal? Imagine feeding a sensitive prompt into ChatGPT, assuming the model processes…

We released SmolLM3! A smol (3B) model with: >Dual reasoning model (Choose whether to reason or not). >Long context (128k tokens). >Support for 6 languages (en, fr, es, de, it, pt). Our recipe included things like mid-training, model merging and model soup (1/2)

honestly, this is the best visualization of how large language models work that i've seen for a long time. > 3d interactive app, plays like a game > follow transformer operations step-by-step > the real architecture of Llama 8B > click on any layer, see formula and explanation…

NVIDIA's brilliant paper gives a lot of details and actionable techniques. 🎯 Small Language Models (SLMs) not LLMs are the real future of agentic AI. They gave a recipe for swapping out the large models with SLMs without breaking anything and show that 40%‑70% of calls in…

Media-generated speech can act as a bridge — giving the brain a steady model before human variability enters the mix. #SLP #autism

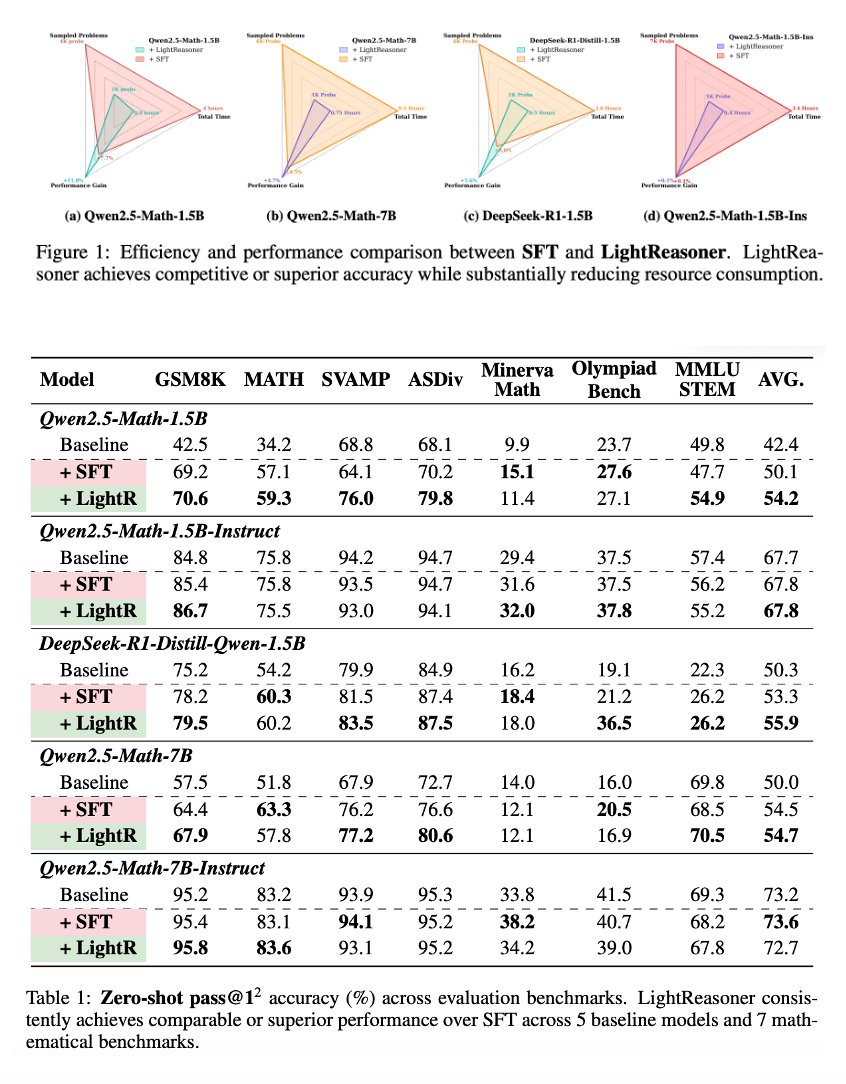

🔥 Introducing LightReasoner: Can Small Language Models Teach Large Language Models Reasoning? New Finding: We're flipping the script on AI training: small language models (SLMs) don't just learn from large language models (LLMs), they can actually teach LLMs better and faster!…

I'm not going to be at #emnlp2025 but check out my co-authors' presentations! meta-learning teaches LLMs to learn new words faster (@wentaow10) arxiv.org/abs/2502.14791 multilingual prompting increases the cultural diversity of LLM responses (Qihan Wang) arxiv.org/abs/2505.15229

A new paper should scare you. When LLMs compete for social media likes, they make things up. When they compete for votes, they fight. When optimized for audiences, they become misaligned. Why? LLMs are trained on Sewage of Reddit and Wikipedia. Off-line data from the…

Introducing SmolLM2: the new, best, and open 1B-parameter language model. We trained smol models on up to 11T tokens of meticulously curated datasets. Fully open-source Apache 2.0 and we will release all the datasets and training scripts!

🚀 Today, we are introducing SmolTools! 🚀 Last week, at Hugging Face we made a significant leap forward with the release of SmolLM2, a compact 1.7B language model that sets a new benchmark for performance among models of its size. But beyond the impressive stats, SmolLM2 truly…

Small Language Models are the Future of Agentic AI Lots to gain from building agentic systems with small language models. Capabilities are increasing rapidly! AI devs should be exploring SLMs. Here are my notes:

Small Language Models are the Future of Agentic AI arxiv.org/abs/2506.02153

Here are 15 reasons why you should consider switching from Large Language Models (LLMs) to Small Language Models (SLMs) 📈 Economic & Cost-Efficiency 1. Drastically Lower Inference Cost 2. Reduced Infrastructure & Maintenance 3. Cheaper Fine-Tuning Agility ⚡ Performance &…

LLMs have proven that producing language takes only a very simple equation, and that you don't need sensory input. In other words, language is a mathematical, self-sustaining system. That makes the consciousness question more complex and interesting; it does not explain it.

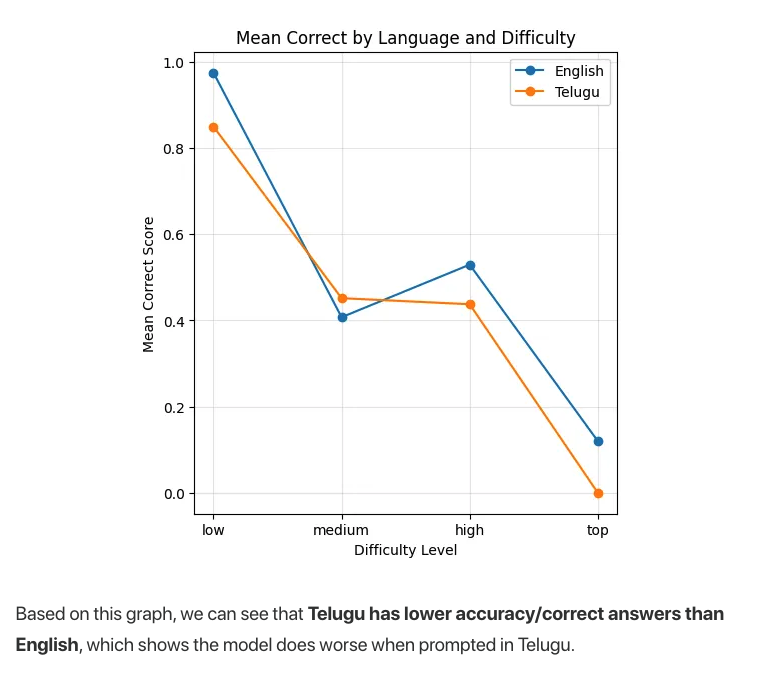

We dropped our explorations on how language impacts LLM reasoning in a new blog post! This work - done by our research intern @Madbonze16 - has many insights. 1st insight 👉 If the same math question is asked in Telugu, performance drops!

