#distributed_optimization search results

Distributed search is a way to search large data sets quickly by dividing the search workload among multiple servers. Learn more about its benefits and how Oracle AI Database can help you simplify your distributed search platform. social.ora.cl/60157cQS5
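The "divide the search workload among multiple servers" idea is usually described as scatter-gather: send the query to every shard, let each shard return its local best hits, then merge. Below is a minimal sketch under stated assumptions. The servers are simulated with threads, the toy scoring (count of query-term occurrences) is a stand-in for a real ranking function, and the names `shard_documents`, `search_shard`, and `distributed_search` are hypothetical. This is not Oracle's API or implementation.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def shard_documents(docs, num_shards):
    """Round-robin partition of (doc_id, text) pairs into num_shards shards (one per simulated server)."""
    shards = [[] for _ in range(num_shards)]
    for i, doc in enumerate(docs):
        shards[i % num_shards].append(doc)
    return shards


def search_shard(shard, query, k):
    """Toy scoring (an assumption): count query-term occurrences; return this shard's local top-k."""
    terms = query.lower().split()
    scored = []
    for doc_id, text in shard:
        words = text.lower().split()
        score = sum(words.count(t) for t in terms)
        if score > 0:
            scored.append((score, doc_id))
    return heapq.nlargest(k, scored)


def distributed_search(shards, query, k=3):
    """Scatter the query to all shards in parallel, then gather and merge the local top-k lists.

    The global top-k is always contained in the union of the local top-k lists,
    so each shard only needs to send back k hits.
    """
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = list(pool.map(lambda s: search_shard(s, query, k), shards))
    merged = [hit for partial in partials for hit in partial]
    return heapq.nlargest(k, merged)


docs = [
    (1, "distributed search splits the work"),
    (2, "search engines index documents"),
    (3, "distributed distributed systems"),
    (4, "cooking recipes"),
]
shards = shard_documents(docs, num_shards=2)
print(distributed_search(shards, "distributed search", k=2))  # → [(2, 3), (2, 1)]
```

Returning only k hits per shard keeps the gather step cheap: network traffic grows with the number of shards times k, not with the size of the data set.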

Decentralized Bilevel Optimization: A Perspective from Transient Iteration Complexity ift.tt/UYolx24

Distributed #machinelearning Is The Answer To Scalability And Computation Requirements; We all know the traditional way of #machinelearning, where prog 7wdata.be/data-managemen… #CMO #datagovernance

Learn how to speed up your deep learning with Distributed Training and TPUs. Perfect for your first distributed TPU project. pyimagesearch.com/2021/12/06/fas…

haha yeah, that's probably a more efficient approach. Yes, it's a "distributed orchestrator" that lets the network make independent decisions (and even learn in real time; I am working on that now) and then make decisions dynamically ceur-ws.org/Vol-1583/CoCoN…

decentralized compute improves efficiency via distributed nodes

but in distributed training you can quantize wherever the master weights are stored: each device is only in charge of quantizing its own small local subset of the params, in parallel, and the overall batch size is vastly higher
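A minimal sketch of "each device quantizes only its local shard of the params", under loudly stated assumptions: the parameters are a flat Python list split into contiguous shards, threads stand in for devices (CPython threads do not give true parallel speedup for this CPU-bound loop), and the quantization scheme is symmetric absmax int8 with one scale per shard, chosen for illustration rather than taken from the post. The helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def quantize_int8(values):
    """Symmetric absmax int8 quantization of one shard; returns (integer codes, float scale)."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = amax / 127 if amax > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def shard_params(params, num_devices):
    """Split a flat parameter list into contiguous shards, one per simulated device."""
    size = max(1, -(-len(params) // num_devices))  # ceiling division
    return [params[i:i + size] for i in range(0, len(params), size)]


def sharded_quantize(params, num_devices):
    """Each simulated device quantizes only its own shard, and all shards run concurrently."""
    shards = shard_params(params, num_devices)
    if not shards:
        return []
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        return list(pool.map(quantize_int8, shards))


params = [0.5, -1.0, 0.25, 2.0, -0.1, 0.0]
for codes, scale in sharded_quantize(params, num_devices=3):
    print(codes, round(scale, 5))
```

Using a per-shard scale means a device needs only its local absmax, so no cross-device communication is required; a single global scale would first need an all-reduce of the per-shard maxima.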

You’re no longer tied to a single provider’s GPU inventory. No more dependency on one datacenter. No more waiting in a queue built by someone else’s demand. You’re tapping into a global mesh of lightweight devices running real inference work in parallel, on demand, without the…

Because under the hood, your request doesn’t hit a single GPU cluster. It enters a distributed mesh powered by thousands of global browser nodes. A network that scales not with hardware orders… but with people. That simplicity hides an enormous shift. (3/6)

A Unified Convergence Analysis for Semi-Decentralized Learning: Sampled-to-Sampled vs. Sampled-to-All Communication. arxiv.org/abs/2511.11560

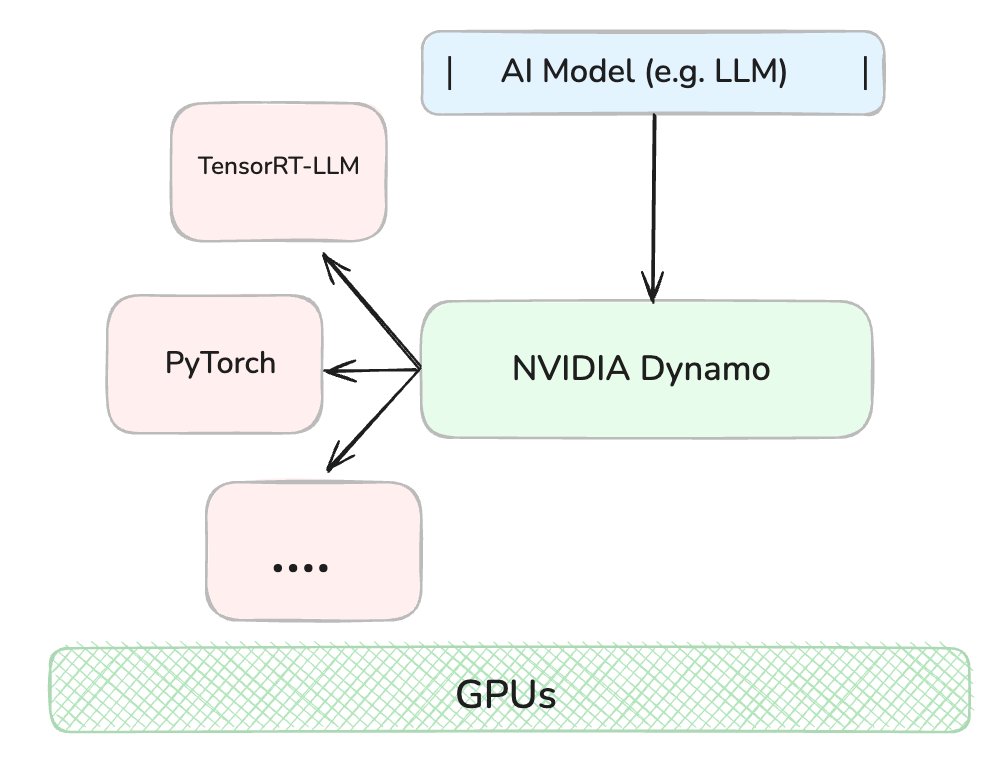

Distributed Inference with NVIDIA Dynamo Open-Source Library by Almog Elfassy medium.com/p/distributed-…

Jitian Liu, et al.: Distributed Optimization of Pairwise Polynomial Graph Spectral... arxiv.org/abs/2511.11517 arxiv.org/pdf/2511.11517 arxiv.org/html/2511.11517

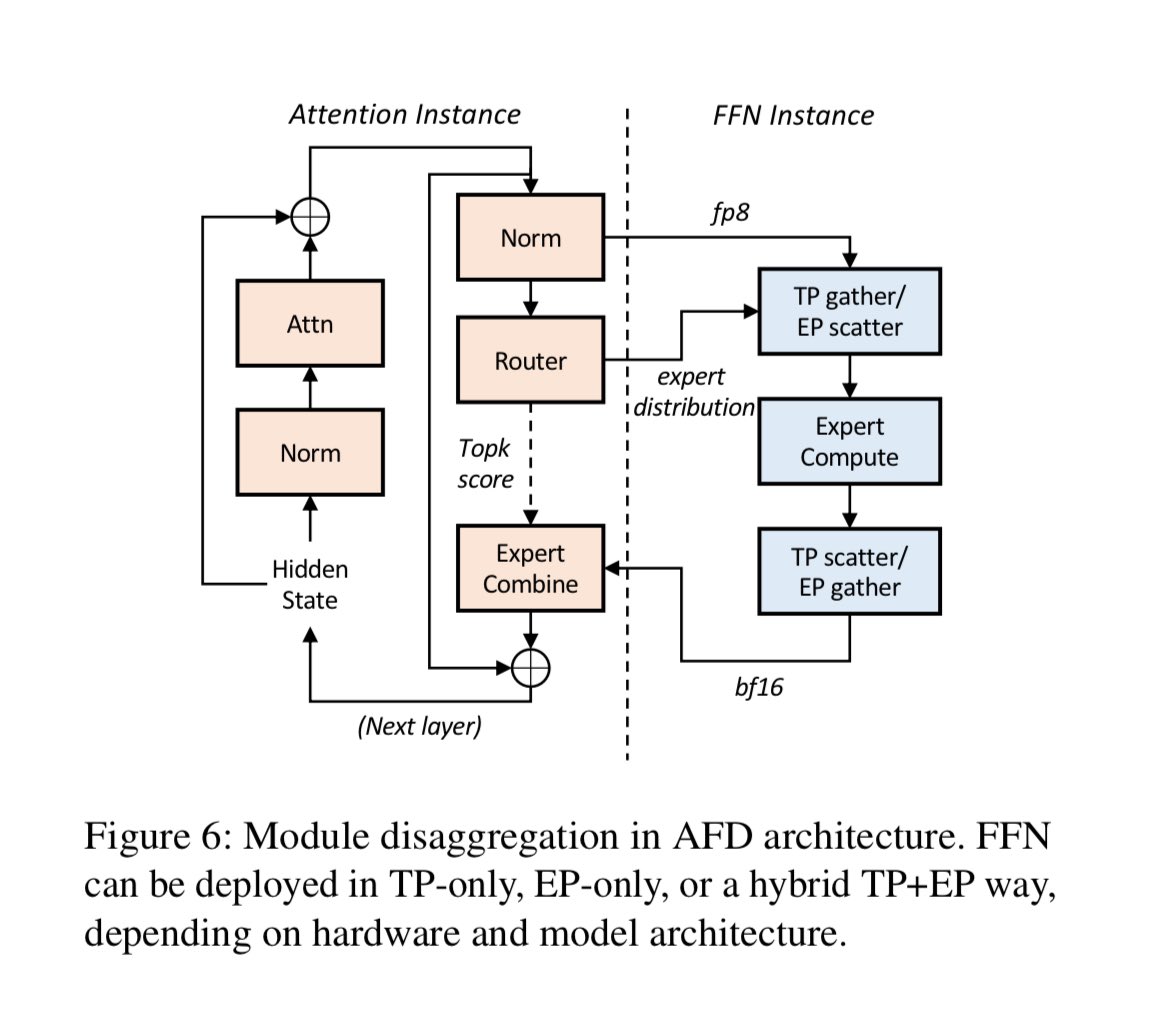

The creativity in distributed inference systems here is so inspiring. Attention-FFN disaggregation (from StepFun’s Step-3 paper). Upshot: in a disaggregated serving deployment, decode can be optimized further by disaggregating the attention and MoE layers. Benefit: similar to P/D (prefill/decode) disaggregation, attn is…

'Distributed team' 🤔 Add a timechain for a collective reference to get some extra digits on those reasoning gains :) It's the only cyber-native reference of different physical topography (time) that focuses data-streams, especially swarms by synchronicity beyond what is…

Our paper examines optimizing distributed matrix multiplication across multiple GPUs using one-sided communication. arxiv.org/pdf/2510.08874
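To make "one-sided communication" concrete, here is a small simulation of a distributed matrix product. It is a generic 1D row decomposition, not the algorithm from the linked paper: threads stand in for GPUs, each rank exposes its block of B in a "window", and other ranks read that window directly without the owner participating (a stand-in for an RMA get; here it is just a shared-memory read). All function names are hypothetical, and the sketch assumes the row counts of A and B are divisible by the number of ranks.

```python
import threading


def matmul(a, b):
    """Dense product of a (n x k) and b (k x m), both given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def split_rows(m, p):
    """Split a matrix into p equal row blocks (assumes len(m) is divisible by p)."""
    size = len(m) // p
    return [m[i * size:(i + 1) * size] for i in range(p)]


def distributed_matmul(a, b, p):
    """Simulate p ranks computing C = A @ B; rank r owns row block r of A and of B."""
    k = len(b) // p                 # rows of B (= columns of A) per block
    a_blocks = split_rows(a, p)
    windows = split_rows(b, p)      # window j holds rank j's block of B
    results = [None] * p
    fence = threading.Barrier(p)    # mirrors an RMA fence: all ranks start the access epoch together

    def rank(r):
        fence.wait()
        acc = [[0.0] * len(b[0]) for _ in a_blocks[r]]
        for step in range(p):
            j = (r + step) % p      # stagger targets so ranks do not all read the same window at once
            b_j = windows[j]        # one-sided "get": rank j does nothing to serve this read
            a_rj = [row[j * k:(j + 1) * k] for row in a_blocks[r]]  # A columns matching B block j
            partial = matmul(a_rj, b_j)
            acc = [[x + y for x, y in zip(u, v)] for u, v in zip(acc, partial)]
        results[r] = acc

    threads = [threading.Thread(target=rank, args=(r,)) for r in range(p)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return [row for block in results for row in block]


a = [[1, 2, 3, 4], [5, 6, 7, 8]]
b = [[1, 0], [0, 1], [1, 1], [2, -1]]
print(distributed_matmul(a, b, p=2))  # → [[12.0, 1.0], [28.0, 5.0]]
```

The point of the one-sided pattern is that the owner of B_j never posts a matching send, so a rank can fetch the next block while still computing on the current one; this sketch does the fetch and compute sequentially for clarity.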

A Bias Correction Mechanism for Distributed Asynchronous Optimization Yuan Gao, Yuki Takezawa, Sebastian U Stich. Action editor: Yingbin Liang. openreview.net/forum?id=8doMb… #asyncbc #asynchronous #distributed

Another "decentralized" AI project touts user-owned infrastructure, but their core inference still hums along on centralized cloud GPUs. Apparently, "distributed" now means geographically diverse server farms. Classic Web3 misdirection.
