#inferenceacceleration search results

We're excited to announce Pliops' latest advancements in LLM #inferenceacceleration. Our demos show a >2X performance improvement over standard vLLM, & this is just the start. Stay tuned for more details, and join us at SC24 for the public demo. Contact: [email protected].

Escape the Cloud Tax - Post 5: Serve Faster. Spend Smarter. Scale Better. #LLM #EdgeAI #InferenceAcceleration #MLOps #GenAI #SelfHostedLLM #TokenThroughput #EdgeMatrix #LLMInfraUnlocked #AIOnCPUs #RaspberryPiAI #Inference #GenerativeAI #SelfHostedLLM linkedin.com/pulse/escape-c…

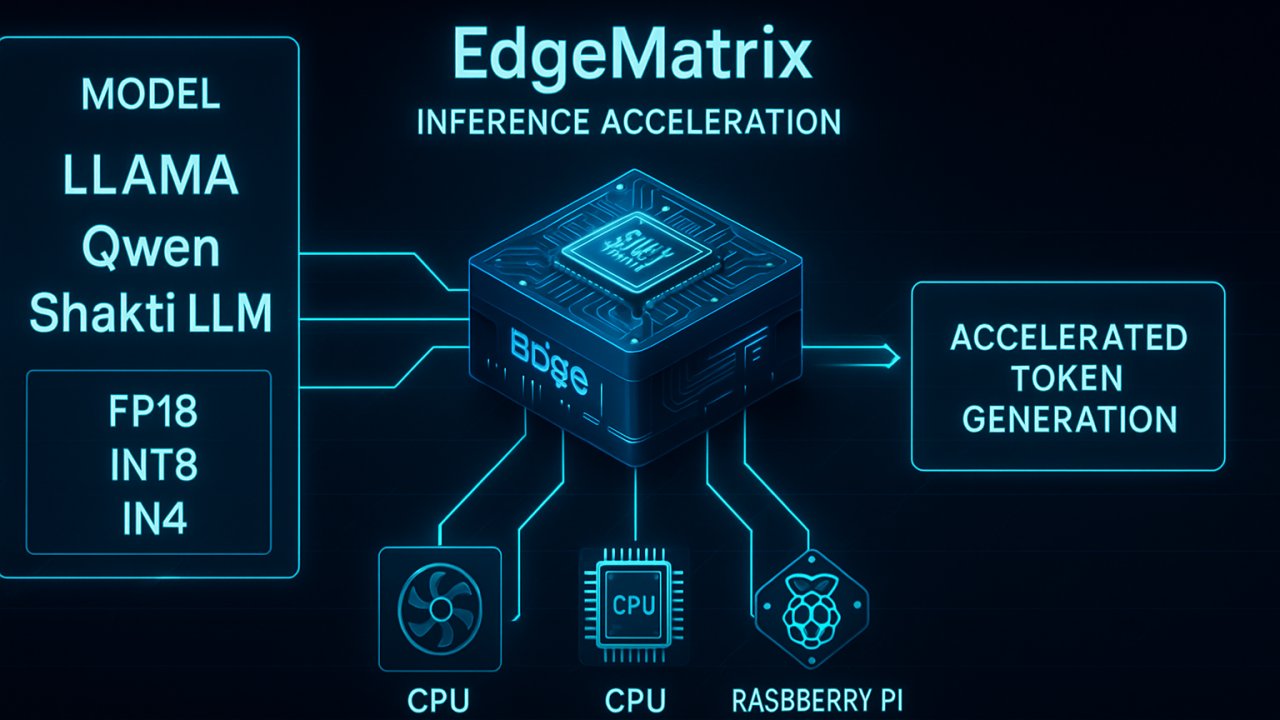

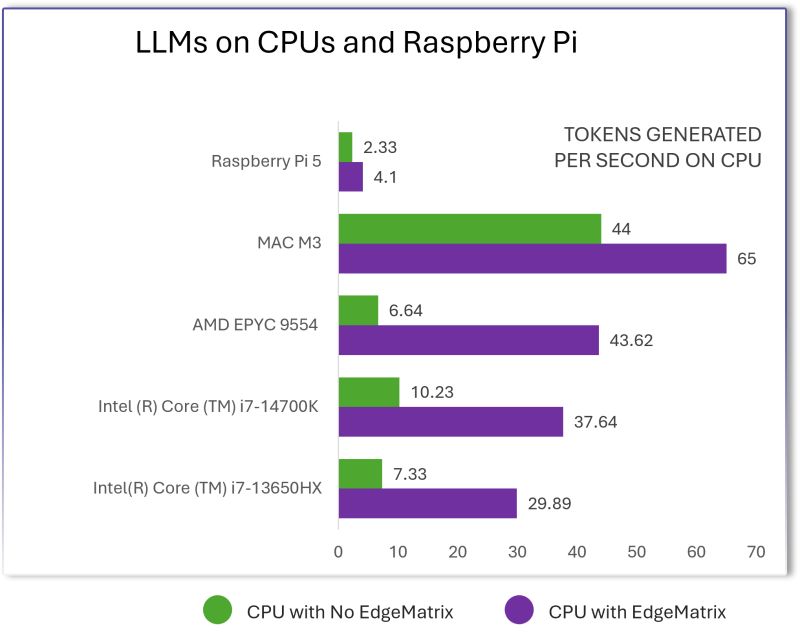

EdgeMatrix enables #LLM inference on CPUs at the edge, with:

- Near real-time generation speeds
- 3×–6× acceleration on average
- Zero dependence on cloud APIs or external GPUs

#EdgeAI #InferenceAcceleration #MLOps #GenAI #SelfHostedLLM #TokenThroughput linkedin.com/feed/update/ur…

#InferenceAcceleration with Adaptive Distributed #DNN Partition over #DynamicVideoStream, by Jin Cao, Bo Li, Mengni Fan, Huiyu Liu, from Huazhong University of Science and Technology mdpi.com/1999-4893/15/7… #mdpialgorithms via MDPI
