#mllm search results

Open-source excellence! InternVL2.5, including our 78B model, is now available on @huggingface. InternVL2.5-78B is the first open #MLLM to achieve GPT-4o-level performance on MMMU.

🤯 AI research is moving FAST! Just saw "LatticeWorld," a framework using Multimodal LLMs for interactive complex world generation. Imagine AI building entire game worlds on the fly! #AI #MLLM #GameDev

🚨 LMCache now turbocharges multimodal models in vLLM! By caching image-token KV pairs, repeated images now get ~100% cache hit rate — cutting latency from 18s to ~1s. Works out of the box. Check the blog: blog.lmcache.ai/2025-07-03-mul… Try it 👉 github.com/LMCache/LMCache #vLLM #MLLM…

Wanna generate webpages automatically? Meet🧇WAFFLE: multimodal #LLMs for generating HTML from UI images! It uses structure-aware attention & contrastive learning. arxiv.org/abs/2410.18362 #LLM4Code #MLLM #FrontendDev #WebDev @LiangShanchao @NanJiang719 Shangshu Qian

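A pitfall that could send AI robots out of control?! 🚨 Watch out for dangerous visual trigger attacks! via: A must-read if you plan to bring AI into your business! A risk has been pointed out that MLLM-equipped robots can malfunction when shown specific visual triggers. Protect the reliability and safety of your business! 🛡️ #AIセキュリティ #MLLM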

$MLLM team anonymous, high risk to dump see rugcatcher.finance MultiLLM report here CA: BV7jYpQgdgQhx8eyVLjp2vyJhpFDHic1Q3jAYGTZpump #MLLM

Two of our papers have been accepted to the #ACL2025 main conference! Try our code generation benchmark 🐟RepoCod (lt-asset.github.io/REPOCOD/) and website generation tool 🧇WAFFLE (github.com/lt-asset/Waffle)! #LLM4Code #MLLM #FrontendDev #WebDev #LLM #CodeGeneration #Security

🔥🔥Tongyi Mobile-Agent Team presents Semi-online Reinforcement Learning, UI-S1, which simulates online RL on offline trajectories to handle sparse rewards and deployment costs. 📖Paper: huggingface.co/papers/2509.11… 🔗Code: github.com/X-PLUG/MobileA… #LLMs #Multimodal #MLLM @_akhaliq

🔥🔥🔥We introduce an O3-like multimodal reasoning model, VLM-R3, which seamlessly weaves the relevant sub-image content back into an interleaved chain-of-thought. 📖Paper: huggingface.co/papers/2505.16… 🔗Code: github.com/X-PLUG #LLMs #Multimodal #MLLM @_akhaliq

Apple releases Ferret 7B multimodal large language model (MLLM) #Apple #Ferret #MLLM #LLM #AI #TechAI #LearningAI #GenerativeAI #DeepbrainAI

TFAR: A Training-Free Framework for Autonomous Reliable Reasoning in Visual Question Answering. Zhuo Zhi, Chen Feng, Adam Daneshmend et al. Action editor: Nicolas THOME. openreview.net/forum?id=cBAKe… #reasoning #mllm #tools

🔥🔥Mobile-Agent-E Release!! Hierarchical multi-agent framework, and a cross-task long-term memory with self-evolving 💡Tips and 🚀Shortcuts. 🌏Project: x-plug.github.io/MobileAgent/ 📖Paper: huggingface.co/papers/2501.11… 🔗Code: github.com/X-PLUG/MobileA… #LLMs #MLLM #Agent @_akhaliq

Introducing Ovis 2.5 - our latest multimodal LLM breakthrough! Featuring enhanced visual perception & reasoning capabilities, consistently outperforming larger models across key benchmarks. #Ovis #MLLM

🦙🦙🦙We present #LAMM, a growing open-source community aimed at helping researchers and developers quickly train and evaluate Multi-modal Large Language Models (#MLLM), and further build multi-modal AI agents. As one of the very first open-source endeavors in the #MLLM field,…

LAMBDA: Advancing Autonomous Reasoning with Nuro's MLLM In this video, you'll see LAMBDA, Nuro's Multimodal Large Language Model (MLLM), and its integration into The Nuro Driver's™ onboard autonomy stack. Video source: @nuro #autonomousvehicles #MLLM #technology #engineering

🤔 Which multimodal LLM truly hits the mark on precision pointing & visual reasoning? 🏆 Jump into our #PointBattle and watch top MLLMs go head-to-head—only one can point supreme! 👉 Try it now: de87c27de85699eb1b.gradio.live #AI #MLLM #Robotics

🔥🔥PC-Agent Release!! We propose the PC-Agent framework to handle complex interactive environments and complex tasks in PC scenarios. 📖Paper: huggingface.co/papers/2502.14… 🔗Code: github.com/X-PLUG/MobileA… #LLMs #Multimodal #MLLM @_akhaliq

Just wrapped up Day 3 at @DeepIndaba! 🌟 Thrilled to share our latest research on low-resource AI: "Closing the Gap in ASR" & "Challenging Multimodal LLMs" 💡 Great convos & feedback! #AfricanNLP #ASR #MLLM #LyngualLabs

PixelCraft significantly boosts performance for strong MLLMs (GPT-4o, Claude 3.7) on tough benchmarks like CharXiv, ChartQAPro, & Geometry3K. Paper: arxiv.org/pdf/2509.25185 Code: github.com/microsoft/Pixe… #AI #MLLM #VisualReasoning #ComputerVision #MultiAgent

AI revolution! DeepMMSearch-R1 is the first #MLLM to outperform #GPT in multimodal web search and #VQA. It uses self-correction and image-cropping search to obtain real-time knowledge. The future of web search (#BúsquedaWeb) has arrived! #DeepMMSearchR1 youtu.be/7MapdFGHl1o

😆 We are presenting our work, “Bidirectional Likelihood Estimation with Multi-Modal Large Language Models for Text-Video Retrieval” in Hawaii, ICCV’25! Time: 10/23 (Thu), 10:45 Location: Exhibit Hall I PosterID: 232 Paper: arxiv.org/pdf/2507.23284 #ICCV2025 #Retrieval #MLLM

🔥🔥🔥mPLUG-Owl3: Towards Long Image-Sequence Understanding in Multi-Modal Large Language Models 📖Paper: arxiv.org/abs/2408.04840 🔗Code: github.com/X-PLUG/mPLUG-O… #LLMs #Multimodal #MLLM @_akhaliq

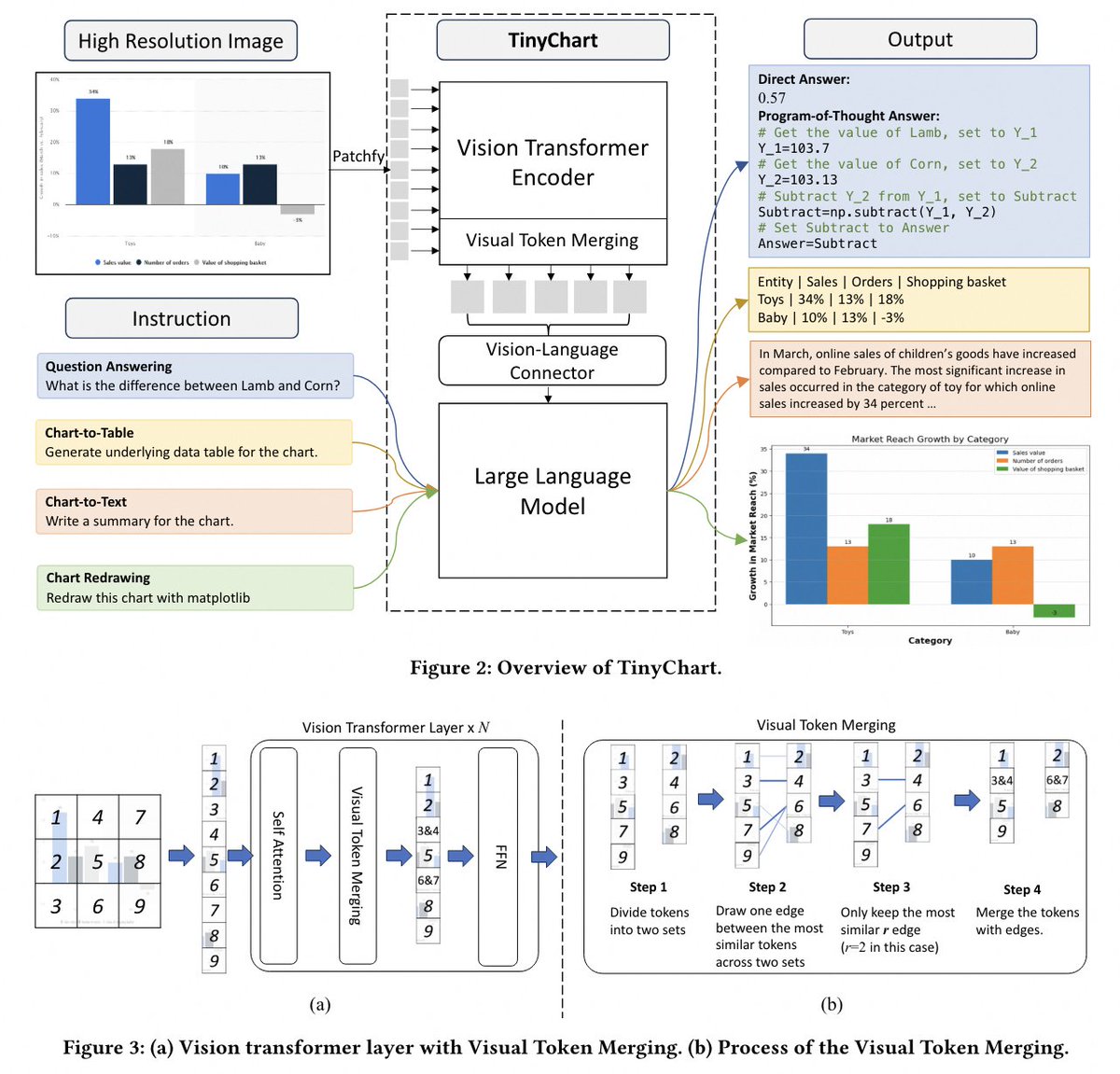

🚀mPLUG's latest work!! 🌏TinyChart: Efficient Chart Understanding with Visual Token Merging and Program-of-Thoughts Learning 🔗Code: github.com/X-PLUG/mPLUG-D… 📚Paper: huggingface.co/papers/2404.16… #multimodal #LLM #MLLM @_akhaliq

Does this mean the end of #Photoshop??? 🙄 #Apple just unveiled a revolutionary #MLLM called MGIE! 🚀 #ai #imageediting #innovation #opensource #opensourceai #opensourcecommunity #digitalmedia #technews #applemgie #artificialintelligence #genai #generativeai #generativeart

just found TinyGPT-V, a parameter-efficient #MLLM built on Phi-2 small language model framework with very promising benchmarks arxiv.org/abs/2312.16862

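🚀 Explore a new frontier in multimodal learning! HyperLLaVA significantly improves the performance of MLLMs through dynamic tuning of visual and language experts. Check out this cutting-edge research and join us in opening a new chapter in multimodal intelligence! 🌟 #MLLM #HyperLLaVA #MultimodalAI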

HKU and Tencent propose Plot2Code benchmark evaluating Multimodal LLMs' code generation from scientific plots, highlighting challenges and driving future research in visual coding. #AI #MLLM #DataScience

Multimodal models that confuse left and right… are now learning 3D space like humans. This new research redefines spatial understanding in AI. A thread on Multi-SpatialMLLM — and how it may change robotics, AR, and more 🚀🧠 #AI #MLLM #ComputerVision #Robotics

BharatGen Source: NEXT IAS magazine - Building ethical, inclusive, and region-specific AI solutions. - BharatGen was launched by the Minister of State (Independent Charge) for Science and Technology. Read more - nextias.com/ca/current-aff…भारत-ai-llm-मॉडल-विकसित #NextIAS #Mllm
