#sparseattention search results

🚨Whoa! #DeepSeek just dropped a #SparseAttention model that slashes API costs by half. The era of budget AI apps begins now. #AI #TechNews #Innovation #APIRevolution #HiddenBrains

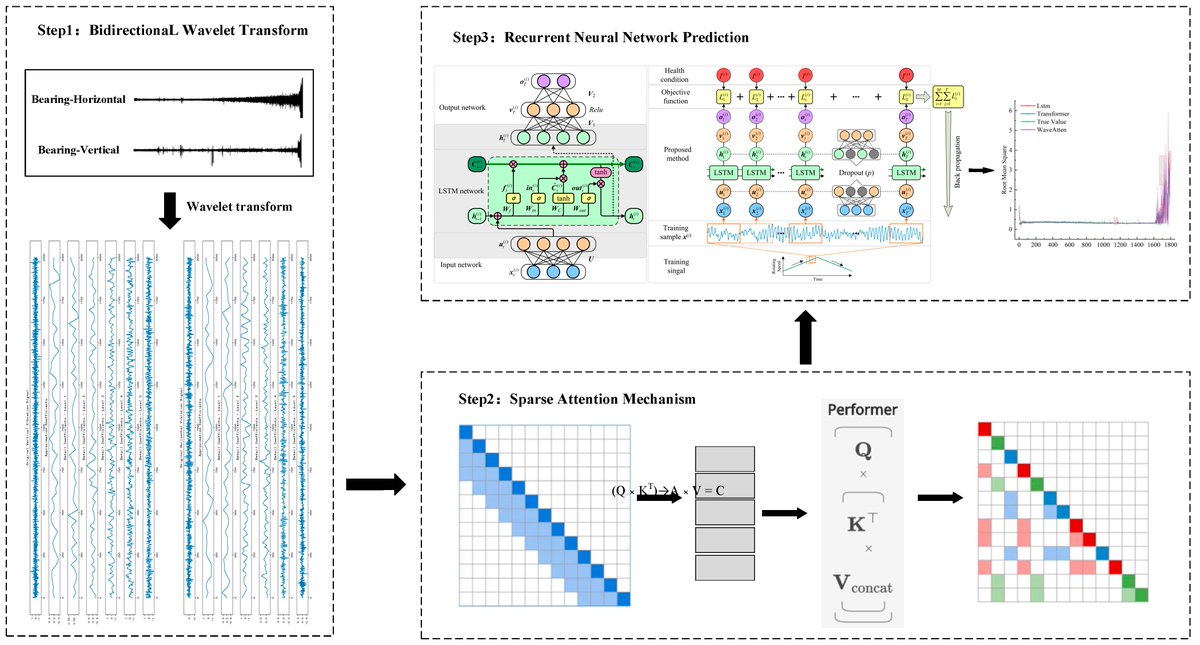

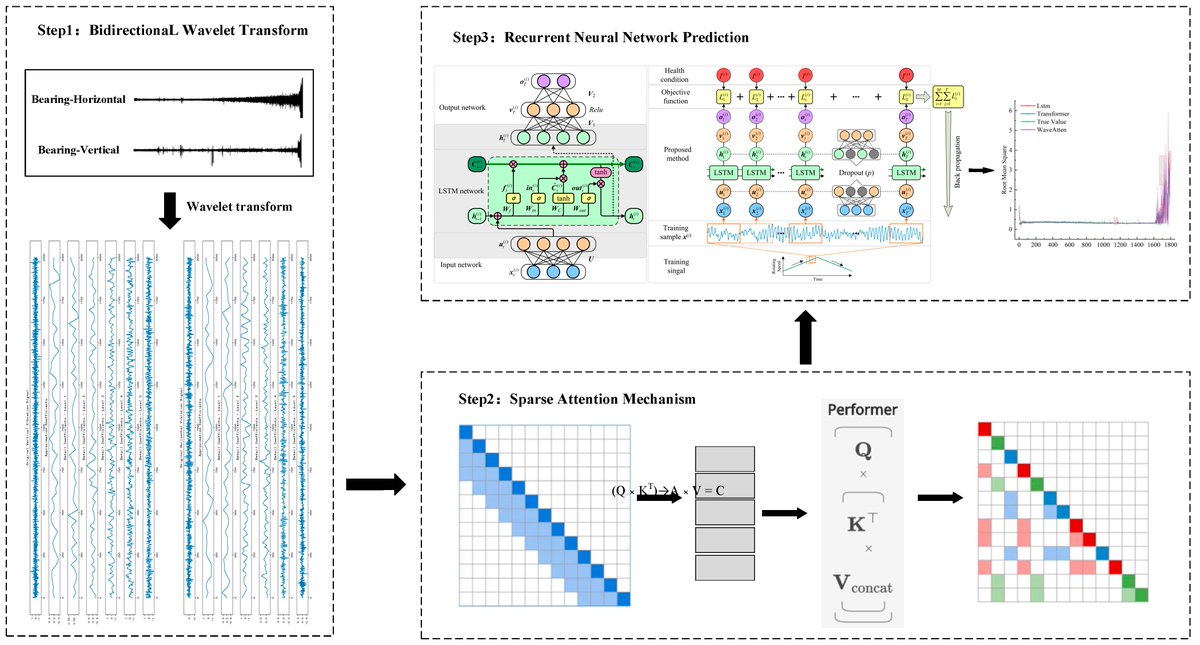

Check this newly published article "WaveAtten: A Symmetry-Aware #SparseAttention Framework for Non-Stationary Vibration #SignalProcessing" at brnw.ch/21wXpjo Authors: Xingyu Chen and Monan Wang #mdpisymmetry #wavelettransform #deeplearning

DeepSeek’s new sparse attention model cuts API costs by 50%: efficient, affordable & scalable, without losing performance. Could this break the cost barrier for AI adoption? #DeepSeek #sparseattention #AITECH #TechInnovation #artificialintelligence #codedotetechnologies

⚡Step into the future of #LLMs! Join the Sword AI Seminar on Nov 5 at @swordhealth Lisbon to explore #sparseattention, extending context windows & making #AI more efficient. Deep dive, Q&A & networking. 🎟️ Secure your spot: docs.google.com/forms/d/e/1FAI…

🧠 Meet DeepSeek Sparse Attention — a smarter way to scale AI models efficiently. ⚡ Read more 👉 extrapolator.ai/2025/09/30/dee… 🔍 Category: #AIArticles | via @ExtrapolatorAI #AI #SparseAttention #DeepSeek #AIModels #MachineLearning #DeepLearning #LLM #GenerativeAI #AITutorials…

DeepSeek V3.2-Exp: Optimize Long-Context Processing Costs with Sparse Attention #DeepSeek #SparseAttention #AIOptimization #CostEfficiency #LongContextProcessing itinai.com/deepseek-v3-2-… Understanding the Target Audience The primary audience for DeepSeek V3.2-Exp includes AI d…

Giving #deepseek's #sparseattention some #attention as part of the 2025 #inference revolution. #ai #genai @deepseek_ai linkedin.com/pulse/what-yea…

linkedin.com

What a year in Inference!! From Attention to Sparse Attention

Introduction In August 2024, I argued that the primary bottleneck in AI was compute, not network bandwidth. By the end of the year, it seemed natural to me to assume the industry would soon focus on...

🔥 What is the Sparse Attention architecture? A technology that cuts the cost of processing long texts by up to 80% ✨ Benefits: ✅ Processes 128K-token texts ✅ Reduces O(n²) to O(n) ✅ Preserves output quality ✅ Saves energy 📖 Article: 🔗 deepfa.ir/blog/sparse-at… #SparseAttention #AI #هوش_مصنوعی #NLP #Transformers
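
A rough way to see where an O(n²) to O(n) claim can come from is to count how many query-key pairs get scored. The sketch below is only an illustration: the function names and the per-query budget `k = 2048` are assumptions made here, not figures from any post or from DeepSeek's implementation. It also ignores whatever it costs to choose which keys to keep, so the linear count holds only for a fixed `k` and a cheap selection step.

```python
def dense_pairs(n: int) -> int:
    """Query-key pairs scored by dense causal attention over n tokens."""
    # Token i (0-based) attends to itself and the i tokens before it.
    return n * (n + 1) // 2


def sparse_pairs(n: int, k: int) -> int:
    """Pairs scored when each query keeps at most k keys (hypothetical budget)."""
    if n <= k:
        return dense_pairs(n)  # every query still sees all of its keys
    # The first k queries are dense; each of the remaining n - k scores exactly k keys.
    return dense_pairs(k) + (n - k) * k


if __name__ == "__main__":
    k = 2048  # assumed per-query key budget, not a figure from any post
    for n in (4_096, 32_768, 131_072):
        ratio = dense_pairs(n) / sparse_pairs(n, k)
        print(f"n={n:>7}  dense={dense_pairs(n):>14,}  sparse={sparse_pairs(n, k):>12,}  ratio={ratio:5.1f}x")
```

With `k` fixed, the dense count grows quadratically in `n` while the sparse count grows linearly, so the gap widens as the context gets longer. Real savings depend on the selection mechanism and hardware, which this count does not model.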

⚡️ Lightning in a bottle for LLMs: DeepSeek’s Sparse Attention cuts long-context compute while keeping quality high. If it scales, efficient AI becomes the default. Dive in: medium.com/@ai_buzz/light… #DeepSeek #SparseAttention #LLM #AI

medium.com

Lightning in a Bottle: How DeepSeek’s Sparse Attention Is Quietly Revolutionizing AI

Looking back at the recent surge of innovation in AI, I found myself captivated by DeepSeek’s bold move into efficient large language…

💡 DeepSeek Unveils Sparse Attention Model to Halve AI API Costs The new V3.2-exp model reduces long-context AI inference costs by 50%, enabling cheaper, faster, and more efficient AI operations. Read the analysis: tinyurl.com/34kwzn63 #AI #SparseAttention #DeepSeek

DeepSeek launches V3.2-Exp with its new Sparse Attention tech, slashing API costs by 50% while keeping performance on par with V3.1. A major move in the AI infrastructure pricing race. #TOAINews2025 #DeepSeek #SparseAttention #AI

DeepSeek unveils its V3.2-exp model with breakthrough sparse attention—for the first time, low-cost long-context AI becomes feasible, bringing powerful new capabilities to next-gen language models. #SparseAttention #AIResearch theaiinsider.tech/2025/09/30/dee…

DeepSeek’s new sparse attention model runs faster, costs 50% less, and needs less hardware. Is this the future of efficient AI? 🧠⚡ #AI #DeepSeek #SparseAttention #yugtoio #technews yugto.io/deepseeks-new-…

yugto.io

DeepSeek’s New Sparse Attention Model Promises Faster AI at Half the Cost — Even with Less Hardware

DeepSeek launches a model with sparse attention 🚀 ➡️ Cuts API costs by up to 50% ➡️ Ideal for long contexts ➡️ Now available on Hugging Face At Qwerty we analyze what it means for AI products 👉 somosqwerty.com/blog #AI #SparseAttention #Qwerty

#DeepSeek's efficiency gains via 8-bit quantization, #sparseattention, & #knowledgedistillation slash computational costs. But are we trading security for efficiency? Explore the risks & why AI-led #automation platforms might be smarter for enterprises: shorturl.at/6gfeD

DeepSeek's native sparse attention is implemented in pure C and CUDA! Feel free to contribute! Link: github.com/a-hamdi/native… #DeepSeek #SparseAttention #C #CUDA #AI #MachineLearning #OpenSource

DeepSeek launches sparse attention model! Cutting AI API costs by 50% without sacrificing performance. Developers, are you ready? #AI #SparseAttention #DeepSeek shorturl.at/CUCae

MInference (Milliontokens Inference): A Training-Free Efficient Method for the Pre-Filling Stage of Long-Context LLMs Based on Dynamic Sparse Attention itinai.com/minference-mil… #LongContextLLMs #MInference #SparseAttention #AIevolution #BusinessTransformation #ai #news #llm #m…

DeepSeek AI Introduces NSA: A Hardware-Aligned and Natively Trainable Sparse Attention Mechanism for Ultra-Fast Long-Context Training and Inference #DeepSeekAI #NSAMechanism #SparseAttention #AItechnology #LongContextTraining itinai.com/deepseek-ai-in…

Your attention is declining, but it isn’t weak. It’s engineered. Infinite scroll, notifications, and personalization loops change what feels worth noticing. (the same pattern as slot machines) Interactive art exposes this architecture in real time. We must reclaim our…

All of us are immersed and lost in digital screens. No talk, no human attention, just every person with their own screen.

But does sparse attention let you have only 10x more expensive serving when you are at least 20x the size? I thought linear was cheap to serve.

Conversation quality drops the moment phones enter peripheral vision. Half-attention means half-processing, which means zero retention of anything that wasn’t immediately relevant.

Attention is a true zero-sum game: attention paid in one place is attention not paid in another. What would your feed look like if you took that seriously?

You can’t attract what’s meant for you when half of your attention is scattered across people you don’t even see clearly.

Attention scarcity is exploited. Flooding feeds with sensational content drowns out competing perspectives, ensuring influencers control the narrative. #ActivismSyndicate Voices of Doom

The Voices of Doom strategy turns private frustrations into publicly amplified crises, encouraging followers to vent aggressively rather than seek constructive solutions. #ActivismSyndicate

Attention only matters when it’s scarce. When everyone throws it around carelessly, connection loses its weight.

Attention behaves like a scarce resource. Fewer tasks reduce friction and stronger incentives increase energy toward the target. Clear input yields predictable output.

1/15 🧵 The problem: We're trained from birth to collapse this natural multiplicity into "focus." School, work, society - everything rewards singular attention. But this creates a massive blind spot to our actual capacity.

penning a diatribe against the poly attention economy. inattentive!!1!

Focused indifference triggers scarcity psychology. When your attention becomes rare, it gains value. Stop chasing, start building, suddenly you're the prize. They sense investment elsewhere, competition instinct activates. Paradox: detachment creates demand.

"If you get into a certain habit, you have expectations in relation to that habit, but that doesn’t mean your real ability has changed" Fascinating piece on the 'attention' crisis among pupils 👉Are pupil attention spans really decreasing? tes.com/magazine/teach…

Today we live in a hyper-connected society where every notification steals a piece of our attention. If you don’t protect your focus, no one will do it for you. 👉 Tip: Disconnect for a while, or your life will be the one disconnecting from your goals. #Pensif
