Starc Institute

@ARC_Guide

Starc Institute: A boundaryless academy where talent unites to ignite research and shape the future. https://starc.institute/

Hiring a postdoc at Stanford🥳

Our lab is looking to hire a new postdoc working on ectomycorrhizal fungi, climate change, and genomics. More details on the position and how to apply in the attached flyer!

Hiring a postdoc🥳

🚀 We’re hiring a #Postdoc! Join our group in Poznan, Poland to study meiotic crossover recombination in plants🌱 Highly motivated & enthusiastic candidates are welcome! 📅 Deadline: Nov 1, 2025 🔗 ibmib.web.amu.edu.pl/wp-content/upl… #PlantScience #Meiosis #PostdocJobs

Please check the details 👇

A postdoc position is available in my group! Check the details below😀

ANU is known for its academic excellence.

Visiting ANU was truly awesome. And we at HTRC have a bunch of new data to share: over 3 TRILLION tokens available to download!

Had the pleasure & privilege of having @profdownie present to us yesterday on “Creating Data for Open Cultural Analysis Activities: The TORCHLITE Project and Extracted Features 2.5”. Excellent speaker, even better colleague!

Call for Community Activities #AAAI2026

📢 Call for Community Activities #AAAI2026 We invite submissions of proposals for inclusive and open activities that help broaden community participation in the AI field. October 4: Submission Deadline. October 18: Acceptance Notifications. @RobobertoMM @marucabrera27 @RealAAAI

This is a major breakthrough-we can’t just scrape the internet for robot data anymore.🤗

Announcing Dimensional: We already have robots in the wild doing our data collection for us

Claims 2.5x speedups over eager PyTorch operations but most of the listed examples seem to be fusion benchmarks with a likely untuned compiled baseline. 6x speedups over linear, really? norm speedups would evaporate if authors upgrade from pytorch 2.5 github.com/pytorch/pytorc…

SakanaAI presents Robust Agentic CUDA Kernel Optimization • Fuses ops, boosts forward/backward passes, outperforms torch baselines • Agentic LLM pipeline: PyTorch → CUDA → evolutionary runtime optimization • Soft-verification: LLMs flag incorrect kernels (↑30% verification…

Hope to see you in San Diego😄

We'd like to thank the reviewers and the community: 4DGT was accepted to NeurIPS 2025 as a Spotlight. We have just released the demo code at github.com/facebookresear… There are a few features to be added with some updates in our writing, thanks to the awesome suggestions from…

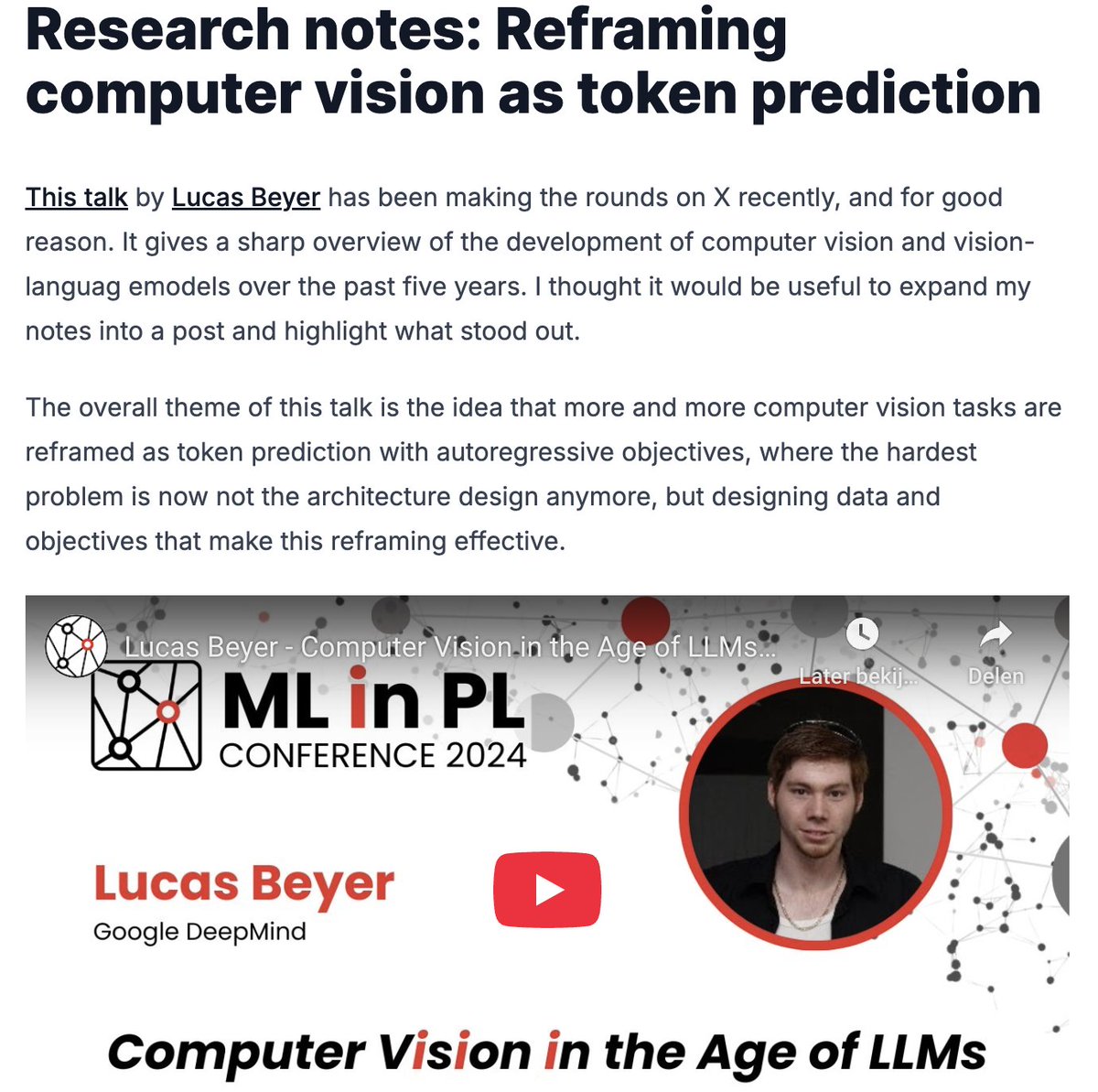

Computer vision in the age of LLMs

Liked this talk by @giffmana about computer vision in the age of LLMs, so I published my summary notes:

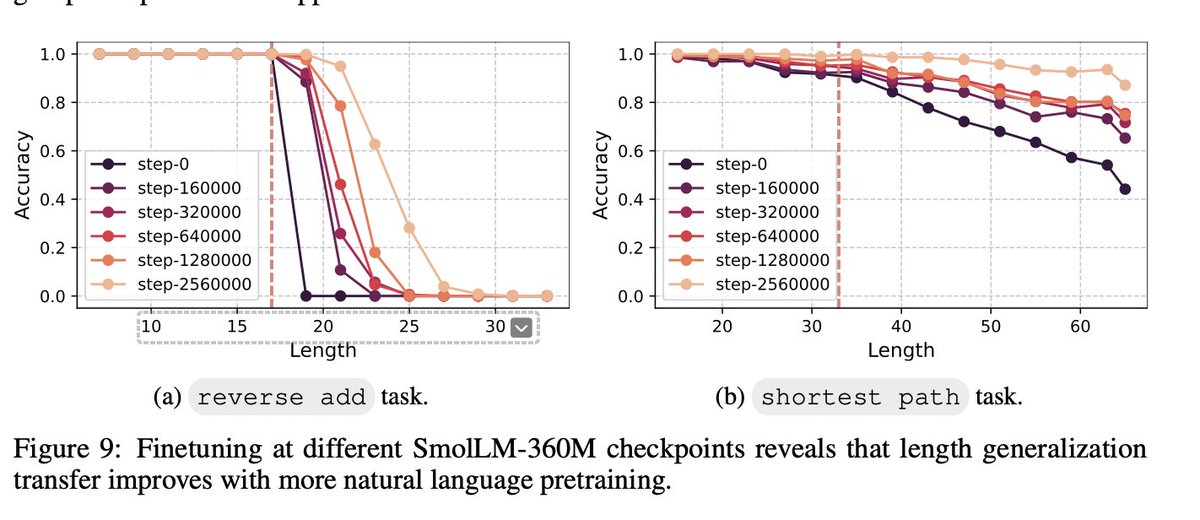

Congrats to @jackcai1206 and @nayoung_nylee for their NeurIPS Spotlight on "Extrapolation by Association: Length Generalization Transfer In Transformers" :)

Excited about our new work: Language models develop computational circuits that are reusable AND TRANSFER across tasks. Over a year ago, I tested GPT-4 on 200 digit addition, and the model managed to do it (without CoT!). Someone from OpenAI even clarified they NEVER trained…

Announcing Project Go-Big There’s no YouTube for robot data, so we’re building one ourselves - Figure now has access to >100k real homes via Brookfield - We’ve begun scaling human collect - We already have initial results: direct human video-to-robot transfer to Helix 🧬

Combine document processing, embedding, and indexing into a downstream system.

This is a fantastic tutorial showing you how to build a real-time, production-grade document processing pipeline over massive volumes of data for AI agents. The key insights here are to use streaming infrastructure to combine document processing, embedding, and indexing into a…

Optimize with purpose🤔🤔

I had an incredible time delivering my keynote "Healthy ML at Any Scale" at @pyconcolombia 2025. The talk is finally live! 🚀 Watch the full talk here: youtube.com/watch?v=wxOxML… My key message was simple: optimize with purpose. #PyConColombia #MachineLearning #AI

Intelligent humanoids should have the ability to quickly adapt to new tasks by observing humans Why is such adaptability important? 🌍 Real-world diversity is hard to fully capture in advance 🧠 Adaptability is central to natural intelligence We present MimicDroid 👇 🌐…

ha! here is something fun and totally random I've been pondering: as Oliver Sacks has beautifully written - "what is the space between two snowflakes?" Language can describe all the things, stuff, and people in intricate details. But what about the 'space', the 'nothingness' in…

Fei-Fei Li (@drfeifei) on limitations of LLMs. "There's no language out there in nature. You don't go out in nature and there's words written in the sky for you.. There is a 3D world that follows laws of physics." Language is purely generated signal.

Qwen3-Next is out! SGLang has supported it on day 0 with speculative decoding. Try it out 👇

🚀 Introducing Qwen3-Next-80B-A3B — the FUTURE of efficient LLMs is here! 🔹 80B params, but only 3B activated per token → 10x cheaper training, 10x faster inference than Qwen3-32B (esp. @ 32K+ context!) 🔹 Hybrid Architecture: Gated DeltaNet + Gated Attention → best of speed &…

AI is becoming the second brain we never knew we needed.

Super excited to bring hundreds of state-of-the-art open models (Kimi K2, Qwen3 Next, gpt-oss, Aya, GLM 4.5, Deepseek 3.1, Hermes 4, and dozens new ones every day) directly into @code & @Copilot, thanks to @huggingface inference providers! This is powered by our amazing partners…

Wow, one pothole=$1800😱An auto pothole avoidance feature is definitely a must-have now!

🚨🚨New paper on core RL: a way to train value-functions via flow-matching for scaling compute! No text/images, but a flow directly on a scalar Q-value. This unlocks benefits of iterative compute, test-time scaling for value prediction & SOTA results on whatever we tried. 🧵⬇️


![LvZhaoyang's tweet card. [NeurIPS 2025 (Spotlight)] The implementation for the paper "4DGT Learning a 4D Gaussian Transformer Using Real-World Monocular Videos" - facebookresearch/4DGT](https://pbs.twimg.com/card_img/1976352611055042562/B-H6anYR?format=jpg&name=orig)