Data Science PY

@Data_Science_PY

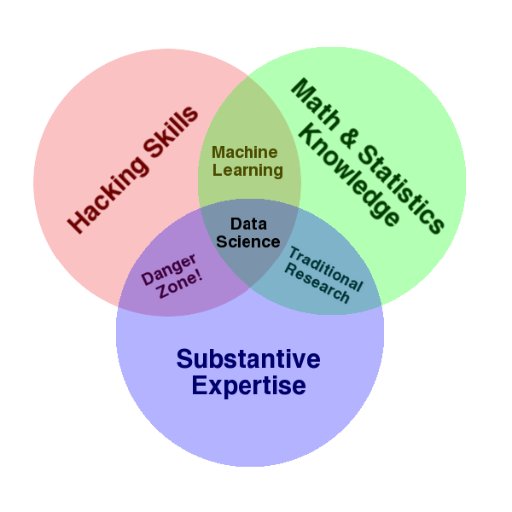

#DataScience, #MachineLearning, #AI, #BigData, #BusinessAnalytics, etc. Community from #Paraguay 🇵🇾 Curated by @rubuntu

Asuncion, Paraguay

Joined July 2018
