MIT CSAIL and @myshell_ai researchers introduce OpenVoice V2, a text-to-speech model that can clone any voice and speak in many languages. Imagine your voice going global in multiple languages. OpenVoice V2 breaks the language barrier and redefines voice interactions.

Be sure to check out the ML Theory Group tomorrow, April 11th as they host @guillemsimeon for a presentation on "TensorNet: Cartesian Tensor Representations for Efficient Learning of Molecular Potentials." Learn more: cohere.com/events/c4ai-Gu…

Join our ML Theory Group as they welcome @guillemsimeon next week on Thursday, April 11th for a presentation on "TensorNet: Cartesian Tensor Representations for Efficient Learning of Molecular Potentials." Learn more: cohere.com/events/c4ai-Gu…

If you enjoy from-scratch implementations of self-attention and multi-head attention, I have compared and collected a few implementations for you here. For readability, I particularly appreciate the compact one in the lower left, which features combined QKV matrices (courtesy of…
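For a sense of what a combined-QKV implementation looks like, here is a minimal sketch in plain Python. It is not one of the implementations the post compares. It assumes a single unmasked head, and the names `self_attention`, `matmul`, `softmax`, and `w_qkv` are made up for illustration. One weight matrix projects the input once, and the result is sliced into queries, keys, and values.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_qkv):
    """Single-head scaled dot-product self-attention (illustrative only).

    x:     (seq_len, d_in) input embeddings
    w_qkv: (d_in, 3 * d_out) combined projection for Q, K and V
    """
    d_out = len(w_qkv[0]) // 3
    qkv = matmul(x, w_qkv)                      # one projection for Q, K, V
    q = [r[:d_out] for r in qkv]                # first third: queries
    k = [r[d_out:2 * d_out] for r in qkv]       # second third: keys
    v = [r[2 * d_out:] for r in qkv]            # last third: values
    k_t = [list(col) for col in zip(*k)]        # transpose keys
    scores = matmul(q, k_t)                     # (seq_len, seq_len)
    scale = math.sqrt(d_out)
    attn = [softmax([s / scale for s in row]) for row in scores]
    return matmul(attn, v), attn

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_qkv = [[0.1, 0.2, 0.3, 0.4, 0.5, 0.6],
         [0.6, 0.5, 0.4, 0.3, 0.2, 0.1]]
context, attn = self_attention(x, w_qkv)
```

Multi-head attention would reshape the same combined projection into several heads before computing the attention weights. That step is omitted here to keep the sketch short.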

The state of AI following the OpenAI lawsuit

CVPR 2010 Best Paper Award: "Efficient Computation of Robust Low-Rank Matrix Approximations in the Presence of Missing Data using the L1 Norm," A. Eriksson, A. van den Hengel

The PRIOR team @allen_ai is excited to welcome everyone to Seattle for #CVPR2024. We have 6 exciting papers accepted to CVPR this year focusing on Multimodal AI and Robotics. See you all in June!

If you want to finetune #Gemma 7b on a free Colab instance, have a notebook! It's 2.5x faster and uses 70% less VRAM than HF with @unslothai - no more OOMs! The notebook also has 2x faster inference for Gemma, how to merge and save to llama.cpp GGUF, vLLM colab.research.google.com/drive/10NbwlsR…

Going to the lab the day after #CVPR2024 decisions. Think happy thoughts. GOOD LUCK!

📢🔥#HIRING! Responsible and #OpenAI Research (ROAR) team at @Microsoft is hiring for Full-time Research Scientists. People with experience in speech/audio/RAI are encouraged to apply here: 1. jobs.careers.microsoft.com/global/en/job/… 2. jobs.careers.microsoft.com/global/en/job/…

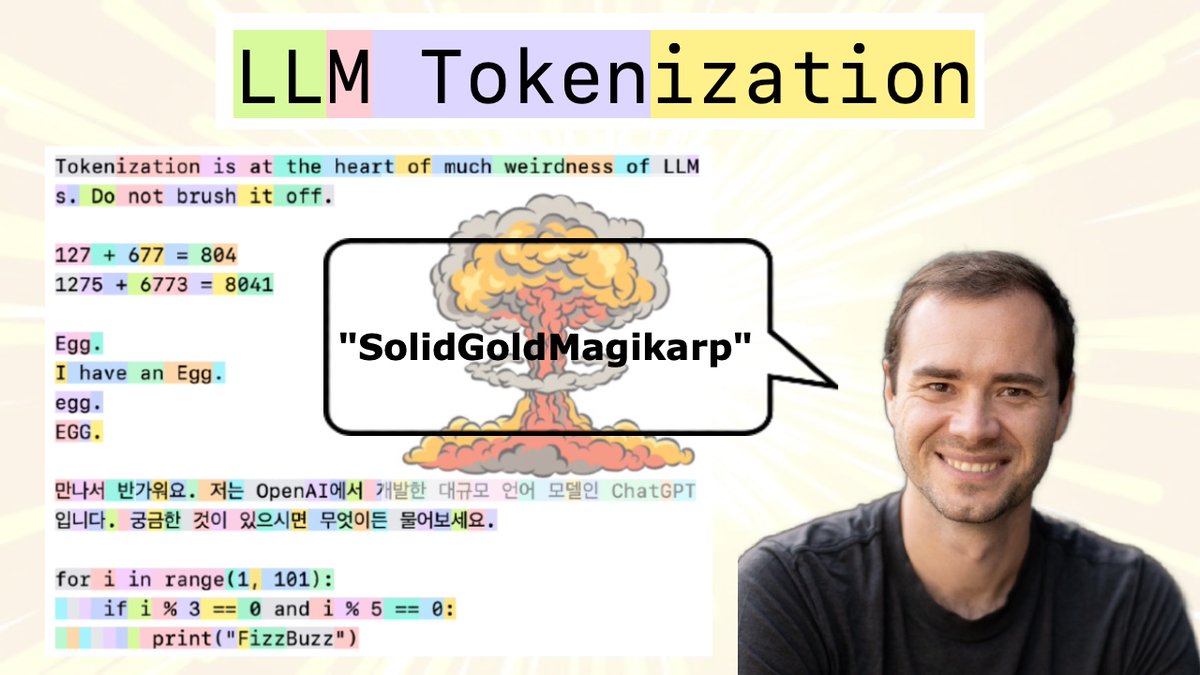

New (2h13m 😅) lecture: "Let's build the GPT Tokenizer" Tokenizers are a completely separate stage of the LLM pipeline: they have their own training set, training algorithm (Byte Pair Encoding), and after training implement two functions: encode() from strings to tokens, and…
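A toy sketch of the pipeline the post describes, not the lecture's code: train byte-level Byte Pair Encoding merges on a string, then use them to encode. The post is cut off before naming the second function, so the `decode` shown here is an assumption, as are the helper names `train_bpe` and `merge`.

```python
from collections import Counter

def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in ids with new_id."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out

def train_bpe(text, num_merges):
    """Learn up to num_merges merges, starting from raw UTF-8 bytes (ids 0..255)."""
    ids = list(text.encode("utf-8"))
    merges = {}  # (left_id, right_id) -> new_id, kept in learned order
    for step in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        pair = max(pairs, key=pairs.get)  # most frequent adjacent pair
        new_id = 256 + step
        merges[pair] = new_id
        ids = merge(ids, pair, new_id)
    return merges

def encode(text, merges):
    """String -> token ids, applying merges in the order they were learned."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges.items():
        ids = merge(ids, pair, new_id)
    return ids

def decode(ids, merges):
    """Token ids -> string (assumed counterpart to encode)."""
    vocab = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges.items():
        vocab[new_id] = vocab[left] + vocab[right]
    return b"".join(vocab[i] for i in ids).decode("utf-8", errors="replace")

merges = train_bpe("aaabdaaabac", num_merges=3)
tokens = encode("aaabdaaabac", merges)
```

The training text here is separate from whatever text is later encoded, which mirrors the point that the tokenizer has its own training set.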

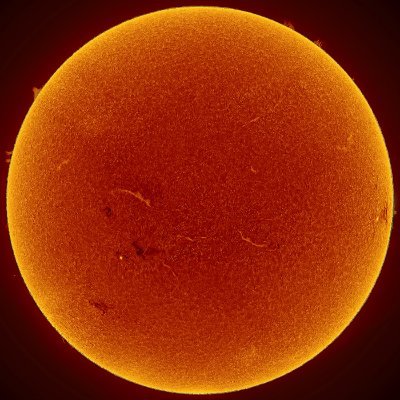

Gemini 1.5 Pro can analyze, classify and summarize huge quantities of information – including documents with thousands of pages. 📄 When given a 402-page transcript from Apollo 11’s mission to the moon, it was able to reason about conversations and events it finds. ↓

we'd like to show you what sora can do, please reply with captions for videos you'd like to see and we'll start making some!

Introducing Gemini 1.5: our next-generation model with dramatically enhanced performance. It also achieves a breakthrough in long-context understanding. The first release is 1.5 Pro, capable of processing up to 1 million tokens of information. 🧵 dpmd.ai/3SEbw4p

I have a PhD student position starting Aug 2024 at ECE @iiscbangalore, broadly in foundations of gen-AI / language agents, and associated machine learning approaches. Please apply by March 2024 if interested! ece.iisc.ac.in/~aditya/openin…

I gave several talks (at Research Week at Google and GDM) about some of our recent work on improving LLMs using their self-generated data with access to external feedback. The slides are quite accessible, hope you find them useful: drive.google.com/file/d/1wCWjhQ…

As an LLM finetuner, I recently started getting into model merging. I wrote up a short tutorial on linear merging to introduce the topic: lightning.ai/lightning-ai/s… Btw does anyone happen to have good examples of LLMs that work well when merged via linear merging? And for…
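As a rough illustration of the idea, and not the code from the linked tutorial: linear merging takes a weighted average of the parameters of models that share the same architecture. The sketch below uses plain dicts of float lists as stand-ins for checkpoints. The name `linear_merge` and the toy parameters are hypothetical.

```python
def linear_merge(state_dicts, weights=None):
    """Weighted average of parameters with matching names and shapes.

    state_dicts: list of {param_name: list of floats} (toy stand-in for checkpoints)
    weights:     one coefficient per model; defaults to a uniform average
    """
    if weights is None:
        weights = [1.0 / len(state_dicts)] * len(state_dicts)
    if len(weights) != len(state_dicts):
        raise ValueError("need exactly one weight per model")
    names = state_dicts[0].keys()
    for sd in state_dicts:
        if sd.keys() != names:
            raise ValueError("models must share the same parameter names")
    merged = {}
    for name in names:
        params = [sd[name] for sd in state_dicts]
        size = len(params[0])
        if any(len(p) != size for p in params):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            sum(w * p[i] for w, p in zip(weights, params)) for i in range(size)
        ]
    return merged

model_a = {"w": [1.0, 2.0], "b": [0.0]}
model_b = {"w": [3.0, 4.0], "b": [1.0]}
merged = linear_merge([model_a, model_b])
```

With real checkpoints the same loop would run over tensors instead of lists, and the weights would usually be chosen to sum to 1.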

We've just open-sourced the code and data for Self-play Fine-Tuning (SPIN)! Time to SPIN every model out there! 🚀🚀🚀 Code: github.com/uclaml/SPIN Data: huggingface.co/collections/UC… Models: huggingface.co/collections/UC… Project Page: uclaml.github.io/SPIN/ Many thanks to @Yihe__Deng,…

Give someone a fish, and you feed them for a day; teach someone to fish, and you feed them for a lifetime. Elevating from Weak to Strong with Self-Play Fine-Tuning (SPIN) for All LLMs. Empower, Evolve, SPIN! arxiv.org/pdf/2401.01335…
