Fabian Schaipp

@FSchaipp

working on optimization for machine learning. currently postdoc @inria_paris.

You might like

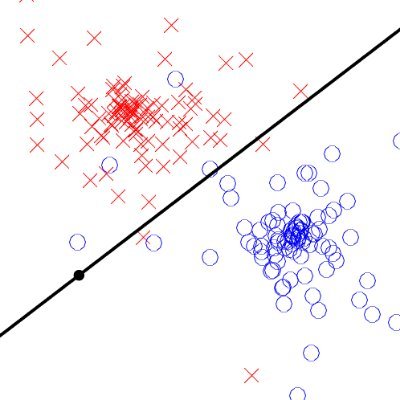

Learning rate schedules seem mysterious? Turns out that their behaviour can be described with a bound from *convex, nonsmooth* optimization. Short thread on our latest paper 🚇 arxiv.org/abs/2501.18965

The sudden loss drop when annealing the learning rate at the end of a WSD (warmup-stable-decay) schedule can be explained without relying on non-convexity or even smoothness, a new paper shows that it can be precisely predicted by theory in the convex, non-smooth setting! 1/2

love to see how well MoMo works combined with Muon

We've just finished some work on improving the sensitivity of Muon to the learning rate, and exploring a lot of design choices. If you want to see how we did this, follow me ....1/x (Work lead by the amazing @CrichaelMawshaw)

Good to see SOAP and Muon being quite performant in another setting — training of Diffusion Models. Similarly to our benchmark, the authors find Prodigy a decent “proxy-optimizer” for tuning hyperparams of Adam-like methods arxiv.org/pdf/2510.19376

TIL: Even the Sophia authors couldn't reproduce the Sophia paper's results. source: arxiv.org/pdf/2509.02046

most stylish theatre i've been to. don't miss the coffee bar in the break.

The amazing lobby of the Teatro Regio in Turin, Italy by architect Carlo Mollino

when the paper title is a question, you can usually guess the "answer"

weight decay seems to be a hot topic of this year's ICLR submissions 👀

are models getting nervous when they are set from .train() to .eval()?

If you’re scrambling a last-minute submission with an uncertain result, remember: putting it off is hard in the moment. It will sting for 10 minutes (because you care so deeply), but in 10 months you’ll be incredibly proud you made the scientifically rigorous call.

Die Berliner Verkehrssenatorin Uta Bonde (CDU) jetzt im Gespräch mit dem Tagesspiegel zum Thema Schulwegsicherheit: „Wir können nicht nach Gutdünken Tempo 30 einführen“ Autofreie Schulstraßen wie in Paris sieht sie skeptisch. Dafür ihr Rat an alle Kinder und Jugendlichen: „Helm…

Long in the making, finally released: Apertus-8B and Apertus-70B, trained on 15T tokens of open data from over 1800 languages. Unique opportunity in academia to work on and train LLMs across the full-stack. We managed to pull off a pretraining run with some fun innovations, ...

United States Trends

- 1. Packers 99.3K posts

- 2. Eagles 128K posts

- 3. Jordan Love 15.3K posts

- 4. Benítez 13.1K posts

- 5. #WWERaw 135K posts

- 6. LaFleur 14.7K posts

- 7. Green Bay 19.1K posts

- 8. AJ Brown 7,098 posts

- 9. Sirianni 5,074 posts

- 10. Patullo 12.4K posts

- 11. Jaelan Phillips 8,085 posts

- 12. McManus 4,447 posts

- 13. Veterans Day 30.6K posts

- 14. Grayson Allen 4,161 posts

- 15. Jalen 24.2K posts

- 16. Smitty 5,607 posts

- 17. #TalusLabs N/A

- 18. James Harden 1,974 posts

- 19. Berkeley 61K posts

- 20. #GoPackGo 7,966 posts

You might like

-

Konstantin Mishchenko

Konstantin Mishchenko

@konstmish -

Ben Grimmer

Ben Grimmer

@prof_grimmer -

Aaron Defazio

Aaron Defazio

@aaron_defazio -

Sierra Team

Sierra Team

@Sierra_ML_Lab -

Mathieu Dagréou

Mathieu Dagréou

@Mat_Dag -

Damek

Damek

@damekdavis -

Franck Iutzeler

Franck Iutzeler

@FranckIutzeler -

Till Richter

Till Richter

@TillRichter6 -

Nicolas Loizou

Nicolas Loizou

@NicLoizou -

Dirk L.

Dirk L.

@Dirque_L -

Robert M. Gower 🇺🇦

Robert M. Gower 🇺🇦

@gowerrobert -

Adrien Taylor

Adrien Taylor

@TaylorAdrien -

Samuel Horváth

Samuel Horváth

@sam_hrvth -

Quentin Bertrand

Quentin Bertrand

@Qu3ntinB -

Geoffrey Négiar

Geoffrey Négiar

@geoffnegiar

Something went wrong.

Something went wrong.